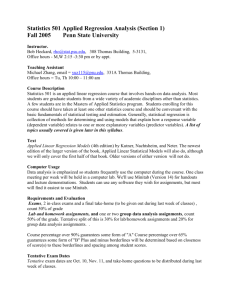

Lecture 4 - BYU Department of Economics

Econ 388 R. Butler 2014 rev
Lecture 4 Multivariate 2

I. Recap on Projections

The vector of least squares residuals is $\hat{\mu} = Y - X\hat{\beta}$. Inserting $\hat{\beta} = (X'X)^{-1}X'Y$ gives

$$\hat{\mu} = Y - X(X'X)^{-1}X'Y = (I - X(X'X)^{-1}X')Y = (I - P_X)Y = M_X Y$$

The n × n matrices $P_X$ and $M_X$ are symmetric ($P_X = P_X'$, $M_X = M_X'$) and idempotent ($P_X = P_X P_X$, $M_X = M_X M_X$). They are called projection matrices because they 'project' Y onto the space spanned by X and onto the space that is orthogonal to X, respectively. Recall that $M_X$ and $P_X$ are orthogonal: $P_X M_X = M_X P_X = 0$.

We can see that least squares partitions the vector Y into two orthogonal parts:

$$Y = P_X Y + M_X Y = X\hat{\beta} + \hat{\mu}$$

that is, projection onto X + residual (projection onto the space orthogonal to X).

II. Partitioned Regression and Partial Regression

Table 1: Projections everywhere. $P_X = X(X'X)^{-1}X'$ and $M_X = I - X(X'X)^{-1}X'$, and $\mathbf{1}$ is a vector of ones associated with the constant term. For each sample regression model, the table gives the normal equations (minimize the residual vector by choosing parameter values to make the residual vector orthogonal to linear combinations of the right hand side predictor variables), the resulting parameter estimator, and notes.

Finding the mean
  Sample regression model: $Y = \hat{\alpha}\mathbf{1} + \hat{\mu}$
  Normal equation: $\mathbf{1}'\hat{\mu} = 0$, or $\mathbf{1}'(Y - \hat{\alpha}\mathbf{1}) = 0$, or $\sum(y_i - \hat{\alpha}) = 0$, or $\sum y_i = \sum\hat{\alpha}$, or $\sum y_i = \hat{\alpha}\sum 1$, or $\sum y_i = \hat{\alpha} n$
  Resulting parameter estimator: $\hat{\alpha} = \bar{Y}$, and $\hat{\mu} = Y - \bar{Y}\mathbf{1}$ (order n×1)
  Notes: Decompose the vector Y into its mean, $\hat{\alpha} = \bar{Y}$, and deviations from its mean.

Simple regression
  Sample regression model: $Y = \hat{\beta}_0\mathbf{1} + \hat{\beta}_1 X + \hat{\mu}$
  Normal equations: $\mathbf{1}'\hat{\mu} = 0$ and $X'\hat{\mu} = 0$ (X has order n×1), or $\mathbf{1}'(Y - \hat{\beta}_0\mathbf{1} - \hat{\beta}_1 X) = 0$ and $X'(Y - \hat{\beta}_0\mathbf{1} - \hat{\beta}_1 X) = 0$, or $\sum(y_i - \hat{\beta}_0 - \hat{\beta}_1 x_i) = 0$ and $\sum x_i(y_i - \hat{\beta}_0 - \hat{\beta}_1 x_i) = 0$; see chapter 2 of the text and appendix A for the solution.
  Resulting parameter estimator: $\hat{\beta}_1 = \sum(x_i - \bar{x})(y_i - \bar{y}) / \sum(x_i - \bar{x})^2$ and $\hat{\beta}_0 = \bar{y} - \hat{\beta}_1\bar{x}$
  Notes: $\hat{\beta}_1$ is the ratio of the covariance of (x, y) to the variance of x; $\hat{\beta}_0$ is the mean of y once the effect of x is taken out.

General regression
  Sample regression model: $Y = X\hat{\beta} + \hat{\mu}$
  Normal equations: $X'\hat{\mu} = 0$ (X has order n×k), k equations in k unknowns: $X'(Y - X\hat{\beta}) = 0$
  Resulting parameter estimator: $\hat{\beta} = (X'X)^{-1}X'Y$
  Notes: $\hat{\beta}_j$ generalizes the simple model: ratio of partial covariance to partial variance.

Centered R2: Y and Xs deviated from means
  Sample regression model: $Y = X\hat{\beta} + \hat{\mu}$, so $M_{\mathbf{1}}Y = M_{\mathbf{1}}X\hat{\beta} + \hat{\mu}$. So we can square both sides and simplify.
  Normal equation: $M_{\mathbf{1}}$ takes deviations from the means, so $(M_{\mathbf{1}}Y)'(M_{\mathbf{1}}Y) = (M_{\mathbf{1}}X\hat{\beta} + \hat{\mu})'(M_{\mathbf{1}}X\hat{\beta} + \hat{\mu}) = (M_{\mathbf{1}}X\hat{\beta})'(M_{\mathbf{1}}X\hat{\beta}) + \hat{\mu}'\hat{\mu} + (M_{\mathbf{1}}X\hat{\beta})'\hat{\mu} + ((M_{\mathbf{1}}X\hat{\beta})'\hat{\mu})'$. The last two r.h.s. terms = 0 (why?), so $\sum(y_i - \bar{y})^2 = \sum((y_i - \hat{y}_i) + (\hat{y}_i - \bar{y}))^2 = \sum(y_i - \hat{y}_i)^2 + \sum(\hat{y}_i - \bar{y})^2$, or SST = SSM + SSR.
  Resulting parameter estimator: centered $R^2 = SSM/SST = 1 - SSR/SST$
  Notes: In our class, almost everything is done with the centered R2; adj-R2 compares two regressions with the same Y and n but with different Xs.

Frisch theorem
  Sample regression model: $Y = X_1\hat{\beta}_1 + X_2\hat{\beta}_2 + \hat{\mu}$, where we have partitioned the Xs into two arbitrary subsets $X_1$, $X_2$.
  Normal equation: Preliminary cleansing of Y (of X1 correlations): $M_{X_1}Y = Y - X_1(X_1'X_1)^{-1}X_1'Y$, the part of Y uncorrelated with $X_1$. Preliminary cleansing of X2 (of X1 correlations): $M_{X_1}X_2 = X_2 - X_1(X_1'X_1)^{-1}X_1'X_2$, the part of $X_2$ that is uncorrelated with $X_1$. Then regress cleansed Y on cleansed X2.
  Resulting parameter estimator: $\hat{\beta}_2 = ((M_{X_1}X_2)'(M_{X_1}X_2))^{-1}(M_{X_1}X_2)'(M_{X_1}Y) = (X_2'M_{X_1}X_2)^{-1}(X_2'M_{X_1}Y)$
  Notes: Symmetry and idempotency of $M_{X_1}$ ensure the last equivalencies. Even for subsets of variables (not just a single variable), the respective coefficients are the cleansed (of correlation with X1) partial correlation of X2 and Y.

This last result is “partialing” or “netting” out the effect of X1. For this reason, the coefficients in a multiple regression are often called the partial regression coefficients. Based on the Frisch Theorem, we also have the following results:

IIa. Regression with a Constant Term

The slopes in a multiple regression that contains a constant term are obtained by transforming the data to deviations from their means and then regressing the variable Y in deviation form on the explanatory variables, X, also in deviation form (second row above).

IIb. Partial Regression and Partial Correlation Coefficients

Consider the following sample regression functions, with least squares coefficients ($\hat{b}$, $\hat{c}$, and $\hat{d}$) and residual vectors $\hat{e}$ and $\hat{u}$:

$$Y = X\hat{b} + \hat{e}$$
$$Y = X\hat{d} + Z\hat{c} + \hat{u}$$

A new variable Z is added to the original regression (Z is observed and included in the analysis; in the omitted variable bias analysis given in the next lecture, it is not included in the sample regression but should have been), where $\hat{c}$ is a scalar (a regression coefficient). We have

$$\hat{d} = (X'X)^{-1}X'(Y - Z\hat{c}) = \hat{b} - (X'X)^{-1}X'Z\hat{c}$$

Therefore, writing $M = I - X(X'X)^{-1}X'$,

$$\hat{u} = Y - X\hat{d} - Z\hat{c} = Y - X[\hat{b} - (X'X)^{-1}X'Z\hat{c}] - Z\hat{c} = (Y - X\hat{b}) + X(X'X)^{-1}X'Z\hat{c} - Z\hat{c} = \hat{e} - MZ\hat{c}$$

Now,

$$\hat{u}'\hat{u} = \hat{e}'\hat{e} + \hat{c}^2(Z'MZ) - 2\hat{c}Z'M\hat{e}$$
$$= \hat{e}'\hat{e} + \hat{c}^2(Z'MZ) - 2\hat{c}Z'M(MY)$$
$$= \hat{e}'\hat{e} + \hat{c}^2(Z'MZ) - 2\hat{c}Z'M(X\hat{d} + Z\hat{c} + \hat{u})$$
$$= \hat{e}'\hat{e} + \hat{c}^2(Z'MZ) - 2\hat{c}Z'MZ\hat{c}$$
$$= \hat{e}'\hat{e} - \hat{c}^2(Z'MZ) \le \hat{e}'\hat{e}$$

The fourth line uses $MX = 0$ and $Z'M\hat{u} = Z'\hat{u} = 0$, since $\hat{u}$ is orthogonal to both X and Z.

Hence, we obtain the useful result: if $\hat{e}'\hat{e}$ is the sum of squared residuals when y is regressed on X and $\hat{u}'\hat{u}$ is the sum of squared residuals when y is regressed on X and Z, then

$$\hat{u}'\hat{u} = \hat{e}'\hat{e} - \hat{c}^2(Z'MZ) \le \hat{e}'\hat{e}$$

where $\hat{c}$ is the coefficient on Z in the regression above, and $MZ = [I - X(X'X)^{-1}X']Z$ is the vector of residuals when Z is regressed on X.

IIc. Goodness of Fit and the Analysis of Variance

R2 again, with more details on the derivation. This is the coefficient of determination; it is actually the centered R-square, and when we talk about “R-square” in normal econometrese, we mean the centered R-square. It is the proportion of the variation in the dependent (response) variable that is 'explained' by the variation in the independent variables.
The idea is that the variation in the dependent variable (called the total sum of squares, $SST = \sum_{i=1}^{n}(Y_i - \bar{Y})^2 = \|M_{\mathbf{1}}Y\|^2$) can be divided up into two parts: 1) a part that is explained by the regression model (the “sum of squares explained by the regression model”, written SSM in these notes and SSE in the text) and 2) a part that is unexplained by the model (the “sum of squared residuals”, SSR). Some of the math is given in Wooldridge, chapter 2, where he has used the following algebra result:

$$\sum_{i=1}^{n}(A_i + B_i)^2 = \sum_{i=1}^{n}(A_i^2 + 2A_iB_i + B_i^2) = \sum_{i=1}^{n}A_i^2 + 2\sum_{i=1}^{n}A_iB_i + \sum_{i=1}^{n}B_i^2$$

where $A_i = Y_i - \hat{Y}_i = \hat{u}_i$ and $B_i = \hat{Y}_i - \bar{Y}$.

The step that is omitted is showing that $\sum_{i=1}^{n}(\hat{Y}_i - \bar{Y})\hat{u}_i = 0$. This boils down pretty quickly into

$$\sum_{i=1}^{n}\hat{Y}_i\hat{u}_i - \bar{Y}\sum_{i=1}^{n}\hat{u}_i = \sum_{i=1}^{n}\hat{Y}_i\hat{u}_i - 0$$

so you just have to show that $\sum_{i=1}^{n}\hat{Y}_i\hat{u}_i = 0$ in order to prove the result. It is zero because OLS residuals are constructed to be uncorrelated with the predicted values of Y: since the residuals are chosen so that they are uncorrelated with (or orthogonal to) the independent variables, they will be orthogonal to any linear combination of the independent variables, including the predicted value of Y.

So a very reasonable measure of goodness of fit would be the explained sum of squares (by the regression) relative to the total sum of squares. In fact, this is just what R2 is:

$$R^2 = SSM/SST = 1 - SSR/SST$$

It is the proportion of the variation in the dependent variable that is explained by the regression model (namely, explained by all of the independent variables). R2 = 1 is a perfect fit (deterministic relationship); R2 = 0 indicates no relationship between Y and the slope regressors in the data matrix X.

The dark side of R2: R2 never falls when another variable is added, as the result in IIb shows. We can get to the same result more quickly by applying the $M_{\mathbf{1}}$ orthogonal projection operator (this takes deviations from the mean, as seen above) to the standard sample regression function.
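As a numerical check on the decomposition above, here is a minimal sketch in Python (an assumption of this illustration; the notes themselves use Stata and SAS). It takes the three-observation example from the Stata code in section IV A, fits the simple regression with the closed-form formulas from Table 1, and confirms that the cross term vanishes so that SST = SSM + SSR. The function names `simple_ols` and `sums_of_squares` are illustrative only.

```python
# Numerical check of SST = SSM + SSR for a simple regression with a constant.
# Data: the three-observation example used in the Stata code of these notes.

def simple_ols(x, y):
    """Return (intercept, slope) of the least squares line of y on x."""
    n = len(y)
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    s_xy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    s_xx = sum((xi - x_bar) ** 2 for xi in x)
    slope = s_xy / s_xx
    intercept = y_bar - slope * x_bar
    return intercept, slope


def sums_of_squares(x, y):
    """Return SST, SSM, SSR and the cross term sum((y_hat - y_bar) * u_hat)."""
    b0, b1 = simple_ols(x, y)
    y_bar = sum(y) / len(y)
    y_hat = [b0 + b1 * xi for xi in x]
    u_hat = [yi - fi for yi, fi in zip(y, y_hat)]
    sst = sum((yi - y_bar) ** 2 for yi in y)
    ssm = sum((fi - y_bar) ** 2 for fi in y_hat)
    ssr = sum(ui ** 2 for ui in u_hat)
    cross = sum((fi - y_bar) * ui for fi, ui in zip(y_hat, u_hat))
    return sst, ssm, ssr, cross


y = [1.3, 0.0, 0.5]
x = [0.5, -0.3, 1.4]
sst, ssm, ssr, cross = sums_of_squares(x, y)
r_squared = ssm / sst

print("SST =", round(sst, 6), " SSM + SSR =", round(ssm + ssr, 6))
print("cross term =", round(cross, 12))
print("R2 =", round(r_squared, 6), " 1 - SSR/SST =", round(1 - ssr / sst, 6))
```

The two expressions for R2 agree only because the cross term is zero, which is exactly the omitted step discussed above.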
Adjusted R-Squared and a Measure of Fit

One problem with R2 is that it will never decrease when another variable is added to a regression equation. To correct this, the adjusted R2 (denoted adj-R2) was created by Theil (one of my teachers at the Univ of Chicago):

$$\text{adj-}R^2 = 1 - \frac{\hat{u}'\hat{u}/(n-k)}{Y'M_{\mathbf{1}}Y/(n-1)}$$

with the connection between R2 and adj-R2 being

$$\text{adj-}R^2 = 1 - \frac{(n-1)}{(n-k)}(1 - R^2)$$

The adjusted R2 may rise or fall with the addition of another independent variable, so it is used to compare models with the same dependent variable and a fixed sample size, with the number of right hand side variables changing.

[[[[ Do You Want a Whole Hershey Bar?

Well, consider the following diagram for a simple regression model when answering these three multiple choice questions:

[Diagram: simple regression plot, Y on the vertical axis and X on the horizontal axis]

1. The sum of the squared differences between where the solid bar ($\bar{Y}$) hits the Y axis and the dotted lines hit the Y axis:
   a. Is the SST (sum of squares total)
   b. $\sum(Y_i - \bar{Y})^2$
   c. An estimate of the variance of Y (when dividing by n-1)
   d. all of the above

2. The sum of the squares of the distances represented by the short, thick lines is
   a. SST (sum of squares total)
   b. SSE (explained sum of squares)
   c. SSR (residual sum of squares)
   d. none of the above

3. The sum of squares mentioned in question 1 will equal the sum of squares mentioned in 2 when
   a. $\hat{\beta}_0$, $\hat{\beta}_1$ both must be equal to zero
   b. $\hat{\beta}_1$ is equal to zero, regardless of the intercept coefficient's value
   c. $\hat{\beta}_0$ is equal to zero, regardless of the slope coefficient's value
   d. when men at BYU are encouraged to wear beards
]]]]

III. In summation: Three Really Cool Results from Regression Projections

Really Cool Result 1: Decomposition Theorem

Any vector Y can be decomposed into orthogonal parts, a part explained by X and a part unexplained by X (or orthogonal to X); it’s the Pythagorean theorem in the regression context ($X^{\perp}$ is the space orthogonal to X).
[Figure: the vector Y drawn from the origin 0, decomposed into $P_XY$, lying along the space spanned by X, and $M_XY$, lying in the space orthogonal to X]

$$\|Y\|^2 = \|P_XY\|^2 + \|M_XY\|^2$$

(this is just the Pythagorean theorem), which is easily derived from the basic results on the orthogonal projectors, namely:

a. $P_X$ and $M_X$ are idempotent and symmetric operators, such that
b. $P_XM_X = 0$ (they are orthogonal, or at right angles, so that the inner product of anything they jointly project will be zero), and
c. $I = P_X + M_X$ (there is the part explained by X, the $P_X$ part, and the part unexplained by X, the $M_X$ part, and together they sum to the identity matrix, I, by definition). The identity matrix I transforms a variable into itself (i.e., it leaves the matrix or vector unchanged).

Proof of the Pythagorean theorem, given the decomposition:

$$Y'Y = Y'IY = Y'(P_X + M_X)Y = Y'P_XY + Y'M_XY = (P_XY)'P_XY + (M_XY)'M_XY$$

(this last equality results from the idempotent and symmetry properties), or

$$\|Y\|^2 = \|P_XY\|^2 + \|M_XY\|^2$$

Really Cool Result 2: Projections on Ones: Means and Deviations

If we project a vector X onto a vector of ones, $\mathbf{1}$, then $P_{\mathbf{1}}X = \bar{X}\mathbf{1}$ (a vector of the mean value of X) and $M_{\mathbf{1}}X = X - \bar{X}\mathbf{1}$ (a vector of deviations of X from its mean). Since these are in orthogonal spaces, their inner product or dot product is zero; that is, by construction they are uncorrelated.

Proof: (I is the n×n identity matrix, $M_{\mathbf{1}}$ is also n×n)

$$M_{\mathbf{1}} = I - P_{\mathbf{1}}, \quad \text{where } P_{\mathbf{1}} = \mathbf{1}(\mathbf{1}'\mathbf{1})^{-1}\mathbf{1}'$$

(the orthogonal projection onto the vector of constants). Since $\mathbf{1}'\mathbf{1} = n$, then $(\mathbf{1}'\mathbf{1})^{-1} = 1/n$, so

$$P_{\mathbf{1}} = \mathbf{1}(\mathbf{1}'\mathbf{1})^{-1}\mathbf{1}' = \frac{1}{n}\mathbf{1}\mathbf{1}' = \frac{1}{n}\begin{bmatrix} 1 & 1 & \cdots & 1 \\ 1 & 1 & \cdots & 1 \\ \vdots & \vdots & & \vdots \\ 1 & 1 & \cdots & 1 \end{bmatrix}$$

where the matrix on the right is an n×n matrix of ones. For the n×1 vector $X = (x_1, x_2, \ldots, x_n)'$,

$$P_{\mathbf{1}}X = \frac{1}{n}\begin{bmatrix} \sum_{i=1}^{n}x_i \\ \vdots \\ \sum_{i=1}^{n}x_i \end{bmatrix} = \bar{X}\mathbf{1}; \quad \text{and} \quad M_{\mathbf{1}}X = (I - P_{\mathbf{1}})X = X - \bar{X}\mathbf{1}$$

Really Cool Result 3: The Partial Derivative Result for Matrices (Frisch theorem)

When we have multivariate regression, with more than one slope regressor (right hand side independent variable), how do we interpret the coefficients?
If we imagine it is a linear regression, such as

6) $Y = X_1\beta_1 + X_2\beta_2 + \text{residuals}$

and suppose that X1 is just one variable (so X2 contains the intercept and other variables), and we take the partial derivative of Y with respect to X1, we get $\beta_1$. This suggests that $\beta_1$ is the change in Y when we increase X1 by one unit, holding all other variables constant (the same as in partial differentiation). And so it is: $\beta_1$ is the slope of Y with respect to X1, all other things equal.

Now suppose that X1 contains m variables, so the $\beta_1$ coefficient vector has m elements in it:

$$X_1\beta_1 = \begin{bmatrix} x_{11} & x_{21} & \cdots & x_{m1} \\ x_{12} & x_{22} & \cdots & x_{m2} \\ \vdots & \vdots & & \vdots \\ x_{1n} & x_{2n} & \cdots & x_{mn} \end{bmatrix}\begin{bmatrix} \beta_1 \\ \beta_2 \\ \vdots \\ \beta_m \end{bmatrix}$$

In what sense are these jointly “partial effects” (analogous to taking the partial derivative)? For this arbitrary division of the regressors in equation (6), that is, with $X_1$ and $X_2$ of any arbitrary dimension (from 1 to k, where k < n, the sample size), we have the Frisch theorem. The Frisch theorem says: given the regression in equation (6), consider another regression:

7) $M_{X_2}Y = M_{X_2}X_1\gamma + \text{residuals}$

Then the following are always true: a) the estimate of $\beta_1$ from equation (6) will be identical to the estimate of $\gamma$ from equation (7), b) the residuals from these two equations will also be identical, and so c) $\hat{\beta}_1$ is the estimate of the effect of X1 on Y, after the influence of X2 (that is, the influence of all the variables in X2) has been factored out.

Suppose we apply the Frisch theorem to the simple regression model, where X1 (in equation 6) = X (the slope variable) and X2 (of equation 6) = $\mathbf{1}$, a vector of ones to account for the intercept coefficient. Then the regression of Y, deviated from its mean by projecting it orthogonal to $\mathbf{1}$, on X, deviated from its mean by projecting it orthogonal to $\mathbf{1}$, should yield the slope coefficient in the simple regression.
And so it does, as indicated in the following derivation (applying the matrix formula for a regression vector to the specification given in equation 7, where $X_2 = \mathbf{1}$):

$$M_{\mathbf{1}} = I - \mathbf{1}(\mathbf{1}'\mathbf{1})^{-1}\mathbf{1}' \quad \text{and} \quad \hat{\beta}_1 = ((M_{\mathbf{1}}X)'(M_{\mathbf{1}}X))^{-1}(M_{\mathbf{1}}X)'(M_{\mathbf{1}}Y)$$

where

$$M_{\mathbf{1}}X = \begin{bmatrix} x_1 - \bar{x} \\ x_2 - \bar{x} \\ \vdots \\ x_n - \bar{x} \end{bmatrix}, \quad M_{\mathbf{1}}Y = \begin{bmatrix} y_1 - \bar{y} \\ y_2 - \bar{y} \\ \vdots \\ y_n - \bar{y} \end{bmatrix}$$

Remember that $M_{\mathbf{1}}$ takes deviations from the mean (so it is orthogonal to $\mathbf{1}$; that is, $\mathbf{1}'(M_{\mathbf{1}}X) = 0$ and $\mathbf{1}'(M_{\mathbf{1}}Y) = 0$, since the sum of deviations is zero). That’s why

$$\hat{\beta}_1 = ((M_{\mathbf{1}}X)'(M_{\mathbf{1}}X))^{-1}(M_{\mathbf{1}}X)'(M_{\mathbf{1}}Y) = \frac{\sum(x_i - \bar{x})(y_i - \bar{y})}{\sum(x_i - \bar{x})^2}, \quad \text{where } \bar{x} = \frac{\sum x_i}{n},\ \bar{y} = \frac{\sum y_i}{n}$$

which are the formulas in the book and from lecture 1 for the simple regression model.

IV. A. Illustration Using Stata Code with the Simple Wage-College Regression

Example: Meaning of the slope coefficient, $\beta_1$, in the simple model:

8) $Y = \beta_0 + \beta_1X + \mu$, or $Y = \beta_0\mathbf{1} + \beta_1X + \mu$

and

9) $M_{\mathbf{1}}Y = \beta_1M_{\mathbf{1}}X + \text{residuals}$

STATA Code (for the 3-observation example):

    # delimit ;
    clear;
    input y x;
    1.3 .5 ;
    0 -.3 ;
    .5 1.4 ;
    end;
    generate ones=1;
    /* equation 8: regress y on x with a constant */
    regress y x;
    predict resids, residuals;
    list y x resids;
    /* deviate y and x from their means by regressing each on the vector of ones */
    regress y ones, noconstant;
    predict residy, residuals;
    regress x ones, noconstant;
    predict residx, residuals;
    /* equation 9: regress deviated y on deviated x, with and without a constant */
    regress residy residx;
    regress residy residx, noconstant;
    predict resids2, residuals;
    list y x resids resids2;

[[[[ Do You Want a Whole Hershey Bar?

1. The Frisch (or partial derivative) theorem says (among other things) that:
   a. the education coefficient in a wage regression has the same value regardless of which other regressors are included in the analysis
   b. the education coefficient in a simple wage regression with a constant will have the same value as the coefficient in the simplest regression without a constant
   c. the education coefficient in a wage regression is an estimate of the effect of education on wages after the influence of the other variables (in the regression) has been factored out
   d. all of the above

2. If the regression space (X) consists of only a single vector of ones (denoted $\mathbf{1}$), then
   a. the orthogonal projection of Y on $\mathbf{1}$, $P_{\mathbf{1}}Y$, produces a vector of means of Y
   b. the orthogonal projection onto the space orthogonal to $\mathbf{1}$, $M_{\mathbf{1}}Y$, produces a vector of deviations of Y from its mean
   c. $P_{\mathbf{1}}M_{\mathbf{1}} = 0$
   d. all of the above

3. Since M1 is an orthogonal projection, and hence symmetric and idempotent, it follows that for any vectors X and Y:
   a. M1X = M1Y
   b. M1X = I - M1Y
   c. (M1X)’Y = (M1X)’(M1Y) = (X)’(M1Y)
   d. none of the above
]]]]

IV B. Woody Restaurant example

Find $\hat{\beta}_2$ (suppose $X_2$ is competitors, with $X_1$ = income and population; notice that the roles of $X_2$ and $X_1$ in the theorem are arbitrary) and the residuals from

$$Y = X_1\beta_1 + X_2\beta_2 + \text{residuals}$$

and $\hat{\beta}_2$ (and the residuals) from

$$M_{X_1}Y = M_{X_1}X_2\beta_2 + \text{residuals}$$

Here’s the STATA code to check this out:

    # delimit ;
    infile revenue income pop competitor using "e:\classrm_data\woody3.txt", clear;
    generate ones=1;
    /* full regression */
    regress revenue income pop competitor;
    predict resids, residuals;
    list resids;
    /* cleanse revenue and competitor of income and pop */
    regress revenue income pop;
    predict residy, residuals;
    regress competitor income pop;
    predict residx, residuals;
    /* regress cleansed revenue on cleansed competitor */
    regress residy residx, noconstant;
    predict resids2, residuals;
    list revenue resids resids2;

VI. Correlations From the Regression Projections [Adapted from Wonnacott, p. 306]

[Figure: the vectors X and y and the angles between y and its projections. The cosine of one angle is the simple correlation r; the cosine of a second angle is the partial correlation r|1 (also the centered R in this example); the cosine of a third angle is the multiple correlation R, and its square is the squared multiple correlation R2 (uncentered R2).]

Note: comparing the absolute values of these cosines gives r ≤ R and r|1 ≤ R.

Appendix on “Frisch” (or Partial Derivative) Theorem: A Picture of the theorem in three dimensions

The partial derivative theorem for regression models says that for the following two regressions:

$$Y = X_1\hat{\beta}_1 + X_2\hat{\beta}_2 + \hat{\mu} \quad \text{and} \quad M_{X_1}Y = M_{X_1}X_2\hat{\gamma} + \hat{\mu}_{M_{X_1}}$$

it’s the case that $\hat{\beta}_2 = \hat{\gamma}$ and $\hat{\mu} = \hat{\mu}_{M_{X_1}}$, where the variables in the second regression are the residuals from regressing Y and X2 on X1, respectively:

$$Y - X_1(X_1'X_1)^{-1}X_1'Y = M_{X_1}Y \quad \text{and} \quad X_2 - X_1(X_1'X_1)^{-1}X_1'X_2 = M_{X_1}X_2$$
So first, we show just the first regression:

[Figure, two panels: “Top view of the X1, X2 plane” and “Upper side view of regression”, showing Y, X1, X2 and the origin O]

Now we do the second regression (using the residuals from the X1 regressions) by itself (essentially decomposing Y and X2 in terms of X1):

[Figure, two panels: “Top view of the X1, X2 plane” and “Upper side view of regression”, showing Y, X1, X2 and the origin O]

And finally we add them together so that the equivalencies become apparent. Obviously $\hat{\mu} = \hat{\mu}_{M_{X_1}}$ (the two residual vectors coincide) by inspection of the graphs below, and $\hat{\beta}_2 = \hat{\gamma}$ (the coefficient on X2 is the same in both regressions) by the law of similar triangles. In fact, that is all the partial derivative (Frisch) theorem really is: just a restatement of the Pythagorean theorem and elementary properties of geometry (in Euclidean space).

[Figure, two panels: “Upper side view of regression” and “Top view of the X1, X2 plane”, showing Y, X1, X2 and the origin O]

General Results on the Frisch Theorem

The theorem applies whenever there are two or more regressors (right hand side terms) in our model. Consider an arbitrary division of the regressors and write them as

6) $Y = X_1\beta_1 + X_2\beta_2 + \text{residuals}$

Let $M_{X_1}Y$ be the projection of Y onto the space orthogonal to X1, or in other words, the residuals of the regression of Y onto X1. This is analogous to the decomposition of Y into the part explained by the whole X matrix, $Y = P_XY + M_XY$, but it is a different decomposition, as X1 is of lower dimension than X, and $M_{X_1}$ projects onto a higher-dimensional space than $M_X$:

$$Y = P_{X_1}Y + M_{X_1}Y = \text{part of } y \text{ explained by } X_1 + \text{part of } y \text{ orthogonal to } X_1$$

Similarly, if X1 has dimension n × z and X2 has dimension n × (k−z), then $M_{X_1}X_2$ are the residuals from the regression(s) of $X_2$ on $X_1$ (X2 are the dependent variable(s) and X1 are the independent variables). The dimension of $M_{X_1}$ is n × n and the dimension of X2 is n × (k−z), so $M_{X_1}X_2$ has dimension n × (k−z).
Then consider another regression:

7) $M_{X_1}Y = M_{X_1}X_2\gamma + \text{residuals}$

Then the Frisch Theorem says that
a) the estimate of $\beta_2$ from equation (6) will be identical to the estimate of $\gamma$ from equation (7),
b) the residuals from these two equations will also be identical,
c) $\hat{\beta}_2$ is the estimate of the effect of X2 on Y, after the influence of X1 has been factored out, and
d) as a result of a and b, we have

$$Y'M_{X_1}Y = Y'M_XY + Y'P_{M_{X_1}X}Y$$

or

$$\|M_{X_1}Y\|^2 = \|M_XY\|^2 + \|P_{M_{X_1}X}Y\|^2, \quad \text{or} \quad \|M_{X_1}Y\|^2 = \|P_{M_{X_1}X}Y\|^2 + \|M_XY\|^2$$

Here $P_{M_{X_1}X}$ is the projection onto the columns of $M_{X_1}X$; because $M_{X_1}X_1 = 0$, this is the same space as that spanned by $M_{X_1}X_2$.

Applying this result to the case where X1 is just the intercept (the vector of ones we denote as $\mathbf{1}$), and X is all the regressors, including the intercept, result (d) becomes

$$Y'M_{\mathbf{1}}Y = Y'M_XY + Y'P_{M_{\mathbf{1}}X}Y$$

or

$$\|M_{\mathbf{1}}Y\|^2 = \|M_XY\|^2 + \|P_{M_{\mathbf{1}}X}Y\|^2, \quad \text{or} \quad \|M_{\mathbf{1}}Y\|^2 = \|P_{M_{\mathbf{1}}X}Y\|^2 + \|M_XY\|^2$$

That is, variation in Y (where variation is calculated by deviating Y from its mean using a vector of ones, $M_{\mathbf{1}}Y$) can be split into the variation in Y due to variation in the Xs (more specifically, that part of the Xs not associated with the constant, $P_{M_{\mathbf{1}}X}Y$) and the variation in Y that is unexplained by all the Xs (including the intercept, $M_XY$). Equivalently, the total sum of squares for Y (SST) equals the part of the variation explained by variations in the model (SSM, where the Xs are the model, and $M_{\mathbf{1}}X$ deviates the Xs from their means, or takes variations of X) plus the part left unexplained, captured by the sum of squared residuals (SSR).
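Results (a), (b) and (d) can be checked numerically. The following is a minimal sketch in Python (an assumption of this illustration; the notes themselves use Stata and SAS), with X1 = [ones, x1] and X2 = [x2]. The six observations are hypothetical and made up for the check, and the helper names `solve`, `ols` and `residuals` are illustrative only. Least squares is computed from the normal equations $X'X\hat{\beta} = X'Y$ by Gauss-Jordan elimination, which is adequate for a tiny well-conditioned example but is not how a statistical package would do it.

```python
def solve(a, b):
    """Solve the square system a z = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [bi] for row, bi in zip(a, b)]  # augmented matrix [a | b]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                factor = m[r][col] / m[col][col]
                m[r] = [v - factor * p for v, p in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]


def ols(columns, y):
    """Least squares coefficients from the normal equations X'X b = X'y.
    `columns` is a list of regressor columns (include a column of ones for a constant)."""
    xtx = [[sum(p * q for p, q in zip(ci, cj)) for cj in columns] for ci in columns]
    xty = [sum(p * q for p, q in zip(ci, y)) for ci in columns]
    return solve(xtx, xty)


def residuals(columns, y):
    """Residual vector M_X y from regressing y on the given columns."""
    b = ols(columns, y)
    return [yi - sum(bj * col[i] for bj, col in zip(b, columns)) for i, yi in enumerate(y)]


# Hypothetical data (not from the notes).
ones = [1.0] * 6
x1 = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
x2 = [2.0, 1.0, 4.0, 3.0, 6.0, 4.0]
y = [3.1, 3.9, 7.2, 7.8, 11.1, 10.2]

# Equation (6): regress Y on X1 and X2 together.
b_full = ols([ones, x1, x2], y)
u_full = residuals([ones, x1, x2], y)

# Equation (7): cleanse Y and X2 of X1, then regress residuals on residuals.
m1_y = residuals([ones, x1], y)
m1_x2 = residuals([ones, x1], x2)
b2_partial = ols([m1_x2], m1_y)[0]
u_partial = residuals([m1_x2], m1_y)

# Result (d): ||M_X1 Y||^2 = ||M_X Y||^2 + ||P_(M_X1 X2) Y||^2.
lhs = sum(v * v for v in m1_y)
explained = sum((b2_partial * v) ** 2 for v in m1_x2)
rhs = sum(v * v for v in u_full) + explained

print("coefficient on x2, full vs partial:", b_full[2], b2_partial)
print("largest residual difference:", max(abs(a - b) for a, b in zip(u_full, u_partial)))
print("result (d), lhs vs rhs:", lhs, rhs)
```

Up to floating point rounding, the two coefficients on x2 agree, the two residual vectors agree, and the two sides of result (d) agree.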
Appendix SAS code (for the sample model of ln(wages) on college):

    data one;
      input y x;
      ones=1;
    cards;
    1.3 .5
    0 -.3
    .5 1.4
    ;
    /* regress y on x with a constant */
    proc reg; model y=x; output out=two r=resids; run;
    proc print; var y x resids; run;
    /* deviate y and x from their means by regressing each on the vector of ones */
    proc reg; model y=ones / noint; output out=two r=residy; run;
    proc reg; model x=ones / noint; output out=two r=residx; run;
    /* regress deviated y on deviated x, without a constant */
    proc reg; model residy=residx / noint; output out=two r=resids2; run;
    proc print; var y x resids resids2; run;

Here’s the SAS code to check the Woody’s Restaurant data results:

    data one;
      infile "e:\classrm_data\woody.txt";
      input revenue income pop competitor;
      ones=1;
    run;
    proc print; run;
    /* full regression */
    proc reg; model revenue=income pop competitor; output out=two r=resids; run;
    proc print; var resids; run;
    /* cleanse revenue and competitor of income and pop */
    proc reg; model revenue=income pop; output out=two r=residy; run;
    proc reg; model competitor=income pop; output out=two r=residx; run;
    /* regress cleansed revenue on cleansed competitor */
    proc reg; model residy=residx / noint; output out=two r=resids2; run;
    proc print; var revenue resids resids2; run;
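For readers without SAS or Stata, the three-observation check can also be reproduced by hand. The following is a minimal sketch in Python (an assumption of this illustration, not part of the original course code). It reuses the variable names `residy`, `residx`, `resids` and `resids2` from the listings above; the helper `deviations` is hypothetical. The regression with a constant is solved from its two normal equations by Cramer's rule, so the comparison with the deviations-from-means regression is not circular.

```python
# Three-observation example from the notes.
y = [1.3, 0.0, 0.5]
x = [0.5, -0.3, 1.4]
n = len(y)


def deviations(v):
    """Deviations from the mean, i.e. residuals from regressing v on a vector of ones."""
    mean = sum(v) / len(v)
    return [vi - mean for vi in v]


# Step 1: regress y on x with a constant by solving the two normal equations
#   n*b0 + sum(x)*b1 = sum(y)   and   sum(x)*b0 + sum(x^2)*b1 = sum(x*y).
sx, sy = sum(x), sum(y)
sxx = sum(xi * xi for xi in x)
sxy = sum(xi * yi for xi, yi in zip(x, y))
det = n * sxx - sx * sx
b1 = (n * sxy - sx * sy) / det
b0 = (sy * sxx - sx * sxy) / det
resids = [yi - b0 - b1 * xi for xi, yi in zip(x, y)]

# Step 2: deviate y and x from their means, then regress without a constant.
residy = deviations(y)
residx = deviations(x)
slope_dev = sum(a * b for a, b in zip(residx, residy)) / sum(a * a for a in residx)
resids2 = [ry - slope_dev * rx for rx, ry in zip(residx, residy)]

print("slope with constant:", b1)
print("slope from deviations:", slope_dev)
for r1, r2 in zip(resids, resids2):
    print(round(r1, 6), round(r2, 6))
```

The two slopes and the two residual columns should match up to rounding, which is what the final `proc print` (or Stata `list`) is meant to show.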