
advertisement

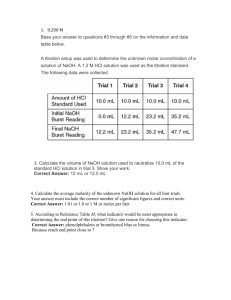

COMPARING ITERATIVE CHOICE-BASED METHODS IN THE ELICITATION OF UTILITIES FOR HEALTH STATES

Jose Luis Pinto Prades, Glasgow Caledonian University (Joseluis.pinto@gcu.ac.uk)
Ildefonso Mendez, Universidad de Murcia
Graham Loomes, University of Warwick

23-05-2014

ABSTRACT

It is common practice to elicit preferences for health states with iterative choice-based methods. The Time Trade-Off and the Standard Gamble use different search strategies, such as Bisection, Titration (bottom-up, top-down), “Ping-pong” and others. There is evidence that different methods produce different results, and this has been attributed to psychological biases. This paper shows that different iterative procedures will produce different results even if there are no psychological biases; we only have to assume that preferences are stochastic. We assume that individual preferences are characterized by a probability distribution and that the subject's response to each question of the iteration process is drawn from her/his “true” distribution (no biases). Using simulation, we compare the mean of this “true” distribution with the mean elicited by each iterative procedure. We find that the difference between the “true” and the elicited mean is larger for Titration than for Bisection. Two strategies seem to perform better than the rest. One is to randomize the starting point and use Bisection. The other is to adapt the starting point to the severity of the health state. This requires proceeding in stages: first, we estimate the range where most of the probability mass is located; next, we adapt the starting point to the severity of the health state. In this case, Titration performs better than Bisection.

1. Introduction

Several preference elicitation methods belong to the category of “matching”. Matching methods ask subjects to establish indifference between two options. One of the options has all attributes predetermined at some fixed level.
In the other option, all attributes except one are set at predetermined levels, and subjects are asked to state the level of the missing attribute that makes the two options equally good. In practice, however, indifference is usually established by asking subjects a series of iterative choice questions, each of which depends on the response to the previous one. These questions make it possible to estimate an interval that contains the indifference point; the more questions are asked, the narrower the interval. Finally, once the interval is narrow enough, either subjects are asked an open question about where in the interval the indifference point lies, or the researcher assumes that the indifference point is the midpoint of the interval. We will call these methods “Iterative Choice-Based” (ICB). In health economics they are widely used to elicit preferences for health states with methods such as the Time Trade-Off (TTO) or the Standard Gamble (SG). These methods are very efficient, since they obtain a great deal of information about the structure of preferences at the individual level with a small number of questions.

Unfortunately, ICB methods are not without problems. For example, different iteration mechanisms produce different results. Lenert et al (1998) compared several iteration mechanisms and found that utilities changed depending on the iteration procedure. More specifically, they compared “top-down titration” with “ping-pong”. The first method asks subjects whether they would accept some risk of death (in the Standard Gamble) in order to avoid a certain illness; if they accepted, the risk was increased (1%, 2%, 3%...) until indifference was reached. In the “ping-pong” method the subject was offered the following sequence of risks for the SG: 1%, 99%, 2%, 98%, 10%, 90%, 80%, 20%, 70%, 30%, 60%, 40%, 50%. They found that titration consistently produced higher utilities.
Brazier and Dolan (2005) also compared Ping-pong and Titration, with similar results, and Hammerschmidt et al (2004) also provide evidence that different iterative procedures lead to different utilities. In summary, there is evidence that different ways of iterating produce different values.

Researchers have reacted to these findings in different ways:
1. In some cases, they use a single iteration method. This may not be a problem if the objective of the paper is to test hypotheses that require holding everything constant (including the iteration method). However, it is more worrying if the objective is to compare utilities produced by different methods.
2. In other cases (Bleichrodt et al, 2005), they use procedures designed to make each choice in the iteration process as independent as possible from the rest. In some respects, they try to elicit each preference as if it were the first choice in the sequence, on the assumption that this is the least biased choice. This is based on the idea that the problem with iterative methods comes from anchoring effects: the subject will reveal her “true” preferences if each step in the iterative procedure is independent of the previous one.
3. Another potential strategy is to randomize the starting point. The rationale appears to be that randomization averages out errors and biases, which implicitly assumes that anchoring effects generate a distribution of errors with zero mean.
4. Finally, some researchers (Stein et al, 2009) claim that some search procedures are less biased than others, and they use the procedures they believe to be least biased.

All these approaches have one thing in common: they assume that different search methods produce different results because of a psychological bias. This bias usually stems from the fact that people do not treat each choice independently of previous choices in the iteration process.
This leads some authors to conclude that “utility values are heavily influenced by, if not created during, the process of elicitation” (Lenert et al 1998). That is, given these results, they question the very existence of preferences.

The objective of this paper is to show that the fact that different ICB methods produce different results can be explained in a less radical way. We can keep the assumption that preferences exist, provided that they are stochastic rather than deterministic. We will show that, even in the absence of any psychological bias, iterative methods may produce different results depending on the iterative procedure used. This does not conflict with interpreting these effects as biases; the two explanations can coexist. What we will show is that we do not need to resort to psychology to explain why utilities can differ depending on the iterative procedure used. The only assumption we need is that preferences are stochastic. This is hardly a strong assumption, since there is plenty of evidence of variability in preferences for health states. We are not aware of any test-retest study that found a correlation coefficient of 1.0. For example, Feeny et al (2004) report Intraclass Correlation Coefficients (ICC) ranging from 0.49 to 0.62. Although they report that mean aggregate utilities are stable over time, the ICC reveals variability at the individual level. This combination (variability at the individual level and stability at the aggregate level) often does not worry researchers much, since they are interested in the preferences of groups of subjects, and they interpret individual-level variability as random error. We will show that this variability may help explain why different ICB methods produce different utilities.
The structure of the paper is as follows. First, we use some examples to show the intuition behind our hypotheses. Next, we present the methodology that we use to derive our predictions. We then show our results, and a discussion closes the paper.

2. ITERATIVE CHOICES: SOME EXAMPLES.

We start with some examples to show the intuition behind our argument. Assume a subject who has to evaluate a health state (better than death) with the TTO. Duration in bad health is 10 years, and we want to know the duration in full health that the subject considers equivalent to 10 years in bad health. Researchers have to make several decisions: a) What level of precision will be used? b) What is the starting point? c) How many iterations (i.e., iterative choices) will be used? d) Which search procedure will be used? Assume that the researcher follows this strategy:
1. She uses one year as the level of precision in the iteration process; that is, she wants to know the values of X and Y such that (10 years, bad health) < (X years, full health), (10 years, bad health) > (Y years, full health) and X - Y = 1.
2. She takes the midpoint between X and Y as the true value, so utilities will be 0.95, 0.85 and so on.
3. She randomizes the starting point. That is, the sample is split equally across starting points: one group starts with the choice (10 years, bad health; death) vs (1 year, full health; death), another with (10 years, bad health; death) vs (2 years, full health; death), and so on.
4. She uses Bisection as the search procedure. That is, if subjects prefer (10 years, bad health; death) to (1 year, full health; death), the next question will be (10 years, bad health; death) vs (6 years, full health; death), and so on.

Assume, finally, that the subject has to evaluate a health state that is not too severe.
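As a minimal sketch of the Bisection rule in item 4 (an illustration, not the authors' code), the function below assumes one-year precision on a 0-10 scale and rounds midpoints of .5 upwards, which reproduces the step from (1 year) to (6 years) described above. `prefers_bad_health` is a hypothetical callback standing for the subject's answer; here it is a deterministic subject whose utility is fixed at 0.87, so the sketch shows only the sequence of questions and leaves out the stochastic element.

```python
def bisection_questions(start, prefers_bad_health, lo=0, hi=10):
    """Return the full-health durations offered and the final (lo, hi) interval.

    start: years in full health offered in the first question (1..9).
    prefers_bad_health(x): hypothetical callback, True if the subject prefers
        (10 years, bad health) to (x years, full health), i.e. U > x/10.
    """
    asked = []
    x = start
    while True:
        asked.append(x)
        if prefers_bad_health(x):
            lo = x  # indifference point lies above x
        else:
            hi = x  # indifference point lies below x
        if hi - lo == 1:  # one-year precision reached
            return asked, (lo, hi)
        x = (lo + hi + 1) // 2  # midpoint of the remaining interval, .5 rounded up


def fixed_subject(x):
    """Hypothetical deterministic subject whose utility is always 0.87."""
    return 0.87 > x / 10


print(bisection_questions(1, fixed_subject))  # ([1, 6, 8, 9], (8, 9))
print(bisection_questions(9, fixed_subject))  # ([9, 5, 7, 8], (8, 9))
```

A deterministic subject lands in the 8-9 interval whichever end she starts from; the argument that follows is about what changes once each answer is a random draw.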
Since her preferences are stochastic, we assume that her responses are generated by some probability distribution (see column “True distribution” in Table 1). This distribution implies that, if the subject were asked repeatedly to choose between (9 years, FH) and (10 years, bad health), she would choose (10 years, bad health) in 60% of the cases, implying a utility of 0.95 (the midpoint of the 0.9-1.0 interval); in the other 40% of the cases she would choose (9 years, FH). Assume that the first question, generated randomly, is the choice between (8 years, FH) and (10 years, bad health). In 80% of the cases the subject will choose (10 years, bad health), implying that U > 0.8, and in 20% of the cases she will choose (8 years, FH). This is the essence of the concept of “stochastic” preferences. The subject does not always give the same response, but this does not imply that she is irrational or that her preferences do not exist. Why do subjects give different responses at different moments in time? We do not really know. We can simply assume that subjects can be in different “states of mind” on different occasions. Sometimes she perceives the health state as very mild and is not willing to give up 1 year of life to improve quality of life. At other times she perceives the health state as somewhat more severe and is willing to give up more than one year. This is what we observe in surveys: variability at the individual level. However, the subject can most probably give a rational explanation of both decisions: perhaps in some cases she focuses on one aspect of her life and in other cases on another. One implication of this perspective is that giving different responses to the same question does not imply that she has made a mistake. Stochastic preferences imply that all “states of mind” are equally valid. In principle, there are no mistakes.
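The hand calculation in the example that follows can be automated. The sketch below is illustrative and is not the authors' simulation code: it treats every answer as an independent draw from a hypothetical interval distribution `TRUE_P` and enumerates all Bisection paths exactly. Only the choice probabilities quoted in this section (60% at 9 years, 80% at 8, 90% at 7, 99% at 5) are taken from the text; the split of the remaining probability mass is invented, so the true mean here is 0.874 rather than the 0.872 of Table 1.

```python
# Hypothetical "true" distribution over ten one-year intervals: index j covers
# [j, j+1] years in full health, i.e. utilities between j/10 and (j+1)/10.
# Upper-tail sums match the text (P(U>0.9)=0.60, P(U>0.8)=0.80, P(U>0.7)=0.90,
# P(U>0.5)=0.99); the split of the remaining mass is invented.
TRUE_P = [0.0, 0.0, 0.0, 0.0, 0.01, 0.04, 0.05, 0.10, 0.20, 0.60]


def p_prefers_bad(x, p=TRUE_P):
    """P(choosing (10 years, bad health) over (x years, full health)) = P(U > x/10)."""
    return min(1.0, max(0.0, sum(p[x:])))


def bisection_distribution(start, p=TRUE_P):
    """Exact probability of ending in each (lo, hi) interval under Bisection,
    when every answer is an independent draw from p (no memory, no anchoring)."""
    result = {}

    def walk(x, lo, hi, prob):
        q = p_prefers_bad(x, p)
        for prefers_bad, branch in ((True, q), (False, 1.0 - q)):
            if branch == 0.0:
                continue
            new_lo, new_hi = (x, hi) if prefers_bad else (lo, x)
            if new_hi - new_lo == 1:  # one-year precision reached
                key = (new_lo, new_hi)
                result[key] = result.get(key, 0.0) + prob * branch
            else:  # next question at the midpoint, .5 rounded up
                walk((new_lo + new_hi + 1) // 2, new_lo, new_hi, prob * branch)

    walk(start, 0, 10, 1.0)
    return result


def mean_utility(dist):
    """Mean elicited utility, scoring each final interval at its midpoint."""
    return sum(prob * (lo + hi) / 20 for (lo, hi), prob in dist.items())


true_mean = sum(pj * (j + 0.5) / 10 for j, pj in enumerate(TRUE_P))
from_top = bisection_distribution(9)
from_bottom = bisection_distribution(1)
print(f"{from_top[(8, 9)]:.5f}")  # 0.28512 = 0.4 x 0.99 x 0.9 x 0.8
print(f"{true_mean:.3f} {mean_utility(from_top):.3f} {mean_utility(from_bottom):.3f}")
# 0.874 0.893 0.860
```

With this hypothetical distribution, starting from the top yields a mean above the true one and starting from the bottom yields one below it, the same direction as the 0.893 and 0.847 reported in the text; the exact figures differ because the lower tail of Table 1 is not reproduced here.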
The concept of error only applies to cases such as the subject pressing the key for option A when she prefers B. Apart from that, we can talk about preferences as a distribution, with a mean, median or other moments, but not really about wrong and right responses, or even about “contradictions”. That is, if health state A is better than B, a response such that U(B) > U(A) does not have to be a mistake: if the two distributions overlap, this will happen with some probability, as long as subjects do not remember (or link) the two responses. In this paper, however, we assume that the objective of the elicitation procedure is to estimate the mean.

Having explained what we understand by stochastic preferences, we now show how they affect ICB methods. Assume that the first choice is (10Y, bad health) vs (9 years full health). We will show that, in this case, the expected mean is 0.893. If we start with (10Y, bad health) vs (1 year full health), the expected mean is 0.847. The reason is that the probability distributions generated by starting from 9 and from 1 are different, even if every single answer comes from the “true” probability distribution, whose mean is 0.872. For example, we will show that, starting from 9, the probability of ending in the indifference interval of 8-9 years in full health is 28.51%, not 20% (the true probability). Why? If she starts with (10Y, bad health) vs (9 years full health), she will end in the interval 8-9 years in full health if:
1. In the choice between (10Y, bad health) and (9 years full health) she prefers (9 years full health). The probability of this response is 40%. The iterative process (assuming Bisection) will then present the choice between (10Y, bad health) and (5 years full health).
2. In the choice between (10Y, bad health) and (5 years full health) she prefers (10Y, bad health). The probability of this response is 99%. The iterative process will then present the choice between (10Y, bad health) and (7 years full health).
3. In the choice between (10Y, bad health) and (7 years full health) she prefers (10Y, bad health). The probability of this response is 90%. The iterative process will then present the choice between (10Y, bad health) and (8 years full health).
4. In the choice between (10Y, bad health) and (8 years full health) she prefers (10Y, bad health). The probability of this response is 80%.

In summary, if she starts with the choice (10Y, bad health) vs (9 years full health), she will end up in the interval 8-9 years in full health only if all of the above happens, and the probability of this is 0.4 x 0.99 x 0.9 x 0.8 = 0.285. This is not the true probability (20%). Each time we start from (10Y, bad health) vs (9 years full health), there is some chance that the subject will end up in a different interval, given the stochastic nature of her preferences. If we could repeat these questions a large number of times (and the subject suffered some kind of instantaneous amnesia), she would end up in the different intervals of Table 1 with the frequencies presented in the last two columns of Table 1. This would produce a mean of 0.893 if we had started from (9Y, FH) and of 0.847 if we had started from (1Y, FH).

What is important to observe is that utilities change with the starting point even if we assume that there are no psychological biases: each response in the iteration process comes from the “true” distribution. Moreover, we observe a tendency to obtain higher utilities if we start from above (9 years full health) than if we start from below (1 year full health). This could be interpreted as evidence of anchoring effects, although we have just seen that no anchoring is involved. Of course, this does not exclude the possibility that anchoring also has an effect. What we say is that we do not need any psychological bias (e.g., anchoring) to observe utilities changing when the starting point changes.

This example highlights several issues:
1. The “solution” to the problem generated by iterative methods is not to use methods that encourage subjects to treat each choice as independently as possible from the rest. In our example, the subject has treated each choice independently from the rest.
2. Randomization is not necessarily the right solution. Randomization is the right approach only when the “mean of the means” estimated from each starting point coincides with the “true mean”, which only happens in very specific cases. Moreover, randomization has a potential drawback: it increases sampling error, since it reduces the number of subjects starting from each point. The potential benefit of having observations across all starting points can be offset by the larger error generated by the smaller sample size at each starting point.
3. In order to find “solutions”, it is important to have observations of distributions at the individual level. This is an important point that we would like to stress. At the aggregate level we observe that different subjects give different responses. This variability may come from two sources. One is that subjects have heterogeneous but deterministic preferences. The other is that preferences are stochastic at the individual level. If subjects have heterogeneous but deterministic preferences, iterative methods will not be problematic. For example, assume that 50% of the subjects in our sample state that the utility of a health state is in the interval 0.6-0.7 and the other 50% that it is in the interval 0.9-1.00. This could be because our sample is split into two groups with very different views about health. However, a single group with homogeneous but stochastic preferences could generate this response pattern as well.
In the first case, we would always obtain the same mean irrespective of the starting point; this would not happen in the second case.

In this paper we will show that the best strategy for iterative methods depends on the characteristics of the underlying (stochastic) utility functions. However, since there is no empirical evidence about what those functions look like at the individual level, we make some assumptions that we consider reasonable in order to compare iterative methods. The method we use to approach this problem is simulation.

3. METHODS.

Variability in the preferences of respondent $i$ is measured by means of a probability function that assigns a value $p_{ij}$ to each of the $j = 1, \dots, J$ intervals into which the range of variation of $X$ is partitioned, where $p_{ij} \ge 0$ and $\sum_{j=1}^{J} p_{ij} = 1$ for all $i$. These intervals can be life years in full health in the case of the TTO or probabilities in the case of the SG; the distinction is not relevant here. The average utility of respondent $i$, $\mu_i$, is a weighted average of her utility evaluated at the midpoint of each interval, $\mu_i = \sum_{j=1}^{J} p_{ij} u_j$, where $u_j$ denotes the utility at the midpoint of interval $j$. The parameter of interest is $\mu$, the average utility across all $N$ respondents, $\mu = \frac{1}{N} \sum_{i=1}^{N} \mu_i$. The researcher observes the individual choices, and her goal is to elicit $\mu$. We assume the researcher deals with this identification problem using ICB methods.

We characterize the identification problem as follows. We assume that $X$ ranges from 0 to 10 and is divided into $J = 10$ intervals of equal width, and we consider nine starting points corresponding to the values 1 to 9 of $X$. The objective is to estimate in which of the 10 intervals the indifference point is located. We assume that indifference is at the midpoint of the interval, and we want to reach this interval through a series of iterative choices. The researcher has to make some decisions, such as:
1. Which is the starting point?
2. Which search procedure will be used?
3. Will there be a limit on the number of iterations?
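The respondent-level mean μ_i and the population mean μ described above reduce to a few lines of arithmetic. The sketch below assumes that the intervals are laid out directly on the utility scale (interval j covers utilities from j/10 to (j+1)/10) and uses three invented respondents; it only makes the notation concrete.

```python
J = 10
# Utility at the midpoint of each interval: 0.05, 0.15, ..., 0.95.
# (Python counts intervals from 0, the text from 1.)
MIDPOINTS = [(j + 0.5) / J for j in range(J)]

# Invented p_ij for three respondents (rows); each row is one respondent's distribution.
P = [
    [0.0, 0.0, 0.0, 0.0, 0.01, 0.04, 0.05, 0.10, 0.20, 0.60],
    [0.0, 0.0, 0.05, 0.10, 0.20, 0.30, 0.20, 0.10, 0.05, 0.0],
    [0.60, 0.20, 0.10, 0.05, 0.04, 0.01, 0.0, 0.0, 0.0, 0.0],
]


def respondent_mean(p_i):
    """mu_i = sum_j p_ij * u_j, after checking that p_i is a probability vector."""
    if any(pij < 0 for pij in p_i) or abs(sum(p_i) - 1.0) > 1e-9:
        raise ValueError("p_i must be non-negative and sum to one")
    return sum(pij * uj for pij, uj in zip(p_i, MIDPOINTS))


mu_i = [respondent_mean(row) for row in P]
mu = sum(mu_i) / len(mu_i)  # average utility across the N respondents
print([round(m, 3) for m in mu_i], round(mu, 3))  # [0.874, 0.55, 0.126] 0.517
```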
In this paper we assume that there is no limit on the number of iterations; that is, we ask as many questions as necessary to place the subject in one of the 10 intervals that we have decided to use. For this reason we concentrate on the first two issues. More specifically, we assume that the researcher has the following options for the starting point and the search procedure:
1. Starting point. Options:
a. Randomize. This implies that each of the 9 potential starting points is used equally often.
b. Use a fixed point. Three cases have been used in the literature:
i. The upper end, e.g. (10 years bad health) vs (9 years full health)
ii. The lower end, e.g. (10 years bad health) vs (1 year full health)
iii. The middle point, e.g. (10 years bad health) vs (5 years full health)1
2. Search procedure. In this paper we simulate the effect of three search methods:
a. Bisection: this method halves the indifference interval into two parts of equal size at each step. For example, if a subject prefers (10 years bad health) to (4 years in full health), the next choice would be between (10 years bad health) and (7 years in full health), since 7 is the midpoint between 4 and 10.
b. Titration: this method usually starts from one end of the range and moves up or down one unit at a time until the subject switches from one option to the other. The literature refers to top-down titration when the subject starts with high values (e.g. 9 years full health) and moves down (8, 7…), and to bottom-up titration when the subject starts with low values (e.g. 1 year in full health) and moves up (2, 3…). In this paper we also use two other options, namely randomizing the starting point and starting from the middle.
c. Ping-pong: in this method subjects start from one of the two ends and then move to the other end, progressively narrowing down the interval where indifference is reached.
For example, the researcher could start from one end, (10 years bad health) vs (9 years full health) [the Ping], or from the other end, (1 year full health) [the Pong]. While this would in principle lead to 12 (4 starting points x 3 search procedures) different iterative procedures, only 10 are feasible, since Ping-pong cannot start from the middle by definition. One interesting case is Titration+Middle, since this is basically the method used by the EuroQol group.

To compare all these iterative procedures we randomly generate 1000 distributions of a given type and draw a sample of size 100 from each distribution. This sample size (n=100) is arbitrary, but we think it is a generous one, since it refers to the number of subjects evaluating each health state. There are not many surveys in which more than 100 subjects have evaluated more than one health state. We also analyzed the effect of increasing the number of observations to 500 and 1000, but we do not present those results here. The main assumption in our simulations concerns the shape of the stochastic utility functions. We make the following assumptions:
1. There are seven types of distributions according to their degree of skewness, namely severely left skewed (LL), left skewed (L), slightly left skewed (SL), normal (N), slightly right skewed (SR), right skewed (R) and severely right skewed (RR).
2. These distributions are centered in the following intervals of X: 0-1 [LL], 1-2 [L], 2-3 [SL], 4-6 [N], 7-8 [SR], 8-9 [R] and 9-10 [RR]. In health economics each interval could be related to the severity of the health state. In the TTO we can think of X as the number of life years people are willing to give up, or in the SG as the risk of death they are willing to accept. In this case, the LL distribution would characterize very mild health states and the RR distribution severe health states. So LL distributions imply high utility values or, equivalently, low values of X.
The opposite holds for RR distributions.

The justification for these assumptions is related to the view of imprecise preferences of MacCrimmon and Smith (1986) and Butler and Loomes (2007), who assume that variability is proportional to the range of potential responses. The “model” of preferences that supports these functions is as follows. We assume that each subject has some sort of core preferences, that is, a band of responses (or even a single value) on which she would settle if asked to think about the question repeatedly, with opportunities for deliberation and reflection. So if we were to represent the probability distribution of her responses, we would expect the modal response to lie somewhere in this core, while allowing the distribution to stretch away from this area within the bounds of the response interval. Depending on the location of the core relative to the upper and lower bounds of the range, we would expect such a distribution to be more or less skewed. Specifically, where the core lies near a bound, we would expect the skewness to be more pronounced because of end-of-scale effects: positive skew when the core is close to the lower bound of the response interval and negative skew when it is close to the upper bound. For example, assume that the health state to be evaluated is mild and the median utility is about 0.9. The distance from the median to the top of the scale (0.999) is then much smaller than the distance to the bottom of the scale (0.000). The probability that the subject gives an answer lower than 0.9 is higher than the probability that she gives an answer between 0.9 and 1. That is, subjects may know, for example, that the health state is not too severe, so they should not give up too many life years, say 1 or 2 at most. That is where the core could be located. However, from time to time they can be in a different “state of mind” and give a value higher than 2.
This produces a skewed distribution with most of the probability mass between 1 and 2 but with some responses higher than 2.

We generated 1000 distributions of each type, centered in the intervals mentioned above. Each distribution was generated by randomly drawing, for each interval of X, a number from a uniform distribution defined on different ranges depending on the type of distribution that we aimed to generate; the resulting values were then normalized to sum to one. This way of proceeding ensures that the validity of our results does not depend on the particular distributions considered. The severely skewed distributions are characterized as lognormal distributions whose means are located in the first or last intervals. The remaining types of distributions are not necessarily well-shaped distributions. In order to compare the effect of the different iterative procedures on the estimated utilities, we assume that: a) the moment of interest is the mean, and b) the researcher has only one opportunity to observe the mean, that is, she can elicit each subject's response only once and observes only one aggregate distribution. Although we have estimated 1000 means for each iterative procedure, in practice the researcher will see only one of them. For this reason, we compare iterative procedures according to: a) the probability of making a mistake, that is, of not estimating the true mean; and b) the size of the error, that is, estimated mean - true mean. Since the simulation produces 1000 means, it is highly improbable that any of them will exactly match the true mean. However, in many cases the differences are negligible, so we consider an error threshold below which errors are irrelevant. We set this threshold at 0.03, since it has been suggested (Wyrwich et al 2005) that differences of this size have clinical significance.
For this reason, our results focus only on differences between the true and the estimated mean of at least 0.03. Iterative methods are compared according to the probability of making a mistake equal to or larger than 0.03 and the size of the error. One option is to estimate the mean of all absolute errors (equal to or larger than 0.03); this is what we call the Mean Absolute Error (MAE). However, minimizing the MAE may not be the main objective of a researcher, since the MAE does not reflect the variability of errors. For this reason, we also present the errors corresponding to three percentiles: 5%, 50% and 95%. In this way, the range of errors can also be observed.

4. RESULTS

Table 1 shows the probability that the estimated mean is more than 0.03 points above or below the true mean (on a utility scale from 0 to 1). We can see that:
1. Bisection tends to perform better than Titration. The combination that clearly outperforms all the rest is Bisection combined with Randomization.
2. Titration only produces good results when the starting point is close to the interval where the probability mass is located (the core). That is, the top-down strategy only performs well for mild health states (Severely Left Skewed), and the bottom-up strategy only performs well for severe health states (Severely Right Skewed). When starting from the middle of the distribution, Titration only performs well for intermediate health states. Randomization hardly improves Titration.
3. The pattern with Ping-pong is similar to that of Titration. It only performs well when the starting point coincides with the core, that is, for mild health states when it starts from above and for severe health states when it starts from below.
4. The method used by the EuroQol group generates values that are too low for mild health states and too high for severe health states.
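The Monte Carlo design and the comparison criteria described above can be sketched as follows. This is an illustrative reimplementation under stated assumptions, not the authors' code. `random_distribution` is a hypothetical generator: the text does not report the uniform ranges used for each distribution type, so the weights (5-10 at the core interval, 1-3 next to it, 0-1 elsewhere) are invented. Every answer is an independent draw from the subject's distribution, Titration from an interior start is assumed to move one year at a time in the direction set by the first answer, and the percentiles are taken over signed errors (estimated minus true mean). The MAE here averages all absolute errors; the variant restricted to errors of at least 0.03 would filter `abs_errors` first. Ping-pong is left out because its question sequence is not fully specified in the text.

```python
import random
import statistics

J = 10  # one-year intervals; interval j is scored at utility (j + 0.5) / 10


def random_distribution(core, rng):
    """Hypothetical generator: one uniform draw per interval, heavier near the core
    interval, normalized to sum to one. The ranges are invented for illustration."""
    weights = []
    for j in range(J):
        gap = abs(j - core)
        low, high = (5.0, 10.0) if gap == 0 else (1.0, 3.0) if gap == 1 else (0.0, 1.0)
        weights.append(rng.uniform(low, high))
    total = sum(weights)
    return [w / total for w in weights]


def prefers_bad(x, p, rng):
    """One independent answer: True if (10 years, bad health) beats (x years, full health)."""
    return rng.random() < sum(p[x:])


def bisection(start, p, rng):
    lo, hi, x = 0, J, start
    while True:
        if prefers_bad(x, p, rng):
            lo = x
        else:
            hi = x
        if hi - lo == 1:
            return (lo + hi) / (2 * J)
        x = (lo + hi + 1) // 2  # midpoint, .5 rounded up


def titration(start, p, rng):
    """First answer fixes the direction; then move one year at a time until the answer flips."""
    up = prefers_bad(start, p, rng)
    x = start
    while True:
        nxt = x + 1 if up else x - 1
        if nxt in (0, J) or prefers_bad(nxt, p, rng) != up:
            lo, hi = (x, nxt) if up else (nxt, x)
            return (lo + hi) / (2 * J)
        x = nxt


def simulate(procedure, start_rule, core, n_dists=1000, n_subjects=100, seed=0):
    """One error (estimated minus true mean) per generated distribution."""
    rng = random.Random(seed)
    errors = []
    for _ in range(n_dists):
        p = random_distribution(core, rng)
        true_mean = sum(pj * (j + 0.5) / J for j, pj in enumerate(p))
        answers = [procedure(start_rule(rng), p, rng) for _ in range(n_subjects)]
        errors.append(sum(answers) / n_subjects - true_mean)
    return errors


def summarize(errors, threshold=0.03):
    """Share of errors of at least `threshold`, MAE, and 5/50/95% error percentiles."""
    abs_errors = [abs(e) for e in errors]
    cuts = statistics.quantiles(errors, n=20)  # cut points at 5%, 10%, ..., 95%
    return {
        "share_large": sum(a >= threshold for a in abs_errors) / len(errors),
        "mae": sum(abs_errors) / len(errors),
        "p5": cuts[0], "p50": cuts[9], "p95": cuts[18],
    }


if __name__ == "__main__":
    core = 9  # a mild state: most mass in the 0.9-1.0 utility interval
    for name, proc, rule in [
        ("Bisection+Randomization", bisection, lambda rng: rng.randint(1, 9)),
        ("Titration, bottom-up", titration, lambda rng: 1),
        ("Titration, top-down", titration, lambda rng: 9),
    ]:
        print(name, summarize(simulate(proc, rule, core, n_dists=200)))
```

Because `start_rule` receives the random generator, Randomization is simply `lambda rng: rng.randint(1, 9)`, a fixed starting point is a constant function, and an adaptive starting point would be a constant chosen from the distribution type.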
The implication of these results is that, in order to obtain good results, it is important: a) to use Bisection, and b) to choose a starting point close to the core. It seems that all methods work well when the starting point is close to the true mean. It is interesting to observe that Randomization in itself does not generate unbiased estimates. The main assumption behind the use of Randomization is that errors cancel each other out. However, Randomization includes several starting points that are far from the core, and these produce some means that are far from the true mean. For example, assume we use Randomization coupled with Titration and we want to estimate the utility of a mild health state. In that case, Randomization will include many questions far from where most of the probability mass is located, and errors will not cancel each other out. When Randomization is combined with Titration, errors only cancel each other out for intermediate health states.

Bisection performs better than Titration because it does not ask too many questions on the “wrong” side of the distribution. If it starts from the wrong place, Titration has to ask many questions until it reaches the core. This “inflates” the part of the distribution where the questions are asked. For example, assume that a health state is mild. Bisection will quickly chop off the right tail of the distribution, while Titration will keep asking questions in that part of the distribution. This increases the chances that subjects respond affirmatively to a question in which they are asked to trade off a large number of life years in order to avoid a mild health state.
Although the chances that the subject will agree to give up a large number of life years are small, an iteration method that asks many questions in this range maximizes the chances of obtaining affirmative responses to “extreme” questions. Something similar seems to happen with Ping-pong. If something like this lies behind our results, one consequence could be that a process that adjusts the starting point to the severity of the health state would work quite well.

What happens if we adapt the starting point to the severity of the health state? Can we improve Titration or Bisection using this strategy? We call this strategy Adaptive Starting Point (ASP). Under this strategy we start from (8 years full health) vs (10 years bad health) for the Severely Left Skewed distribution and reduce the years in full health by 1 for each subsequent distribution. That is, we start from (7 years, full health) for the Left Skewed distribution, from (6 years, full health) for the Slightly Left Skewed distribution, and so on until we reach the Severely Right Skewed distribution, where the first choice is (2 years in full health) vs (10 years bad health). The results for ASP can be seen in Table 2. We also include in this table Randomization+Bisection, since it is the best-performing strategy in Table 1. Surprisingly (or maybe not), the best method involves Titration. However, this fits well with the explanation given above: Titration performs better than Bisection in the ASP strategy because all questions are asked in the range where most of the probability mass is located. Bisection is less efficient since, in order to find where the core is located, it needs to ask questions that are far from it. Another way of illustrating this point is to analyze the number of errors after a certain number of iterations. Table 3 presents the percentage of errors larger than 0.03 in Bisection+Randomization and Titration+ASP.
It can be seen that Titration+ASP reduces the number of errors very quickly. In fact, the percentages in Table 4 after three iterations are very similar to the percentages in Table 3 for Titration+ASP. There is very little to be gained after three iterations. This does not happen with Bisection+Randomization, which needs more iterations to find the interval where indifference is located. This is a logical consequence of asking more questions outside the main range. We now move to the second characteristic we use to evaluate iteration methods, namely, how large the error is. This information is provided in Table 4. We present the errors that correspond to the 5th, 50th and 95th centiles, so that the whole range of errors can be easily perceived. Methods based on Titration produce the largest errors. The Titration method that produces the best results is Titration+ASP. Randomization also gives rise to large errors (>0.1) when it is combined with Titration. We can also gauge the size of errors with the Mean Absolute Error (MAE), which is the mean of all errors in absolute value. This is shown in Table 5. The MAE cannot be interpreted as the “most common error” since, as is well known, the same mean can arise from different distributions. We suggest that, for the researcher, it is even more important to be sure that the chances of making a big error are small. For this reason, Table 5 should be read in conjunction with Table 4.

DISCUSSION

We have shown that different iterative procedures produce different results even if the respondent always responds truthfully (from a stochastic point of view). Means tend to be correlated with the starting point. However, this effect does not arise from what has traditionally been considered the “starting point bias”. There seems to be a logic to our results, namely, the estimated means will be closer to the true mean if most of the questions of the iterative procedure are asked in the area where most of the probability mass is located.
This is the reason why Titration performs so badly. However, we have also seen that if the starting point is far from the mean, results are not going to be good even with Bisection. In any case, Bisection usually performs better than Titration. There are two ways of establishing the starting point that perform fairly well, namely, Randomization and “adaptive starting”. Randomization seems the simpler to implement, since it does not require any previous information. If we want to use “adaptive starting”, we need some hypothesis about the severity of the health state, and some piloting is necessary to obtain this information. Two caveats apply here. One is that Randomization alone does not guarantee the best results. The second is that Randomization may not perform so well if the shape of the probability distributions changes. That is, one of the reasons that Randomization works well when coupled with Bisection is that we did not place a limit on the number of questions, so the search procedure tends to find the main probability mass sooner or later. However, it is clear that Randomization works through a process of “error compensation” and involves asking questions that we know are going to give rise to biased estimates: Bisection does not work well when it starts from the wrong place, and we simply expect those errors to cancel each other out. This is not the philosophy behind “adaptive starting”. In this case we are always asking questions in the right part of the distribution. That is why it performs so well when coupled with Titration: it starts from the right place, and Titration keeps asking questions in the right part of the distribution. In summary, it seems that the two main options are Bisection+Randomization or ASP+Randomization/Titration.
In one case (Randomization), we hope that the biases introduced by questions asked from a “wrong starting point” at one end of the scale will be compensated by the biases introduced by a “wrong starting point” at the other end. In the other case (ASP), we hope that the starting point is the right one. If it is, the chances of making an important mistake are small with either Bisection or Titration. We understand that some researchers may be uneasy about using the ASP strategy. It has traditionally been assumed that using different starting points introduces biases into the elicitation procedure, because these effects have been attributed to anchoring. We show that this does not have to be the case: using the same starting point may also produce biased estimates. Of course, one cannot use ASP without some previous information about where the core is; it requires piloting. In some respects, the ASP strategy is similar to the strategy used to establish the bid values in Dichotomous Choice Contingent Valuation (DCCV) (Kanninen, 1993; Hanemann, Loomis, & Kanninen, 1991). In order to ask the right questions (the right bids), one needs to do some piloting using open-ended WTP questions. The problem with those methods is that the researcher may sometimes feel that, once she has enough information to set up the bids correctly, she already almost knows the final answer. However, the message of ASP is that it can be a good idea to proceed in stages. First, do some piloting to see where utilities are placed. Second, adjust the starting point to this previous information. In a sense, this is a Bayesian way to proceed. Finally, one could think that, if iterative procedures have all these problems, it is better to use non-iterative methods. To some extent, this is true. However, we should make several caveats in relation to non-iterative methods. First, they still need piloting to know where to put the “bids”.
Asking many questions in areas of the response scale where there is little movement is very inefficient. Second, it is complicated to elicit individual preferences with non-iterative methods for two reasons: a) more questions are needed per subject and b) we have to deal with errors (contradictions) in the process. Third, we can use non-iterative methods to obtain preferences at the aggregate level, as in Double-Bounded Dichotomous Choice (DBDC). However, the evidence from DBDC is that those methods may suffer from anchoring (Kanninen, 1995) when more than one dichotomous question is used. In health economics it would be unfeasible to elicit the utility of a large set of health states using DBDC. Fourth, the evidence from DBDC is that, when we elicit preferences only at the aggregate level, the shape of the distribution function that we assume generates the responses is extremely important, and small changes in the assumptions about this function can produce important changes in the results (Alberini, 2005). Fifth, these problems are compounded by the fact that preferences are heterogeneous, while most of these techniques assume a common distribution function for all subjects. Sixth, they are not useful for individual decision-making. It is true that advances in the literature have addressed many of these problems of non-iterative procedures. In spite of that, abandoning iterative methods may create important problems. Gathering information in stages, using Bayesian methods, can be a good alternative to non-iterative methods.

REFERENCES
Alberini, A. (2005). What is a life worth? Robustness of VSL values from contingent valuation surveys. Risk Analysis: An Official Publication of the Society for Risk Analysis, 25(4), 783-800.
Bleichrodt, H., Doctor, J., & Stolk, E. (2005). A nonparametric elicitation of the equity-efficiency trade-off in cost-utility analysis. Journal of Health Economics, 24(4), 655-678.
Brazier, J., & Dolan, P. (2005). Evidence of preference construction in a comparison of variants of the standard gamble method. HEDS Discussion Paper 05/04.
Butler, D. J., & Loomes, G. C. (2007). Imprecision as an account of the preference reversal phenomenon. American Economic Review, 97(1), 277-297.
Feeny, D., Blanchard, C. M., Mahon, J. L., Bourne, R., Rorabeck, C., Stitt, L., & Webster-Bogaert, S. (2004). The stability of utility scores: Test-retest reliability and the interpretation of utility scores in elective total hip arthroplasty. Quality of Life Research: An International Journal of Quality of Life Aspects of Treatment, Care and Rehabilitation, 13(1), 15-22.
Hanemann, M., Loomis, J., & Kanninen, B. (1991). Statistical efficiency of double-bounded dichotomous choice contingent valuation. American Journal of Agricultural Economics, 73(4), 1255-1263.
Kanninen, B. J. (1993). Optimal experimental design for double-bounded dichotomous choice contingent valuation. Land Economics, 138-146.
Kanninen, B. J. (1995). Bias in discrete response contingent valuation. Journal of Environmental Economics and Management, 28(1), 114-125.
Lenert, L. A., Cher, D. J., Goldstein, M. K., Bergen, M. R., & Garber, A. (1998). The effect of search procedures on utility elicitations. Medical Decision Making, 18(1), 76-83.
MacCrimmon, K., & Smith, M. (1986). Imprecise equivalences: Preference reversals in money and probability. University of British Columbia Working Paper 1211.
Stein, K., Dyer, M., Milne, R., Round, A., Ratcliffe, J., & Brazier, J. (2009). The precision of health state valuation by members of the general public using the standard gamble. Quality of Life Research: An International Journal of Quality of Life Aspects of Treatment, Care and Rehabilitation, 18(4), 509-1.
Wyrwich, K. W., Bullinger, M., Aaronson, N., Hays, R. D., Patrick, D. L., Symonds, T., & The Clinical Significance Consensus Meeting Group. (2005). Estimating clinically significant differences in quality of life outcomes. Quality of Life Research, 14(2), 285-295.