
Phys 114 – Introduction to Data Reduction with Applications (3-0-3)

Spring 2014, 8:30-9:55 am, M W, Room 314F

Topics:

An introduction to both the theory and application of error analysis and data reduction methodology.

Topics include the binomial distribution and its simplification to Gaussian and Poisson probability distribution functions, estimation of moments, and propagation of uncertainty. Forward modeling, including least-squares fitting of linear and polynomial functions, is discussed. The course enables students to apply the concepts of data reduction and error analysis using data analysis software applied to real data sets found in the physical sciences.

Objectives:

By the end of the course, students should

1.

Be able to address the pros and cons of various methods of measurement

2.

Be conversant with the data reduction and error analysis concepts mentioned above,

3.

Be able to analyze 1D and 2D data sets to find computational estimates of PDFs, moments, and to address the appropriateness of various forward models,

4.

Be familiar with various measurement techniques so as to best experimentally determine PDFs, moments, and the appropriateness of various forward models,

5.

Be able to devise an experiment capable of making a measurement to a pre-determined level of precision,

6.

Be able to create figures that are journal-quality,

7.

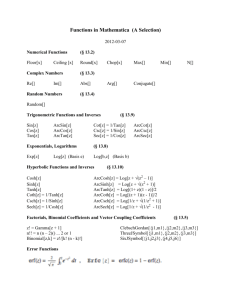

Be extremely familiar with the Mathematica software package so as to utilize it in subsequent classes and research endeavors.

Instructor: Vitaly A. Shneidman, Ph.D.

Email: vitaly@njit.edu, Office: 452 TIER

Office Hours: TBA

Co-requisite: MATH 111

Course Materials: Bevington, P. R. and D. K. Robinson, Data Reduction and Error Analysis for the Physical Sciences, 3rd ed., McGraw-Hill, Boston, 2003.

Licensed use of Mathematica 9

Course Requirements and Grading Policy:

Homework: 30%

Homework is given every week and is considered an important part of the class. The homework usually consists of reading the text, short answer questions, and numerous mathematical calculations. An assignment is given at the first lecture of the week [when theoretical material is covered] and will require measurements or calculations to be performed during that week, either at the second lecture or outside of class. Students are encouraged to work together on the homework problems, though each student is responsible for handing in an individual homework set.

3 Exams (2 during the semester and 1 final, worth 20% each): 60%

The purpose of the exams is to test the individual student's progress in the class. Exams are closed book/notes. Exams will be announced ahead of time.

In-class quizzes and class participation: 10%

There will be short, in-class quizzes at random times roughly every 2-3 weeks. In addition, attendance at lecture is expected and will be rewarded.

Grade Distribution: For each exam, and for the total of your homework grade, the grade distribution will be determined according to the Gaussian (normal) distribution. This is called grading on a curve. It will also be determined on an absolute scale, as shown in the last column of the table below. We will discuss as a class which grading scheme is fairest, and why. If your final grade according to the Statistical Placement is worse than according to the Absolute Placement, then the latter will be used.

| Letter Grade | Statistical Placement | Absolute alternative placement |
|---|---|---|
| A | > 2σ above mean | score > 85% |
| B | > 1σ above mean | 75% < score < 85% |
| C | within 1σ of mean | 60% < score < 75% |
| D | > 1σ below mean | 50% < score < 60% |
| F | > 2σ below mean | score < 50% |
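As an illustration only, the grading rule above can be sketched in a few lines of Python (the course itself uses Mathematica). The function names are hypothetical, the class standard deviation is assumed to be the population standard deviation, and scores that fall exactly on a boundary are assumed to receive the lower grade, since the table does not say how such ties are handled.

```python
from statistics import mean, pstdev

GRADES = "FDCBA"  # ordered from worst to best


def statistical_grade(score, class_scores):
    """Letter grade from the distance to the class mean, in standard deviations."""
    mu = mean(class_scores)
    sigma = pstdev(class_scores)  # assumption: population standard deviation
    z = (score - mu) / sigma if sigma > 0 else 0.0
    if z > 2:
        return "A"
    if z > 1:
        return "B"
    if z >= -1:
        return "C"
    if z >= -2:
        return "D"
    return "F"


def absolute_grade(percent):
    """Letter grade on the absolute scale (boundary values get the lower grade)."""
    if percent > 85:
        return "A"
    if percent > 75:
        return "B"
    if percent > 60:
        return "C"
    if percent > 50:
        return "D"
    return "F"


def final_grade(score, class_scores):
    """Use the absolute placement whenever the statistical placement is worse."""
    candidates = (statistical_grade(score, class_scores), absolute_grade(score))
    return max(candidates, key=GRADES.index)


# Hypothetical class scores, in percent.
scores = [40, 55, 62, 70, 78, 88]
for s in scores:
    print(s, statistical_grade(s, scores), absolute_grade(s), final_grade(s, scores))
```

Taking the better of the two placements means a curve can raise a grade but never lower it below the absolute scale.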

THE NJIT INTEGRITY CODE WILL BE STRICTLY ENFORCED AND ANY VIOLATIONS WILL BE BROUGHT TO THE IMMEDIATE ATTENTION OF THE DEAN OF STUDENTS.

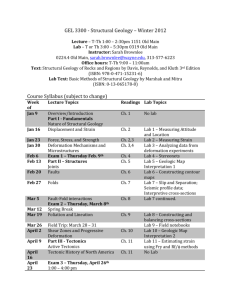

| Week | Date | Topic |
|---|---|---|
| 1 | Jan 22 | INTRODUCTION: Fundamental limitations of accuracy, and randomness in Physics. Lecture Notes. |
| 2 | Jan 27, 29 | Review of Mathematica: capabilities and programming environment. “IntroductionToMathematica.doc”. Types of numbers. Symbolic and numerical integration. Making plots, using functions. Non-elementary (special) functions used in data analysis. APPLICATION: Write a basic Mathematica program to plot generated data. |
| 3 | Feb 3, 5 | More on Mathematica. Lists and arrays of data. “114_intro2.pdf”. Lists. Basic matrix/array operations for reading in data and for graphical output. Choosing a plot scale, data vs. histograms. APPLICATION: Write a basic Mathematica program to read in real data and make a plot (the take home “HAT” project). |
| 4 | Feb 10, 12 | Uncertainties in Measurement: Chap 1 + Lecture Notes. Parent distributions. Sample mean and sample standard deviation. Percent error, SNR: reduction of noise through repeated measurements. APPLICATION: Given a counting experiment [e.g., CCD] find various quantities. |
| 5 | Feb 17, 19 | Probability Distribution Functions: Chap 2 + Lecture Notes. Discrete and continuous PDFs and CDFs. Binomial, Gaussian, Poisson, Other [Lorentzian-Cauchy, exponential, gamma, etc.]. Moments, focusing on the first and second moments. |
| 6 | Feb 24, 26 | Multivariable distributions and correlations. Lecture Notes. Change of variables. Multivariate Gaussian. |
| X | Feb 26 | EXAM 1 (Review Feb 24, Exam Feb 26) |
| 7 | March 3, 5 | Monte Carlo Methods. Chapter 5 + Lecture Notes. Finding area. The rejection method. Generation of random numbers with exponential, normal and Poisson distributions. |
| 8 | March 10, 12 | Error Analysis: Chap 3 + Lecture Notes. Statistical uncertainty. Bias. Propagation of Errors. Designing an experiment to make measurements to a particular precision. APPLICATION: Propagation of errors in a “complex” measurement. |
| | March 16-23 | SPRING BREAK |
| 9 | March 24, 26 | Estimators: Chap 4 + Lecture Notes. Chi-square, Student's t-Test. Kolmogorov-Smirnov test. Moments: Mean, variance, skew, and kurtosis. APPLICATION: Spectral Kurtosis PDF. |
| 10 | March 31, April 2 | The Forward Model I: Chap 6. Linear and log-linear forward model and least-squares fitting to a linear data set. |
| 11 | April 7, 9 | The Forward Model III: Chap 7 & Chap 8. Polynomial forward model and least-squares fitting to a polynomial data set. Generalized forward model. Generalized least-squares fitting. APPLICATION: Fitting a spectral line (take home project). |
| X | April 9 | EXAM 2 (Review Apr 7, Exam Apr 9) |
| 12 | April 14, 16 | Filtering and convolution. Lecture Notes. In class project “Saturn”. |
| 13 | April 21, 23 | 2D Data sets and images. Lecture Notes. Creating 2D Gaussians and other functions. Convolution and deconvolution. |
| 14 | May 5 | Review for Final Exam |

http://ist.njit.edu/software/mathematica/9/win.php