The Single document summarizer is an application which is

advertisement

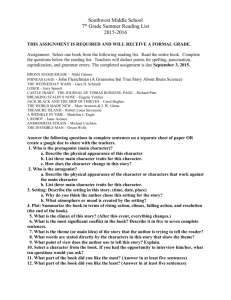

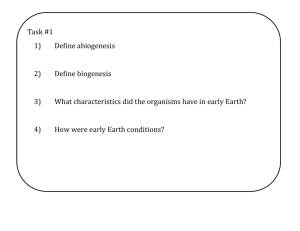

Single Document Summarization using Information Retrieval Approach Priyanka Sarraf [1] Yogesh Kumar Meena [2] M.Tech Scholar, Banasthali University, Jaipur [1] Assistant Professor, MNIT, Jaipur [2] Sarrafpriyanka60@gmail.com[1] Abstract Automatic summarization is the process of reducing a document by a computer program to create summary which retains the important aspects of the original document. As the use of whole document is very tedious task so summary helps identifying the general overview and its importance for us. Generally, there summarization: are two extraction approaches and of automatic abstraction. Extractive methods work by selecting a subset of existing words, phrases, or sentences in the original text to form the summary. Whereas, abstractive methods build an internal semantic representation and then use natural language generation techniques to create a summary that is closer to what a human might generate. Such a summary might contain words not explicitly present in the original. The state-of-the-art abstractive methods are still quite weak, so most research has focused on extractive methods. amount of documents listed in the order of estimated relevance. It is not possible for users to read each document in order to find useful ones. Automatic text summarization systems helps in this task by providing a quick summary of the information contained in the document. An ideal summary in these situations will be one that does not contain repeated information and includes unique information from multiple documents on that topic. The Single document summarizer is an application which is proposed to extract the most important information of the document. In automatic summarization, there are two distinct techniques either text extraction or text abstraction. Extraction is a summary consisting of a number of sentences selected from the input document. An abstraction based summary is generated where some text units are not present into the input document. With extraction based summary technique, some more features are added based on Information Retrieval. However, the total system is alienated into three segments: pre-processing the test document, sentence scoring based on text extraction and summarization based on sentence ranking. This paper presents basic model for extractive based generic text summarization and discuss evaluation methods for extracting summary. Keywords: Document summarization, extraction, In this approach generic based single document summarization system is proposed using extraction based summary techniques. Preprocessing, Sentence position value , Key words. 1. Introduction 2. Related Work With the increasing amount of online information, it becomes extremely difficult to find relevant information to users. Information retrieval systems usually return a large There are many research studies that have been conducted for automatic text summarization. In this section, related studies will be mentioned briefly. Fang Chen et al. in their work considered 3 features at sentence level. As per Sentence Location Feature, sentences at the beginning and at the end of a paragraph are more likely to contain material useful for summary. The second feature, based on paragraph location is similar to sentences, as paragraphs also are usually hierarchically organized, with crucial information at the beginning and concluding paragraphs. The third feature is Sentence Length Feature, where sentences that are too short or too long tend not be included in summaries. A fixed threshold of 5 words for shorter sentences and 15 words for longer sentences is used to decide whether the sentence should be included in summary. [8] Paice in his work examines the significance of each sentence by looking at the clues for identifying the importance of the sentence. He also provides scores for these sentences based on the clues. Finally sentences were retrieved based on some fixed threshold or by selecting sentences with highest score. He experimented sentence scoring with the following seven type of clues namely "Frequency-keyword approach", "titlekeyword method", "location method", "syntactic criteria"," Indicator phrase method", "cue method" and "relational criteria".[9] Radev et al. have presented an extraction based summarization scheme named as centroid based summarization. The main contributions of their paper are: the use of cluster -based relative utility (CBRU) and cross sentence informational subsumption (CSIS) for evaluation of single and multi document summaries. MEAD is an implementation of the centroid-based method that assigns scores to sentences based on sentence-level and inter sentence features, including cluster centroids, position, TF*IDF, etc.[10] Rada et al. in their work ranked the sentences in a given text with respect to their importance for the overall understanding of the text. They constructed a graph by adding a vertex for each sentence in the text, and edges between vertices are established using sentence inter-connections (measured as a function of content overlap). They view such relations between two sentences as a process of "recommendation" to other sentences in the text that address the same concepts. [11] Krysta et al. in their work presented a new approach based on neural nets called NetSum. The authors extracted three sentences from a single news document that best match various characteristics of the three document highlights. Each highlight sentence is human-generated. The output of system consists of purely extracted sentences, where there is no sentence compression or sentence generation. The authors have made studies on two separate problems based on the sentence order in matching the highlights. First, they extract three sentences that best "match" the highlights as a whole and they have disregarded the sentence ordering. The second task considers ordering and compares sentences on an individual level. [12] Luhn work on single-document summarization proposed the frequency of a particular word in a document to be a useful measure of significance. Though Luhn’s methodology was a preliminary step towards the summarization, but many of his ideas are still found to be effective for text. In the first step, all the stop words were removed and rests of the words were stemmed to their root forms. A list of content words then compiled and sorted by decreasing frequency, the index providing an important measure of the word. From every sentence a significance factor was extracted that reflects the number of occurrences of significant words within a sentence, and correlation between them is measured due to the intervention of non-significant words. All the sentences are positioned in order to their significance factor, and the top positioned sentences are finally selected to form the automatically generated abstract. [1] Jing presented a sentence reduction system for removing irrelevant phrases like prepositional phrases, clauses, to infinitives, or gerunds from sentences. Their main contribution is determining less important phrases in a sentence using reduction decisions. The reduction decisions are based on syntactic knowledge, context, and probabilities computed from corpus analysis. [13] Edmundson et al. proposed a typical structure of text summarization methodology in. They incorporated both word frequency and positional value ideas generated by two of his previous works. The first of two other features used was the presence of cue words (occurrence of words like significant, or hardly), and the second one was the skeleton of the document (the sentence is a title or heading). The sentences were scores based on these features to extract sentences for summarization. [14] Baxendale, also done at IBM and published in the same journal, provides early insight on a particular feature helpful in finding salient parts of documents: the sentence position. Towards this goal, the author examined 200paragraphs to find that in 85% of the paragraphs the topic sentence came as the first one and in 7% of the time it was the last sentence. Thus, a naive but fairly accurate way to select a topic sentence would be to choose one of these two. This positional feature has since been used in many complex machine learning based systems. [15] 3. System Description Goal of extractive text summarization is selecting the most relevant sentences of the text. The Proposed method uses statistical and Linguistic approach to find most relevant sentence. Summarization system consists of 3 major steps, Preprocessing ,Extraction of feature terms and algorithm for ranking the sentence based on the optimized feature weights. 3.1 Pre-processing This step involves Sentence segmentation, Sentence tokenization, Stop word Removal and Stemming. 3.1.1 Sentence Segmentation It is the process of decomposing the given text document into its constituent sentences along with its word count. In English, sentence is segmented by identifying the boundary of sentence which ends with full stop ( . ) , question mark (?), exclamatory mark(!). 3.1.2 Tokenization It is the process of splitting the sentences into words by identifying the spaces, comma and special symbols between the words. So list of sentences and words are maintained for further processing. 2.1.3 Stop Word Removal Stop words are common words that carry less important meaning than keywords .This words should be eliminated otherwise sentence containing them can influence summary generated. 2.1.4 Stemming A word can be found in different forms in the same document. These words have to be converted to their original form for simplicity. The stemming algorithm is used to transform words to their canonical forms. In this work, Porter’s stemmer is used that splits a word into its root form using a predefined suffix list. 2.2 Feature Extraction After an input document is tokenized and stemmed, it is split into a collection of sentences. The sentences are ranked based on four important features: Frequency, Sentence Position value, Cue words and Similarity with the Title. 2.2.1 Frequency Frequency is the number of times a word occurs in a document. If a word’s frequency in a document is high, then it can be said that this word has a significant effect on the content of the document. The total frequency value of a sentence is calculated by sum up the frequency of every word in the document. 2.2.2 Sentence Position Value Position of the sentence in the text, decides its importance. Sentences in the beginning defines the theme of the document whereas end sentences conclude or summarize the document. The positional value of a sentence is computed by assigning the highest score value to the first sentence and the last sentence of the document. Second higher score value to the second sentence from starting and second last sentence of the document. Remaining sentences are assigned score value zero. 2.2.3 Key Words Key words are the important words in the document. These key words are inputted from the user. If a sentence contains keywords then assign score value one to the sentence, otherwise score value is zero for the sentence. 2.2.4 Similarity with the Title The similarity with the title consists of the words in titles and headers. These words are considered having some extra weights in sentence scoring for summarization. . If a sentence contains words in title and header then assign score value one to that sentence, otherwise score value is zero for the sentence. 2.3 Sentence Scoring The final score is a Linear Combination of frequency, Sentence positional value, Key Words and Similarity with the title of the document. 2.4 Sentence Ranking After scoring of each sentence, sentences are arranged in descending order of their score value i.e. the sentence whose score value is highest is in top position and the sentence whose score value is lowest is in bottom position. 2.5 Summary Extraction After ranking the sentences based on their total score the summary is produced selecting X number of top ranked sentences where the value of X is provided by the user. For the readers’ convenience, the selected sentences in the summary are reordered according to their original positions in the document. systems and establish a baseline. Besides this, manual evaluation is too expensive: as stated by Lin (2004), large scale manual evaluation of summaries as in the DUC conferences would require over 3000 hours of human efforts. Two main criteria for evaluating the proficiency of a system is precision and recall which are used for specifying the similarity between the summary which is generated by a system versus the one generated by human. These terms are defined by following equations: Text Document Precision= Correct / (Correct + Wrong) Recall= Correct / (Correct + Missed) Preprocessing Sentence Segmentation Porter Stemming Algorithm Tokenization Stop Words Stop Word Removal Stemming Frequency Sentence Position Value Key Words Similarity with the Title Sentence Scoring Sentence Ranking Summary Figure 1. Steps of the proposed text summarization technique where, Correct is the number of sentences that are the same in both summary which are produced by human and system.; Wrong is the number of sentences presented in summary and produced by system but is not included in human generated summary; Missed is the number of sentences which are not appeared in system generated summary but presented in the summary produced by human. Therefore, Precision specifies the number of suitable sentences which are extracted by system and Recall specify the number of suitable sentences that the summarization system missed. 5. Conclusion and Future Scope This Paper discusses the single document summarization using extraction method. This paper presents basic steps of summarization of documents and summarization evaluation methods. This proposed method is implemented in java and is under development. In future we want to extract summary using abstractive based summarization approach and compare the results between both the approaches. 6. References [1] H. P. Luhn, The automatic creation of literature abstracts, in IBM Journal of Research Development, volume 2, number 2, pages 159-165, 1958. [2] Mani, I.: Automatic Summarization. John Benjamins Publishing Co., Amsterdam (2001). [3] 4. Summary Evaluation Evaluating a summary result is a difficult task because there does not exist an ideal summary for a given document or set of documents. The absence of a standard human or automatic evaluation metric makes it very hard to compare different Wei Liu, WANG, The Document Summary Method based on Statements Weight and Genetic Algorithm, International Conference on Computer Science and Network Technology, 2011 [4] Automated Bangla Text Summarization by Sentence Scoring and Ranking, Md. Iftekharul Alam Efat, IEEE, 2013. [5] The Porter Stemming Algorithm [Online].Available:http://tartarus.org/~martin/PorterStem mer/ [6] Mr. Vikrant Gupta, Ms. Priya Chauhan, Dr. Sohan Garg. Mrs. Anita Borude, Prof. Shobha Krishnan “A Statistical Tool for Multi-Document Summrization” , International Journal of Scientific and Research publication, Volume 2, Issue 5, may 2012 ISSN 2250-3153 [7] Chetana Thaokar, Dr.Latesh Malik, Test Model for Summarizing Hindi Text using Extraction Method, IEEE, 2013 [8] Fang Chen, Kesong Han and Guilin Chen, "An Approach to Sentence Selection Based Text Summarization", In the Proceedings of IEEE Region 10 Conference on Computers, Communications, Control and Power Engineering, Volume: l,pp.489- 493,2002. [9] C.D.Paice,"Constructing Literature Abstracts by Computer: Techniques and Prospects", Information Processing andManagement 26: 171 - 186, 1990. [10] Dragomir R. Radev, Hongyan Jing, Malgorzata Stys and Daniel Tam, "Centroid-based summarization of multiple documents", Information Processing and Management: 40 (2004) 919-938, 2004. [11] Rada Mihalcea and Paul Tarau, "An Algorithm for Language Independent Single and Multiple Document Summarization", Proceedings of the International Joint Conference on Natural Language Processing (IJCNLP), Korea, 2005. [12] Krysta M. Svore, Lucy Vanderwende and lC.Christopher Burges, "Enhancing Single-document Summarization by Combining RankNet and Third-party Sources", Proceedings of the Joint Conference on Empirical Methods in Natural Language Processing and Computational Natural Language Learning, Prague, pp.448-457,2007. [13] Hongyan Jing, Sentence Reduction for Automatic Text Summarization, in Proceedings of the sixth conference on Applied natural language processing, pages 310-315, 2000. [14] H. P. Edmundson. New Methods in Automatic Extracting. Journal of. ACM, 16(2):264-285, 1969. [15] P.B. Baxendale. Man-made index for technical literature An experiment. In IBM Journal of Research and Development, pages 354-361, 1958.