Richa Goyal M.Tech CSE 3

advertisement

Chapter 1

Introduction

Text mining is text analysis technique, part of data mining. Its basic aim is to extract

useful information from the available large amount of data. Text summarization is the

technique of creating abstract or summary of one or more text, automatically by the

computer system. Research in this field has already been started in sixties in American

research libraries. Huge number of data, books, and scientific papers are needs to be

available electronically which should be easily reachable and searchable. American

libraries has started to show their interest in this field, because they were feeling the

necessity to store all the books, research papers etc should be easily stored in a limited

database. As they were having limited storage capacity they required to summarize the

documents, indexing and made searchable. Even some books or documents contain

summary with them but if there were no readymade summary then one was able to make.

Luhn(1958), Edmunsun(1969) and Salton(1988) started research many years ago. With

increasing the use of internet, there have been awakening interests of text summarization

techniques. As the time changed the scenario has also been changed. At that time storage

space was limited but people had time to extract and study the text as per their use. But

today there is limitless storage and cheap as well. Information available over the internet

or system is available in teemingness and innumerable. This is manually impossible to

search. It was very difficult to search, select which information should unify. Therefore

filtration of the information is also required.

NLP make the computer system capable to understand human language. This is unit of

artificial intelligence. Implementation of NLP applications is very challenging because

computer system require to human to speak unambiguously, précised and in highly

structured way which is not always possible. Modern algorithms of NLP are based on

machine learning and statistical machine learning. For automated summarization, concept

of automated learning is required. Automated learning procedures can make use of

statistical inference algorithms. Major application areas of NLP is automatic

summarization, co reference resolution, discourse analysis, machine translation,

morphological segmentation, named entity recognition, natural language generation,

1

natural language understanding, optical character recognition, part of speech tagging,

parsing, relationship extraction, sentence boundary disambiguation, sentiment analysis,

speech recognition, speech segmentation, word sense disambiguation, information

retrieval, information extraction, speech processing, stemming etc. NLP is the basic

domain for automated text epitomize is to solve the information overloading problem

different techniques are under research [1-3]. Automated text summarization is the hard

problem of NLP. One needs to understand the key points of the available text to analyze

and summarize the data. NLP investigated the subfield of recapitulation. For automated

text summarization we need to understand text summarization first. The term Automated

text summarization means recapitulate the text automatically rather than manually. Text

summarization is followed by text categorization. The main idea behind the automatic

summarization is present the main information of a document in less space. Summaries

depends on different aspects like indicative summaries, contains the information about

the text relevance i.e. what is text about rather than displaying any content whereas

informative summary provide the shorter version of the detailed document. Topic

oriented summaries are built on the bases of the topic desired by the reader’s interest

whereas the generic summaries depicts the information according to the author’s mindset.

In text categorization rudimentary idea is to consider the various features exist between

distinct structural and statistical relationship. These features are character n-grams, word

frequency, lemmas, stems, POS tags etc. and targeted categories [4]. To categorize the

data automatically items to be classified should contain some pertaining features useful to

distinguish and differentiate the data among different categories.

To epitomize the data automatically and accurately to maximum extent categorization

played a wide and important role by which we can analyze which document is more

relevant to which category and then we provide summary of multiple documents related

to single domain. In this paper we maintained a ratio of data on the basis of

categorization and summarization through which we can find its closeness to specific

cluster.

The summarization task is needed to identify informative evidence from a given data,

which are most pertinent to its content and create a synoptic version of the original

document. The informative evidence analogous with techniques used in recapitulation

2

may also provide clues for text categorization. To achieve this objective we proposed a

criterion function which decides the relevance of the document. This paper shows the

improvement in efficiency and accuracy of the categorization and summary of text

documents after removing its ambiguity. To remove the ambiguity, correlation among the

text data is found, the discovered pattern helps to classify the data and then we apply

discrete differential approach to categorize and sequential similarity approach for

summarize the data.

1.1 Problem Statement

World Wide Web demands text summarization as it contains lots of data in huge amount

which is not easily accessible by the users. As it requires so much time to find,

understand and analyze the data. Sometimes after large consumption of time people find

the data or document irrelevant to them. Therefore we require a technique which would

be helpful in determining the relevant text as well as documents.

1.2 Aim/Objective

Perform Centroid based multi document summarization on text data. The main aim of

this project is to build an application which provides automation in terms of text

categorization as well as summarization by measuring and considering the features of

original text.

To optimize text categorization and summarization technique we are studying different

available approaches and research done in this area. For this project our priority is to give

some experimental results which can be used by any organization and can perform better.

1.3 Motivation

Text categorization and summarization comes into scenario for organization of national

conferences during research work. The objective to optimize automatic summarization is

to cut the cost and increase the benefits.

Day by day WWW is overloading information and data in huge amount. This all data is

available to access by users. But as they do not have so much time to spent over the big

data to search and analyze data relevant to their need.

3

They required most relevant documents related to their needs and in shortened format so

that they could get information easily. But here if the summary is related to some abstract

only then it did not provide them the actual idea behind the document. Similarly in

research organizations, when they need to classify the multiple documents related to

different domains and need to understand the author’s scenario they need to study them.

But they do not have time read and understand huge amount of data, efficiency and

accuracy rate also decreases. Therefore need of automated text summarization is

generated. And some research work already has been implemented in past years.

Business, pharmaceutical industries, academic research are some domains in which data

is found in vast amount. Business uses such techniques to competitor and customer data

to evaluate his value in the market. Biomedical and pharmaceuticals need this application

to analyze the disease, patient related data. They required mining patents and research

articles related to different kind of medicines or already introduced medications to

improve them.

Significance of online available textual data and information in discrete fields and

domains.

This area required huge improvements and scope is so vast.

This kind of application can help user to understand and get clarity about the

prototype developed in their mind.

Speedy and easy access to the data and information is required.

Different discrete domains need automates text summarization for efficient work.

1.4 Background

Text summarization is [5] technique to shorten the text data as compared to the given

data. We need this concept to use storage space optimally. As the available information is

increasing day by day with increase in technologies and experiments it becomes very

difficult to summarize the data manually. That’s why requirement of automatic

summarization increased. Automatic summarization is when computer system shortens

the data from the actual data automatically.

In recent time there has already been a volatile outgrowth of written information

available in the World Wide Web. Hence there was growing requirement of rearranging,

4

and accessing these data in pliable way for the easy access. Solution available to this

problem is text summarization. By categorizing the data initially is add-on to the

summarization solution. Before summarize if data is classified into their relevant

categories than it will be much better result for the user. He does not need to read

unnecessary data whether it is available in summarized form.

Automated text summarization works on natural language. Natural Language Processing

(NLP) is the part of artificial intelligence. Natural Language Processing includes two

approaches i.e. natural Language Generation and Natural language Understanding. By the

term Natural language Understanding we mean that consider a spoken/written sentence

and working out what does it mean. And Natural language Generation means that

consider some formal structure/representation of what we want to say and then working

out a way to express in natural/human language.

Applications of NLP are machine translation, database access, information retrieval, text

categorization, extracting data from text etc. It includes some steps for changing the text

in natural language. These are:

Speech recognition

Syntactic analysis

Semantic analysis

Pragmatic analysis

In speech recognition analogue signals are converted into frequency spectrum, basic

sound of signal, convert phonemes into words. But the problem arises is no simple

mapping between sounds and words. In syntactic analysis rules of syntax represent the

correct organization of words. It provides a structure for grouping the words to create a

meaningful sentence. It also includes parsing in which rules and sentence is provided and

need to check whether sentence is correct grammatically or not. Grammatical rules are:

o

Sentence

o

Noun phrase

proper noun

o

Noun phrase

determiner, noun

o

Verb phrase

verb, noun phrase

noun phrase, verb phrase

Complication with syntactic analysis is about ambiguity and incorrect parsing of

sentence. Therefore next step of semantic analysis is required. This technique helps in

5

analyzing the meaning of the sentences with its syntactical structure i.e. grammar +

semantics. But problem of ambiguity is not resolved here also. Pragmatic analysis uses

context of utterance, handling pronouns, handling ambiguity.

Text summarization is balanced to become a universally accepted solution to the larger

problem of content analysis. Initially summarization task is considered only as NLP

problem. Text summarization consist some steps to complete its procedure. These steps

are:

Database

Tokenization

Stop word removal

Weighted Term

POS Tagging

Word sense

disambiguation

Pragmatic Analysis

Extracted

Summary

Figure 1.1: Summarization steps

6

1.4.1 Types of summary:

Summarization are classified into different categories, each one has their own difficulty

level to make the summarization automatic. They are as follow:

Classification on the basis of text origin:

Extractive Summarization:

Summary consist the text already present in actual text.

Abstractive summarization: Some new text is generated by the summarizer.

Classification on the basis of their purpose:

Indicative summarization:

This classification is on the basis of reader’s perspective.

This type of summary gives idea to the reader whether it would be worthwhile reading

the entire document or not.

Informative Summarization: This kind of summary contains the main idea of the original

text. This is according to author’s point of view. Summary delivers the main idea of the

author which he wants to deliver to the reader.

Critical Summarization:

This sort of the summary criticize the original document, if

we consider a scientific paper then this would contain methods and experimental results.

In case of level to automate summarization, indicative summary has higher probability

and capability to make the summarization automatic. Whereas critical summarization has

least chances.

1.4.2 Types of Evaluation:

Evaluation is quite difficult for summarization techniques and approaches. It could be

divided into two phases:

Intrinsic:

where the summary is tested in of itself called intrinsic. These tests are

made to measure the summary quality and informativeness.

Extrinsic:

In this phase, summarization system is relative to a real world task.

7

1.4.3 Current state-of-art:

NIST (National Institute of Standards and Technology) execute the Document

Understanding Conference (DUC) in which the minimum summarizing techniques are

compared. The examples of latest techniques are defined in proceedings of DUC2001[9].

1.4.4

Multi-Document Summarization:

By the term multi document summarization we meant that to compress or shorten the

data from various documents and generate a single summary for them. An issue is that of

novelty-detection. This issue summarizes the first document only for given an ordered set

of documents. These documents can be ordered by searching engine hits with respect to

relevance or news articles which could be organized by date. After summarizing the first

document only unseen information was summarized from rest of the documents.

Multi Document Summarization is not completely successfully implemented, has some

issues. But these issues differ from the issues available in single document

summarization.

Higher compression is needed, that is if 10 percent summary is generated for the single

document and there is no. of documents available then if collaboration of individual page

summary would not be good, not acceptable. Hence basic extractive summarization is in

question mark.

Also, the data in multiple documents could be redundant and we need to find out which

data is important and need to include in the summary. Intersecting sentences between two

duplicated data or repeated data need to extract. Rhetorical relation defines the structure

of similarity between two sentences i.e. it identifies whether two sentences are contradict

to each other or they are delivering the same information. It also identifies that which

sentence is delivering the more refresh information.

1.4.5

Use of Discourse Structure:’

By the term discourse structure we understand that establishing the relationship between

sentences. The order of the sentences is not organized randomly. They have some co8

relation with each other. Might be one sentence is delivering the basic of any concept or

showing history and adjacent sentence is elaborating the idea of the previous information.

These are just few of the relations existing in Rhetorical Structure theory(RST)[12].

A summarizer using RST would perform well, although automatically parsing RST

relations is difficult.

1.4.5 Extractive Indicative Summaries

Various methods are present for selecting which sentences to use in summary. Luhn[11]

did early work on this and later Edmundson[10]. Luhn proposed sentences could be

selected if they contained Content words in a document. Content words are defined as

words that occur between given frequencies, top words would not be included and

frequency limit would be determined from a corpus.

1.5 Major Approaches:

Single document extractive summarization approach:

Early techniques for sentence extraction figure out the score of every sentence based on

their features like sentence positioning. This feature of positioning was described by the

Baxendale in 1958 [5] and Edmundson in 1969 [6]. Along with these features Lun in

1958 introduced a feature of word and phrase frequency. Edmundson also told us about

the key phrase features. In recent times more sophisticated techniques are introduced to

extract the sentences from the given text. These techniques are based on machine learning

and natural language analysis. Machine learning helps in identifying the important

features where as natural language extracts the key passages and relation among words

rather than bag of words.

Chen, Kupiec and Padersen[1] in 1995 used Bayesian classifier technique to develop a

summarizer. They combined features and characteristics from corpus of scientific articles

and abstracts. Lin and Hovy(1997)[7] worked using machine learning to learn new

individual features. They worked on the sentence position feature. And analyzed how the

position of sentence affects the sentence selection. Whereas Mittal[2] worked at the

phrases and crucial words for their syntactic structure. He used statistical approach. Ono,

9

Sumita and Miike analyzed about the position of the text found according to the

predefined structure. This methodology needs a system that could work out on discourse

structure of reliability.

Single document summarization through abstraction:

Abstractive approaches are those which do not follow extraction. These approaches

emphasis on information extraction, ontological information, information fusion and

compression. This method gives rise to the more accurate with high quality summary to

their restricted domain.

Reduction process was also a good research area for the other researchers. Knight and

Marcu(2000)[3] worked over rule reduction. They tried to compress the parse tree to

generate a shorter summary still maximal grammatical version. This approach

summarized the sentence accordingly (like two sentences is summarized in to one

sentence or three sentences into either two or one and so on). Abstractive summarization

has not build up beyond of proof-of-concept stage.

10

Chapter 2

Literature Survey

A big amount of work is done on automatic text summarization in recent years. Most of

the reachers were concerned with extractive summarization technique. Extractive

summarization and abstractive summarization are two approaches in this area. In

extractive summarization some important sentences are selected and combined to make

summary of the original text. It could be call subset of the original text which contains

important information. Whereas, in abstractive summarization, summary contains the

abstraction of the original text. It contains the hidden meaning of text.

Banxendale, 1958 analysez that topic sentence comes mostly in the starting of any

paragraph in the document whereas paragraph’s last line also contains topic line in the

document[6]. He found 85% of paragraphs contain first line as a topic line and 7% of

paragraphs contain it as last line. So the topic could be chosen by among them. This

posiitonal approach, has been used in many complex machines, learning based system.

Edmundson(1969), draws a system that provide extract of the document[7]. The author

developed a set of rules for extracting data manually from the original data that was

implied in a set of four hundred technical documents. With two new features presence of

cue words and skeleton of document, two characteristics of word frequency and

importance of position were merged from the previous works.

McKeown and Radev, 1995 developed a template-driven message understanding

systems. He used already existing technology for this[8]. McKeon in1999; Radev et

al,(2000), others generate a composite sentence from each cluster(Barzilay et al,1999),

while some approaches work dynamically by including each candidate passage only if it

is considered novel with respect to the previous included passages.

The recapitulation techniques are divided into two categories: supervised and

unsupervised. Supervised techniques relay on machine learning algorithms trained on

pre-existing document summary pair. Whereas, Unsupervised techniques are based on

properties and heuristic search derived from the text. Summarization task is divided into

two classes, for positive and negative samples. Positive samples are those sentences

which are considered in summary sentences, whereas, Negative samples are those which

11

are not part of the summary [8]. In [11], the use of Genetic Algorithm(GA), feed forword

neural network(FNN), probabilistic neural network(PNN), mathematical regression and

Gaussian mixture model(GMM) for automatic summarization have been studied. The

article [12] presents multi document, multi-lingual, theme based summarization system

based on modeling text cohesion.

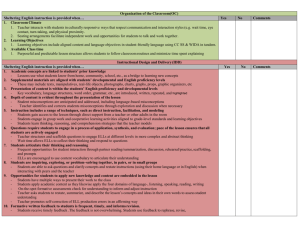

Figure 2.1 Clustering techniques

In recent time, graph based methods are used for sentence ranking. For computing

sentence value Lexrank[10] and [11] are two available systems uses the algorithms Page

Rank and HITS. Eignvector centrality in graph representation was the concept used by

Lexrank. Graph based method are completely unsupervised methods and derive

extractive summary by using given text.

Summarization task is next divided into query and generic based approach. In query

based approach summarization is done on the basis of query[12,13,14,15,16] while

generic approach gives an overall sense of document’s content[7-14,15,2,13]. QCS

system follows three step procedures that is query, cluster and then summarize[16]. In

[12] are developed a generic, a query- based, and a hybrid summarizer. The generic

summarizer used a combination of different and discussed information and via traditional

12

surface-level analysis information is obtained. For query term information, query based

summarizer used, and the hybrid summarizer uses some dissertate information with

query-term information.

Automatic document summarization is extremely entombed-disciplinary research area

related with artificial intelligence, computer science, multimedia, statistic, as well as

cognitive psychology. The Event indexing and summarization(EIS) was introduced as an

intelligent system. This system was based on a cognitive psychology model (the eventindexing model) and the roles and importance of sentence and their syntax in document

understanding.

Rokaya[16] uses the technique for abstractive summarization for custom summary which

is based on interested field and keywords for the user need. Thesse keywords and of

interest are determine and selected by user. Rokaya and power link algorithm to get a

much shorter summary. This algorithm works with the concept of co-word analysis

researches. This follows a template based approach in which topic sentence plays an

important role. In this extraction is divided in two different phase i.e. extract the package

and then delete the non effective sentences.

Most starting experiments on single-documnt summarization started with technial

articles. In 1958, Luhn, explain:ed there search performed at IBM in the 1950s. Luhn

explained that to know the scope of importance of meaning of any word, need to

calculate frequency of those words. Some key concepts are already included in this paper

which has a vital scope in future. In this system, first step includes the stanching,

stanching of words are performed to their basic form. This step is followed by the next

step which is stop word removal. Assembling of words in order of decreasing frequency

is done by the Luhn. Then indexing is performed which is very important for

summarization. On a sentence level, an significant factor was transmitted that represents

the occurrence of crucial terms in the sentences and the space among them due to the

ability insignificant words. Sentences ranking is also important to get more specific

summary. Sentence indexing should be done on the basis of their importance level. Their

significance factors play an important role. The sentence has more significance are on the

top in the stack whereas having the low significance should come last in the stack. Top

level sentences are selected for create summary. Related work performed by

13

Benxendale(1958) at IBM. He worked for additional characteristics which are helpful in

identifying more relevant components of any article. This characteristic is sentence

position. For completing his study author read two hundred paragraphs. He analyzed that

85% of stanzas contains the title of the document. Thus, for selecting a correct topic

sentence, pick one of these. Various multifarious machine learning based systems used

this approach of sentence positioning since many years.

In 1969, Edmunson introduced a system that produces article extracts. His letter

contribution was the elaboration of discrete structure for researches related towards

extractive summarization.

The expansion of typical structure was a initial contribution forr extractive

summarization of extraction type. Initially, the writer launched a process of genrating

extract manually. This mannual extract is having utility in these 400 documents. The 2

chracteristics of positinal significance & wrd frequncy were merged from two former

mechanisms. Other two different words charcteristics were usd and these are: cue-words

existence & document framing. Encumbrnces were integrated to evry of this

charcteristics and features. These characteristics are integrated manually to cut down

every sentence. Study gives the result that 40% of the extracts accorded the extracted

manually.

In this paper authors presented a new schema that is called H-Base which is used to

process transaction data for market basket analysis algorithm. Market basket analysis

algorithm runs on apache Map-Reduce and read data from and, then the transaction data

is converted and sorted into data set having (key and value pair) and after the completion

of whole process, it stores the whole data to the distributed file system.

The size of data is growing day by day in the field of bio-informatics so it is not easy to

process and find important sequences that are present in bio-informatics data using

existing techniques. Authors of this paper basically discussed about the new technologies

to store and process large amount of data that is and “Green-plum”. Green-plum is the

massively parallel processing technique used to store of data, is also used to process huge

amount of data because it is also based on parallel processing and generates results in

very less time as compared to existing technologies to process the huge amount of

14

data.Morphological alternates and synonyms were also combined while seeing semantic

terms, the previous being identified by using Wordnet in 1995. The corpus helped in the

trials was from newswire, few of them belong to the assessments.

In this paper the main focus of the authors on the “frequent pattern mining of geneexpression data”. As we know that frequent pattern mining has become a more debatable

and focused area in last few years. There are number of algorithms exist which can be

used to frequent pattern from the data set. But in this paper authors applied the

fuzzification technique on the data set and after that applied number of techniques to find

more meaningful frequent patterns from data set.

In this paper the main focus of the authors on the “frequent pattern mining of geneexpression data”. As we know that frequent pattern mining has become a more debatable

and focused area in last few years. There are number of algorithms exist which can be

used to frequent pattern from the data set. But in this paper authors applied the fuzzification technique on the data set and after that applied number of techniques to find

more meaningful frequent patterns from data set.

In this paper, authors described that the classification and patterns in the stock market or

inventory data is really significant or important for business-support and decisionmaking. They also proposed a new algo that is algorithm for mining patterns from large

amount of stock market data for guessing factors that are affecting or decreasing the

product.

A big amount of work is done on automatic text summarization in recent years. Most of

the reachers were concerned with extractive summarization technique. Extractive

summarization and abstractive summarization are two approaches in this area. In

extractive summarization some important sentences are selected and combined to make

summary of the original text. It could be call subset of the original text which contains

important information. Whereas, in abstractive summarization, summary contains the

abstraction of the original text. It contains the hidden meaning of text.

Banxendale, 1958 analysez that topic sentence comes mostly in the starting of any

paragraph in the document whereas paragraph’s last line also contains topic line in the

15

document[6]. He found 85% of paragraphs contain first line as a topic line and 7% of

paragraphs contain it as last line. So the topic could be chosen by among them. This

posiitonal approach, has been used in many complex machines, learning based system.

Edmundson(1969), draws a system that provide extract of the document[7]. The author

developed a set of rules for extracting data manually from the original data that was

implied in a set of four hundred technical documents. With two new features presence of

cue words and skeleton of document, two characteristics of word frequency and

importance of position were merged from the previous works.

A metric:s set was represented :by Lin(2004);have; developed values of auto-matic

asses:sment of: Summaries. Li in 2006, sug:gested to use:of: an in-formation-the:oretical

technicue to automatical: asse:ssment of summariess. The vital: im-pression is to use a

devia:tion degree:: b/w a pair of like:lihood distrib:ution, in this case the JensenShannnon: deviation, where the first deliver:y is derive:ates from an autom:atic summary

and :the 2nd derived frm a: refer:ences set: summaries. This me:thod has the benefit of

enhancing :both the single-docum:ent and, the: multi-document summarization circumst,ances.

16

Chapter 3

Project Design and Implementation

3.1 AAutomaticuSummarizationimethods

3.1.1 Automationkextraction,

3.1.1.1,The, principle, of automatedyextractiont

The fundamental idea of automatic extraction is that an article consist group of sentences

where sentences are group of words. These sentences and words are arranged in specific

order to make a meaningful paragraph and sentence respectively. Automated extraction

includes fourthsteps:

Measuringgthegweightgofgterm.

Measuringkthetweightoofksentence.

Arrange these sentenceshinbdecreasingoorder according to theirrweight. Then a

thresholdevalue is decided. Sentence whoseeload better then verg values are

nominated as summarizedd text. Theoorder of all the summary sentences will be

as same as in theooriginal text.

3.1.1.2 Sentence Weighting

In automated extrection, masuring thenweightnofnsentencesnusuallynusesnthenmodel

whichh dependssonstermskenumeration.lInkthelvectorlspacelmodel, thelsentencelSiois

definedkaspSu=<W11,W21,W31,……..,Wni> ,,eachjdimensionoofothislvectorpsignifiespthe

weightoofltheitermlwi:

Wm=<hF(wmi),iT(wm),oL(wm),oS(wm),kC(wm),oI(wm)i>

Therweightlofkthektermowm islcalculatedlaskfollows:

Score(wm)=x12*;Fi(iwii)+kx2l*iTl(;wi)+ix3.*.L.(.wi)+.x4.*S(.wi.)+.x5*C.(.wi.)+.x6*.I(.wi)

Where..x1, .x2, .x3, .x4, .x5 and .x6.are.adjustments coefficient, discrete papers containing

textual data.have.different-adjustment-coefficients. Frequency-of-term-is-abbreviated-as

17

Where.x1,_ x2,_x3,_x4,_x5 and_x6 are-adjustment-coefficients,_different coefficient values

are for different documents are assigned. F represents the frequency of terms. Title of the

documents referred as T, L represents position of the word in document. S stands for

syntactic

structure.

Cue-Terms

are-abbreviated-as--C,

and-Indicative-phrases-is

abbreviated-as I[9.].

3.1.2 Understanding-basedoAutomatic0Summarization

In concern with obtaining language structure summarization uses semantic information.

Domain information is also needed toppresumeaandojudge-toofetch0the0meaning0of.

expression. Ultimately.the0summary0has0been0produced0using0meaningoexpression. It

0consist0four0steps:

Parsingo: - Tooparseithe_sentences,-use of0linguistic0information0in0dictionary

Then0creates0the0syntax0tree.

Semantic0Analysis: - semantic analysis is transformation of syntax tree into

syntactic. These syntactic expressions are based_on0the0logic0and0meaning. It

uses semantic0information0of0the0knowledge.

Pragmatic0Analysis0and0Information0Extraction: - the in formations are

pre-stored0into the database or system is the base for analyses of context and then

collects the extract central information.

Summary generation: - Now information centralized in the system is

transformed into a information table. This centralized information stored in

information table in the précised and unabridged form.[9]

3.1.3 4Information- Extraction

For information extraction is the foundation of structure of the summary. The summary

structure is divided into 2 phases:

first one belongs to selection of the data and second

phase in concerned with the generation of summary.

3.1.4 Discourse based automated text summarization:

Discourse0is0a0basic0framework.dDifferent0parts0ofodiscourseoendureodifferent

,

functionsg. A complex relationship exists between them. Automatic summarization based

18

on discourse tried to examine the fundamental discourse characteristics try tooexamine

fundamental characteristics of discourse to find article’s central data providing

information.

In today’s scenario, the automated summarization rely on significant

researchotopics: structure analysis based on rhetorical approach,opragmatic analysis,

latent semantic analysis [9]. This summarization process is implemented on the

categorized documents. This approach is based on centroid based multi document

summarization. But the addition in this system is this approach is, it follows semantic

based categorization.

3.2 Approach to Design

Original text

Tagger tool

Document

set

Training

model

Algorithm

Summarized text

Figure 3.1

Design Approach

3.2.1 Database:

In this project firstly we created a repository of the documents. These documents are the

source of data to be summarized. Either one or multiple paper could be fetched for

applying process. Database contains the output of the process also. We stored the

categorization output into the database for the further reference as well as it also contains

the summarized data of previous work.

To implement the categorization we need to perform some steps mentioned ahead.

19

3.2.2 Tokenization

Token- tokens are individual units having individual meanings. A sentence contains no.

of tokens or we can say that a group of token forms a sentence. These tokens are

separated by a delimiter. To obtain the tokens a facile program divides the text into

words. And it identified separation in two words by the delimiter between them. This

program also separates the punctuation building block dividing easily at whitespace &

punctuation-marks. Mostly the languages are not completely punctuated which arises

some confusion.

Text is simply a data that is unknown to the information contained in itself. This is just a

combination of words and sentences. Tokenization is the process of broken up into

phrases and words. These phrases and words are series of characters without specific

knowledge about sentence boundaries and words. These tokens also consider numbers,

parentheses, punctuation marks and question mark also.

Alphabetical language generally serve sentence containing words these words are

separated by blank. Removing the white space is performed by tokenizzer. These spaces

are replaced by boundaries of words. Different marks like punctuation, question and

parenthesis marks need to remove, is alraedy very short and accurate. The main and most

important difficulty is the term’s disambguity. that is vague among sentence0marker. In

this project firstly we created a repository of the documents. These documents are the

source of data to be summarized. Either one or multiple paper could be fetched for

applying process. Database contains the output of the process also. We stored the

categorization output into the database for the further reference as well as it also contains

the summarized data of previous work.

Tokenization instruction can be represented as:

First we need to distinguish the series of character at locations of white space and take

out the quotes, punctuationomarks and parenthesisoatobothoendsotoogetotheoseriesoof

tokenss. Mentioned instruction is impartially right due to punctuation and white space are

barely valid displayers of word boundaries.

20

In semantics, the procedure of token making, tokenization, is dividing the sequence of

albhabets into punctuations, numerals and otherisymbols. These9terms and series of

communication are known as token and the systems and tools fulfilling some

tokenization process are called tokenizer.

To understand the main process of tokenizer read the statement given below:

Inputodata:

I am preparing my thesis report

Output tokens.:

I

am

preparing

my

thesis

report

According to the above expression we understand that each word of the sentence

separated by space is called token.

Hence the system brokes the textodata into the tokens:

Discrete lyrics at punctuation matter, removes the punctuation. A-dot(.) that do

not trailed by white space is considered as a portion of a token.

Discrete lyrics at hyphens, til there is a counting of the token is continuing,

overall token is fixed as anoartifactocountoand0is notoseparated.

Tokenization process is a word status process. The crucial problem is how to analyze the

description-of-what is intended by the single “term”. Generally the tokenizer rely on

meek heuristic, instance: All contractual sequences of alphabetical single tokens contains

the characters; and numbers also. The subsequent lists of tokens, punctions and

whitespace may get involved.

3.2.3 Stop0Word0Removal

Stopwords are oridinary words. These words having less substaintial meaning than the

keywords. Search engines are generally discard the stopwords from a sentence.

21

Stopwords are the utmost significant result of the keyword phrases or sentences.

Approximately half of the words in the sentence are stopwords. That means fifty percent

of the text is not relevant for search engine.

3.2.4 Weighted Term

Weighted terms are counted based on their frequency of the co occurrence in the

document. This process is followed after the stop word removal. Frequency of individual

word is computed by computing the no. of repeated words. Highest the frequency of the

word, higher will be the weight of the term. For example if a word is having frequency of

no. of repetition is 200 in a document is the more weighted term than the word is

repeating itself in the document less than 200.

POS tagging consist multiple approach in it. These approaches has their own significance

for tagging. The figure given below give the idea about the different type of POS tagging

models:

Supervised POS tagging model.

o Rule based POS tagging model

BrilliPOS-tagging

o Stochastic POS tagging Model

N-gram POS-tagging

Maximum0Likelihood

HiddenoMarkovoModel

o Neural POS taggingiModel

Unsupervised POS tagging model.

o Rule based POS-taggingiModel

o Stochastic POS-TaggingiModel

o Neural POS-TaggingiModel

22

POS tagging

Supervised

Rule based

tagging

Unsupervised

Neural

tagging

Stochastic

tagging

Rule Based

Tagging

Brill

Brill

N-gram based

Maximum

likelihood

Stochastic

tagging

Neural

tagging

Baum –

Welch

Algorithm

Hidden Markov

Model

Viterbi

Algorithm

Figure 3.2 Different POS tagging model

3.3 Methods for Automatic Text Summarization:

1)Naïve-Bayes Methods

Kupiec(1995) discover a method derived from Edmunson(1969) that is able to learn from

data. Using a naïve-based classifier the classification function categorizes each sentence

as worthy of extraction or not, using a naive-Bayes classifier. Let s be a sentence, S the

super set of sentences that make up the summary, and F1,…..,Fk the features. The

features willing to (Edmundson, 1969), but it includes the length of sentence and the

uppercase words presence. To check and analyse the system, a structure of the technical

documents with the manual abstracts was used in the following way: for each sentence

present in the manual abstract, the authors analyzed its match with the actual document

23

sentences manually and created a mapping (e.g. exact match with any sentence, matching

a join of two sentences, not matched, etc.).

2) Rich Features and Decision Trees

Sentence positioning was the single feature studied by the Lin and Hovy in 1997. Author

considered the sentence by its weight and weighing these sentences are based on their

position in document, author termed it as “position method”. This idea is based on the

discoursed structure of the document. and that the sentences of greater topic centrality

tend to occur in certain specifiable locations (e.g. title, abstracts, etc). Author selects the

sentences by the keywords present in the topic of the document. And the sentences

contain the keywords are considered for the summary. They then ranked the sentence

positions by their average yield to produce the Optimal Position Policy (OPP) for topic

positions for the genre.

Multiple researches had been done by the considering the baseline features like position

of the sentence or using a easy combination of multiple features. Then machine extracted

sentences and manually extracted sentences were matched and result was found in favor

of decision tree classifier. Whereas for three topics naive combination won. Lin

concluded that this happens because of independence of some features to each other.

3) Hidden Markov Models

By analyzing the problem of inter-independence of the sentences , Conroy and O'leary

(2001) modeled the problem of extracting a sentence from a document using a hidden

Markov model (HMM). This model was sequential model. And author used this model

for considering local dependencies between Only three features were used: position of the

sentence in the document (built into the state structure of the HMM), number of terms in

the sentence, and likeliness of the sentence terms given the document terms.

4) Log Linear Model

Osborne (2002) claims that subsisting approaches to recapitulation have always assumed

feature independence. The author used log-linear models to deflect this hypothesis and

showed experimentally that the system produced better extracts than a naive-Bayes

model, with naïve-model and hidden markov model. The log-linear model surmounted

24

the naive-Bayes classifier with the anterior, demonstrating the early effectualness and its

strength.

5) Neural Networks and Third Party Features

Svore et al. (2007) introduced an algorithm rely on neural nets. Datasets used to evaluate

this algorithm belongs to third party. This algorithm was proposed to handle the problem

of extractive summarization with statistical significance. The labels of sentences and their

features like positioning in any data or article used by the author to train the model so that

proper ranking of sentence could be made in test document. Some of the disserted

features based on position or n-grams frequencies have been observed in previous work.

6) Maximal Marginal Relevance

Carbonell & Goldstein in 1998 provide their major share to work with topic driven

summarization technique. Authors introduced maximal marginal relevance measure.

They merge two concepts of query relevance and freshness of information. Based on

topic the algorithm finds the relevant sentences and then sanctions the redundant data.

They used linear combination of two measures. These two parameters were query data set

of the user, or we can say that the user profiles. This profile contains the relevant search

of the user according to his need. Second parameter was the data or documents searched

by the search engine. The best feature of this algorithm was topic-orientation. This

algorithm works with the topic keywords. And based on these keywords system was able

to find documents relevant to the user’s need identified by his query profile.

7) Graph Spreading Activation

This methodology follows the concept of graph based summarization. In which pairs of

similarities and dissimilarities were made. In 1997 Mani and Bloedorn proposed a

framework of information extraction that was not text exactly. The summary data shown

by using this approach was based on graph representation. Entities and relationships

among them were shown by nodes and edges. Despite the sentence extraction, using

spread activation technique they detect prominent regions of the graph. This approach

shares the title of being title driven. Summarization is done by employing pair of graphs,

then nodes common between them are distinguished by their synonyms or if they are

sharing the same stem. Correspondently the nodes are not sharing the same node are not

having same or similar meaning i.e. they are not common. Two different scores are

25

computed for each and every sentence in two documents. First score represents common

nodes present in the document graph, this value is the average weight of these common

nodes; and second value that computes instead the average weights of difference nodes.

8) Centroid-based Summarization

To prevailing the concept of extractive summarization a method was introduced called

Centroid Based Method. MEAD methodology employed for implementing abstractive

summarization over the extractive summarization. This is a centroid based approach. It

contains three main features necessary to extract cluster from original text. These features

include centroid score, position of the centroid and overlap the first sentence. For

calculating centroid Tf-idf algorithm is in use. Now for sentence clustering centroid score

is the measure. On the basis of the centroid score cluster of the sentences is made up by

indexing technique. For indexing position value of text is also required. Length of the

summary is also a constraint. Sentence selection is stiffened by the length of the summary

and tautological sentences are averted by checking cosine similarity against prior ones

[5]. 0 and 1 are two values used in previous researches for summarize the text by

extracting the sentences from original data using extractive summarization. Sentences

having value 0 are not the part of summary whereas value 1 sentences are the part of

summary [6]. Then a conditional random field is applied to attain this task [7]. A new

extractive approach based on man folds ranking to query based document recapitulation

proposed in [8].

9) Multilingual Multi-document Summarization

Summarization is highly required for multiple documents written in various languages.

Then Evans(2005)done work on this. He contributed his efforts towards multi-lingual

summarization for the multiple languages. Previously Hovy and Lin already worked on

this in 1999. But research work is at its early stage for multi lingual summarization.

Framework developed by Evans appears quite useful for newswire applications.

Newswire applications required combination of information from foreign news agencies.

Evans (2005) emphasis was on the resultant language. He considered the language in

which all the documents must be summaries from other languages. Resultant language

was decided on the basis of either user’s requirement or the documents available in

26

different languages. Final summary must be grammatically correct because machine

translation is known to be far from perfect. Result must have higher coverage of different

language documents than just the English documents.

3.4 Implementation:

In this paper we proposed immerging two discrete approaches for text summarization.

This technique is the compound of centroid based and multi document summarization.

This idea arises from MEAD approach. Discrete Differential Semantic based (DDS)

algorithm proposed and implemented to employ these approaches.

We manually created a corpus containing different files in different format and related to

different domains and

categories. As per the main aim of summarization of text from multiple documents we

applied categorization followed by summarization using discrete differential semantic

algorithm. First we found sentence similarity on the basis of term co-occurrence. Let a

document D= {d1, d2, d3,…,dn), where n is the number of sentences. Let W= {w1, w2,

w3,….wm} represents all the discrete words present in document D, where m is the no. of

words. In most existing document clustering algorithms, documents uses vector space

model (VSM) [17]. In m- imensional space, each paper is constituted using these words.

The measure of feature space is highly dimensional in these algorithms which could

create a resistance in clustering algorithm and can cause of big challenge.

In our method, a sentence Su is represented as a group of discrete terms appearing in it,

Su = {t1, t2,……….tmu}, where mi is the number of different terms appearing in the

sentence. In this paper, to calculate to measure likeliness in different sentences we used

Google Distance. This distance is normalized Google distance (NGD)[18]. But to

optimize the summarization result this process is followed by categorization.

In this paper we implemented abstractive summarization. And to optimize automated

summarization unsupervised approach of clustering is adapted. Before calculating

similarity between the sentences we need to compute similarities between the words.

First we categorize the multiple documents in different categories optimally. For that we

worked over the semantic expression of the words. Each word has different meaning at

different places. In previous work these words are categorized on the bases of pre trained

27

wordnet. And these methods find the weighted term based on Tf-idf algorithm. Where

words are weighed with their occurrences in the document and categorized on the bases

of available wordnet related to the different domains. Higher the no. of occurrences,

higher will be the weight. Whereas in proposed methodology words are analyzed by their

semantic meaning. Each keyword is correlated with their adjacent words occurring in the

sentence and then the meaning of the word is judged by the system. This approach

improves the quality of clustering the documents in

any cluster. This approach works with the semantic categorization. In this paper, a

criterion function is considered for clustering the documents. This criterion function is to

judge the distance from the centroid of any document in a specific cluster. It also deflects

the similarity measure of any document to the other domains. So that we can analyze the

highest relevance of the document to a particular domain. Then the multiple documents

related to a field could be summarized using NGD[18]. Now after categorizing the

documents in their most relevant clusters, we proceed to the summarization part. For that

we need to calculate the Normalized Google Distance between two terms. Similarity

measure is between term tx and ty to calculate.

1. Pages that contain the occurrence of term tx are denoted by function of x i.e. f(tx).

2. Then no. of pages containing the occurrence of both the terms tx and ty is denoted by

function of tx and ty i.e. f(tx,ty).

3. D denotes the total number of pages.

4. Now we use binary logarithmic function to calculate similarity measure.

5. The maximum no. pages containing log f(tx) and log f(ty) is calculated individually and

stored in a function f(max).

6. The value of log(tx,ty) is to be subtracted from f(max).

7. Then calculate log N.

8. Find minimum of logarithmic value of f(tx) and logarithmic value of f(ty). and store

the result in variable f(min).

9. Subtract the result of step 8 from the result of step 7.

10. Divide the value obtained from step 6 with the value obtained from step 9.

11. Let NGD(tx, ty) denotes the resultant of Step 10.

28

SimNGD(tx,ty) = -exponential(-NGD(tx, ty))

(1)

If the value of NGD(tx, ty) is 0 that means tx and ty are the similar terms.

If the value of NGD(tx, ty) is 1 that means both the terms are different.

Expression (1) represents the similarity measure between the terms.

By using the expression (1), similarity among the sentences Su and Sv is obtained.

𝑆𝑖𝑚𝑁𝐺𝐷(𝑆𝑢 , 𝑆 𝑣 ) =

∑𝑡𝑥 ∈𝑆𝑢 ∑𝑡𝑦 ∈𝑆𝑣 𝑆𝑖𝑚 𝑁𝐺𝐷(𝑡𝑥 , 𝑡𝑦 )

𝑚𝑢 𝑚𝑣

(2)

Where mu and mv are the maximum no. of terms in a sentence Su and Sv respectively.

After calculating the similarity between the sentences in cluster C={S1,S2,…….Sn),

collection of similar sentences i.e. nearest to a centroid, are clustered. And By using this

technique we summarize the data given in the original documents.

For experiments we prepared our own corpus containing number of documents. These

documents could be of any length. CIDR algorithm is used to create the clusters. This

system added the feature of semantic categorization also. This system is able to read

multiple documents concurrently. And analyze the category based on the semantic

meaning. The meaning of the words is analyzed by their adjacent words. Then the

dictionary stored in the system, this dictionary consists all possible meanings of any

word. Then words of meaning are compared with the actual word in the document. And

after that an individual virtual file is created containing number of words according to

their meaning after stopword removal. Now the final words are found proceed to relate

them to the wordnet available. And then more appropriate category is derived.

For example:

If a sentence is:

This phone belongs to the apple.

Now the token apple could have different meanings individually. Those meanings could

be apple a fruit or apple a company. But with the sentence it has more accuracy about its

meaning. The sentence shows that the word apple belongs to the company only. It is not

about a fruit.

29

This is the significance of the meaning of any word. Hence semantic categorization is

more important for more accuracy of the document. Which provides a discrete

differential categorization base to the summarization.

implemented as discussed above.

30

For summarization NGD is

Chapter 4

Experiment Results

The designs and implantation screens are shown below:

Figure 4.1: Browse Different POS tagging model

31

Above screen is having a text field for browsing the document or documents. These

documents are stored at any location in the system; it could be any single folder or

multiple folders. The files should be in .doc or .docx format. Once the document is

browsed user need to select his 2nd operation which h/she wants to perform.

1) Literal categorization: literal categorization is based on word net only. In this

there is no significance of their meaning.

2) Semantic categorization: this kind of categorization is completely based on the

semantic meaning of the word. This gives more accuracy to the categorization

process.

3) View history of the categorization: this button is to study the category of the

previous documents.

4) Show summarizer details: this is to view the previous summaries stored in the

system for previously processed documents.

32

Figure 4.2 Window available for categorization and summarization as well.

This window appears after selecting one of the categorization techniques. It could be

literal or semantic. This window is having two functionalities. Categorization and

summarization both the processes are included. If we want to perform summarization

directly as the old tools did, the n we can do. Else summarization should be performing

after categorization. Which categorization technique will be performed depends on the

selection done on the previous screen. For say, if we have selected the lexical

categorization then here the system performs only lexical categorization. The grid shown

in left side is to select multiple no. of file to categorize in related categories. Initially all

check boxes are ticked. We need to deselect the unwanted files for the process. Then

proceed to categorization.

33

Figure 4.3

Categorization details

Now the files selected in previous screens are categorized according to the related

categories. These file names and categories are shown in the grid presented in the

downwards direction. The categorization process is done on the back end of the system

but if the user wants to justify his results he would be able to check detailed process of

categorization. For that the user needs to check the box of a file for which h/she wants to

read details. But the point need to keep in mind is only file could be select at a time.

The button of fill dictionary is to add the dictionary in the system. If we want to use this

system for another language we could insert and attach a new dictionary with this system

using this button.

34

Figure 4.4 Lexical Categorization

The details of selected files for lexical categorization are shown in above screen.

This screen contains the final category of the file. This category is computed on the basis

of matching ratio to the other categories .i.e. the file is how much related to each category

is identified by using the mathematical expression:

Matched ratio=

____total number of matched words____

Total number of words in document

List of matched words are also shown in the screen. Highest the no. of ratio, higher the

relevance to the category. Now from here we could proceed to the summarization.

35

Figure 4.5 Semantic categorization

If we have selected for semantic categorization in the initial screen then we proceeded to

this window only. Similarly to the previous screen, documents related to their categories

are shown with their names and related categories but to justify the result of these

categories we are having an option to analyze the result by their details. To observe the

details we need to select one file at a time. And the details are shown in above grid. This

grid contains the category of the highest relevance to the document, in the very first line.

Then it shows no. of matched words and calculated ratio also.

Details of each category and similarity level are also shown in the next part of the grid.

Same as the lexical, highest the ratio means higher the relevance.

36

Figure 4.6

Summary of multiple documents

This screen contains the final summary calculated on the bases of NGD. And the stored

location of the files is also shown in the above field. As per our aim summarization is

done for multiple documents. Which could be perform for individual also as per the

user’s need. The separation of the summaries of different documents is shown by a line.

So that it would be easy to differentiate by the user for different documents.

37

Chapter 6

Conclusion

In this project,

proposed work of optimization of

automated-text-summarization

methodology is implmented by Discrete differential algorithm based on NGD that is

Normalized Google Distance. In this work, we proposed an automatic text summarization

method by NGD which is done after the categorization implemented with the base of

centroid based multi document summarization approach. We have introduced an

unsupervised approach to optimize automated document summarization. This approach

consists of two steps. First, sentences are categorized semantically as well as

syntactically. And their efficiency of correctness is compared. And proposed algorithm

gives the improved results. Secondly, when accuracy of the related category’s documents

is found, summarization is performed. In our study we developed a discrete differential

semantic based technique to optimize the objective function for summarization, which

gives better result to the user. When comparing to previous methods which does not

include ambiguity removal we found better results.

38

Chapter 7

Future Research

We performed Automatic Text Summarization by designing a discrete differential

centroid based multi document semantic summarization, summary can be extracted on

the bases of this approach. This approach focused on the semantic categorization of the

documents to divide them in to discrete categories. Features of sentence positioning, its

frequent patterns and meaning related to the sentence are the main features that are

considered. Scope of the project is:

Project could be multilingual. To reduce the language gap which is not presented

in this project and the system would be able to summarize the documents written

in different languages.

The another enhancement of this developed system could be, the existing system

is not supporting all formats of file. The updated system would be able to support

all kind of documents.

Updated online system could resolve the issues related to access with World Wide

Web directly, for that system should work online so that storage space used due to

downloading files could be save.

Multiledia files could also be the part of this system in future.

39

References:

[1] Kupies, Julian, Jan O. Pedersen, and Francine Chen. “A trainable document

summarizer” in Research and Development in Information Retrieval, Pages68-73,1995.

[2] Witbrock, Michael and Vibhu Mittal, “ Ultra-summarization: A statistical approach to

generating highly condensed non-extractive summaries” in proceedings of 22nd Annual

International ACM SIGIR Conference on Research and Development in Information

Retrieval, Berkeley, pages 315-316, 1999.

[3] Knight, Kelvin and Daniel marcu, “Statistic-based summarization-step one: sentence

compression”, in proceeding of the 17th National Conference of American Association

for Artificial Intelligence(AAAI-2000), pages 703-710, 2000.

[4] M.A. Fattah and F. Ren,” GA, MR, FFNN,PNN and GMM based Models for

automated text summarization,” Computer Speech and Language, Vol. 23, No. 1, pp.

126-144,2009.

[5]P.B. Baxendale, “Machine Made Index of Technical Literature: An experiment”

IBM Journal Of Research and Development, Vol2, no. 4, PP. 354-361,1958.

[6] Edmunson, H.P. “New methods in automatic extracting” journal of the ACM, 16(2):

264-285.[2,3,4], 1969.

[7] Lin, C. and E. Hovy “Identifying topics by position” in fifth conference on Applied

Natural Language Processing, association for Computational Linguistics, 31 march-3

april, pages 283-290, 1997.

[8] Radev, D. R., Hovy, E., and McKeown, “Introduction to the special issue on

summarization. Computational

Linguistics”, 28(4):399-408. [1, 2], 2002.

[9] M.-R. Akbarzadeh-T., I. Mosavat, and S. Abbasi, “Friendship Modeling for

Cooperative Co-Evolutionary Fuzzy Systems: A Hybrid GAGP Algorithm,” Proceedings

of the 22nd International Conference of North American Fuzzy Information Processing

Society, pp.61-66, Chicago, Illinois, 2003.

[10] G. Erkan and D. R Radev, “lexrank: Graph based lexical cenrality as salience in text

summarization,” Journal of Artificial Intelligence Research, Vol. 22, pp. 457–479, 2004.

[11] D. Radev, E. Hovy, and K. McKeown, “Introduction to the special issue on

summarization,” Computational Linguistics, Vol. 28, No. 4, pp. 399–408, 2002.

40

[12] D. Shen, J.-T.Sun, H. Li, Q. Yang, and Z. Chen, “Document summarization using

conditional random

fields,” Proceedings of the 20th International Joint Conference on Artificial Intelligence

(IJCAI’07), Hyderabad, India, pp. 2862–2867, January 6–12, 2007.

[13] D. M. Dunlavy, D. P. O’Leary, J. M. Conroy, and J. D. Schlesinger, “QCS: A

system for querying, clustering and summarizing documents,” Information Processing

and Management, Vol. 43, No. 6, pp. 1588–1605, 2007.

[14] X. Wan, “A novel document similarity measure based on earth mover’s distance,”

Information Sciences, Vol. 177, No. 18, pp. 3718–3730, 2007.

[15] S. Fisher and B. Roark, “Query-focused summarization by supervised sentence

ranking and skewed word distributions,” in proceedings of the Document Understanding

Work shop (DUC’06), New York, USA, 8p, 8–9 June 2006.

[16] J. Li, L. Sun, C. Kit, and J. Webster, “A queryfocused multi-document summarizer

based on lexical chains,” Proceedings of the Document Understanding Conference

(DUC’07), New York, USA, 4p, 26-27 April 2007.

[17] X. Wan, “Using only cross-document relationships for both generic and topicfocused multi-document summarizations”, Information Retrieval, Vol 11, No. 1, pp 2549, 2008.

[18] Mahmoud Rokaya, “automatic summarization based on field coherent passages”, in

proceeding of International journal of computer applications, vol 79, No 9, 2013.

[19] J.Han an M. Kamber, “Data Mining: Concepts and technique (2nd edition)”, Morgan

Kaufman, San Francisco, 800p, 2006

[20] M.A. Fattah and F. Ren,” GA, MR, FFNN,PNN and GMM based Models for

automated text summarization,” Computer Speech and Language, Vol. 23, No. 1, pp.

126-144,2009

[21] U. Hahn and I. Mani, “The challenges of automatic summarization,” IEEE

Computer, Vol. 33, No. 11, pp. 29–36, 2000.

[22] I. Mani and M. T. Maybury, “Advances in automated text summarization,” MIT

Press, Cambridge, 442p, 1999.

41