Chapter 4b Notes (Word)

advertisement

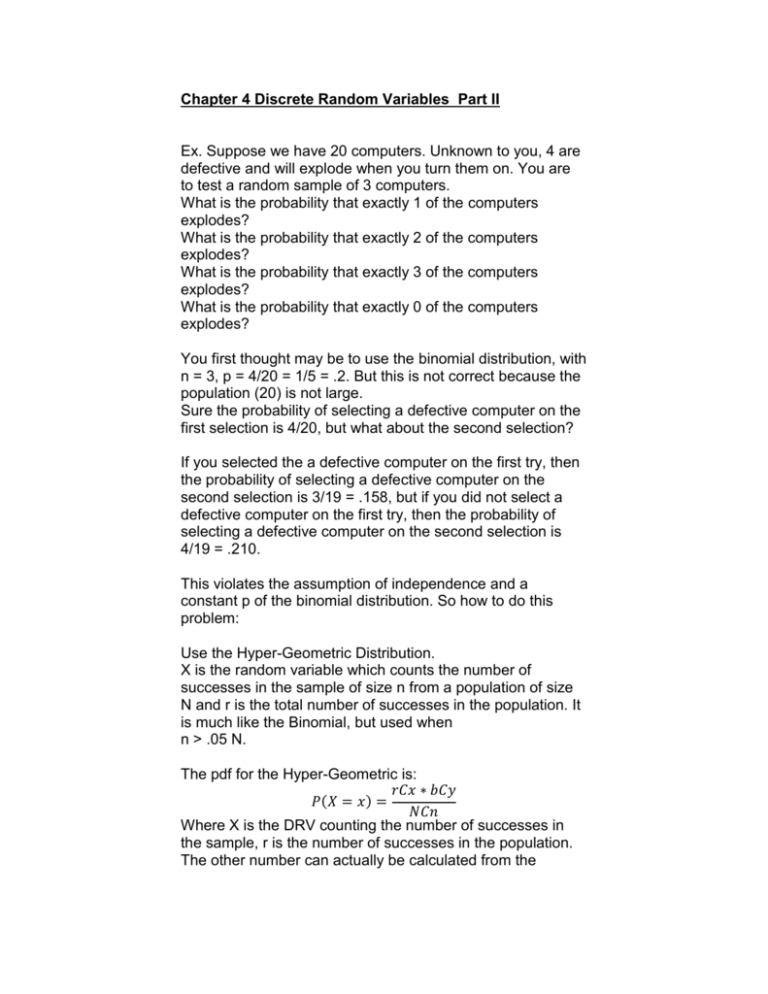

Chapter 4 Discrete Random Variables Part II Ex. Suppose we have 20 computers. Unknown to you, 4 are defective and will explode when you turn them on. You are to test a random sample of 3 computers. What is the probability that exactly 1 of the computers explodes? What is the probability that exactly 2 of the computers explodes? What is the probability that exactly 3 of the computers explodes? What is the probability that exactly 0 of the computers explodes? You first thought may be to use the binomial distribution, with n = 3, p = 4/20 = 1/5 = .2. But this is not correct because the population (20) is not large. Sure the probability of selecting a defective computer on the first selection is 4/20, but what about the second selection? If you selected the a defective computer on the first try, then the probability of selecting a defective computer on the second selection is 3/19 = .158, but if you did not select a defective computer on the first try, then the probability of selecting a defective computer on the second selection is 4/19 = .210. This violates the assumption of independence and a constant p of the binomial distribution. So how to do this problem: Use the Hyper-Geometric Distribution. X is the random variable which counts the number of successes in the sample of size n from a population of size N and r is the total number of successes in the population. It is much like the Binomial, but used when n > .05 N. The pdf for the Hyper-Geometric is: 𝑟𝐶𝑥 ∗ 𝑏𝐶𝑦 𝑃(𝑋 = 𝑥) = 𝑁𝐶𝑛 Where X is the DRV counting the number of successes in the sample, r is the number of successes in the population. The other number can actually be calculated from the previous, y = n – x which is the number of failures in the sample and b = N – r = number of failures in the population. max[0, n – b] ≤ x ≤ min [r, n] In the computer example above: N = 20, n = 3, r = 4 where X counts the number of defective computers. P(X = 1) = 4 C 1 * 16 C 2 / 20 C 3 = 480 / 1140 = .421 x 0 1 2 3 P(X = x) 0.491 0.421 0.084 0.004 The above probabilities were found using the HYPGEOMDIST function in Excel. Sad to say that your calculators do not have a Hyper-Geometric function. Note that as N increases the Hyper-Geometric distribution approaches the Binomial distribution. In fact most problems that we use the Binomial for, are theoretically HyperGeometric. However, we rarely know the population size, N, and the approximation is very good. Note also that we did problems like this already in chapter 3 when we did combination problems. Ex. There are 15 students in a class. There are 8 women and 7 men. 4 are randomly selected to be on a committee. Let X be the number of women on the committee. Find the probability distribution of X. N = 15, n = 4, r = 8. Possible values for X = 0, 1, 2, 3, 4 x 0 1 2 3 4 P(X = x) 0.026 0.205 0.431 0.287 0.051 Also note that if X is Hyper-Geometric with N, n and r then rn E( X ) N r ( N r ) n( N n) Var ( X ) N 2 ( N 1) so for the previous example: E(X) = 8*4/15 = 2.133 Var(X) = 8(7)(4)(11)/225*14 = .783 σ = .884 In class examples: The Geometric Distribution: A Geometric RV: counts the number of “trials” necessary to realize the first “success”. Trials mean the number of times the experiment is run. Success is not necessarily a good thing, just what you are counting. If X has a geometric distribution, then the pmf is p(X= x) = p(1 – p)x – 1 for x = 1, 2, 3, … p(X=x) = 0 otherwise 1 𝐸(𝑋) = 𝑝 𝑉𝑎𝑟(𝑋) = 1−𝑝 𝑝2 p is the probability of a success at each trial. So if p = .75 then the P(X = 2) = p(2) = .75 (.25) = .1875 Characteristics of a Geometric Random Variable: 1. The experiment consists of identical trials. 2. Each trial results in either a Success or Failure 3. The trials are independent 4. The probability of success, p, is constant from trial to trial Note how this differs from Binomial: 1. Binomial has a fixed number of trials. Geometric does not. 2. Binomial counts number of successes. Geometric counts number of trial until first success. On the TI83/84 If p = .75 and you want the P(X = 2) [2nd][DISTR] go down to geometpdf(p, x) geometpdf(.75, 2)[ENTER] = .1875 On the TI83/84 If p = .75 and you want the P(X ≤ 2) [2nd][DISTR] go down to geometcdf(p, x) geometcdf(.75, 2)[ENTER] .9375 P(X ≤ 2) = P(X = 1) + P(X = 2) Example: A test of weld strength involves loading welded joints until a fracture occurs. 20% of the fractures occur in the beam and not the weld. If X = the number of trials up to and including the 1st beam fracture? Find the probability that the first test with a beam fracture is the 3rd test. Find the probability that the first test with a beam fracture is at most on the 3rd test. What are the mean and standard deviation of X X ~ Geom(p = .20) P(X = 3) = .128 P(X ≤ 3) = .488 E(X) = 1/.2 = 5 Var(X) = .8/.04 = 20 The Poisson Distribution Characteristics: 1. The experiment consists of counting the number of times a certain event occurs during a specific time or in an area. 2. The Probability that the event occurs in a given time or area is the same for all units. 3. The number of events that occur in one unit is independent of the other units of time or area. 4. The mean or expected number of events in each unit is denoted by the Greek letter lambda = μ. Other books use λ If Y has a Poisson Distribution with mean μ then the pdf for Y is: 𝑃(𝑌 = 𝑘) = 𝜇 𝑘 𝑒 −𝜇 𝑘! for k = 0, 1, 2, … E(Y) = μ and Var(Y) = μ Ex. The average number of occurrences of a certain disease in a certain population is 2 per 1000 people. In a random sample of 1000 people from this population, what is the probability that exactly 1 person in the sample has the disease? What is the probability that at least 1 person in the sample has the disease? Y = counts the number of people in the sample with the disease. Y has a Poisson distribution with μ = 2. P(Y = 1) = 21 * e -2 / 1! = .270 P(Y ≥ 1) = 1 – P(Y = 0) = 1 - .135 = .865 On the TI83/84 page 214 [2nd] [DIST] (VARS key) C: poissonpdf(μ, k) poissonpdf(μ, k) gives P(Y = k) for the last example: poissonpdf(2, 1) = .270 poissonpdf(2, 0) = .135 On the TI83/84 [2nd] [DIST] (VARS key) poissoncdf(μ, k) poissoncdf(μ, k) gives P(Y ≤ k) In Class examples: