Chapter 3 notes (WORD)


Chapter 3 Random Variables

A random variable (RV) is any rule that associates a number with each outcome of the sample space S.

An RV is called a variable, but it is really a function whose domain is S and whose range is a set of real numbers.

Traditionally, capital letters are used to denote RVs, and lowercase letters are used to denote specific values of the RV.

Ex. Roll 2 fair dice. Let Y = the sum of the dice. Note that Y itself is not a number, but the values Y can take are numbers.

S = {(1,1), (1,2), …, (6,6)}

Possible values for Y = {2, 3, 4, …, 12}

P(Y = 2) = 1/36: “The probability that the random variable Y takes on the value 2 is 1/36.”

P(2 < Y ≤ 5) = P(Y = 3) + P(Y = 4) + P(Y = 5) = 2/36 + 3/36 + 4/36 = 9/36

This can also be written as

P(2 < Y ≤ 5) = P(3) + P(4) + P(5) = 2/36 + 3/36 + 4/36 = 9/36
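To double-check these values, the short Python sketch below (standard library only; Python is our choice, since the notes contain no code) enumerates all 36 equally likely outcomes and builds the pmf of Y with exact fractions. Note that `Fraction` reduces 9/36 to 1/4.

```python
from collections import Counter
from fractions import Fraction
from itertools import product

# Tally how many of the 36 equally likely outcomes give each value of Y = sum
counts = Counter(a + b for a, b in product(range(1, 7), repeat=2))
pmf = {y: Fraction(c, 36) for y, c in sorted(counts.items())}

# P(2 < Y <= 5) = P(Y = 3) + P(Y = 4) + P(Y = 5)
prob = sum(p for y, p in pmf.items() if 2 < y <= 5)
print(pmf[2], prob)  # 1/36 1/4
```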

There are two types of RVs: discrete and continuous.

For a discrete RV, the set of possible values is finite or countably infinite.

An RV is continuous if both of the following are true:

1. The set of possible values consists either of all the numbers in an interval or of all the numbers in a disjoint union of such intervals [e.g. (0,1) or (0,1) ∪ (2,3)].

2. No possible value of the variable has positive probability; that is, P(X = c) = 0 for every possible value c.

The probability distribution, or probability mass function (pmf), of a discrete random variable gives the probability that the random variable takes on each of its values. The pmf of a DRV is defined for every x by p(x) = P(X = x) = P({s ∈ S : X(s) = x}).

The pmf is usually given as a table or as a formula.

Discrete RVs often count the number of some object, occurrence, or subject.

For every discrete random variable (that is, every discrete probability distribution):

1. ∑ P(Y = y) = 1, where the sum is over all possible values y.

2. P(Y = y) ≥ 0 for all possible values y.

Ex. A package contains 5 pens. Let Y be the RV that counts the number of defective pens in the package.

The probability distribution is given as follows:

| y | 0 | 1 | 2 | 3 | 4 | 5 |
|---|---|---|---|---|---|---|
| p(y) | .75 | .10 | .06 | .04 | .03 | .02 |

P(Y = 0) = .75 (none of the 5 pens is defective)

P(Y = 1) = .10

P(Y = 2) = .06

P(Y = 3) = .04

P(Y = 4) = .03

P(Y = 5) = .02

Note that all probabilities are between 0 and 1 and that the probabilities sum to 1.

What is the probability that at most 2 pens are defective?

P(Y ≤ 2) = P(Y=0) + P(Y=1) + P(Y=2) = .75 + .10 + .06 = .91

What is the probability that at least 1 pen is defective?

P( Y ≥ 1) = 1 – P(Y = 0) = 1 - .75 = .25
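A minimal Python sketch of the same calculations, assuming the pmf is stored as a dictionary from each value y to p(y) (a representation chosen here for illustration):

```python
# pmf of Y = number of defective pens in a package of 5
pmf = {0: 0.75, 1: 0.10, 2: 0.06, 3: 0.04, 4: 0.03, 5: 0.02}

# Check the two pmf properties: nonnegative, and summing to 1
assert all(p >= 0 for p in pmf.values())
assert abs(sum(pmf.values()) - 1) < 1e-12

at_most_2 = sum(p for y, p in pmf.items() if y <= 2)   # P(Y <= 2)
at_least_1 = 1 - pmf[0]                                # complement rule
print(round(at_most_2, 2), round(at_least_1, 2))       # 0.91 0.25
```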

Ex. Let X be a DRV with p(x) = .1x for x = 1, 2, 3, 4.

The pmf as a table is:

| x | 1 | 2 | 3 | 4 |
|---|---|---|---|---|
| p(x) | .1 | .2 | .3 | .4 |

Note that all p(x) > 0 and ∑ p(x) = 1.

[Figure: Probability histogram of p(x) versus x for x = 1, 2, 3, 4]

The cumulative distribution function (cdf) F(x) of a DRV X with pmf p(x) is defined for every x by:

F(x) = P(X ≤ x) = ∑ p(y), summed over all possible values y ≤ x.

F(x) is the probability that X is less than or equal to x.

If X takes only integer values, then for any integers a and b with a ≤ b:

P(a ≤ X ≤ b) = F(b) − F(a − 1)

Ex. (pen example)

| y | 0 | 1 | 2 | 3 | 4 | 5 |
|---|---|---|---|---|---|---|
| p(y) | .75 | .10 | .06 | .04 | .03 | .02 |

F(0) = .75 = P(Y ≤ 0)

F(1) = .85 = P(Y ≤ 1) = p(0) + p(1)

F(1.5) = .85 = P(Y ≤ 1.5)

p(1.5) = 0 = P(Y = 1.5)

P(2 ≤ Y ≤ 4) = F(4) – F(1) = .98 - .85 = .13 = p(2) + p(3) + p(4)

| y | F(y) = P(Y ≤ y) |
|---|---|
| y < 0 | 0 |
| 0 ≤ y < 1 | .75 |
| 1 ≤ y < 2 | .85 |
| 2 ≤ y < 3 | .91 |
| 3 ≤ y < 4 | .95 |
| 4 ≤ y < 5 | .98 |
| y ≥ 5 | 1.0 |
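The step-function behaviour of F can be sketched in Python as below. The helper `F` is hypothetical, written for illustration; it accumulates the pen pmf and uses `bisect_right` to count how many possible values are ≤ y, so it also works for non-integer arguments such as 1.5.

```python
from bisect import bisect_right

pmf = {0: 0.75, 1: 0.10, 2: 0.06, 3: 0.04, 4: 0.03, 5: 0.02}
values = sorted(pmf)

# Running totals: cum[i] = F(values[i])
cum = []
total = 0.0
for v in values:
    total += pmf[v]
    cum.append(total)

def F(y):
    """F(y) = P(Y <= y) for any real y (a step function)."""
    i = bisect_right(values, y)   # number of possible values <= y
    return 0.0 if i == 0 else cum[i - 1]

print(round(F(1.5), 2), round(F(4) - F(1), 2))  # 0.85 0.13
```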

There are a few special DRVs that we will mention by name shortly.

In Class: 11, 15, 24, 16

#11.

| x | 4 | 6 | 8 |
|---|---|---|---|
| p(x) | .45 | .40 | .15 |

[Figure: Probability histogram of p(x) versus x for x = 4, 6, 8]

c. P(X ≥ 6) = .40 + .15 = .55

P(X > 6) = P(X = 8) = .15

Expected Values

The expected value is the mean of X, weighted by the probabilities:

E(X) = μ = μX = ∑ x · P(X = x) = ∑ x · p(x)

E(X) is the mean of the conceptual population, i.e., the long-run average.

Pen example:

E(Y) = 0(.75) + 1(.10) + 2(.06) + 3(.04) + 4(.03) + 5(.02)

E(Y) = .56

Does this mean that there are 0.56 defective pens in each package of 5?

No.

It means that, on average, there are 0.56 defective pens per package of 5. So if you had 100 packages of 5 pens, there would be about 56 defective pens in total.
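The long-run interpretation can be illustrated with a small simulation. This sketch assumes Python's `random.choices` as the sampling mechanism and a fixed seed; the simulated average should land near .56, though its exact digits depend on the seed.

```python
import random

pmf = {0: 0.75, 1: 0.10, 2: 0.06, 3: 0.04, 4: 0.03, 5: 0.02}

# E(Y) = sum of y * p(y)
mean = sum(y * p for y, p in pmf.items())

# Long-run average: simulate the defect count of many packages
random.seed(1)
n_packages = 100_000
draws = random.choices(list(pmf), weights=list(pmf.values()), k=n_packages)
avg = sum(draws) / n_packages

print(round(mean, 2))   # 0.56
print(avg)              # close to 0.56; exact digits depend on the seed
```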

Geometric RV: counts the number of “trials” until the first “success”.

A trial is one run of the experiment.

A success is not necessarily a good thing; it is just the outcome you are counting.

If X has a geometric distribution, then its pmf is

p(x) = p(1 − p)^(x − 1) for x = 1, 2, 3, …

p(x) = 0 otherwise,

where p is the probability of a success on each trial.

So if p = .75, then P(X = 2) = p(2) = .75(.25) = .1875.
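A sketch of the geometric pmf in Python; `geometric_pmf` is a hypothetical helper name, and it returns 0 for any x that is not a positive integer, matching the "otherwise" case.

```python
def geometric_pmf(x, p):
    """P(X = x) for X = number of trials until the first success."""
    if x < 1 or x != int(x):
        return 0.0
    return p * (1 - p) ** (x - 1)

p = 0.75
print(geometric_pmf(2, p))  # 0.1875
# The probabilities over x = 1, 2, 3, ... add to 1 (a long partial sum shown)
print(sum(geometric_pmf(x, p) for x in range(1, 60)))
```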

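Prove that E(X) = 1/p for X a geometric RV with parameter p.

Pf.

$$E(X) = \sum_{x=1}^{\infty} x\,p(1-p)^{x-1} = p\sum_{x=1}^{\infty}\left(-\frac{d}{dp}(1-p)^{x}\right) = -p\,\frac{d}{dp}\sum_{x=1}^{\infty}(1-p)^{x}$$

We know that (geometric series)

$$\sum_{x=1}^{\infty}(1-p)^{x} = \frac{1-p}{1-(1-p)} = \frac{1-p}{p},$$

so

$$E(X) = -p\,\frac{d}{dp}\left(\frac{1-p}{p}\right) = -p\left(-\frac{1}{p^{2}}\right) = \frac{1}{p}.$$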
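As a numerical sanity check on the proof (not a substitute for it), the partial sums of ∑ x p(1 − p)^(x − 1) can be compared with 1/p. The cutoff of 10,000 terms is an arbitrary assumption that is ample for the p values shown.

```python
def geometric_mean_series(p, terms=10_000):
    """Partial sum of sum_{x>=1} x * p * (1-p)^(x-1), approximating E(X)."""
    return sum(x * p * (1 - p) ** (x - 1) for x in range(1, terms + 1))

for p in (0.75, 0.5, 0.1):
    print(p, geometric_mean_series(p), 1 / p)
```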

Properties of Expected Values:

Let X and Y be discrete RVs.

1. If c is a constant, then E(c) = c.

2. If c is a constant, then E(cX) = cE(X).

3. E(X + Y) = E(X) + E(Y) = μX + μY

4. In general, E(XY) need not equal E(X)E(Y); if X and Y are independent, then E(XY) = E(X)E(Y).

5. E(h(X)) = ∑ h(x) p(x), summed over all possible x. Ex. E(X²) = ∑ x² p(x).

6. Variance(X) = V(X) = σ² = E[(X − μ)²], where μ = E(X).

Standard Deviation(X) = σ = √(σ²)

Exercise:

Show that σ² = E(X²) − μ².

σ² = E[(X − μ)²] = E[X² − 2μX + μ²] = E(X²) − 2μE(X) + μ² = E(X²) − 2μ² + μ²

σ² = E(X²) − μ²

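For the pen example:

Recall E(Y) = .56.

| y | p(y) | y − μ | (y − μ)² | (y − μ)² · p(y) |
|---|---|---|---|---|
| 0 | 0.75 | −0.56 | 0.3136 | 0.2352 |
| 1 | 0.10 | 0.44 | 0.1936 | 0.01936 |
| 2 | 0.06 | 1.44 | 2.0736 | 0.124416 |
| 3 | 0.04 | 2.44 | 5.9536 | 0.238144 |
| 4 | 0.03 | 3.44 | 11.8336 | 0.355008 |
| 5 | 0.02 | 4.44 | 19.7136 | 0.394272 |

Variance = 1.366 (sum of the last column), Stdev = 1.169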

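Using the shortcut σ² = E(Y²) − μ²:

| y | p(y) | y² | y² · p(y) |
|---|---|---|---|
| 0 | 0.75 | 0 | 0 |
| 1 | 0.10 | 1 | 0.10 |
| 2 | 0.06 | 4 | 0.24 |
| 3 | 0.04 | 9 | 0.36 |
| 4 | 0.03 | 16 | 0.48 |
| 5 | 0.02 | 25 | 0.50 |

E(Y²) = 1.68, so Variance = 1.68 − .56² = 1.366 and Stdev = 1.169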
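Both variance computations above can be reproduced in a few lines of Python, assuming the same dictionary representation of the pmf:

```python
from math import sqrt

pmf = {0: 0.75, 1: 0.10, 2: 0.06, 3: 0.04, 4: 0.03, 5: 0.02}
mu = sum(y * p for y, p in pmf.items())                      # E(Y) = 0.56

var_def = sum((y - mu) ** 2 * p for y, p in pmf.items())     # E[(Y - mu)^2]
e_y2 = sum(y ** 2 * p for y, p in pmf.items())               # E(Y^2) = 1.68
var_short = e_y2 - mu ** 2                                   # E(Y^2) - mu^2

print(round(var_def, 4), round(var_short, 4), round(sqrt(var_def), 3))
# 1.3664 1.3664 1.169
```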

Let X be an RV with mean μ and variance σ², and let a and b be real numbers. Then

V(aX + b) = σ²(aX + b) = a²σ², and σ(aX + b) = |a|σ.
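The rule can be checked numerically by building the pmf of W = aY + b from the pen pmf. The constants a = −3 and b = 10 are arbitrary illustrative choices; because a ≠ 0, each y maps to a distinct value, so the transformed dictionary keeps every probability.

```python
pmf = {0: 0.75, 1: 0.10, 2: 0.06, 3: 0.04, 4: 0.03, 5: 0.02}

def mean_var(dist):
    """Return (mean, variance) of a pmf given as {value: probability}."""
    mu = sum(x * p for x, p in dist.items())
    return mu, sum((x - mu) ** 2 * p for x, p in dist.items())

a, b = -3.0, 10.0   # arbitrary illustrative constants (a != 0)
# W = aY + b puts probability p(y) on the value a*y + b
transformed = {a * y + b: p for y, p in pmf.items()}

_, var_y = mean_var(pmf)
_, var_w = mean_var(transformed)
print(var_w, a ** 2 * var_y)   # equal up to floating-point rounding
```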

#29

| x | 0 | 1 | 2 | 3 | 4 |
|---|---|---|---|---|---|
| p(x) | .08 | .15 | .45 | .27 | .05 |

E(X) = 0(.08) + 1(.15) + 2(.45) + 3(.27) + 4(.05)

E(X) = 0 + .15 + .90 + .81 + .20 = 2.06

V(X) = .08(2.06)² + .15(1.06)² + .45(.06)² + .27(.94)² + .05(1.94)²

V(X) = .9364

σ = .9677

E(X²) = .08(0) + .15(1) + .45(4) + .27(9) + .05(16) = 5.18

V(X) = 5.18 − 2.06² = .9364
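A Python sketch reproducing #29 with both the definition and the shortcut formula:

```python
from math import sqrt

pmf = {0: 0.08, 1: 0.15, 2: 0.45, 3: 0.27, 4: 0.05}   # exercise #29

mu = sum(x * p for x, p in pmf.items())
var_def = sum((x - mu) ** 2 * p for x, p in pmf.items())       # definition
var_short = sum(x * x * p for x, p in pmf.items()) - mu ** 2   # shortcut

print(round(mu, 2), round(var_def, 4), round(var_short, 4), round(sqrt(var_def), 4))
# 2.06 0.9364 0.9364 0.9677
```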

Chebyshev’s Inequality

Let X be an RV with mean μ and standard deviation σ, and let k > 1. Then

P(|X − μ| ≥ kσ) ≤ 1/k².

#44

In words: the probability that X takes a value at least k standard deviations from its mean is at most 1/k².

P(|X − μ| ≥ 2σ) ≤ 1/4 = .25

P(|X − μ| ≥ 3σ) ≤ 1/9 = .111

P(|X − μ| ≥ 4σ) ≤ 1/16 = .0625

P(|X − μ| ≥ 5σ) ≤ 1/25 = .04

P(|X − μ| ≥ 10σ) ≤ 1/100 = .01

What does P(|X − μ| ≥ 3σ) mean?

P(|X − μ| ≥ 3σ) = P(X − μ ≤ −3σ) + P(X − μ ≥ 3σ)

= P(X ≤ μ − 3σ) + P(X ≥ μ + 3σ)

For exercise 13, μ = 2.64 and V(X) = 2.3074, so

σ = √2.3074 ≈ 1.519

P(|X − μ| ≥ 3σ) = P(X ≤ 2.64 − 3(1.519)) + P(X ≥ 2.64 + 3(1.519))

= P(X ≤ 2.64 − 4.557) + P(X ≥ 2.64 + 4.557)

= P(X ≤ −1.917) + P(X ≥ 7.197) = 0, which is ≤ 1/9.

The bound is conservative here: Chebyshev guarantees a probability of at most 1/9, but the actual probability is 0.

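c.

| x | p(x) | x · p(x) | x² · p(x) |
|---|---|---|---|
| −1 | 1/18 | −1/18 | 1/18 |
| 0 | 8/9 | 0 | 0 |
| 1 | 1/18 | 1/18 | 1/18 |

E(X) = 0

E(X²) = 1/9

V(X) = 1/9

σ = 1/3

P(|X − μ| ≥ 3σ) = P(X ≤ −1) + P(X ≥ 1) = 1/18 + 1/18 = 1/9

By Chebyshev’s inequality, P(|X − μ| ≥ 3σ) ≤ 1/9, so here the bound is attained exactly; in general, Chebyshev’s inequality cannot be improved.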

d.

| x | p(x) | x · p(x) | x² · p(x) |
|---|---|---|---|
| −1 | 1/50 | −1/50 | 1/50 |
| 0 | 24/25 | 0 | 0 |
| 1 | 1/50 | 1/50 | 1/50 |

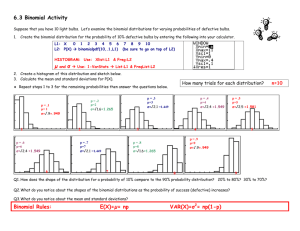
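The sketch below checks parts c and d with exact fractions. For part d it uses k = 5, the value for which kσ = 1 (this choice is ours, mirroring part c); in both cases the exact tail probability equals the Chebyshev bound 1/k². The helper `chebyshev_check` is hypothetical.

```python
from fractions import Fraction as Fr

def chebyshev_check(pmf, k):
    """Return (exact P(|X - mu| >= k*sigma), Chebyshev bound 1/k^2)."""
    mu = sum(x * p for x, p in pmf.items())
    var = sum((x - mu) ** 2 * p for x, p in pmf.items())
    # Compare squared distances so everything stays an exact Fraction:
    # |x - mu| >= k*sigma  <=>  (x - mu)^2 >= k^2 * var
    tail = sum(p for x, p in pmf.items() if (x - mu) ** 2 >= k * k * var)
    return tail, Fr(1, k * k)

part_c = {-1: Fr(1, 18), 0: Fr(8, 9), 1: Fr(1, 18)}    # sigma = 1/3, k = 3
part_d = {-1: Fr(1, 50), 0: Fr(24, 25), 1: Fr(1, 50)}  # sigma = 1/5, k = 5
print(chebyshev_check(part_c, 3))
print(chebyshev_check(part_d, 5))
```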

The Binomial Probability Distribution.

Ex.

A test has 3 multiple-choice questions. The probability of getting any one question right is ¼ = .25. Assume that the questions are independent.

Let Y = # of questions right.

S = {RRR, RRW, RWR, WRR, WWR, WRW, RWW, WWW}

Note: P(Y = 3) = P(RRR) ≠ 1/8.

The outcomes are not equally likely!

A tree diagram may help.

P(Y = 0) = ¾ · ¾ · ¾ = 27/64 = .42

P(Y = 1) = P(RWW) + P(WRW) + P(WWR)

P(Y = 1) = 3(¼ · ¾ · ¾) = 27/64 = .42

P(Y = 2) = P(WRR) + P(RWR) + P(RRW)

P(Y = 2) = 3(¾ · ¼ · ¼) = 9/64 = .14

P(Y = 3) = P(RRR) = ¼ · ¼ · ¼ = 1/64 = .02

Note: 27/64 + 27/64 + 9/64 + 1/64 = 1

A binomial random variable counts the number of “successes” in n trials.

Ex. The number of correct answers on 3 questions.

Five characteristics of a binomial RV:

1. A fixed number n of identical trials. Ex. 3 true-false questions, or selecting 10 people from a large population.

2. The outcome of each trial can be classified as a Success or a Failure. Success is not necessarily a good thing.

3. The probability of a Success, p, is the same on every trial. This also means that the probability of a Failure, 1 − p = q, is the same on every trial.

4. The trials of the experiment are independent: outcomes of previous trials do not affect future trials.

5. The random variable counts the number of Successes in the n trials.

Clues that the RV is binomial:

1. Random sample of size n.

2. The sample comes from a large population, or sampling is with replacement (this makes the trials independent).

3. Each trial can be classified as a Success or a Failure.

Binomial Formula

P(X = x) = b(x; n, p) = nCx · p^x · q^(n − x), where q = 1 − p,

for x = 0, 1, 2, …, n.
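The binomial formula translates directly to Python with `math.comb` (Python 3.8+). Using `Fraction` for p keeps the arithmetic exact, so the results can be compared with 27/64, 27/64, 9/64, and 1/64 from the tree-diagram calculation. The helper name `binom_pmf` is our own.

```python
from fractions import Fraction
from math import comb

def binom_pmf(x, n, p):
    """b(x; n, p) = nCx * p^x * q^(n - x), with q = 1 - p."""
    if not 0 <= x <= n:
        return 0
    return comb(n, x) * p ** x * (1 - p) ** (n - x)

p = Fraction(1, 4)   # exact arithmetic for the 3-question test
print([binom_pmf(y, 3, p) for y in range(4)])
```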

For the multiple-choice test example, Y is Bin(n = 3, p = ¼).

This is like sampling with replacement: if you answer T to question 1, you can answer T again (T is “replaced”).

y = 0 means 0 questions correct.

P(Y = 0) = 3C0 (¼)^0 (¾)^3

P(Y = 0) = 1(1)(27/64) = 27/64

y = 1 means 1 question correct.

P(Y = 1) = 3C1 (¼)^1 (¾)^2

P(Y = 1) = 3(1/4)(9/16) = 27/64

On the TI-83/84

[2nd] [DIST] (VARS key)

0: binompdf(n, p, x)

binompdf(n, p, x) gives P(Y = x) = b(x; n, p).

If Y is Bin(n = 3, p = ¼):

P(Y = 2) = binompdf(3, ¼, 2) = 9/64 = 0.1406

On the TI-83/84

[2nd] [DIST] (VARS key)

binomcdf(n, p, x)

binomcdf(n, p, x) gives P(Y ≤ x) = B(x; n, p).

If Y is Bin(n = 10, p = .6):

P(Y ≤ 8) = binomcdf(10, .6, 8) = B(8; 10, .6) = 0.9536

What if you were asked for P(Y > 8)?

P(Y > 8) = 1 − P(Y ≤ 8) = 1 − .9536 = .0464

Use pdf for the probability that Y equals exactly one number.

Use cdf for probabilities of the form Y <, >, ≤, or ≥ a number, rewriting with complements as needed (e.g. P(Y > 8) = 1 − P(Y ≤ 8)).
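Without a TI calculator, the two functions can be imitated by hypothetical Python stand-ins that copy the TI argument order (n, p, x); they are not a TI or library API, just the binomial formula and a running sum:

```python
from math import comb

def binompdf(n, p, x):
    """P(Y = x) for Y ~ Bin(n, p); argument order copies the TI function."""
    return comb(n, x) * p ** x * (1 - p) ** (n - x)

def binomcdf(n, p, x):
    """P(Y <= x): add the pmf over 0, 1, ..., x."""
    return sum(binompdf(n, p, k) for k in range(0, x + 1))

print(round(binompdf(3, 0.25, 2), 4))       # 0.1406
print(round(binomcdf(10, 0.6, 8), 4))       # 0.9536
print(round(1 - binomcdf(10, 0.6, 8), 4))   # 0.0464, i.e. P(Y > 8)
```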

Ex.

In the NBA, Dolph Schayes makes 90% of his free throws. In a particular game he shoots 10 free throws. Let X count the number of free throws made. What must you assume for X to have a binomial distribution? Specify n and p. Find the probability that he makes all 10 free throws. Find the probability that he makes 9 free throws.

Clues that it is binomial: a fixed number of repeated trials (like sampling with replacement), each of which is a success or a failure. A success = making the free throw.

a. We need to assume that the free throws are independent.

b. n = 10 and p = .9

c. P(X = 10) = .3487 (use pdf)

P(X = 9) = .3874 (use pdf)

Extra Questions:

What is the probability that Dolph makes:

d. at most 9 free throws?

P(X ≤ 9) = .6513 (use cdf)

Or P(X ≤ 9) = 1 − P(X > 9) = 1 − P(X = 10) = 1 − .3487 = .6513

e. at least 8 free throws?

P(X ≥ 8) = 1 − P(X < 8) = 1 − P(X ≤ 7) = 1 − .0702 = .9298

f. 0 free throws?

P(X = 0) = 1E−10 = 0.0000000001 ≈ 0

g. at least 1 free throw?

P(X ≥ 1) = 1 − P(X = 0) ≈ 1 − 0 ≈ 1
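A sketch that recomputes the Dolph Schayes answers from the binomial pmf, assuming n = 10 and p = .9 as in part b:

```python
from math import comb

n, p = 10, 0.9   # 10 independent free throws, 90% success rate

pmf = [comb(n, x) * p ** x * (1 - p) ** (n - x) for x in range(n + 1)]

def cdf(x):
    """P(X <= x)."""
    return sum(pmf[: x + 1])

answers = {
    "P(X = 10)": pmf[10],
    "P(X = 9)": pmf[9],
    "P(X <= 9)": cdf(9),
    "P(X >= 8)": 1 - cdf(7),
    "P(X = 0)": pmf[0],
}
for label, value in answers.items():
    print(f"{label}: {value:.4f}")
```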

The mean or expected value of a Binomial RV is μ = np.

The standard deviation of a Binomial RV is σ = √(npq)

Recall q = 1- p

Ex2. It is known that 10% of the US population is left-handed. A random sample of 15 people is taken.

1. What is the probability that exactly 0 people are left-handed?

2. What is the probability that exactly 1 person is left-handed?

3. What is the probability that exactly 2 people are left-handed?

4. What is the probability that fewer than 3 people are left-handed?

5. What is the probability of at least one left-handed person?

6. What are the mean and the standard deviation of the number of left-handed people in the sample?

Answers:

Let X count the number of left-handed people in the sample. Since we have a large population and each trial can be classified as a success or failure, X is a binomial random variable with parameters n = 15 and p = 0.10. Then the answers to the questions are:

1. P(X = 0) = .2059

2. P(X = 1) = .3432

3. P(X = 2) = .2669

4. P(X < 3) = P(X ≤ 2) = .8159 (using cdf). Adding the pdf values instead gives P(X < 3) = .2059 + .3432 + .2669 = .8160; the difference is round-off error.

5. P(X ≥ 1) = 1 − P(X < 1) = 1 − P(X = 0) = 1 − .2059 = .7941

6. μ = np = 15(.1) = 1.5

σ = √(npq) = √(15(.1)(.9)) = √1.35 = 1.162
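A Python check of the left-handed example, including a direct computation of ∑ x · b(x) to confirm that it matches μ = np:

```python
from math import comb, sqrt

n, p = 15, 0.10   # sample size and proportion left-handed, from the example
q = 1 - p

def b(x):
    """Binomial pmf b(x; 15, .10)."""
    return comb(n, x) * p ** x * q ** (n - x)

print([round(b(x), 4) for x in range(3)])   # [0.2059, 0.3432, 0.2669]
print(round(b(0) + b(1) + b(2), 4))         # 0.8159, i.e. P(X < 3)
print(round(1 - b(0), 4))                   # 0.7941, i.e. P(X >= 1)

mean_direct = sum(x * b(x) for x in range(n + 1))   # sum of x * b(x)
print(round(mean_direct, 4), round(n * p, 4), round(sqrt(n * p * q), 3))  # 1.5 1.5 1.162
```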

In Class. 48, 55, 57, 62

48. Circuit Boards

X = number of defective circuit boards in the sample.

n = 25, p = .05.

X ~ binomial because we are sampling from a large population.

a. P(X ≤ 2) = .8729 = probability that there are at most 2 defectives.

b. P(X ≥ 5) = 1 – P(X ≤ 4) = 1 - .9928 = .0072 = Probability that there are at

least 5 defectives.

c. P(1 ≤ X ≤ 4) = .7154 = Probability that there are between 1 and 4

defectives, inclusive.

d. P(X = 0) = .2774

e. μ = 25 * .05 = 1.25

σ = √(25 * .05 * .95) = 1.090

Prove that b(x; n, p) = b(n − x; n, 1 − p).

Let q = 1 − p.

b(x; n, p) = nCx p^x q^(n − x)

b(n − x; n, q) = nC(n − x) q^(n − x) (1 − q)^(n − (n − x))

But 1 − q = p and n − (n − x) = x, and we have previously shown that nCx = nC(n − x), so

b(n − x; n, q) = nCx q^(n − x) p^x = b(x; n, p)
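The identity can also be spot-checked exactly in Python with `Fraction`; the n and p values looped over are arbitrary choices, and a check like this illustrates the proof rather than replacing it.

```python
from fractions import Fraction
from math import comb

def b(x, n, p):
    """Binomial pmf b(x; n, p)."""
    return comb(n, x) * p ** x * (1 - p) ** (n - x)

# Exact check of b(x; n, p) = b(n - x; n, 1 - p) for a few cases
for n in range(1, 11):
    for p in (Fraction(1, 4), Fraction(3, 5), Fraction(9, 10)):
        for x in range(n + 1):
            assert b(x, n, p) == b(n - x, n, 1 - p)
print("symmetry holds for every case tested")
```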

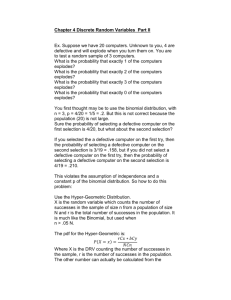

Ex. Suppose we have 20 computers. Unknown to you, 20% of them (4 computers) are defective and will explode when you turn them on. You are to test a random sample of 3 computers. What is the probability that exactly 1 of the computers explodes? What is the probability that exactly 2 of the computers explode?

What is the probability that exactly 3 of the computers explode? What is the probability that exactly 0 of the computers explode?

Your first thought may be to use the binomial distribution with n = 3 and p = 4/20 = 1/5 = .2. But this is not correct, because the population (20) is not large. Sure, the probability of selecting a defective computer on the first selection is 4/20, but what about the second selection? If you selected a defective computer on the first try, then the probability of selecting a defective computer on the second selection is 3/19 = .158; but if you did not select a defective computer on the first try, then that probability is 4/19 = .211. This violates the binomial assumptions of independent trials and a constant p. So how do we do this problem?

Use the Hyper-Geometric Distribution.

Y is the random variable that counts the number of successes in a sample of size n drawn from a population of size N, where M is the total number of successes in the population. It is much like the binomial, but it is used when n > .05N (the sample is more than 5% of the population). Each outcome can be classified as a Success or Failure, and there is a sample of size n.

The pmf of the Hyper-Geometric RV is:

$$P(Y = x) = h(x; n, M, N) = \frac{\binom{M}{x}\binom{N-M}{n-x}}{\binom{N}{n}}, \qquad \max[0,\; n-(N-M)] \le x \le \min[M,\; n]$$

In the computer example above:

N = 20, n = 3, M = 4, where Y counts the number of defective computers.

P(Y = 1) = 4C1 · 16C2 / 20C3 = 480/1140 = .421

h(y; n, M, N) = h(1; 3, 4, 20)

| k | 0 | 1 | 2 | 3 |
|---|---|---|---|---|
| P(Y = k) | 0.491 | 0.421 | 0.084 | 0.004 |

The above probabilities were found using the HYPGEOMDIST function in Excel. Unfortunately, your calculators do not have a Hyper-Geometric function.
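Python's standard library has no hypergeometric function either, but `math.comb` makes the pmf short. The helper `hypergeom_pmf` below is a sketch with a hypothetical name; it returns 0 outside max[0, n − (N − M)] ≤ x ≤ min[M, n]. Rounded to three places it reproduces the table above.

```python
from math import comb

def hypergeom_pmf(x, n, M, N):
    """h(x; n, M, N): P(x successes in a sample of n drawn without
    replacement from N items, M of which are successes)."""
    if x < max(0, n - (N - M)) or x > min(M, n):
        return 0.0
    return comb(M, x) * comb(N - M, n - x) / comb(N, n)

# Computer example: N = 20 computers, M = 4 defective, sample n = 3
for k in range(4):
    print(k, round(hypergeom_pmf(k, 3, 4, 20), 3))
```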

Note that as N increases (with the proportion M/N held fixed), the Hyper-Geometric distribution approaches the Binomial distribution. In fact, most problems that we use the Binomial for are theoretically Hyper-Geometric. However, we rarely know the population size N, and the approximation is very good. How good? We shall see in lab.
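One way to see this (a sketch, not the lab itself) is to hold the defective fraction at 20% and the sample size at 3, as in the computer example, and measure the largest pmf difference between the hypergeometric and Bin(3, .2) as N grows. The population sizes tried are arbitrary choices.

```python
from math import comb

def hypergeom_pmf(x, n, M, N):
    if x < max(0, n - (N - M)) or x > min(M, n):
        return 0.0
    return comb(M, x) * comb(N - M, n - x) / comb(N, n)

def binom_pmf(x, n, p):
    return comb(n, x) * p ** x * (1 - p) ** (n - x)

n = 3
gaps = []
for N in (20, 100, 1000, 10000):
    M = N // 5   # 20% of the population defective
    gap = max(abs(hypergeom_pmf(x, n, M, N) - binom_pmf(x, n, 0.2))
              for x in range(n + 1))
    gaps.append(gap)
    print(N, round(gap, 5))
```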

Note also that we already did problems like this in an earlier chapter, when we did combination problems.

Ex. There are 15 students in a class: 8 women and 7 men. 4 are randomly selected to be on a committee. Let Y be the number of women on the committee. Find the probability distribution of Y.

Y is Hyper-Geometric with N = 15, n = 4, M = 8. Possible values for Y: 0, 1, 2, 3, 4.

| k | 0 | 1 | 2 | 3 | 4 |
|---|---|---|---|---|---|
| P(Y = k) | 0.026 | 0.205 | 0.431 | 0.287 | 0.051 |

Also note that if Y is Hyper-Geometric with N, n, and M, then

$$E(Y) = \frac{nM}{N}, \qquad V(Y) = \left(\frac{N-n}{N-1}\right) \cdot n \cdot \frac{M}{N}\left(1 - \frac{M}{N}\right)$$

So for the previous example:

E(Y) = 4(8)/15 = 2.133

V(Y) = (11/14) · 4 · (8/15) · (7/15) = (8)(7)(4)(11)/(225 · 14) = .782

σ = .884
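The two formulas can be verified exactly for the committee example by computing the mean and variance straight from the pmf with `Fraction`; both routes give E(Y) = 32/15 and V(Y) = 176/225 ≈ .782.

```python
from fractions import Fraction
from math import comb

N, n, M = 15, 4, 8   # 15 students, committee of 4, 8 women

# Exact hypergeometric pmf over its possible values
pmf = {x: Fraction(comb(M, x) * comb(N - M, n - x), comb(N, n))
       for x in range(max(0, n - (N - M)), min(M, n) + 1)}

mean = sum(x * p for x, p in pmf.items())
var = sum((x - mean) ** 2 * p for x, p in pmf.items())

# Formulas from the notes
mean_formula = Fraction(n * M, N)
var_formula = Fraction(N - n, N - 1) * n * Fraction(M, N) * (1 - Fraction(M, N))

print(mean, mean_formula, var, var_formula, float(var))
```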

In Class: 68, 71

64. 68. Cameras. X = number of 3 mega-pixel cameras in sample.

X ~ HG(n=5, M=6, N=15)

P(X =2) = 1260/3003 = .4196

P(X ≤2) = (126 + 756 + 1260)/3003 = 2142/3003 = .7133

P(X ≥ 2) = 1 − [P(0) + P(1)] = 1 − (126 + 756)/3003 = 2121/3003 = .7063

71

The Negative Binomial Distribution

1. Sequence of independent trials (with replacement or from a large population).

2. Each trial results in a Success (S) or Failure (F).

3. The probability of a success is constant from trial to trial: P(S on any trial) = p.

4. The experiment continues until r successes are observed, where r is a preset positive integer.

X counts the number of failures that precede the rth success.

X~NB(r, p)

$$nb(x; r, p) = \binom{x+r-1}{r-1} p^{r} (1-p)^{x}, \qquad x = 0, 1, 2, \ldots$$

$$E(X) = \frac{r(1-p)}{p}, \qquad V(X) = \frac{r(1-p)}{p^{2}}$$

Notes: For a negative binomial RV, there is no fixed sample size.

Some other books count the total number of trials, not the number of failures.
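A sketch of the negative binomial pmf (counting failures, as in these notes), with the mean and variance estimated from a truncated sum and compared with r(1 − p)/p and r(1 − p)/p². The cutoff of 400 terms is an assumption that leaves a negligible tail for the parameter values used; the helper names are hypothetical.

```python
from math import comb

def nb_pmf(x, r, p):
    """nb(x; r, p): P(exactly x failures occur before the r-th success)."""
    return comb(x + r - 1, r - 1) * p ** r * (1 - p) ** x

def truncated_moments(r, p, upper=400):
    """Total probability, mean, and variance from the pmf, truncated at x = upper - 1."""
    probs = [nb_pmf(x, r, p) for x in range(upper)]
    mean = sum(x * q for x, q in enumerate(probs))
    var = sum((x - mean) ** 2 * q for x, q in enumerate(probs))
    return sum(probs), mean, var

for r, p in [(2, 0.5), (5, 0.3)]:
    total, mean, var = truncated_moments(r, p)
    print(r, p, round(total, 6), round(mean, 6), r * (1 - p) / p,
          round(var, 6), r * (1 - p) / p ** 2)
```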

75. Sex of children

p = .5, 1 − p = .5

X = number of male children born before the 2nd female, so r = 2.

P(X = x) = (x + 1)C1 (.5)^2 (.5)^x, x = 0, 1, …

P(X = x) = (x + 1)(.5)^(x + 2), x = 0, 1, …

P(X = 2) = 3C1 (.5)^4 = 3/16 = .1875

P(at most 4 children) = P(X ≤ 2) = P(0) + P(1) + P(2)

= .25 + .25 + .1875 = .6875

E(males) = 2(.5)/.5 = 2

E(number of children) = E(males) + 2 females = 2 + 2 = 4

The geometric distribution is a special case of the negative binomial with r = 1, except that it usually counts the number of trials rather than failures; the number of trials is the number of failures plus 1.

Ex. A door-to-door encyclopedia salesman is required to document 5 in-home visits each day. Suppose that he has a 30% chance of being invited into any given home and that homes are independent. What is the probability that he requires fewer than 8 houses to achieve the 5 required visits?

Let X = the number of houses that turn him away (failures) before his 5th visit, so X ~ NB(r = 5, p = .3). Fewer than 8 houses means at most 7 houses, i.e., at most 7 − 5 = 2 failures.

nb(x; r = 5, p = .3)

P(X < 3) = P(X ≤ 2) = P(X = 0) + P(X = 1) + P(X = 2)

P(X = 0) = 4C4 · .3^5 · .7^0 = .0024

P(X = 1) = 5C4 · .3^5 · .7^1 = .0085

P(X = 2) = 6C4 · .3^5 · .7^2 = .0179

P(X < 3) = .0288
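The same sum in Python, using the negative binomial pmf formula from these notes (the helper name `nb_pmf` is hypothetical). The three terms come out to about .0024, .0085, and .0179, for a total of about .0288.

```python
from math import comb

def nb_pmf(x, r, p):
    """nb(x; r, p): P(exactly x failures before the r-th success)."""
    return comb(x + r - 1, r - 1) * p ** r * (1 - p) ** x

r, p = 5, 0.3   # 5 required visits, 30% chance of being invited in
# Fewer than 8 houses  <=>  at most 7 houses  <=>  at most 2 failures
parts = [nb_pmf(x, r, p) for x in range(3)]
print([round(v, 4) for v in parts], round(sum(parts), 4))
```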

The Poisson Distribution

Characteristics:

1. The experiment consists of counting the number of times a certain event occurs during a specific time or in a specific area.

2. The probability that the event occurs in a given unit of time or area is the same for all units.

3. The number of events that occur in one unit is independent of the number that occur in other units of time or area.

4. The mean or expected number of events in each unit is denoted by the Greek letter lambda, λ.

If Y has a Poisson distribution with mean λ, then the pmf of Y is:

$$P(Y = x) = p(x; \lambda) = \frac{\lambda^{x} e^{-\lambda}}{x!}, \qquad x = 0, 1, 2, \ldots$$

E(Y) = λ and Var(Y) = λ

Ex.

The average number of occurrences of a certain disease in a certain population is 2 per 1000 people. In a random sample of 1000 people from this population, what is the probability that exactly 1 person in the sample has the disease? What is the probability that at least 1 person in the sample has the disease?

Y counts the number of people in the sample with the disease.

Y has a Poisson distribution with λ = 2.

P(Y = 1) = 2^1 · e^(−2) / 1! = .271

P(Y ≥ 1) = 1 − P(Y = 0) = 1 − .135 = .865

On the TI-83/84

[2nd] [DIST] (VARS key)

C: poissonpdf(λ, k)

poissonpdf(λ, k) gives P(Y = k).

For the last example:

poissonpdf(2, 1) = .271

poissonpdf(2, 0) = .135

On the TI-83/84

[2nd] [DIST] (VARS key)

poissoncdf(λ, k)

poissoncdf(λ, k) gives P(Y ≤ k).
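Python stand-ins for the two calculator functions, keeping the TI argument order (λ, k); these names are hypothetical and only mirror the calculator, using the Poisson pmf from these notes:

```python
from math import exp, factorial

def poissonpdf(lam, k):
    """P(Y = k) for Y ~ Poisson(lam)."""
    return lam ** k * exp(-lam) / factorial(k)

def poissoncdf(lam, k):
    """P(Y <= k): add the pmf over 0, 1, ..., k."""
    return sum(poissonpdf(lam, j) for j in range(k + 1))

lam = 2   # 2 cases expected per 1000 people
print(round(poissonpdf(lam, 1), 3))        # 0.271
print(round(1 - poissonpdf(lam, 0), 3))    # 0.865
```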

In Class: 75, 83

79.

X ~ Poisson(λ = 5)

P(X ≤ 8) = .9319

P(X = 8) = .932 - .867 = .065

P(9 ≤ X) = 1 - .932 = .068

P(5 ≤ X ≤ 8) = .932 - .440 = .492

P(5 < X < 8) = P(X ≤ 7) – P(X ≤ 5) = .867 - .616 = .251
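A sketch that reproduces #79 by building the Poisson(5) cdf with the recursion p(k) = p(k − 1) · λ/k, which avoids large factorials (the helper name is hypothetical). Unrounded, P(5 ≤ X ≤ 8) is about .491; the .492 above comes from subtracting the rounded values .932 and .440.

```python
from math import exp

def poisson_cdf_table(lam, kmax):
    """Return [P(X <= 0), ..., P(X <= kmax)] using p(k) = p(k - 1) * lam / k."""
    p = exp(-lam)
    total = p
    table = [total]
    for k in range(1, kmax + 1):
        p *= lam / k
        total += p
        table.append(total)
    return table

F = poisson_cdf_table(5, 8)
print(round(F[8], 4))          # 0.9319  P(X <= 8)
print(round(F[8] - F[7], 3))   # 0.065   P(X = 8)
print(round(1 - F[8], 3))      # 0.068   P(X >= 9)
print(round(F[8] - F[4], 3))   # 0.491   P(5 <= X <= 8), unrounded inputs
print(round(F[7] - F[5], 3))   # 0.251   P(5 < X < 8) = P(6) + P(7)
```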

88.
