Node Cover - Erickson, Isaac

advertisement

Isaac Erickson

University of Mary Washington

Parallelizing the Node Cover Problem

Isaac Erickson

University of Mary Washington

CPSC 370W Parallel Computing

Fall 2012

Isaac Erickson

University of Mary Washington

Parallelizing the Node Cover Problem

Isaac Erickson

CPSC 370W Parallel Computing

Fall 2012

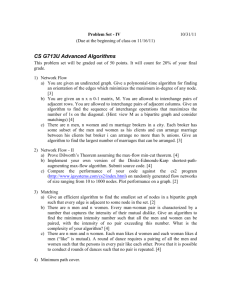

For this project, my goal was to illustrate the differences in form and performance

for OpenMP and MPI. To do this I used the Node Cover NP complete problem (often

referred to as Vertex Cover) as a medium by which to run comparisons. Generally

speaking I found that as more CPUs are applied to the problem, the faster the task was

completed.

For the purpose of these experiments, we used the Node Cover NP complete

problem. As defined by Dr. Bin Ma, the University Research Chair at the University of

Waterloo:

Vertex Cover

- Instance: A graph G =< V,E >,

V = {v1, v2,…, vn}, E = {e1, e2, … , em}.

- Solution: A subset C ⊆ V, such that for any

ej = (va, vb) ∈ E, either va ∈ C or vb ∈ C.

- Objective: Minimize c = |C|.

In layman’s terms, this means that for any graph of size N, there can be found a

subset of N that satisfies the requirement that all vertices have at least one end that is a

member of that subset, and that that subset of N is the smallest possible subset while

still satisfying that requirement. To test this, I wrote three different versions of the same

algorithm to test them, a linear, an OpenMP, and a MPI version.

The base sequential version of my algorithm used a modified depth first search

to find the optimal subset of N recursively. I used a two dimensional array to represent

each node and their individual links to other nodes and an additional two arrays to keep

track of which nodes had been visited or covered in the current search. Then the

search was run using each individual node as a starting point to insure that all possible

configurations of the subset were examined. The actual subset was not saved, only an

integer representing the size of the subset |S ⊆ N|, because as I was using a randomly

generated tree for each test, the node covers were irrelevant, only the time it took to find

them. On testing with a single core on our class cluster, the problem exhibited

exponential growth. With only 500 nodes in the tree an answer was generated in just

under 30 seconds. With 1,000 nodes it took about 5 minutes. A 5,000 node test took

half an hour. When increased to 10,000 nodes the process took 13 hours, and with

2,000 added for a total of 12,000 nodes it took just over 23 hours to complete.

Isaac Erickson

University of Mary Washington

With the 12,000 node benchmark found using the cluster and BCCD, the same

algorithm was executed on Ranger which yielded a 21.75 hour completion time.

12,000 Nodes

10,000 Nodes

Ranger

5,000 Nodes

Linear Time

1,000 Nodes

500 Nodes

0

200

400

600

800

1000

1200

1400

1600

The first parallel version was created using OpenMP. Fortunately the

composition of the sequential version was such that it lent itself to being parallelized

very easily. Each iteration of the recursive algorithm had been called in turn for each

starting node from a “for” loop. All I had to do was place that “for” loop inside another

and specify what section of the tree each thread would be responsible for running

iteration for. In addition to this I had to move the primary array into global space. Using

OpenMP, I had trouble passing the private version to each individual thread. This led to

minor changes in the recursive function.

My OpenMP version was run three times for 12,000 nodes, twice on Ranger and

once on our class Cluster. The first execution on ranger seemingly failed. It exited out

of the process without returning any value for the minimum node cover set in just under

five seconds, meaning it created the random node tree and started the specified 16

threads, then exited out for un-known reasons. After it failed I ran a full blown test on

the cluster for 12,000 nodes for trouble shooting purposes. This finished in the

projected time of just under 2 hours with a good value for the node cover set. Then with

no changes to the code, it was run on Ranger again with a different compiler. That

execution did return a value for the node cover set, however it finished faster than

expected. It should have finished in time comparable to the class cluster results, but

instead it finished in exactly the same time as the 48 core MPI execution that we will

discuss next.

12,000 MPI48

Ranger

12,000 OpenMP

Cluster

0

2000

4000

6000

8000

Isaac Erickson

University of Mary Washington

Adapting the previous code for MPI was not difficult. After removing the OpenMP

code from the algorithm, the base MPI statements were inserted and a few

transmissions were added. The only tricky part was broadcasting the primary array to

all child processes from the primary process. On receipt of the broadcast, the child

process copies would be populated with only the memory locations for the pointers to

the second dimension arrays, the rest would be filled with garbage. To solve this I

broadcasted each second dimension array individually in a “for” loop.

The MPI version of my algorithm was executed three times on Ranger, for 16,

32, and 48 cores. All three were run with 12,000 nodes for input. The 16 core

execution completed in about 1 hour and 12 minutes. The 32 core finished more

quickly in 28 minutes, and the 48 core execution completed in 16 minutes.

Ranger

MPI 16

MPI 48

MPI 32

MPI 32

MPI 48

MPI 16

0

1000

2000

3000

4000

5000

6000

In most cases each parallel algorithm exhibited speed ups that were about the

same as the time to complete sequentially divided by the number of cores applied. The

only exception to this was the OpenMP execution on Ranger. The 16 core MPI

execution mathematically should have completed in no more then 1/16 th the time of the

sequential version, about one hour and twenty one minutes. It finished on Ranger in

one hour, twenty one minutes, and one second. The 32 core MPI execution finished

nine minutes slower than projected however. 1/32nd of the sequential version being 40

minutes, it finished in 49 and one half minutes. This was about a 19% loss in speedup.

The 48 core MPI execution however only lost about 4%, finishing in 28 minutes instead

of the expected 27 minutes. This was substantially less of a loss over its 32 core

counterpart. So the 16 core MPI version exhibited close to 100% efficiency, the 32

cores efficiency dropped to only 84%, and the 48 core efficiency came back up to 97%.

The OpenMP execution on our class cluster yielded a speedup of 11.68,

substantially less than the MPI16s which was at 16.21 for the same number of cores.

But these are two different systems. The problem is that the OpenMP execution on

Ranger finished at break neck speed, generating a 46.85 speedup. This is equal to the

MPI48 version. So ether Ranger is extremely efficient with managing threads, or only

about one third of the permutations for N were calculated.

From this data we gleam two important facts to remember for future testing.

One: that not all systems are equal. What runs on one can crash another or produce

random unpredictable results. Two: that there is a predictable overall decrease in

Isaac Erickson

University of Mary Washington

efficiency as the number of cores is increased, but efficiency can fluctuate along that

curve depending on the makeup of the algorithm.

In conclusion, both MPI and OpenMP are effective methods of performing tasks

in parallel. MPI appears to be more stable than OpenMP, probably because it creates

fully fledged copies of the main program that run independently rather than trying to

maintain control over multiple threads, insuring that the maximum size of any running

program is minimal. If one fails, all do not. Additionally there is predictability to the

speed up received by applying cores to both with regards to my algorithm, but the actual

results will fluctuate slightly.

This work was supported by the National Science Foundation through XSEDE

resources with grant ASC120039: "Introducing Computer Science Students to

Supercomputing in a Parallel Computing Course" and by the Texas Advanced

Computing Center, where the supercomputer we used is located. We wish to thank

XSEDE and TACC for their support

Thanks to Dr. Toth for providing superb instruction and a private cluster for class

use, and to the University of Mary Washington.

Depth-first search (DFS) for undirected graphs. (n.d.). Retrieved December 11, 2012, from Algorithms

and Data Structures: http://www.algolist.net/Algorithms/Graph/Undirected/Depth-first_search

Goldreich, O. (2010). P, NP, And NP-Completeness: The Basics Of Computational Complexity [e-book].

Ipswich, MA: Cambridge University Press.

Gusfield, D. (2007, Fall). ECS 222A - Fall 2007 Algorithm Design and Analysis - Gusfield. Retrieved

November 15, 2012, from UCDavis Computer Science:

http://www.cs.ucdavis.edu/~gusfield/cs222f07/tillnodecover.pdf

Lyuu, Y.-D. (2005). Prof. Lyuu's Homepage . Retrieved November 15, 2012, from National Taiwan

University: http://www.csie.ntu.edu.tw/~lyuu/complexity/2005/20050602.pdf

Ma, B. (n.d.). CS873: Approximation Algorithms. Retrieved October 23, 2012, from University of

Western Ontario: http://www.csd.uwo.ca/~bma/CS873/setcover.pdf

Muhammad, Dr. R. (n.d.). Department of Computer Science, KSU. Retrieved October 23, 2012, from Kent

State University:

http://www.personal.kent.edu/~rmuhamma/Algorithms/MyAlgorithms/AproxAlgor/vertexCover.htm

Vazirani, U. V. (2006, July 18). Retrieved December 02, 2012, from Electrical Engineering and Computer

Sciences UC Berkeley: http://www.cs.berkeley.edu/~vazirani/algorithms/all.pdf

Wayne, K. (2001, Spring). Theory of Algorithms "NP-Completeness". Retrieved December 08, 2012, from

Princeton University: http://www.cs.princeton.edu/~wayne/cs423/lectures/np-complete-4up.pdf