A Conceptual Introduction to Simulation and the Bootstrap I


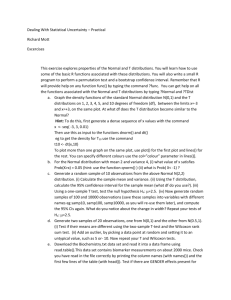

I. Introduction

“Nobody does math anymore, since computers are so powerful.” This remark came from Jianqing Fan, Princeton Professor of Mathematics, during his plenary address at the 2011 Summer Meeting of the Society for Political Methodology. Why did he make such a statement? On one level, the statement is obvious hyperbole: Fan is well aware that analytical proof is still the ‘gold standard’ in statistics, and that understanding the inner workings and basic concepts of statistics still requires mathematical knowledge. However, on another level, Fan’s statement carries a great deal of truth. As estimation techniques become ever more complex, analytical solutions become ever more difficult to obtain. Instead, scholars increasingly rely on the power of computers (which is itself continuously increasing). This, in turn, means many problems in statistics can be addressed with programming rather than pure mathematics.

The power of statistical computing is perhaps demonstrated best by two methods: the bootstrap and Monte Carlo simulation. Both are powerful simulation methods that are in many ways the cornerstones of statistics-based political methodology. For instance, the majority of papers in Political Analysis, the primary journal for political methodology (and the top cited journal in political science), use Monte Carlos to demonstrate the properties of new estimation techniques. Indeed, it is difficult to attend a talk at the Society for Political Methodology’s summer meetings without hearing at least one reference to Monte Carlo results. The bootstrap, for its part, has become a ‘go to’ tool for assessing the statistical significance of some atypical estimand (i.e. an estimand that is not reported by a canned routine in Stata or R).
When asked “how do you know that [BLANK] is statistically different from zero?”, the common reply is “oh, I can just bootstrap it!”

The bootstrap and Monte Carlo are, in my opinion, the two most important tools in a methodologist’s kit. Equipped with these tools, a political scientist can consider strange estimators and feel free to explore a host of methodological problems. With a Monte Carlo, a political scientist can gain a sense of an estimator’s statistical properties. With the bootstrap, a political scientist can use any estimation approach to gain statistical insight into his/her data of interest (e.g. is the coefficient or other estimand of interest statistically different from zero?). In short, no matter how exotic the technique, the analyst can understand and utilize it.

Unfortunately, although these techniques have been around for some time, students are still frequently left to ‘figure it out’ when it comes to learning them.¹ Therefore, this memo seeks to provide a basic conceptual introduction to the bootstrap (with a subsequent memo on Monte Carlos). I also provide a memo with examples of code for conducting a bootstrap (in Stata).

¹ Fortunately, Walter Mebane, John Jackson, and Rob Franzese were willing to teach me how to conduct both bootstraps and Monte Carlos. Additionally, I received an initial introduction to Monte Carlos from Bill Even shortly after completing my undergraduate studies at Miami University.

II. The Bootstrap: A Definition

Bootstrapping is a resampling technique used to obtain estimates of summary statistics (Efron in Annals of Statistics 1979). The name is a play on the phrase “to pull oneself up by one’s bootstraps”, which is a colloquial way of saying “to use your own grit and determination to achieve a certain goal.” In this case, we are not talking about your own “grit and determination” but your own “data” (even if the data are limited).

III.
The Bootstrap: A Motivation

Recall how an earlier memo discussed the concept of a superpopulation: a hypothetical infinite population from which the finite observed population is a sample (see Hartley and Sielken in Biometrics June 1975). While the idea of a superpopulation addresses one problem (the problem of not having a larger distribution from which observational data are drawn), it presents another problem: what is the distribution of the superpopulation? If you don’t know the distribution of the superpopulation, then you can’t possibly extract draws from it (and, therefore, you can’t devise meaningful statistical inferences).

One option is simply to assume a distribution. Is this totally unreasonable? Possibly not, since, by the various Central Limit Theorems, we know that, given certain conditions, the mean of a sufficiently large number of independent random variables, each with finite mean and variance, will be approximately normally distributed. However, how big is “sufficiently large”? This is one of the difficulties with most mathematical proofs in statistics: you might be able to analytically prove the consistency and efficiency of, say, a ham sandwich, but it’s all based on asymptotics (i.e. really, really ridiculously large samples).

Efron (1979) offers a workaround. If we at least assume that the superpopulation’s distribution, F, has a finite variance, then, according to Efron (1979), we can estimate the sampling distribution of some prespecified random variable, R(X, F), on the basis of the observed data x (a realization of the random sample X), even though F itself is unknown. In other words, we can think of bootstrapping as a way of using your observed sample to approximate repeated samples from the superpopulation.

IV. The Bootstrap: What it Does

The bootstrap is actually a straightforward procedure. Suppose one is interested in knowing if a parameter value that is estimated from your observational data (say the relationship between military capabilities and war victory) is truly different from zero.
When we state this, we are asking, “if I drew repeated samples of data from the superpopulation, would I find that the parameter of interest was different from zero an ‘acceptable’ number of times (where ‘acceptable’ is a pre-specified threshold chosen by the analyst)?” When one uses a bootstrap, one answers the above question by conducting the following procedure:

Step 1: With your original observational dataset, compute the parameter of interest.

Step 2: Resample with replacement from your observational dataset to produce a new realization of your dataset with the same number of observations (where, in this new realization of the dataset, war might, for example, occur more or less frequently than in your original dataset).

Step 3: Compute the parameter of interest using your resampled dataset. Store this value.

Step 4: Repeat steps 2 and 3 many times (at least 1,000).

Step 5: Compute the desired summary statistics of your 1,000+ realizations of your parameter of interest (e.g. find the mean of this parameter’s value; find the 2.5th and 97.5th percentile values of this parameter; compute the standard error as the standard deviation of the realizations; etc.).

In short, the bootstrap uses your observational data and, by repeatedly resampling with replacement, creates a ‘bootstrap distribution’ that approximates how your estimate would vary across repeated draws from the superpopulation. It’s almost like magic!

V. The Bootstrap: Why it Works

I will not discuss the formalities of the bootstrap here. Indeed, in his 1979 paper, Efron seemed content to allow the results from his bootstrap examples to speak for themselves. However, a bit of intuition could prove useful. Suppose that you could draw one sample from the superpopulation. With that sample, you compute the mean of that sample. Then you draw another sample from the superpopulation and compute the mean again. Next, you compute the average value of the means from your two drawn samples.
If you did this over and over and over again, then the average from all of these draws would begin to approximate the actual mean of your superpopulation’s distribution.

Unfortunately, you can’t do what I just described. This is because, quite simply, you don’t know the superpopulation, nor its distribution. Instead, you only have your one dataset, which is a single draw from that superpopulation. Fortunately, we can assume that your observed dataset must tell us something about the superpopulation from which it was drawn (since it comes from the superpopulation). Returning to the example of war, it might be the case that war occurs more frequently in the superpopulation than in the observed sample drawn from the superpopulation. However, unless the observed sample is some really bizarre draw from the superpopulation (and, hence, a really bizarre depiction of human behavior), we can probably feel safe using it to learn something about the superpopulation. Even if it is a somewhat unusual draw, repeating the resampling procedure many times still tells us how much the estimate would vary across samples like ours, although no amount of resampling can undo a badly unrepresentative draw.

Having motivated the use of the bootstrap, we can now turn to actually programming and running bootstraps (in the example memo).
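
As a rough illustration of the five steps in Section IV, here is a minimal sketch in Python. It is not the Stata code from the example memo. The toy dataset and the names `make_toy_data`, `ols_slope`, `bootstrap`, and `summarize` are all hypothetical and introduced only for this sketch. The 'parameter of interest' is assumed to be the slope from a simple regression of war victory (0/1) on a military capability score.

```python
import random
import statistics


def make_toy_data(n=200, seed=1):
    """Hypothetical data: (capability score, victory indicator) pairs."""
    rng = random.Random(seed)
    rows = []
    for _ in range(n):
        capability = rng.random()
        # Assumed data-generating process, for illustration only.
        victory = 1 if rng.random() < 0.2 + 0.6 * capability else 0
        rows.append((capability, victory))
    return rows


def ols_slope(rows):
    """Slope from regressing y on x, for rows of (x, y) pairs."""
    xs = [x for x, _ in rows]
    ys = [y for _, y in rows]
    x_bar = statistics.fmean(xs)
    y_bar = statistics.fmean(ys)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in rows)
    sxx = sum((x - x_bar) ** 2 for x in xs)
    return sxy / sxx  # undefined if every x in the (re)sample is identical


def bootstrap(rows, statistic, n_reps=2000, seed=None):
    """Return the original estimate and a list of bootstrap replicates."""
    rng = random.Random(seed)
    estimate = statistic(rows)                     # Step 1
    replicates = []
    for _ in range(n_reps):                        # Step 4
        resample = rng.choices(rows, k=len(rows))  # Step 2: with replacement
        replicates.append(statistic(resample))     # Step 3
    return estimate, replicates


def summarize(replicates):
    """Step 5: mean, standard error, and 2.5th/97.5th percentiles."""
    cuts = statistics.quantiles(replicates, n=40)  # cut points every 2.5%
    return {
        "mean": statistics.fmean(replicates),
        "std_error": statistics.stdev(replicates),
        "ci_lower": cuts[0],
        "ci_upper": cuts[-1],
    }


rows = make_toy_data()
estimate, replicates = bootstrap(rows, ols_slope, n_reps=2000, seed=42)
summary = summarize(replicates)
print(f"original estimate: {estimate:.3f}")
print(f"bootstrap SE:      {summary['std_error']:.3f}")
print(f"95% interval:      [{summary['ci_lower']:.3f}, {summary['ci_upper']:.3f}]")
```

If the interval between the 2.5th and 97.5th percentiles excludes zero, one would conclude, under this percentile approach, that the estimate is statistically different from zero at roughly the 5% level. Whole rows are resampled together so that each observation's capability score stays paired with its own outcome.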