MDM4U Probability Distributions

Probability experiments often have numeric outcomes that are counted or measured. A random variable is a variable whose possible values are the numerical outcomes of a probability experiment. Random variables are usually denoted by upper case (capital) letters, and their possible values by the corresponding lower case letters, so that we talk about events of the form [X = x]. A random variable X takes a single value x for each outcome of an experiment.

Example

Show a random variable for tossing a coin.

Random variable X = “number of times a head is flipped in one coin toss”.

Possible values of X: x ∈ {0, 1}.

Example

Show a random variable for rolling a die.

Random variable X = “number on the top of a die”.

Possible values of X: x ∈ {1, 2, 3, 4, 5, 6}.

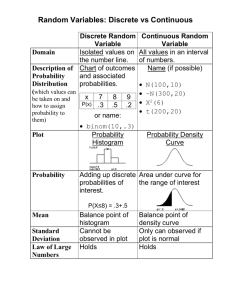

Types of random variables

There are two types of random variables, discrete and continuous.

1. A discrete random variable can take only a countable number of values, so its possible values can be listed (numbered) one by one. The set of possible values may be infinite (but countable!), though for many discrete random variables it is finite. We call these finite random variables.

2. A continuous random variable can take any real value in some interval on the number line. The set of its possible values cannot be listed (numbered) one by one.

Example

Classify the following random variables as discrete or continuous.

1. Length of time you stay in a class.

2. Number of classes you attend in a day.

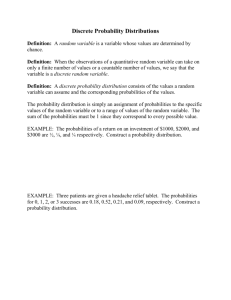

Random variables are described by their probabilities. For a discrete

random variable, its probability distribution gives each possible value

and the probability of that value:

| $x$ | $P[X = x]$ |
|---|---|
| $x_1$ | $p_1$ |
| $x_2$ | $p_2$ |
| $x_3$ | $p_3$ |
| … | … |
| $x_n$ | $p_n$ |

Example

Probability distribution for number of tattoos each student has in a

population of students

| Tattoos, $x$ | Probability, $P[X = x]$ |
|---|---|
| 0 | 0.850 |
| 1 | 0.120 |
| 2 | 0.015 |
| 3 | 0.010 |
| 4 | 0.005 |

Note: The total of all probabilities across the distribution must be 1, and

each individual probability must be between 0 and 1, inclusive:

0.850 + 0.120 + 0.015 + 0.010 + 0.005 = 1.

The notation P(x) is often used for 𝑃[𝑋 = 𝑥]. In this example, 𝑃(4) =

𝑃[𝑋 = 4] = 0.005.
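The two conditions in the note can be checked mechanically. The following is a minimal sketch using the tattoo distribution from the example; the function name `is_valid_distribution` and the tolerance `tol` are illustrative choices, not part of the course notes.

```python
import math

# Tattoo distribution from the example: value x -> probability P(x).
tattoos = {0: 0.850, 1: 0.120, 2: 0.015, 3: 0.010, 4: 0.005}

def is_valid_distribution(dist, tol=1e-9):
    """Return True if every probability lies in [0, 1] and they total 1."""
    in_range = all(0.0 <= p <= 1.0 for p in dist.values())
    # Compare with a small tolerance because decimal fractions such as 0.015
    # are not stored exactly as floating-point numbers.
    sums_to_one = math.isclose(sum(dist.values()), 1.0, abs_tol=tol)
    return in_range and sums_to_one

print(is_valid_distribution(tattoos))  # validity check for the example
print(tattoos[4])                      # P(4) = P[X = 4]
```

The tolerance is there only to absorb rounding in floating-point addition; with exact fractions it would not be needed.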

Example

Probability distribution for number of heads in 4 flips of a coin

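| Heads, $x$ | Probability, $P(x)$ |
|---|---|
| 0 | $P(0) = \left(\frac{1}{2}\right)^4 = \frac{1}{16}$ |
| 1 | $P(1) = \binom{4}{1}\left(\frac{1}{2}\right)^4 = 4 \cdot \frac{1}{16} = \frac{1}{4}$ |
| 2 | $P(2) = \binom{4}{2}\left(\frac{1}{2}\right)^4 = 6 \cdot \frac{1}{16} = \frac{3}{8}$ |
| 3 | $P(3) = \binom{4}{3}\left(\frac{1}{2}\right)^4 = 4 \cdot \frac{1}{16} = \frac{1}{4}$ |
| 4 | $P(4) = \left(\frac{1}{2}\right)^4 = \frac{1}{16}$ |

$$\frac{1}{16} + \frac{1}{4} + \frac{3}{8} + \frac{1}{4} + \frac{1}{16} = 1$$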
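The probabilities for the number of heads in 4 flips of a fair coin can be reproduced with exact fractions. This is a small sketch, assuming a fair coin as in the example; the helper name `heads_distribution` is made up for illustration.

```python
from fractions import Fraction
from math import comb

def heads_distribution(n):
    """P(k heads in n fair flips) = C(n, k) * (1/2)^n, as exact fractions."""
    return {k: Fraction(comb(n, k), 2 ** n) for k in range(n + 1)}

dist = heads_distribution(4)
for heads, prob in dist.items():
    print(heads, prob)

# The probabilities of all possible values must total 1.
print(sum(dist.values()))
```

`Fraction` reduces automatically, so 4/16 is shown as 1/4 and 6/16 as 3/8.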

The cumulative probabilities are given as

$$F(x) = P[X \le x] = \sum_{x_i \le x} P(x_i).$$

The interpretation is that F(x) is the probability that X will take a value less than or equal to x. The function F is called the cumulative distribution function (CDF).

For example, consider random variable X with probabilities

| $x$ | $P(x)$ |
|---|---|
| 0 | 0.05 |
| 1 | 0.10 |
| 2 | 0.20 |
| 3 | 0.40 |
| 4 | 0.15 |
| 5 | 0.10 |

For our example,

𝐹 (3) = 𝑃[𝑋 ≤ 3] = 𝑃(0) + 𝑃(1) + 𝑃(2) + 𝑃(3)

= 0.05 + 0.10 + 0.20 + 0.40 = 0.75.

One can of course list all the values of the CDF easily by taking

cumulative sums:

| $x$ | $P(x)$ | $F(x)$ |
|---|---|---|
| 0 | 0.05 | 0.05 |
| 1 | 0.10 | 0.15 |
| 2 | 0.20 | 0.35 |
| 3 | 0.40 | 0.75 |
| 4 | 0.15 | 0.90 |
| 5 | 0.10 | 1.00 |

The values of F never decrease as x increases, and the last value is always 1.
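Taking cumulative sums is a one-line operation in code. The sketch below uses `itertools.accumulate` on the example probabilities; the variable names `xs`, `ps`, and `cdf` are illustrative.

```python
from itertools import accumulate

xs = [0, 1, 2, 3, 4, 5]
ps = [0.05, 0.10, 0.20, 0.40, 0.15, 0.10]

# Running totals of P(x) give F(x) = P[X <= x].
# Rounding to 2 decimals hides tiny floating-point errors in the sums.
cdf = [round(total, 2) for total in accumulate(ps)]

for x, p, f in zip(xs, ps, cdf):
    print(x, p, f)
```

Each F(x) is the previous F value plus the current P(x), which is why the list can be built in a single pass.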

Expected Value is the average of the outcomes, weighted by their probabilities. The expected value of X is denoted either as E(X) or as μ. It is defined as

$$E(X) = \mu = \sum_{i} x_i \, P(x_i).$$

The calculation for this example is

E(X) = 0 × 0.05 + 1 × 0.10 + 2 × 0.20 + 3 × 0.40 + 4 × 0.15 + 5 × 0.10

= 0.00 + 0.10 + 0.40 + 1.20 + 0.60 + 0.50

= 2.80

E(X) is also said to be the mean of the probability distribution of X.
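The expected-value calculation is a sum of value-times-probability products, which translates directly into code. This is a minimal sketch for the example distribution; `expected_value` is an illustrative name.

```python
xs = [0, 1, 2, 3, 4, 5]
ps = [0.05, 0.10, 0.20, 0.40, 0.15, 0.10]

def expected_value(values, probs):
    """Sum of each value times its probability."""
    return sum(x * p for x, p in zip(values, probs))

mu = expected_value(xs, ps)
print(round(mu, 2))  # rounded to hide floating-point noise
```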

The probability distribution of X also has a standard deviation, but one usually first defines the variance. The variance of X, denoted as Var(X) or σ², is

$$\operatorname{Var}(X) = \sigma^2 = E\left[(X - \mu)^2\right] = \sum_{i} (x_i - \mu)^2 \, P(x_i).$$

This is the expected square of the difference between X and its expected value, μ. We can calculate this for our example:

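| $x$ | $x - 2.8$ | $(x - 2.8)^2$ | $P(x)$ | $(x - 2.8)^2 \, P(x)$ |
|---|---|---|---|---|
| 0 | –2.8 | 7.84 | 0.05 | 0.392 |
| 1 | –1.8 | 3.24 | 0.10 | 0.324 |
| 2 | –0.8 | 0.64 | 0.20 | 0.128 |
| 3 | 0.2 | 0.04 | 0.40 | 0.016 |
| 4 | 1.2 | 1.44 | 0.15 | 0.216 |
| 5 | 2.2 | 4.84 | 0.10 | 0.484 |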

The variance is the sum of the final column. This value is 1.560.

This is not the way that one usually calculates the variance, but it does illustrate the meaning of the formula. There’s a simplified method, based on the result

$$\operatorname{Var}(X) = E(X^2) - \mu^2 = \sum_{i} x_i^2 \, P(x_i) - \mu^2.$$

This is easier because we’ve already found μ, and the sum $\sum_{i} x_i^2 \, P(x_i)$ is fairly easy to calculate.

For our example, this sum is

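$$0^2 \times 0.05 + 1^2 \times 0.10 + 2^2 \times 0.20 + 3^2 \times 0.40 + 4^2 \times 0.15 + 5^2 \times 0.10 = 9.40.$$

Then

$$\operatorname{Var}(X) = 9.40 - 2.8^2 = 9.40 - 7.84 = 1.56.$$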

This is the same number as before, although obtained with rather less

effort.

The standard deviation of X is determined from the variance.

Specifically, the standard deviation of X is 𝜎 = √Var (𝑋). In this

situation, we find: σ = √1.56 ≈ 1.25.
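Both routes to the variance can be compared numerically. The sketch below computes the variance from the definition and from the simplified method for the same example, then takes the square root; the variable names are illustrative.

```python
import math

xs = [0, 1, 2, 3, 4, 5]
ps = [0.05, 0.10, 0.20, 0.40, 0.15, 0.10]

mu = sum(x * p for x, p in zip(xs, ps))

# Definition: expected squared deviation from the mean.
var_definition = sum((x - mu) ** 2 * p for x, p in zip(xs, ps))

# Simplified method: expected value of X squared, minus the mean squared.
var_shortcut = sum(x ** 2 * p for x, p in zip(xs, ps)) - mu ** 2

sigma = math.sqrt(var_shortcut)

print(round(var_definition, 3), round(var_shortcut, 3), round(sigma, 2))
```

The two variance values agree up to floating-point rounding, which is why they are compared after rounding rather than with `==`.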

Example

Given the following probability distribution, determine the expected

value and standard deviation.

| $x$ | $P(x)$ |
|---|---|
| 2 | 0.4 |
| 4 | 0.1 |
| 6 | 0.5 |

Solution

E(X) = 2 ∙ (0.4) + 4 ∙ (0.1) + 6 ∙ (0.5)

= 0.8 + 0.4 + 3

= 4.2

The expected value is 4.2.

Var(X) = 2² ∙ (0.4) + 4² ∙ (0.1) + 6² ∙ (0.5) – 4.2²

= 1.6 + 1.6 + 18 – 17.64 = 3.56

σ = √3.56 ≈ 1.9.

The standard deviation is 1.9.

Uniform Discrete Distribution is a probability distribution in which all outcomes are equally likely.

Example Determine the probability distribution, expected value, and standard deviation for the following random variable: the number rolled on a die.

Solution

The probability distribution is uniform:

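| $x$ | 1 | 2 | 3 | 4 | 5 | 6 |
|---|---|---|---|---|---|---|
| $P(x)$ | $\frac{1}{6}$ | $\frac{1}{6}$ | $\frac{1}{6}$ | $\frac{1}{6}$ | $\frac{1}{6}$ | $\frac{1}{6}$ |

$$E(X) = 1 \cdot \frac{1}{6} + 2 \cdot \frac{1}{6} + 3 \cdot \frac{1}{6} + 4 \cdot \frac{1}{6} + 5 \cdot \frac{1}{6} + 6 \cdot \frac{1}{6} = \frac{21}{6} = \frac{7}{2} = 3.5.$$

$$\operatorname{Var}(X) = 1 \cdot \frac{1}{6} + 4 \cdot \frac{1}{6} + 9 \cdot \frac{1}{6} + 16 \cdot \frac{1}{6} + 25 \cdot \frac{1}{6} + 36 \cdot \frac{1}{6} - \left(\frac{7}{2}\right)^2 = \frac{91}{6} - \frac{49}{4} = \frac{35}{12}$$

$$\sigma = \sqrt{\frac{35}{12}} \approx 1.7$$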
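The die calculation can be checked with exact arithmetic. This is a sketch that assumes a fair six-sided die, as in the example; it uses Python's `fractions.Fraction` so the mean and variance come out as exact fractions rather than decimals.

```python
from fractions import Fraction
import math

faces = range(1, 7)
p = Fraction(1, 6)  # uniform: every face is equally likely

mean = sum(x * p for x in faces)                      # 21/6 = 7/2
variance = sum(x ** 2 * p for x in faces) - mean ** 2  # 91/6 - 49/4 = 35/12
sigma = math.sqrt(variance)                            # converted to float here

print(mean, variance, round(sigma, 1))
```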

Example Determine the probability distribution and expected value for the following random variable: the sum of the numbers rolled on two dice.

Solution

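| $x$ | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 |
|---|---|---|---|---|---|---|---|---|---|---|---|
| $P(x)$ | $\frac{1}{36}$ | $\frac{2}{36}$ | $\frac{3}{36}$ | $\frac{4}{36}$ | $\frac{5}{36}$ | $\frac{6}{36}$ | $\frac{5}{36}$ | $\frac{4}{36}$ | $\frac{3}{36}$ | $\frac{2}{36}$ | $\frac{1}{36}$ |

$$E(X) = 2 \cdot \frac{1}{36} + 3 \cdot \frac{2}{36} + 4 \cdot \frac{3}{36} + 5 \cdot \frac{4}{36} + 6 \cdot \frac{5}{36} + 7 \cdot \frac{6}{36} + 8 \cdot \frac{5}{36} + 9 \cdot \frac{4}{36} + 10 \cdot \frac{3}{36} + 11 \cdot \frac{2}{36} + 12 \cdot \frac{1}{36} = 7.$$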
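The distribution of the sum of two dice can also be obtained by listing all 36 equally likely outcomes and counting how often each sum occurs. The following sketch assumes two fair, independent dice; the names `counts`, `dist`, and `expected` are illustrative.

```python
from collections import Counter
from fractions import Fraction
from itertools import product

# Enumerate all 36 ordered outcomes (first die, second die) and tally the sums.
counts = Counter(a + b for a, b in product(range(1, 7), repeat=2))

# Each outcome has probability 1/36, so P(sum) = (number of ways) / 36.
dist = {s: Fraction(c, 36) for s, c in sorted(counts.items())}

expected = sum(s * p for s, p in dist.items())

for s, p in dist.items():
    print(s, p)
print(expected)
```

`Fraction` prints probabilities in lowest terms, so 6/36 appears as 1/6; the values are the same as in the table.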