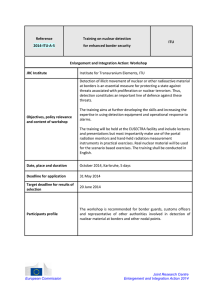

Northwestern-Kirshon-Miles-All-Harvard
