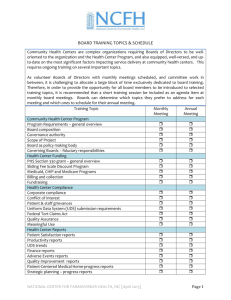

IPbus Performance Measurement Ideas for 904 Test Stand in Summer 2013

Tom Williams, version 2nd May 2013

904 μTCA Test Stand Setup

The physical setup required before performance measurements can start is summarized below.

[Diagram labels: 904 network; switch; μTCA crate containing the MCH (Vadatech) and 4 GLIB boards; PC A (SLC6, 64-bit); PC B (SLC6, 64-bit); links labelled 1Gb/s.]

- Based on discussion with Marc Dobson, the PCs will (I think) be connected to the μTCA crate via our own dedicated switch, with some additional connection route between the PCs and the 904 general network for ssh access to the PCs.
- It must be possible to set up a 1Gb/s cable directly from one of the PCs to the μTCA crate, for debugging if we get unexpected results (e.g. never reach 1Gb/s over the network). This is also useful for checking the extra latency from network switches. Marc Dobson said in our last meeting that this will be possible.

All of the measurements below of course require that the test stand is set up as above, such that:

- All components have power.
- Each board has a unique MAC address “officially” assigned by CMS.
- Each board has a unique IP address. (We will have our own 192.168.xxx.yyy subnet for the boards.) N.B. How will the IP address assignment work? RARP? Will both the software and firmware be available?
- The PCs and boards are connected via Ethernet such that one can send a “ping” between the two PCs, and such that each PC can ping each board. This of course requires that the boards have MAC & IP addresses.
- One of the PCs must have JTAG (or similar) access to the boards, in order to be able to reload firmware onto the boards easily.

Some other important points/principles:

- The basic test executables used should be packaged up in the IPbus software suite release (for the sake of reproducibility).
  - Then just write wrapper scripts that iterate the measurements vs the “x” variable, and parse the output of the PerfTester commands.
  - However, the ControlHub will likely be the component controlling the number of packets in flight (rather than making several different firmware images). Hence, it will have to be re-compiled from the tagged sources for the different measurements vs “nr in-flight”, with the appropriate “MAX_IN_FLIGHT” macro redefined each time. It would be nice to be using a tagged copy of the firmware by this stage as well.
- Should check at all stages that the behavior of the measured bandwidths/latencies vs. “number of transactions” / “number in-flight” is close to the values expected based on the ping latency between PCs/boards & the sequence diagrams in the spec document.

Definitions

- Bandwidth (bits/s) = Nr registers modified * 32 / latency, with the latency in seconds.
- Latency = time taken for the uHAL client to perform the IPbus transactions. This should be measured in the uHAL client PC, starting from the first corresponding uhal::read/write call, and stopping when the dispatch() method returns.

The performance measurements are separated into 4 sets here:

Part A: Simplest topology (1 packet in-flight)

Performance for 1 client, talking to 1 board, with 1 packet in-flight, i.e. check that latencies and bandwidth have the expected values in the simplest possible topology.

Measure latency & bandwidth as a function of the number of words read/written/modified for:

- Sequence of single register reads/writes @ random addresses
- Block read/write
- (Optional extra) RMW transactions @ random addresses

Repeat in the following configurations:

- Protocol:
  1. Direct UDP
  2. via ControlHub (both client and ControlHub on same PC)
  3. via ControlHub (client and ControlHub on different PCs)
- Client: on PC A & PC B

Compare the measured latencies & bandwidths with the rough expectation from the sequence diagrams and the ping latency.

N.B. Check that as the number of packets increases, the “via ControlHub” bandwidth asymptotically approaches the “direct UDP” bandwidth.
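The bandwidth and latency definitions can be sketched as a small timing helper of the kind a wrapper script might use. This is only an illustration, assuming Python for the wrapper scripts: `measure` and `run_transactions` are hypothetical names, and `run_transactions` stands in for whatever code queues the uHAL reads/writes and then calls dispatch(). Nothing here is part of the IPbus software suite.

```python
import time


def bandwidth_bits_per_s(n_registers, latency_s):
    """Bandwidth (bits/s) = Nr registers modified * 32 / latency."""
    if latency_s <= 0:
        raise ValueError("latency must be positive")
    return n_registers * 32 / latency_s


def measure(run_transactions, n_registers):
    """Time one call of run_transactions and derive the bandwidth.

    run_transactions is a hypothetical callable: it should queue the
    reads/writes and return only once dispatch() has returned, so that
    the timed interval matches the latency definition.
    """
    start = time.perf_counter()
    run_transactions(n_registers)
    latency_s = time.perf_counter() - start
    return latency_s, bandwidth_bits_per_s(n_registers, latency_s)


if __name__ == "__main__":
    # Stand-in workload (a fixed sleep) so the sketch runs without hardware.
    def fake_transactions(n_registers):
        time.sleep(0.01)

    for n in (1, 10, 100, 1000):
        latency_s, bw = measure(fake_transactions, n)
        print(f"{n:5d} words  {latency_s * 1e3:8.3f} ms  {bw / 1e6:10.3f} Mb/s")
```

Timing in the client process, rather than on the wire, keeps the measurement aligned with what the uHAL end-user actually experiences.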
Part B: Simplest topology, with multiple packets in-flight

Performance for 1 client, talking to 1 board, with N packets in-flight, i.e. see how high we can get the bandwidth by increasing the number of packets in-flight.

Measure the bandwidth for block reads/writes as a function of the number of packets in-flight, N. Calculate it both counting all data sent down the cable (i.e. full IPbus packet + UDP header), and counting only the registers written/read.

Repeat with the following configurations:

- Both with uHAL client / ControlHub running on the same PC and on different PCs
- Transaction: block reads only & block writes only (results should be the same)

Is 1Gb/s ever reached?

N.B. Calculate what fraction of the data travelling along the cable is the values that will be written to the registers.

Use these results to decide what number of packets in-flight to use for all future measurements.

Part C: Multiple clients and/or boards

Performance for simultaneous large block reads/writes from n clients, talking to 1 or n boards.

Questions to answer with these measurements:

- Does the bandwidth of each client get throttled back equally, or do some clients see a higher fraction of the bandwidth than others?
- Does the ControlHub ever limit the bandwidth?

Repeat with the following configurations:

- Setup:
  - 1) each client talking to a different board; 2) all clients talking to the same board
  - uHAL clients on either the same / a different PC as the ControlHub
- Transaction: block reads only & block writes only

Measure as a function of the number of clients, n:

- Total bandwidth (all clients summed up)
- Average bandwidth per client
- Range of bandwidths for different clients
- ControlHub memory & CPU usage

N.B. Since we will only have 4 boards, we would need to put multiple IPbus endpoints in each board in order to simulate n > 4. However, when doing this, we of course need to check that the block read/write bandwidth to 2 IPbus endpoints on the same board is the same as for 2 IPbus endpoints each on their own board.
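The Part C summary quantities (total, average and range of the per-client bandwidths) could be reduced from the individual client results along the following lines. This is a minimal sketch: `summarise_clients` is a hypothetical helper name, and the numbers in the demo are made-up placeholders, not measurements.

```python
from statistics import mean


def summarise_clients(bandwidths_bits_per_s):
    """Reduce per-client bandwidths to the Part C summary quantities."""
    if not bandwidths_bits_per_s:
        raise ValueError("need at least one client")
    lowest = min(bandwidths_bits_per_s)
    highest = max(bandwidths_bits_per_s)
    return {
        "n_clients": len(bandwidths_bits_per_s),
        "total": sum(bandwidths_bits_per_s),      # all clients summed up
        "average": mean(bandwidths_bits_per_s),   # average per client
        "min": lowest,
        "max": highest,
        "range": highest - lowest,                # spread between clients
    }


if __name__ == "__main__":
    # Placeholder values in bits/s, for illustration only.
    placeholder = [100.0e6, 200.0e6, 300.0e6]
    for key, value in summarise_clients(placeholder).items():
        print(f"{key:10s} {value}")
```

A range that stays small compared with the average as n grows would suggest that clients are throttled back roughly equally.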
Part D: Packet loss & MCH switch congestion

Main aspects to check here:

1. Level of packet loss in real usage
   - Aim: check whether any packet loss can be seen when the system is put under maximal realistic load.
   - Continuous block read from each board in the crate, each to a separate uHAL client.
   - Look at the ControlHub stats command output to count the number of packets lost.
   - Are any packets lost? If so, by how much does the bandwidth degrade?
   - This should be tested with both types of MCH, i.e. NAT and Vadatech, if possible.
   - Repeat with other traffic injected over the switch in order to replicate the realistic Point 5 situation, where there will be > 1 crate and > 1 ControlHub connected to each switch.
2. Reduction in performance from artificially inducing packet loss
   - Measure for packet loss on the UDP & TCP sides separately.
   - Compare with prediction.

Part E: System/component robustness tests

Possible ideas:

- Emulate the MCH dying during/before IPbus communication with a board.
  - i.e. pull the Ethernet cable out of the MCH?
  - What does the uHAL end-user see?
- Emulate boards dying during/before IPbus communication.
  - i.e. switch off that individual board or cause it to crash somehow.
  - What does the uHAL end-user see?
  - How will MCH death eventually be detected in Point 5? IPMI?
  - Can/should the ControlHub in principle differentiate between “dead MCH” and “dead board” situations, e.g. “no route to host” when trying to send UDP vs. “no response to UDP”?
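The distinction raised in the last question can be explored from a PC with plain sockets before touching the ControlHub. The sketch below is an assumption-laden illustration in Python, not how the ControlHub works: `probe` and `classify_failure` are hypothetical names, and the payload is left to the caller because the right request packet depends on the IPbus protocol version in use. Whether the operating system actually reports “no route to host” for a dead MCH depends on the network setup (ARP and ICMP behaviour), which is exactly what the test would need to establish.

```python
import errno
import socket


def classify_failure(exc):
    """Map an exception from a UDP probe onto a coarse failure label."""
    if isinstance(exc, socket.timeout):
        # Packet was sent, but nothing came back in time.
        return "no_response"
    if isinstance(exc, OSError) and exc.errno in (
        errno.EHOSTUNREACH,
        errno.ENETUNREACH,
    ):
        # The OS could not find a path to the target address.
        return "no_route"
    return "other"


def probe(host, port, payload, timeout_s=1.0):
    """Send one UDP datagram and wait for any reply.

    Returns "alive", "no_response", "no_route" or "other".
    payload is supplied by the caller (bytes); no IPbus packet format
    is assumed here.
    """
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    sock.settimeout(timeout_s)
    try:
        # A connected UDP socket lets the OS hand asynchronous network
        # errors back to this socket on later send/recv calls.
        sock.connect((host, port))
        sock.send(payload)
        sock.recv(2048)
        return "alive"
    except OSError as exc:
        return classify_failure(exc)
    finally:
        sock.close()
```

If both failure modes turn out to look like “no_response” on the real test stand, that would itself answer the question of whether the two situations can be told apart at this level.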