proposed dynamic clustering based on discrete pso-ga

advertisement

Sci.Int.(Lahore),27(3),2055-2061,2015

ISSN 1013-5316; CODEN: SINTE 8

2055

PROPOSED DYNAMIC CLUSTERING BASED ON DISCRETE PSO-GA

Hamid Tabatabaee 1,* ,Maliheh Hassan Pour2,Ameneh fakhraee3, ,Mohammad Reza Baghaeipour4

1,* Young Researchers and Elite Club, Quchan Branch, Islamic Azad University, Quchan, Iran.

3,2 Department of Computer Engineering ,Mashhad Branch, Islamic Azad University, Mashhad, Iran.

4 Department of Computer Engineering, Ferdows Higher Education Institute, Mashhad, Iran.

ABSTRACT: Data clustering is the process of identifying clusters or natural groups based on similarity measures. Despite the

improved clustering analysis algorithms, most of the clustering algorithms likewise require number of clusters as an input

parameter. Hence, this paper describes a new method of clustering, called dynamic clustering based on discrete particle

swarm optimization and genetic algorithms (DPSO-GA). The proposed algorithm automatically determines the optimal

number of clusters and at the same time clusters the data set with minimal user intervention. In this method, the discrete

PSO algorithm as well as genetic are employed with a new display solutions which are of variable length to determine the

number of clusters and initial cluster centers for K-Means algorithm to improve its performance. The results obtained from five

UCI evaluation datasets show that the proposed algorithm has higher performance in comparison with other methods.

Keywords: Clustering, Particle swarm optimization, Genetic algorithms.

1. INTRODUCTION

Clustering is one of the important category of Unsupervised

learning methods for grouping objects into groups distinct

from the clusters [ 1]. Cluster grouping is usually defined as

the objects grouped into one cluster are similar according to

the given criteria while they are different with the objects

from other clusters according to the criteria given [ 1] .

In recent years, clustering is widely used in various fields

such as pattern recognition, machine learning and market

analysis [ 2 ]. Analysis of clustering is a significant technique

in data mining [ 3 ] . The primary objective of clustering is to

put similar data within a cluster and then collect the highsimilarity clusters [ 3 ]. Through clustering, valuable

information such as the distribution and characteristics of

data which come from a very large data is obtained and

hidden information is extracted by reducing the complexity of

the data. Thus, the results of clustering can be effectively

applied to solve problems or to help with decision making.

Four types of clustering algorithms have been identified: the

first group of clustering algorithms is based on the idea that

the neighborhood data should be placed in the same

cluster. As it is stated in [ 4 ] this type of algorithms is robust

to recognize the clusters of any shape, but it fails when there

is a small spatial separation between the clusters. The second

class of clustering algorithms has been made by means of the

dynamic changes in the cluster to make the ultimate

solution. These algorithms are the most popular clustering

algorithms contains k-means [ 5 ] and the other category is

based on model-based clustering [ 6 ]. The fourth class

of clustering algorithms characterized by different methods

which optimize the different attributes of data set [ 7 , 8 ].

So far, the speed and efficiency of data processing has been

the focus of clustering development and the most clustering

methods such as k-means need to get the number of clusters

as an input parameter. Appropriate number of clusters may

have an impact on clustering results. For example, the smaller

number of clusters can help the clarity of the

original data structure but the hidden information cannot be

detected. On the other hand, using large number of clusters

may result in high heterogeneity of the same cluster sets but it

ignores the basic structure of data [ 3] .

In recent years, due to their ability to solve various problems

with very little change, evolutionary computation algorithms

are widely used for clustering problems. On the other hand,

these algorithms can manage the limitations in a good

way. Hruschka et al. [ 9 ] have applied evolutionary

algorithms to clustering problems in which the genetic

algorithm is used employed. In this study, a simple coding

procedure manipulates the fixed length individuals. The

objective function maximizes the between-cluster similarity

and between-cluster dissimilarity. In addition, this procedure

finds the true number of clusters based on external

criteria. There are various kinds of evolutionary methods that

have studied clustering problems, such as evolutionary

programming [ 10 ], particle swarm optimization [ 11 , 12 ],

ant colony algorithm [ 13 ] or bee colony algorithm [ 14 ].

When

the

length

of

display

solutions

in evolutionary algorithms is equal to the number of data,

then clustering efficiency will be reduced when there is a

large number of data [ 15 ].So this paper suggests the

dynamic clustering method based

on discrete

particle

swarm optimization and genetic algorithm (DPSO-GA) . The

proposed algorithm automatically determines the optimal

number of clusters and simultaneously clusters the data set

with minimal user intervention.

The proposed method uses discrete PSO algorithm [ 16 ] and

genetic algorithms with a new display solutions which are of

variable length, we use the number of clusters and the

primary centers of the clusters to specify the algorithm of our

K-Means and thus raise the efficiency of clustering.

The rest of the paper is organized as follows: In Section 2,

particle swarm optimization algorithm and discrete particle

swarm optimization algorithm is described. In Section 3 and

Section 4, the genetic algorithms are briefly explained. In

Section 5, the details of the proposed algorithm are

given. Experimental results on five databases are reported in

space Section 6. Finally, concluding remarks are presented in

Section 7.

2.Particle swarm optimization Algorithms (PSO)

PSO Algorithms [17 ] acts on a population of particles.

In PSO the practical solution is called a particle and a fitness

value of each particle is given through the objective

function. Each particle has its own velocity (V id) and

location (X id). The velocity and position of particle is

modified according to its own flying experience and the

population of particles. Thus, each particle moves towards the

probability of best given ( pbest ) and the global best

( gbest ). Initially, the algorithm randomly produces the initial

May-June

2056

ISSN 1013-5316; CODEN: SINTE 8

velocity (V id) and the initial position (X id Points) of each

particle. Each particle remembers its best fitness value, and

further, the individual values are compared to determine the

best of the best called global best ( gbest ). Finally, the

velocity and position of each particle is modified by the

optimal individualal solution and the global best

solution. The optimal solution is determined by repeating the

calculations. Therefore, the update equation of the particle

velocity and position are the relations (1 ) and (2).

𝑣𝑖𝑑 = 𝑤𝑣𝑖𝑑 + 𝑐1 × 𝑟𝑎𝑛𝑑1 × (𝑝𝑏𝑒𝑠𝑡𝑖𝑑 − 𝑋𝑖𝑑 ) + 𝑐2 ×

𝑟𝑎𝑛𝑑2 × (𝑔𝑏𝑒𝑠𝑡𝑑 − 𝑋𝑖𝑑 )

(1)

Xid = X id + vid

(2)

Where c 1 and c 2 are the individualized and global learning

factors respectively and rand 1 and rand 2 are random

variables between 0 and 1, and w 1is the inertia factor.

3.Discrete PSO Algorithm

Discrete PSO algorithm deals with discrete variables and the

length of solutions (particles) are according to the number of

data (p = number of data). Amount of each component of the

particle (vector) is between 1 and n (k = number of clusters)

that represent the clusters that have been assigned to

it. Particle X i records the best acquired place into a separate

particle called pbest i. The Particle populations, in addition,

save the best global position demonstrated by gbest [ 16 ].

The initial population of particles is produced by a set of

randomly generated integers between 1 and n. The particles

are fixed-length strings of integers are encoded as follows:

Xi = {xi1 , xi2 , … , xip }

in

which xij ∈ {1,2, . . , n}, i =

1,2, . . , m , j = 1,2, … , p.

For example, given the number of 3 clusters (k = 3) and 10

data (p = 10), the particle X1 = {1,2,1,1,2,2,1,3,3,2} indicates

a candidate solution that in this way, data solutions 1, 3, 4, 7,

have been assigned to Cluster 1and data 2, 5, 6 and 10 to

Cluster 2 and data 8 and 9 to Cluster 3. The particles

population thus is produced and fitting of each particle is

calculated.

The particle velocity is a set of transfers applied to

solutions. The rate of (transfer) each particle is calculated by

subtracting the two positions (of particles). The difference

between X i and pbesti suggest changes that will be necessary

for particle i to move from X to pbest. μ is the number of

elements against zero in subtraction. If the difference

between an element of X i and pbesti is zero, it means

that there is the possibility to change the location and that

element is subjected to change by the operation described

below.

First, the new vector P is generated in which the locations of

elements in subtracting vectors against zero are stored. We

generate a random number β. This number (β ) corresponds to

the number of changes that must be made on the basis of the

difference between X i and X i pbest i , so β lies in the range

(0, μ ). Then we generate the binary vector Ψ according to

measure μ where each component is associated with a

component of vector P. The β number of elements in the

vectorΨ is randomly initialized with 1 and the remainder is

equal to zero. If the binary number is equal to

1 means that the component must change, and if the binary

number is equal to zero, there is no need to change . A similar

process is run based on the updated particle position and the

Sci.Int.(Lahore),27(3),2055-2061,2015

global best position to calculate the particle in next step [16].

For instance, if

(𝑋𝑖 − 𝑝𝑏𝑒𝑠𝑡𝑖 ) = (1 − 1, 2 − 3, 1 − 2, 1 − 1, 2 − 2, 2 −

1, 1 − 2, 3 − 3, 3 − 2, 2 − 1) =

(0, −1, −1, 0, 0, 1, −1, 0, 1, 1)

(3)

The new P vector is equal to P = (2,3,6,7,9,10) and

suppose μ = 6 , β = 5 and ψ = (0,1,1,1,1,1).

This means that the element positions 3, 6, 7, 9 and 10 in the

vector X i are initialized by the same element positions from

pbest i. vector. The process is repeated with new particle and

gbest until the final particle is achieved.

4.Genetic Algorithms

GA algorithm is based on the idea of "survival of the fittest in

natural selection" suggested by Darwin. Algorithms GA

consists of selection operators 2 , reproduction operators 3 crossover 4 and mutation operators 5 . The fitted genes

passed on the offspring by the best parents of the generation

to reach the optimal solutions during the search relatively

quickly. GAs is employed in many applications.

5.The proposed algorithm

In the proposed method, a new display is used to show the

particles in order to reduce the size of particles. In the

following sections, first the new display and

crossover, mutation and elitism operators will be described

and then the proposed algorithm is given.

5.1

Display

In the proposed method for clustering procedure the coding

method by Falkenaure [ 18 , 19 ] in combination with the

aforementioned coding method [ 15 ] are used.. This means

that

every

individual

has

a

variable

length

for displaying solutions.

In the proposed display each individual is composed of two

parts c = [l, g] in which the first part refers to element section

and the second part shows the grouping section of the

individual.

In element section, every position is assigned to Element in

every individual, every place into a small group of data (sub

X). In this method, the stored value in this position shows the

cluster to which the data in the X group belong. For example,

the different parts of the solution (individual) for clustering

of N subgroups and k clusters is shown by following

relationship (4 ).

l1 , l2 , … , lN |g1 , g 2 , … , g k

(4)

li Indicates a cluster that the data in the subgroup i have been

assigned to it, while the group section represents the list of

tags associated with solution clusters.

lj = g i ⇔ ∀xk ∈ Xj , xk ∈ Gi

(5)

Note that the length of the element for the given problem is

fixed (equal to N), but it is not constant throughout the length

of the group and will vary from individual to

individual. Therefore, the proposed method does not require

the number of clusters as an input parameter but investigates

the fitted value of k based on the objective function.

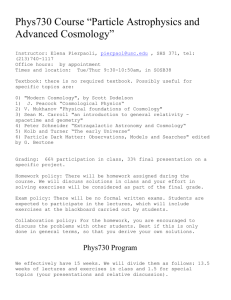

For example, Figure 1 shows a model of proposed method

solution to the problem of clustering. In this problem, of data

clustering lies within five subgroups so the length of the

element equals to 5.In this solution, the subgroup data X 1

belongs to cluster G1 , the subgroup data X 2 and

May-June

Sci.Int.(Lahore),27(3),2055-2061,2015

ISSN 1013-5316; CODEN: SINTE 8

Figure 1 Particle display in the algorithm DPSO-GA.

X 5 belongs to G2 and the subgroup data X 3 and

X 4 belongs to cluster G3 .

With this display methodology, the near data must first be

grouped into Xi subgroups. The closest neighbor for each

data, k methodology can be used to use to get the groups X i.

5.2

Crossover Operator

Crossover operator used in genetic algorithm of this paper is

similar to [ 1 ] which has been accepted for the clustering

problem. This crossover operator creates a child from two

parents composed of the following steps:

Initially, two parents are chosen randomly and crossovering

is done on two points of their group.

Elements related to selected groups of individuals within the

child come first.

Elements from the selected group of second individual enter a

second child if the elements are not initialized previously by

the first individual.

Elements that have not been initialized before are assigned to

the current groups.

Empty clusters are removed.

Current group labels in children are modified from 1 to k.

Relationship (6 ) is another example of the crossover operator

implemented in this paper.

ind1 = [1 3 2 1 4 1 1 2 3 2 1 3 4 2 1|1↓ 2 3↓ 4]

a)

ind2 = [3 1 2 1 3 2 2 1 3 1 2 3 2 2 2|↑ 1 2↑ 3]

b)off = [− 3 2 − − − −2 3 2 − 3 2 3|2 3]

c)off = [− 3 2 1́ − 2́ 2́ 2 3 2 2́ 3 − 2 2́|2 3 1́ 2́]

d)off = [3 3 2 1́ 1́ 2́ 2́ 2 3 2 2́ 3 2 2 2́|2 3 1́ 2́]

e)off = [2 2 1 3 3 4 4 1 2 1 4 2 1 1 4|1 2 3 4]

(6)

5.3

Mutation Operator

Mutation operator makes small modifications in each

individual of population with a low probability of occurring

in A and D to discover the new areas of the search and when

the algorithm is close to the convergence escapes the local

optimum [1].

1-Mutation by dividing cluster: dividing the selected cluster

into two different clusters. Samples which belong to the

main cluster with equal probability are assigned to the new

cluster. Note that one of the new clusters produced keeps its

class tag in the group section while new label of k + 1 is

assigned to the other group.

2057

Selecting the first cluster to divide the cluster

size is associated with larger clusters are more

likely

to

split. For example, the application of this operator on the

individual used in the mutation operator of relation (6 ) is

shown in equation (7 ) in which cluster 1 is selected to divide.

22 1 3 3 4 4 5 2 1 4 2 5 1 4|1 2 3 4 5

(7)

Mutation operator and merging: Merging two existing

clusters selected randomly is done within a cluster. As for the

mutation in cluster division, the probability of cluster

selection depends on the size of clusters. To show the

function of this operator, this operator is implemented on the

individual whom the mutation operator is performed on

(relation (6 )). In this case, suppose two clusters 2 and 4 have

been selected for merging.

2 2 1 3 3 2 2 1 2 1 2 2 1 1 2| 1 2 3

(8)

Note that the two mutation operators are applied in a

sequence (one after another) with independent probabilities.

Comparative form of the mutation operator probability is

implemented. In this case, the mutation probability in the first

generations is lower and get higher in the last generations to

have the opportunity to escape from the local optimum.

j

Pm (j) = Pmi + (Pmf − Pmi )

(9)

TG

Pm (j) is the implemented mutation probability the

j generation, TG indicates the total number of generations

and Pmi and Pmf show the initial and final

values, respectively.

5.4

Replacement and elitism

In the proposed approach, the elitist approach has

been used for the construction of the next generation. In

elitism, the best individuals in j generation automatically are

placed to the j + 1 generation to ensure that the best

individuals found so far remains in the evolution by

the algorithm

5.5 Proposed algorithm

The algorithm proposed in this paper uses a discrete PSO

algorithm and genetic algorithm in which the mutation and

genetic crossover operators are added by discrete PSO

method. It is expected that the combined algorithm increase

the global search ability and escape from local optimal

solution. Discrete PSO algorithm which has memory

can establish the rapid correlations based on current optimal

solution with the velocity of the rapid convergence. But when

the optimal particle lens is a local optimal solution, the global

optimal

solution

cannot

be

found. Therefore,

this paper uses genetics to overcome this drawback. So, a

parent is produced by PSO and then the parent uses the

mutation and crossover operator in genetics to produce

another parent.

Finally, the next generation of parents selected by elitism is

determined. This selection process continues until the

terminating condition is met. Then k-means is used to modify

the cluster centers. Figure 3 shows the flowchart of the

proposed algorithm.

May-June

2058

ISSN 1013-5316; CODEN: SINTE 8

Start

Subgroups formation: The subgroup to which

each data belongs is calculated by use of nearest

neighbors.

Generation of initial

population

Determine the best overall solution (gbest) and the best solution of

each particle (Pbesti)

Update the position of each particle

(Solution)

Update the population 1

Generation of

population 1

Update the population 2

Crossover between the best of

each particle (gbest) and the

general (pbest)

Mutation of the general best (gbest)

Generation of population 2

Elitism selection

Is the end

condition

satisfied?

K-means algorithm

End

Figure 2 Flowchart of the proposed algorithm.

May-June

Sci.Int.(Lahore),27(3),2055-2061,2015

Sci.Int.(Lahore),27(3),2055-2061,2015

ISSN 1013-5316; CODEN: SINTE 8

At first, the algorithm k is used to define the subgroup

nearest neighbors of each data to which they belong. Then,

the particle population is randomly generated based on the

display method mentioned in section 5.1. The length of each

particle is equal to the total number of subgroups where data

is located and the number of clusters that the subgroups were

assigned to, the length of the particles is changing. Then, a

discrete PSO and genetic algorithms are used to find the

initial cluster centers and the number of clusters after a

specified number of iterations. Then K-means is used for

setting more precise (better) results.

The steps of the proposed algorithm are as follows:

Step 1- Parameters values include population size (number of

particles), the maximum number of clusters (N c), and the

mutation rate are initialized.

Step 2- The subgroups of data using algorithm k, the nearest

neighbors are determined.

Step 3- The initial position (X i) of a single particle in a

population is generated according to equation (4 ).

Step 4: The values of the fitness of all particles are calculated.

Step 5- Pbest i (the probability of the best given particle i so

far) and gbest (the global best solution found so far) is

specified.

Step 6: The place of each particle is updated according to the

procedures set forth in Section 3.

Step 7- Perform steps 1 and 2 below on the parent updated in

Step 6:

Copy all the particles to generate the population 1.

Do Crossovering on two points calculated in step 5 on Pbest i

and gbest and the mutation on gbest. The population 2 is

produced by this process.

Step 8- Populations 1 and 2 are combined and the fitness

value of each particle is calculated.

Step 9-Elitism selection is applied on populations 1 and 2 to

generate the next generation

Step 10- Return to step 4 until a predefined number of

iterations will be obtained.

Step 11- According to gbest, the initial cluster centers and the

number of clusters are identified and then the identified

parameters are given to k-means algorithm as an input to

group the data into clusters.

6. EXPERIMENTS

Five data sets are used for clustering analysis and the results

of the proposed algorithm in comparison with DCPG

algorithms [ 3 ] is presented according to these 5 databases.

6.1 Databases

In this paper, different methods are tested on 5 databases

from UCI database. The database properties are provided

in the table .

Table 1

Database Properties

Number of

Number of

Database

data

Properties

Iris

150

4

Wine

178

13

Number of

Classes

3

3

Glass

214

9

6

Seed

210

7

3

Tissue

106

9

6

2059

6.2

Preprocessing

Data preprocessing is done on the basis that the maximum

and minimum values for each attribute in the data set is found

and then each attribute is subtracted from its minimum value

and the obtained value is divided by the difference between

maximum and minimum value of that attribute.

[3]. Equation (10) described methods to obtain a normalized

value of attribute i of data x:

x −x

xi = i min

(10)

xmax −xmin

6.3

Experiments Results and the Analysis

Setting parameters for the algorithm are shown in Table 2:

Table 2

Setting the values of algorithm parameters DPSO-GA

Value

Description of parameters

500

The total number of iterations

0.9- 0.1

Minimum and maximum values of the

mutation rate

20

Population size

1

crossover rates

In addition to the parameters listed in Table 1 , the maximum

number of clusters should be identified for the

implementation of the algorithm. Zhang et al. [ 20 ] stated

that the number of maximum clusters should not be more

than the root of the number of data in the dataset ; so the

maximum values of clusters for iris and Wine databases

equals to 13, for the Glass database is 15, Tissue database

equals to 11 and for the seed database is 15.

The number of experiments for each database is equal to 20

and the result average of these 20 times is reported as the

results of the algorithm.

6.4

Estimation Algorithm

Clustering Quality measurement method is based on the fact

that how well-connected the things are to each other. Former

clusterings stressed high similarity clustering of data set in

the same cluster and attempted to find the shortest distance

between data and the cluster center. In dynamic clustering,

the distance between and within clusters are considered as

measurement index. As a result, the modified index Turi

[ 21 ] is accepted in relation (11 ) .

intra

VI = (c × N(0,1) + 1) ×

(11)

inter

Where (c × N(0,1) + 1) is considered as penalty term to

prevent too many clusters; c is a constant equals to 30 and

N(0,1) shows a Gaussian function with the mean of 0 and the

standard deviation of 1 from the number of clusters.

N(μ, σ) =

1

√2πσ

e

2

(k−μ)2

]

2σ2

[−

(12)

Equation (12 ) shows the Gaussian distribution function with

the mean of µ and the standard deviation of σ.

Turi shows that the result of dynamic clustering is placed in

range 2 of the maximum number of clusters. Penalty term can

prevent the data to group in two clusters and enable the

clustering algorithms to find the appropriate number of

clusters [ 21 ] .

In this experiment, since Seed , Iris and Wine have a small

number of clusters, N (0.1) is accepted to calculate the

VI index and since Glass and Tissue have more clusters N

(2,1) is admitted instead of N (0, 1) to calculate the VI index

May-June

2060

ISSN 1013-5316; CODEN: SINTE 8

Np

Where Np represents the total number of data. Smaller value

of intra indicates better performance of clustering for

algorithms. Finally, inter is the distance between two clusters

defined by the equation (14 ) .

inter = min{‖mk − mkk ‖2 }

(14)

∀k = 1,2, . . , K − 1 , kk = k + 1, . . , K

Inter focuses on the minimum distance between clusters and

is defined as the minimum distance between cluster centers.

Another criterion used to evaluate clustering is the Rand

index [ 22 ] calculated the similarity between the obtained

partition and the optimal solution, it means the percentage of

right decisions taken by the algorithm.

TP+TN

R(U) =

(15)

TP+FP+TN+FN

Where TP and TF are, respectively, the number of correct or

incorrect attributions. When the decision involves assigning

two elements to the same cluster. TN and FN show the

number of correct and incorrect attributions when the

decision involves assigning two elements to different clusters.

Note that R is in the range [ 1,0 ] and the R-value is closer to

1 indicates better quality of solutions .

6.5

EVALUATION OF RESULTS

Clustering performance indicators are the VI measured values

, if the VI is smaller (larger) the results of clustering

algorithm will be better (worse). VI value shows that the

training process obtains convergence algorithm.

Considering the number of clusters, the obtained number of

clusters by the algorithm nearer to the correct number of

clusters shows the better results of clustering algorithm.

In the experiment, some comparisons are also made on the

Rand-index values and the number of clusters. Since the

initial solution is generated randomly, evaluation is done

based on the mean values of 20 times experiments and

standard deviations.

The results based on the Rand index, the number of clusters

and the standard deviation from the implementation of

various algorithms on five databases are reported in

Tables (3)- (7). As a result, the proposed method achieved

better performance in terms of determining the number of

clusters compared to other methods.

Table 3

Comparison of results obtained by different

methods on Iris Database

Rand

0:01 ± 0.8 7

12:03 ± 0.86

Number of clusters

Methods

0.3 ± 3.1

DPSO-GA

0.4 ± 2.95

DGPSO

Table 4

Comparison of results obtained by different

methods on Glass Database

Rand

Number of clusters Methods

0:01 ± 0.66

0.6 ± 5.45

DPSO-GA

.02 ± 0.63

0.0 ± 5

DGPSO

Sci.Int.(Lahore),27(3),2055-2061,2015

Table 5

Comparison of results obtained by different

methods on Seed Database

Rand

Number of clusters Methods

0.0 5 ± 0.84

0.48 ± 2.85

DPSO-GA

0.05 ±0.75

0.37 ±2.15

DGPSO

Table 6

Comparison of results obtained by different

methods on Tissue Database

Rand

Number of clusters Methods

0.02 ± 0.77

0.31 ± 5.1

DPSO-GA

0.08 ±0.70

0:44 ± 5.25

DGPSO

Table 7

Comparison of results obtained by

different methods on Wine Database

Rand

Number of clusters Methods

0.01 ± 0.93

0.22 ± 3.05

DPSO-GA

0.07 ±0.91

0.39 ± 2.95

DGPSO

. The value of Intra is shown in relation (13 ) that is the

average of intra-cluster distance.

1

intra = ∑Kk=1 ∑u∈Ck‖u − mk ‖2

(13)

7. CONCLUSION AND FUTURE WORKS

This paper proposed the DPSO-GA algorithm to solve the

problem of setting the number of clusters and also to find the

appropriate number of clusters according to the data

characteristics. Five UCI datasets are employed with different

numbers of clusters, different sizes and different types of data

to verify that the DPSO-GA algorithm can offer better

clustering results. Using a discrete PSO and GA algorithm

DPSO-GA achieved better results.

Clustering is a data mining method, which focuses on

identifying relationships between data. In future work, we

plan to suggest a multi-objective method based on DPSO-GA

to identify the relationships with multiple criteria.

REFERENCES

1. S. Salcedo-Sanz , Et al. , "A new grouping genetic

algorithm for clustering problems," Expert Systems with

Applications, vol. 39, pp. 9695-9703, 2012 .

2. M. Omran , Et al. , "Dynamic clustering using particle

swarm optimization with application in unsupervised

image classification," in Fifth World Enformatika

Conference (ICCI 2005), Prague, Czech Republic ,

2005, pp. 199-204 .

3. R. Kuo , Et al. , "Integration of particle swarm

optimization and genetic algorithm for dynamic

clustering," Information Sciences , vol. 195, pp. 124140, 2012 .

4. D.-X. Chang , Et al. , "A genetic algorithm with gene

rearrangement for K-means clustering," Pattern

Recognition, vol. 42, pp. 1210-1222, 2009 .

5. T. Kanungo , Et al. , "An efficient k-means clustering

algorithm: Analysis and implementation," Pattern

Analysis and Machine Intelligence, IEEE Transactions

on, vol. 24, pp.881-892, 2002 .

6. GJ McLachlan and KE Basford, "Mixture models.

Inference and applications to clustering," Statistics:

Textbooks and Monographs, New York: Dekker,

1988, vol. 1, 1988 .

May-June

Sci.Int.(Lahore),27(3),2055-2061,2015

7.

8.

9.

10.

11.

12.

13.

14.

15.

ISSN 1013-5316; CODEN: SINTE 8

S. Dehuri , Et al. , "Genetic algorithms for multi-criterion

classification

and

clustering

in

data

mining," International Journal of Computing &

Information Sciences, vol. 4, pp.143-154, 2006 .

S. Mitra and H. Banka " Multi-objective evolutionary

biclustering of gene expression data, " Pattern

Recognition, vol. 39, pp. 2464-2477, 2006 .

ER Hruschka and NF Ebecken, "A genetic algorithm for

cluster analysis," Intelligent Data Analysis, vol. 7,

pp. 15-25, 2003 .

M. Sarkar , Et al. , "A clustering algorithm using an

evolutionary programming-based approach," Pattern

Recognition Letters, vol. 18, pp. 975-986, 1997 .

T. Cura, "A particle swarm optimization approach to

clustering," Expert Systems with Applications, vol. 39,

pp. 1582-1588, 2012 .

S. Das , Et al. , "Automatic kernel clustering with a

multi-elitist

particle

swarm

optimization

algorithm," Pattern

Recognition

Letters, vol. 29,

pp. 688-699, 2008 .

H. Jiang , Et al. , "Ant clustering algorithm with Kharmonic means clustering," Expert Systems with

Applications, vol. 37, pp. 8679-8684, 2010 .

C. Zhang , Et al. , "An artificial bee colony approach for

clustering," Expert Systems with Applications, vol. 37,

pp. 4761-4767, 2010 .

L. Cagnina , Etal. , "An efficient Particle Swarm

Optimization

approach

to

cluster

short

texts," Information Sciences, 2013 .

16.

17.

18.

19.

20.

21.

22.

May-June

2061

H. Sahu , Et al. , "A discrete particle swarm

optimization approach for classification of Indian coal

seams with respect to their spontaneous combustion

susceptibility," Fuel Processing Technology, vol. 92,

pp. 479-485, 2011 .

RC Eberhart and J. Kennedy, "A new optimizer using

particle swarm theory," in Proceedings of the sixth

international symposium on micro machine and human

science 1 995 , pp. 39-43 .

E. Falkenauer, "The grouping genetic algorithmswidening the scope of the GAs," Belgian Journal of

Operations Research, Statistics and Computer

Science, vol. 33, p. 2, 1992 .

E. Falkenauer, Genetic algorithms and grouping

problems : John Wiley & Sons, Inc., 1998 .

D. Zhang , Et al. , "A dynamic clustering algorithm

based on PSO and its application in fuzzy

identification," in Intelligent Information Hiding and

Multimedia

Signal

Processing,

2006.

IIHMSP'06. International Conference on , 2006, pp. 232235 .

RH Turi, Clustering-based colour image segmentation :

Monash University PhD thesis, 2001 .

WM Rand, "Objective criteria for the evaluation of

clustering methods," Journal of the American Statistical

association, vol. 66, pp. 846-850, 1971.