
CONFIDENCE INTERVALS

Where exactly do confidence intervals come from?

Suppose that $X$ is a random variable, possibly multivariate, with a distribution $f(x \mid \theta)$ that depends on the parameter $\theta$. The problem may involve other parameters besides $\theta$. Let $C(X)$ be a set that depends on $X$ only. If $P[\theta \in C(X)] = 1 - \alpha$, then we say that $C(X)$ is a 1 – α confidence set for $\theta$. For many applications, the set is an interval, but it does not have to be.

The problem, of course, is making this work in any actual application. There are several common approaches.

Solve a routine distribution problem

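EXAMPLE 1. Suppose that $X_1, X_2, \ldots, X_n$ is a sample from a normal population with mean $\mu$ and standard deviation $\sigma$, both unknown. It is well known that the statistic

$$ t = \sqrt{n}\,\frac{\bar X - \mu}{s} $$

follows the distribution $t_{n-1}$, the t distribution with $n - 1$ degrees of freedom. Writing $t_{n-1}(\alpha/2)$ for the upper $\alpha/2$ point of this distribution, we have the straightforward probability statement

$$ P\left[ -t_{n-1}(\alpha/2) < \sqrt{n}\,\frac{\bar X - \mu}{s} < t_{n-1}(\alpha/2) \right] = 1 - \alpha . $$

This is routinely rewritten as

$$ P\left[ \bar X - t_{n-1}(\alpha/2)\frac{s}{\sqrt{n}} < \mu < \bar X + t_{n-1}(\alpha/2)\frac{s}{\sqrt{n}} \right] = 1 - \alpha , $$

from which we make the standard 1 – α confidence interval as $\bar X \pm t_{n-1}(\alpha/2)\, s/\sqrt{n}$, meaning

$$ \left( \bar X - t_{n-1}(\alpha/2)\frac{s}{\sqrt{n}},\ \bar X + t_{n-1}(\alpha/2)\frac{s}{\sqrt{n}} \right). $$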
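The recipe above can be checked numerically. The sketch below is a minimal Python version using only the standard library (Python 3.8 or later is assumed); the function names are hypothetical, and the t quantile is computed by Simpson's rule plus bisection purely to keep the example self-contained, not because that is how statistical software does it.

```python
import math
from statistics import mean, stdev


def t_pdf(x, df):
    """Density of Student's t distribution with df degrees of freedom."""
    log_c = (math.lgamma((df + 1) / 2) - math.lgamma(df / 2)
             - 0.5 * math.log(df * math.pi))
    return math.exp(log_c) * (1 + x * x / df) ** (-(df + 1) / 2)


def t_cdf(x, df, steps=2000):
    """P[T <= x]: symmetry about 0 plus Simpson's rule on [0, |x|]."""
    a = abs(x)
    h = a / steps
    total = t_pdf(0.0, df) + t_pdf(a, df)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * t_pdf(i * h, df)
    half = total * h / 3
    return 0.5 + half if x >= 0 else 0.5 - half


def t_upper_point(alpha, df):
    """The point with upper-tail probability alpha (alpha < 0.5), by bisection."""
    lo, hi = 0.0, 1.0
    while 1 - t_cdf(hi, df) > alpha:   # widen until the point is bracketed
        hi *= 2
    for _ in range(60):
        mid = (lo + hi) / 2
        if 1 - t_cdf(mid, df) > alpha:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2


def t_interval(data, alpha=0.05):
    """Two-sided 1 - alpha interval: xbar -/+ t_{n-1}(alpha/2) * s / sqrt(n)."""
    n = len(data)
    xbar, s = mean(data), stdev(data)        # stdev uses the n - 1 divisor
    half = t_upper_point(alpha / 2, n - 1) * s / math.sqrt(n)
    return xbar - half, xbar + half
```

For example, `t_interval([4.1, 5.3, 4.8, 5.9, 5.0, 4.4, 5.6, 4.9, 5.2, 4.7])` gives the 95% interval for a made-up sample of n = 10. Calling `t_upper_point(alpha, n - 1)` instead of `t_upper_point(alpha / 2, n - 1)` gives the one-sided critical value. With SciPy available, `scipy.stats.t.ppf(1 - alpha / 2, n - 1)` would replace the hand-rolled quantile.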

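Note 1a. It’s also true that $P\left[ \sqrt{n}\,(\bar X - \mu)/s < t_{n-1}(\alpha) \right] = 1 - \alpha$, and this would lead to the one-sided interval $\left( \bar X - t_{n-1}(\alpha)\, s/\sqrt{n},\ \infty \right)$. The last version would be read “I am 1 – α confident that $\mu$ exceeds $\bar X - t_{n-1}(\alpha)\, s/\sqrt{n}$.”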


gs2011


Note 1b. The parallel interval on the other side is $\left( -\infty,\ \bar X + t_{n-1}(\alpha)\, s/\sqrt{n} \right)$, and the statement is “I am 1 – α confident that $\mu$ is less than $\bar X + t_{n-1}(\alpha)\, s/\sqrt{n}$.”

Note 1c. The original probability statement used < rather than ≤. Using ≤ would get the closed confidence interval

$$ \left[ \bar X - t_{n-1}(\alpha/2)\frac{s}{\sqrt{n}},\ \bar X + t_{n-1}(\alpha/2)\frac{s}{\sqrt{n}} \right]. $$

Since the random variables are continuous, there is no meaningful distinction, but the open interval is perceived as shorter (better) because it does not contain its endpoints.

Note 1d. We use the word “confidence” rather than “probability” once we have actual numbers. We don’t like to make a statement of the form “The probability is 95% that $\mu$ is in the interval (144, 190).” The interval (144, 190) has nothing random to it, so we don’t like to attribute a probability to it.
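The point of Note 1d can be illustrated by simulation. In the sketch below (hypothetical function name; the population values μ = 170 and σ = 20, the sample size n = 10, and the seed are invented for illustration), μ stays fixed and only the interval moves from sample to sample. The 95% describes how often the procedure captures μ, not any single computed interval.

```python
import math
import random
from statistics import mean, stdev

T_9 = 2.262157  # upper 2.5% point of t with 9 degrees of freedom (n = 10)


def coverage(mu=170.0, sigma=20.0, reps=4000, seed=1):
    """Fraction of simulated 95% t intervals (n = 10) that contain the fixed mu."""
    rng = random.Random(seed)
    n = 10
    hits = 0
    for _ in range(reps):
        sample = [rng.gauss(mu, sigma) for _ in range(n)]
        xbar = mean(sample)
        half = T_9 * stdev(sample) / math.sqrt(n)
        if xbar - half < mu < xbar + half:
            hits += 1
    return hits / reps


print(coverage())
```

Each individual interval either contains μ or it does not; only the long-run hit rate of the procedure is near 0.95.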

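EXAMPLE 2. Suppose that $X_1, X_2, \ldots, X_n$ is a sample from a normal population with mean $\mu$ and standard deviation $\sigma$, both unknown. The statistic

$$ \frac{(n-1)s^2}{\sigma^2} = \frac{\sum_{i=1}^{n} (X_i - \bar X)^2}{\sigma^2} $$

follows the chi-squared distribution on $n - 1$ degrees of freedom. It follows that

$$ P\left[ \frac{(n-1)s^2}{\sigma^2} < \chi^2_{n-1}(\alpha) \right] = 1 - \alpha . $$

The symbol $\chi^2_{n-1}(\alpha)$ is the upper alpha point for the chi-squared distribution with $n - 1$ degrees of freedom. The probability inequality can be written as

$$ P\left[ \sigma^2 > \frac{(n-1)s^2}{\chi^2_{n-1}(\alpha)} \right] = 1 - \alpha . $$

This leads to the 1 – α confidence interval for $\sigma^2$ as

$$ \left( \frac{(n-1)s^2}{\chi^2_{n-1}(\alpha)},\ \infty \right). $$

Note 2a. It’s also true that

$$ P\left[ \frac{(n-1)s^2}{\sigma^2} > \chi^2_{n-1}(1-\alpha) \right] = 1 - \alpha , $$

leading to the 1 – α confidence interval

$$ \left( 0,\ \frac{(n-1)s^2}{\chi^2_{n-1}(1-\alpha)} \right). $$

The symbol $\chi^2_{n-1}(1-\alpha)$ is the lower alpha point for the chi-squared distribution with $n - 1$ degrees of freedom. Printed tables of the lower percentage points are not easy to find, but in any case we use software for these.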


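Note 2b. It’s also true that

$$ P\left[ \chi^2_{n-1}(1-\alpha/2) < \frac{(n-1)s^2}{\sigma^2} < \chi^2_{n-1}(\alpha/2) \right] = 1 - \alpha , $$

leading to the 1 – α confidence interval

$$ \left( \frac{(n-1)s^2}{\chi^2_{n-1}(\alpha/2)},\ \frac{(n-1)s^2}{\chi^2_{n-1}(1-\alpha/2)} \right). $$

In looking at this, it helps to recall that $\chi^2_{n-1}(1-\alpha/2) < \chi^2_{n-1}(\alpha/2)$.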
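The chi-squared points these intervals need are easy to compute in software. The following is a minimal, standard-library-only Python sketch (Python 3.8+ assumed); `chi2_cdf`, `chi2_point` and `variance_intervals` are hypothetical names. The distribution function uses the power series for the regularized lower incomplete gamma function, and the percentage points are found by bisection, so `chi2_point(0.025, 15)` is the upper 2.5% point for 15 degrees of freedom.

```python
import math
from statistics import variance


def chi2_cdf(x, df):
    """P[chi2_df <= x] from the series for the regularized lower incomplete gamma."""
    if x <= 0:
        return 0.0
    a, h = df / 2.0, x / 2.0
    term = total = 1.0 / a
    k = 0
    while term > total * 1e-16:
        k += 1
        term *= h / (a + k)
        total += term
    return math.exp(a * math.log(h) - h - math.lgamma(a)) * total


def chi2_point(upper, df):
    """The point with upper-tail probability `upper`, by bisection."""
    lo, hi = 0.0, df + 1.0
    while 1 - chi2_cdf(hi, df) > upper:   # widen until the point is bracketed
        hi *= 2
    for _ in range(100):
        mid = (lo + hi) / 2
        if 1 - chi2_cdf(mid, df) > upper:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2


def variance_intervals(data, alpha=0.05):
    """The 1 - alpha intervals for sigma^2 from Example 2 and Notes 2a and 2b."""
    n = len(data)
    ss = (n - 1) * variance(data)             # (n - 1) s^2
    return {
        "lower_bound": (ss / chi2_point(alpha, n - 1), math.inf),
        "upper_bound": (0.0, ss / chi2_point(1 - alpha, n - 1)),
        "two_sided": (ss / chi2_point(alpha / 2, n - 1),
                      ss / chi2_point(1 - alpha / 2, n - 1)),
    }
```

With SciPy, `scipy.stats.chi2.ppf(1 - upper, df)` would give the same points as `chi2_point(upper, df)`.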

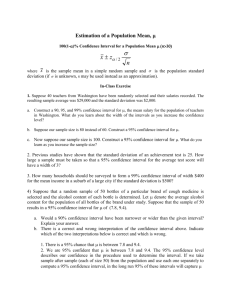

chi-squared density with 15 degrees of freedom:

1

This picture shows the

Distribution Plot

Chi-Square, df=15

0.08

0.07

Density

0.06

0.05

0.04

Density height =

0.0072

Density

height =

0.0195

0.03

0.02

0.01

0.00

0

5

10

15

20

X

25

30

35

40

The lower 2.5% point is 6.26, and the upper 2.5% point is 27.49. The density

heights are very different, however.
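The two density heights marked on the plot can be recomputed directly from the chi-squared density. A short sketch (hypothetical function name `chi2_pdf`), evaluated at the 2.5% points 6.262 and 27.488 for 15 degrees of freedom:

```python
import math


def chi2_pdf(x, df):
    """Chi-squared density x**(k - 1) * exp(-x / 2) / (2**k * Gamma(k)), k = df / 2."""
    k = df / 2.0
    return math.exp((k - 1) * math.log(x) - x / 2 - k * math.log(2) - math.lgamma(k))


# Heights at the lower and upper 2.5% points for 15 degrees of freedom
for point in (6.262, 27.488):
    print(f"x = {point}: density = {chi2_pdf(point, 15):.5f}")
```

The first height should come out near 0.0195 and the second near 0.0072, which is the asymmetry the plot shows.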

Note 2c. The interval of 2b is not going to be the shortest possible. The length of the interval of 2b is

$$ (n-1)s^2\left( \frac{1}{\chi^2_{n-1}(1-\alpha/2)} - \frac{1}{\chi^2_{n-1}(\alpha/2)} \right). $$

We can seek a split of the probability, $\alpha_1 + \alpha_2 = \alpha$, so that

$$ \frac{1}{\chi^2_{n-1}(1-\alpha_2)} - \frac{1}{\chi^2_{n-1}(\alpha_1)} $$

is minimized, and this is not an easy problem. Some will revise the problem to picking upper and lower points that have equal probability density heights.
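A crude way to explore the minimization in Note 2c is a grid search over the split of α between the two tails. The sketch below is an illustration under assumptions, not a method from these notes: it uses 15 degrees of freedom by default and a grid of 99 candidate splits, and it repeats hypothetical series-plus-bisection chi-squared helpers so that the block runs on its own. Here `a1` is the upper-tail share and `a2 = alpha - a1` the lower-tail share.

```python
import math


def chi2_cdf(x, df):
    """P[chi2_df <= x] via the regularized lower incomplete gamma series."""
    if x <= 0:
        return 0.0
    a, h = df / 2.0, x / 2.0
    term = total = 1.0 / a
    k = 0
    while term > total * 1e-16:
        k += 1
        term *= h / (a + k)
        total += term
    return math.exp(a * math.log(h) - h - math.lgamma(a)) * total


def chi2_point(upper, df):
    """The point with upper-tail probability `upper`, by bisection."""
    lo, hi = 0.0, df + 1.0
    while 1 - chi2_cdf(hi, df) > upper:
        hi *= 2
    for _ in range(60):
        mid = (lo + hi) / 2
        if 1 - chi2_cdf(mid, df) > upper:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2


def length_factor(a1, a2, df):
    """Interval length divided by (n - 1) s^2 for an a1 / a2 split of alpha."""
    return 1 / chi2_point(1 - a2, df) - 1 / chi2_point(a1, df)


def best_split(alpha=0.05, df=15, grid=100):
    """Smallest length factor over a1 = alpha * j / grid, j = 1, ..., grid - 1."""
    candidates = []
    for j in range(1, grid):
        a1 = alpha * j / grid
        candidates.append((length_factor(a1, alpha - a1, df), a1))
    return min(candidates)
```

Because the equal split a1 = a2 = α/2 is one of the grid points, the returned length factor can be no larger than the equal-tail one; a finer grid or a one-dimensional optimizer would refine the split.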

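EXAMPLE 3. If $X_1, X_2, \ldots, X_m$ and $Y_1, Y_2, \ldots, Y_n$ are independent samples from normal populations with means $\mu_X$ and $\mu_Y$ and with equal standard deviation $\sigma$, then define the pooled standard deviation as

$$ s_p = \sqrt{\frac{(m-1)s_X^2 + (n-1)s_Y^2}{m+n-2}} . $$

The statistic

$$ t = \sqrt{\frac{mn}{m+n}}\;\frac{(\bar X - \mu_X) - (\bar Y - \mu_Y)}{s_p} $$

has the t distribution with $m + n - 2$ degrees of freedom. This leads to the 1 – α confidence interval for $\mu_X - \mu_Y$ as

$$ \bar X - \bar Y \pm t_{m+n-2}(\alpha/2)\, s_p \sqrt{\frac{m+n}{mn}} . $$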

Note 3a. There are one-sided versions of this interval as well.
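A sketch of the pooled interval in Python (hypothetical function name; standard library only). To keep it short, the critical value, the upper α/2 point of the t distribution with m + n - 2 degrees of freedom, is passed in rather than computed.

```python
import math
from statistics import mean, variance


def pooled_t_interval(x, y, t_crit):
    """(xbar - ybar) -/+ t_crit * s_p * sqrt((m + n) / (m * n)), plus s_p itself.

    t_crit is the upper alpha/2 point of t with m + n - 2 degrees of freedom,
    supplied by the caller (from a table or software); it is not computed here.
    """
    m, n = len(x), len(y)
    sp = math.sqrt(((m - 1) * variance(x) + (n - 1) * variance(y)) / (m + n - 2))
    diff = mean(x) - mean(y)
    half = t_crit * sp * math.sqrt((m + n) / (m * n))
    return diff - half, diff + half, sp
```

For instance, with samples of sizes m = 3 and n = 4 there are 5 degrees of freedom and the upper 2.5% point is about 2.5706, so a 95% interval would be `pooled_t_interval(x, y, 2.5706)`.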

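EXAMPLE 4. If $X_1, X_2, \ldots, X_m$ and $Y_1, Y_2, \ldots, Y_n$ are independent samples from normal populations with means $\mu_X$ and $\mu_Y$ and standard deviations $\sigma_X$ and $\sigma_Y$, then the statistic

$$ F = \frac{s_X^2/\sigma_X^2}{s_Y^2/\sigma_Y^2} $$

has the F distribution with $(m - 1, n - 1)$ degrees of freedom. Then

$$ P\left[ \frac{s_X^2/\sigma_X^2}{s_Y^2/\sigma_Y^2} < F_{m-1,n-1}(\alpha) \right] = 1 - \alpha , $$

which can be rewritten as

$$ P\left[ \frac{\sigma_Y^2}{\sigma_X^2} < \frac{s_Y^2}{s_X^2}\, F_{m-1,n-1}(\alpha) \right] = 1 - \alpha . $$

This leads to the one-sided 1 – α confidence interval for the ratio $\sigma_Y^2/\sigma_X^2$ as

$$ \left( 0,\ \frac{s_Y^2}{s_X^2}\, F_{m-1,n-1}(\alpha) \right). $$

This can be regarded as an upper bound for $\sigma_Y^2/\sigma_X^2$.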


Note 4a. The same setup leads to the 1 – α confidence interval for the ratio $\sigma_X^2/\sigma_Y^2$ as

$$ \left( \frac{s_X^2}{s_Y^2\, F_{m-1,n-1}(\alpha)},\ \infty \right). $$

This is a lower bound for $\sigma_X^2/\sigma_Y^2$.

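Note 4b. Since $(s_Y^2/\sigma_Y^2)/(s_X^2/\sigma_X^2)$ has the F distribution with $(n - 1, m - 1)$ degrees of freedom, the upper bound interval for $\sigma_X^2/\sigma_Y^2$ is $\left( 0,\ \frac{s_X^2}{s_Y^2}\, F_{n-1,m-1}(\alpha) \right)$, corresponding to the lower bound interval for $\sigma_Y^2/\sigma_X^2$ as $\left( \frac{s_Y^2}{s_X^2\, F_{n-1,m-1}(\alpha)},\ \infty \right)$.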

Note 4c. It is also true that

$$ P\left[ F_{m-1,n-1}(1-\alpha/2) < \frac{s_X^2/\sigma_X^2}{s_Y^2/\sigma_Y^2} < F_{m-1,n-1}(\alpha/2) \right] = 1 - \alpha , $$

so that we can construct a two-sided interval. The F distribution, like the chi-squared of Note 2b, is not symmetric, so there are similar problems in constructing this interval.
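The four one-sided bounds of Example 4 and Notes 4a and 4b can be collected in one helper. This is a sketch with a hypothetical name; the F percentage points are inputs, since computing them needs the incomplete beta function, which the Python standard library does not provide.

```python
from statistics import variance


def variance_ratio_bounds(x, y, f_mn, f_nm):
    """One-sided 1 - alpha bounds from Example 4 and Notes 4a and 4b.

    f_mn = upper alpha point of F with (m - 1, n - 1) degrees of freedom and
    f_nm = upper alpha point of F with (n - 1, m - 1) degrees of freedom;
    both are supplied by the caller, not computed here.
    """
    r = variance(x) / variance(y)                                      # s_X^2 / s_Y^2
    inf = float("inf")
    return {
        "upper bound for sigma_Y^2/sigma_X^2": (0.0, f_mn / r),        # Example 4
        "lower bound for sigma_X^2/sigma_Y^2": (r / f_mn, inf),        # Note 4a
        "upper bound for sigma_X^2/sigma_Y^2": (0.0, r * f_nm),        # Note 4b
        "lower bound for sigma_Y^2/sigma_X^2": (1 / (r * f_nm), inf),  # Note 4b
    }
```

As a check on the formulas, with m = n = 3 the degrees of freedom are (2, 2), for which the F distribution function is x/(1 + x), so the upper 5% point is exactly 19. Then `variance_ratio_bounds([2.0, 4.0, 6.0], [1.0, 2.0, 3.0], 19.0, 19.0)` has $s_X^2/s_Y^2 = 4$, and each lower bound is the reciprocal of the matching upper bound for the inverted ratio.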

EXAMPLE 5. Suppose that $X_1, X_2, \ldots, X_m$ and $Y_1, Y_2, \ldots, Y_n$ are independent samples with means $\mu_X$ and $\mu_Y$ and with equal standard deviation $\sigma$. You seek a confidence interval for the parameter ratio $\mu_X/\mu_Y$. This will require solving a distribution problem related to the ratio $\bar X/\bar Y$. The technique goes by the name Fieller’s Theorem. (This is pronounced “filer.”) The technique is complicated and will not be done in detail here. This can also be done when X and Y are correlated, and one of the interesting byproducts is an interval for the x-intercept in a simple regression.


Use Wald intervals based on maximum likelihood estimation and Fisher’s information

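Suppose that the random variable $X$ has a distribution $f(x \mid \theta)$ and that $\hat\theta_{MLE}$ is found through maximum likelihood estimation. Since $\hat\theta_{MLE}$ has a limiting normal distribution with variance $1/I(\theta)$, where $I(\theta)$ is the Fisher information, this variance can be estimated as $1/I(\hat\theta_{MLE})$. It follows that

$$ \hat\theta_{MLE} \pm z_{\alpha/2}\sqrt{\frac{1}{I(\hat\theta_{MLE})}} $$

is an approximate 1 – α confidence interval.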

EXAMPLE 6. Suppose that $X$ is a binomial random variable $(n, p)$. The maximum likelihood estimate for $p$ is $\hat p_{MLE} = X/n$. Also, $I(\hat p_{MLE}) = n / [\hat p_{MLE}(1 - \hat p_{MLE})]$. The confidence interval that results from this is

$$ \hat p_{MLE} \pm z_{\alpha/2}\sqrt{\frac{\hat p_{MLE}(1-\hat p_{MLE})}{n}} . $$

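Note 6a. For this random variable, the likelihood is $L = \binom{n}{x} p^x (1-p)^{n-x}$. This results in $\log L = \log\binom{n}{x} + x \log p + (n-x)\log(1-p)$. Then the score random variable is

$$ S = \frac{d}{dp}\log L = \frac{X}{p} - \frac{n-X}{1-p} . $$

Then

$$ I(p) = \operatorname{Var} S = \operatorname{Var}\left[ \frac{X}{p} - \frac{n-X}{1-p} \right] = \operatorname{Var}\left[ X\left( \frac{1}{p} + \frac{1}{1-p} \right) \right] = \frac{\operatorname{Var} X}{p^2(1-p)^2} = \frac{np(1-p)}{p^2(1-p)^2} = \frac{n}{p(1-p)} . $$

Then $I(\hat p_{MLE}) = n / [\hat p_{MLE}(1 - \hat p_{MLE})]$.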
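The algebra in Note 6a can be verified numerically: since E S = 0, Var S equals E S², which is a finite sum over x = 0, …, n. The helpers below (hypothetical names) compute those sums exactly, so the result can be compared with n/(p(1 − p)).

```python
from math import comb


def mean_score(n, p):
    """E[S] for S = X/p - (n - X)/(1 - p); should be 0, so Var S = E[S^2]."""
    return sum(comb(n, x) * p**x * (1 - p)**(n - x) * (x / p - (n - x) / (1 - p))
               for x in range(n + 1))


def fisher_info_exact(n, p):
    """Var S = E[S^2] for the binomial score, summed over x = 0, ..., n."""
    total = 0.0
    for x in range(n + 1):
        prob = comb(n, x) * p**x * (1 - p)**(n - x)
        score = x / p - (n - x) / (1 - p)
        total += prob * score**2
    return total
```

For example, `fisher_info_exact(25, 0.3)` should agree with 25/(0.3 × 0.7) up to rounding error.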

Note 6b. This very standard interval is also derived as the byproduct of a normal approximation to a discrete random variable. This is sometimes “corrected for continuity” to the interval

$$ \hat p_{MLE} \pm \left( z_{\alpha/2}\sqrt{\frac{\hat p_{MLE}(1-\hat p_{MLE})}{n}} + \frac{1}{2n} \right). $$
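A minimal Python sketch of the interval in Example 6 and its continuity-corrected version (hypothetical function name; `statistics.NormalDist` from Python 3.8+ supplies $z_{\alpha/2}$). The endpoints are not clipped to [0, 1], matching the formulas as written.

```python
import math
from statistics import NormalDist


def wald_interval(x, n, alpha=0.05, continuity=False):
    """p_hat -/+ z_{alpha/2} * sqrt(p_hat (1 - p_hat) / n); widened by 1/(2n) if asked."""
    p_hat = x / n
    z = NormalDist().inv_cdf(1 - alpha / 2)   # upper alpha/2 point of N(0, 1)
    half = z * math.sqrt(p_hat * (1 - p_hat) / n)
    if continuity:
        half += 1 / (2 * n)                   # continuity correction of Note 6b
    return p_hat - half, p_hat + half
```

For x = 18 successes in n = 25 trials, `wald_interval(18, 25)` is centered at 0.72, and the corrected version is wider by 1/25 in total (1/(2n) = 0.02 on each side).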

Note 6c. Since X takes only the n + 1 values from 0 to n, there are only n + 1 different confidence intervals that can result in this problem. This is a disturbing thought when n is small.
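Note 6c can be made concrete. The sketch below (hypothetical names; the interval of Example 6 is assumed, treated as closed) lists the n + 1 possible intervals and adds up the binomial probabilities of those outcomes whose interval contains a given p, which gives the exact coverage probability.

```python
import math
from statistics import NormalDist


def wald(x, n, z):
    """Interval p_hat -/+ z * sqrt(p_hat (1 - p_hat) / n) for x successes."""
    p_hat = x / n
    half = z * math.sqrt(p_hat * (1 - p_hat) / n)
    return p_hat - half, p_hat + half


def all_intervals(n, alpha=0.05):
    """The only n + 1 intervals that can occur, one for each x = 0, ..., n."""
    z = NormalDist().inv_cdf(1 - alpha / 2)
    return [wald(x, n, z) for x in range(n + 1)]


def exact_coverage(p, n, alpha=0.05):
    """Sum of P[X = x | p] over the x whose interval contains p."""
    return sum(math.comb(n, x) * p**x * (1 - p)**(n - x)
               for x, (lo, hi) in enumerate(all_intervals(n, alpha))
               if lo <= p <= hi)
```

With n = 10 and p = 0.5, only the outcomes x = 3, …, 7 give intervals containing 0.5, so the exact coverage is 912/1024 ≈ 0.891, noticeably below the nominal 0.95.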


Invert hypothesis tests

Suppose that the random variable $X$ has a distribution which depends on $\theta$, and perhaps on other parameters as well. A hypothesis test for $H_0: \theta = \theta_0$ at level α is done by designing a rejection region $\mathcal{R}$ for the random variable $X$. If $X \in \mathcal{R}$, then $H_0$ is rejected. The accept set $\mathcal{A}$ is the complement of $\mathcal{R}$. A 1 – α confidence set can be constructed as

$$ \{\, \theta_0 : H_0 \text{ is accepted with data } X \,\} = \{\, \theta_0 : X \in \mathcal{A} \,\}. $$

The set $\mathcal{A}$ of course depends on the $\theta_0$ under test, so we can describe it as $\mathcal{A}(\theta_0)$. Construction of the confidence set requires solving for $\theta_0$ in the condition $X \in \mathcal{A}(\theta_0)$. At this point the subscript on $\theta_0$ is not all that helpful, so we can describe the confidence set in terms of solving for $\theta$ in the relationship $X \in \mathcal{A}(\theta)$.

EXAMPLE 7. Suppose that X is a binomial random variable (n, p). Suppose also that we would like a confidence interval for p in the form ( pL , 1 ). The subscript L denotes “lower.” If we set up the test H0: p ≤ p0 versus H1: p > p0 , it will happen that large values for p0 (near 1) are easily accepted, while small values for p0 lead to rejection. The set of acceptable values for p0 will then be an interval of the form ( pL , 1 ).

Let’s set up the test with n = 25 and with α = 0.05. The most powerful test of H0: p = p0 versus H1: p = p1 (with p1 > p0) can be found by the Neyman-Pearson lemma. The same test will be found for every p1 that is larger than p0 , so the test is uniformly most powerful. This test uses a rejection region $\mathcal{R}$ of the form { X ≥ c }.

If, for example, p0 = 0.60 in the statement of H0, use

P[ X ≥ 19 | p = 0.60 ] = 0.0736

P[ X ≥ 20 | p = 0.60 ] = 0.0294

to set $\mathcal{R}$ = { X ≥ 20 }. Using the smaller value 19 would violate the specified type I error probability of 0.05. It should be observed that data x = 18 would lead to acceptance of H0.
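The choice of c can be automated. A sketch in standard-library Python (hypothetical names) that computes binomial upper tails exactly and returns the smallest c whose tail probability does not exceed α:

```python
from math import comb


def upper_tail(c, n, p):
    """P[X >= c] for X ~ binomial(n, p)."""
    return sum(comb(n, k) * p**k * (1 - p)**(n - k) for k in range(c, n + 1))


def critical_value(n, p0, alpha=0.05):
    """Smallest c with P[X >= c | p0] <= alpha, so R = {X >= c} has level alpha."""
    c = n + 1                        # start from the empty region {X >= n + 1}
    while c > 0 and upper_tail(c - 1, n, p0) <= alpha:
        c -= 1                       # enlarging R keeps the size within alpha
    return c
```

`critical_value(25, 0.60)` should give 20, the value chosen above.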

Suppose that the actual data value is x = 18. Certainly p0 = 0.60 would be an accepted comparison value. Values larger than 0.60 are also accepted. We note that P[ X ≥ 18 | p = 0.60 ] = 0.1536, which is bigger than 0.05.

Would p0 = 0.50 be accepted? Find P[ X ≥ 18 | p = 0.50 ] = 0.0216. This value is below 0.05, so the value 0.50 is not accepted; it must lie outside the confidence set.


At this point, we see that the interval [0.50, 1] is too big for the confidence set and [0.60, 1] is too small.

Would p0 = 0.55 be accepted? Find P[ X ≥ 18 | p = 0.55 ] = 0.0639. This is over 0.05, so this leads to acceptance. This table will help:

| Trial p | P[ X ≥ 18 ] | Comment |
|---|---|---|
| 0.50 | 0.0216 | 0.50 is too small |
| 0.51 | 0.0273 | |
| 0.52 | 0.0342 | |
| 0.53 | 0.0425 | Cross-over this value to next |
| 0.54 | 0.0523 | Cross-over previous value to this |
| 0.55 | 0.0639 | |
| 0.60 | 0.1536 | 0.60 is too big |

The value for pL is somewhere between 0.53 (too small) and 0.54 (too big). The search can be continued to get the next decimal place:

| Trial p | P[ X ≥ 18 ] | Comment |
|---|---|---|
| 0.530 | 0.0425 | 0.530 is too small |
| 0.536 | 0.0482 | |
| 0.537 | 0.0492 | Cross-over this value to next |
| 0.538 | 0.0502 | Cross-over previous value to this |
| 0.540 | 0.0523 | 0.540 is too big |

The confidence interval, to a very close approximation, is [0.538, 1].
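The trial-and-error search above is a root-finding problem: pL solves P[ X ≥ 18 | p ] = 0.05. Because this tail probability increases with p, bisection finds it directly. A sketch with hypothetical names:

```python
from math import comb


def upper_tail(c, n, p):
    """P[X >= c] for X ~ binomial(n, p); increasing in p when c >= 1."""
    return sum(comb(n, k) * p**k * (1 - p)**(n - k) for k in range(c, n + 1))


def lower_limit(x, n, alpha=0.05, iterations=60):
    """pL with P[X >= x | pL] = alpha; p0 above pL is accepted, below is rejected."""
    if x == 0:
        return 0.0                   # every p0 is accepted when x = 0
    lo, hi = 0.0, 1.0
    for _ in range(iterations):
        mid = (lo + hi) / 2
        if upper_tail(x, n, mid) > alpha:
            hi = mid                 # mid is accepted, so pL lies below it
        else:
            lo = mid                 # mid is rejected, so pL lies above it
    return (lo + hi) / 2
```

`lower_limit(18, 25)` lands between 0.537 and 0.538, consistent with the tables.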


Note 7a. This particular procedure has many problems with the two-sided interval. Suppose that we have this binomial random variable, that we wish to test H0: p = p0 versus H1: p ≠ p0, and that our sample size is not large enough to be comfortable with the approximation in Example 6. There are two common strategies for using the exact binomial probabilities.

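The Clopper-Pearson method tests H0: p = p0 versus H1: p > p0 at level α/2 and simultaneously tests H0: p = p0 versus H1: p < p0, also at level α/2. The confidence set consists of those p0 values which are accepted in both tests. Because P[ X ≥ x | p ] increases with p and P[ X ≤ x | p ] decreases with p, this set is always an interval. The problem with this method is that it is conservative: its actual coverage probability is at least 1 – α and often well above it, so the intervals are wider than necessary.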
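Because each one-sided test accepts a half-line of p0 values, the Clopper-Pearson limits can be found by bisection on the two binomial tails. A standard-library sketch with hypothetical names:

```python
from math import comb


def binom_pmf(k, n, p):
    return comb(n, k) * p**k * (1 - p)**(n - k)


def upper_tail(x, n, p):
    """P[X >= x | p], increasing in p for x >= 1."""
    return sum(binom_pmf(k, n, p) for k in range(x, n + 1))


def lower_tail(x, n, p):
    """P[X <= x | p], decreasing in p for x <= n - 1."""
    return sum(binom_pmf(k, n, p) for k in range(x + 1))


def solve_increasing(f, target, tol=1e-10):
    """Bisection on [0, 1] for the p where the increasing function f crosses target."""
    lo, hi = 0.0, 1.0
    while hi - lo > tol:
        mid = (lo + hi) / 2
        if f(mid) < target:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2


def clopper_pearson(x, n, alpha=0.05):
    """Interval of p0 values accepted by both one-sided tests at level alpha/2."""
    if x == 0:
        lo = 0.0
    else:
        lo = solve_increasing(lambda p: upper_tail(x, n, p), alpha / 2)
    if x == n:
        hi = 1.0
    else:
        # lower_tail decreases in p, so its negative is increasing
        hi = solve_increasing(lambda p: -lower_tail(x, n, p), -alpha / 2)
    return lo, hi
```

With x = 18, n = 25 and α = 0.10, the lower limit should agree with the pL of Example 7, since both solve P[ X ≥ 18 | p ] = 0.05.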

The Wilson-Sterne method tests H0: p = p0 versus H1: p ≠ p0 by forming the rejection set from the least likely outcomes: values x are added in increasing order of the probabilities P[ X = x | p0 ], as long as the total probability stays at or below α. For example, to test H0: p = 0.40 versus H1: p ≠ 0.40 with n = 10, we examine the probabilities P[ X = x | p = 0.40 ]:

| x | P[ X = x ] |
|---|---|
| 0 | 0.006047 |
| 1 | 0.040311 |
| 2 | 0.120932 |
| 3 | 0.214991 |
| 4 | 0.250823 |
| 5 | 0.200658 |
| 6 | 0.111477 |
| 7 | 0.042467 |
| 8 | 0.010617 |
| 9 | 0.001573 |
| 10 | 0.000105 |


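These are now to be sorted:

| x | P[ X = x ] | Rejection set $\mathcal{R}$ | P[ X ∈ $\mathcal{R}$ ] under H0 |
|---|---|---|---|
| 10 | 0.000105 | {10} | 0.000105 |
| 9 | 0.001573 | {10, 9} | 0.001678 |
| 0 | 0.006047 | {10, 9, 0} | 0.007725 |
| 8 | 0.010617 | {10, 9, 0, 8} | 0.018342 |
| 1 | 0.040311 | {10, 9, 0, 8, 1} | 0.058653 |
| 7 | 0.042467 | | |
| 6 | 0.111477 | | |
| 2 | 0.120932 | | |
| 5 | 0.200658 | | |
| 3 | 0.214991 | | |
| 4 | 0.250823 | | |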

The test at level 0.05 rejects if X ∈ {10, 9, 0, 8}. The actual α is 0.018342. Enlarging $\mathcal{R}$ by including { X = 1 } would bring the type I error probability over 0.05. The actual type I error probability of 0.018342 is not divided equally between the two tails: 0.012295 comes from the upper tail {8, 9, 10} and 0.006047 from the lower tail {0}.
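The construction of the Wilson-Sterne rejection region can be sketched in a few lines of Python (hypothetical function name). Outcomes are added in increasing order of probability under H0 until the next one would push the size above α; ties in probability are not treated specially here.

```python
from math import comb


def sterne_rejection_set(n, p0, alpha=0.05):
    """Add outcomes in increasing order of P[X = x | p0] while the size stays <= alpha."""
    probs = {x: comb(n, x) * p0**x * (1 - p0)**(n - x) for x in range(n + 1)}
    region, size = [], 0.0
    for x in sorted(probs, key=probs.get):
        if size + probs[x] > alpha:
            break                    # the next outcome would exceed the level
        region.append(x)
        size += probs[x]
    return region, size
```

`sterne_rejection_set(10, 0.40)` reproduces the region {10, 9, 0, 8} with size about 0.01834.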

It can be shown that the Wilson-Sterne procedure is superior to Clopper-Pearson. Most people find Clopper-Pearson easier to understand, and it gets much more use.

A confidence set based on Wilson-Sterne is a simple connected interval.
