Final Project - Alexander Hardt

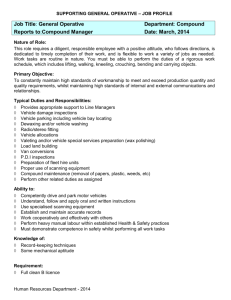


Total Vehicle Sales Forecast
Final Project
Alexander Hardt
Dr. Holmes
Economic Forecasting 309-01W
Summer II
8/6/2013

Executive Summary

For this project I created a twelve-month forecast of Total Vehicle Sales in the United States using four different methods: exponential smoothing, decomposition, ARIMA, and multiple regression. To do so, I chose one dependent (Y) variable and two independent (X) variables. I then collected 80 monthly observations for each variable: 68 to fit the models and 12 held out to test the forecasts. This historical data allowed me to build four forecasting models that predict future vehicle sales with relatively low error. Judged by the error measures, the best model was Winters' exponential smoothing method. It had the lowest MAPE and the lowest RMSE in both the fit period and the forecast period.

Introduction

I chose Total Vehicle Sales in the United States as the Y variable because I have a strong interest in the auto industry and would like to work for a German carmaker in the future. The auto industry is very vulnerable to the state of the economy, because people tend to postpone big-ticket purchases like a car when times are tough. The variables that drive changes in vehicle sales should therefore be indicators of economic performance. To forecast the dependent variable Y (Total Vehicle Sales), I chose two independent variables, X1 and X2, that are closely related to Y: non-farm employment and the personal saving rate. My hypothesis for the first X variable is that employment is positively related to vehicle sales. The more people are in the workforce, the more people earn the income needed for a big-ticket purchase like a personal car.
My hypothesis for the second X variable is that the personal saving rate has an inverse linear relationship with vehicle sales. The more people hold on to their disposable income, the less spending occurs, which hurts vehicle sales.

The three variables are measured on completely different scales, so their means, ranges, and standard deviations differ widely. To anticipate forecasting difficulty, it is important to look at the variation about the mean of each variable.

- Total Vehicle Sales (Y) has a mean of 1130.5, a range of 919.9, and a standard deviation of 243.0. A common guideline is that the standard deviation should be less than 50% of the mean to avoid forecasting difficulty. Here it is about 21% (243.0 / 1130.5), so I should be able to get a fairly accurate forecast.
- Employees non-farm (X1) has a mean of 133,784, a standard deviation of 3,463 (about 3% of the mean), and a narrow range of 11,769. These are very good numbers for an independent variable.
- The Personal Saving Rate (X2) has a mean of 4.157 and a standard deviation of 1.389 (about 33% of the mean), which also indicates that I should not run into difficulties producing a forecast.

Below are descriptive statistics for all the variables used:

Descriptive Statistics: Total Vehicle Sales, Employees non farm, Saving Rate

| Variable | N | N* | Mean | SE Mean | StDev | Minimum | Q1 | Median | Q3 | Maximum |
|---|---|---|---|---|---|---|---|---|---|---|
| Total Vehicle Sales | 68 | 0 | 1130.5 | 29.5 | 243.0 | 670.3 | 967.4 | 1090.3 | 1282.5 | 1590.2 |
| Employees non farm | 68 | 0 | 133784 | 420 | 3463 | 127374 | 130916 | 133209 | 137029 | 139143 |
| Saving Rate | 68 | 0 | 4.157 | 0.168 | 1.389 | 2.000 | 2.800 | 4.350 | 5.200 | 8.300 |

The time series plot of Total Vehicle Sales (Y) shows a slight negative trend over the 68 observations studied.
The autocorrelation function supports this trend: its coefficients remain fairly large for several lags before slowly declining. There may also be a seasonal pattern in Y, because the autocorrelation function has spikes at lags 12 and 24. This pattern can plausibly be explained by the holiday sales events that car dealers hold during the Christmas season.

The time series plot of Employees non-farm (X1) closely resembles that of Y. It also shows a negative trend and seasonality, as well as a noticeable cyclical pattern. The Personal Saving Rate (X2) shows only a slight positive trend and a cycle. It has no seasonality because the data I found was seasonally adjusted. Below are the time series plots for all three variables:

[Figure: Time Series Plot of Total Vehicle Sales (x-axis: Index; y-axis: Total Vehicle Sales)]
[Figure: Time Series Plot of Employees non farm (x-axis: Index; y-axis: Employees non farm)]
[Figure: Time Series Plot of Saving Rate (x-axis: Index; y-axis: Saving Rate)]

Scatter plots are a useful tool for showing the relationship between Y and each X variable. Both X variables have a moderate to strong linear relationship with Y, but the two relationships run in different directions. Employees and Vehicle Sales are positively related, while the Personal Saving Rate and Vehicle Sales show a fairly strong negative linear relationship. In each scatter plot, the sign of the fitted line's slope shows the direction of the relationship. How tightly the points cluster around that line shows its strength. Many values lie very close to the regression line, which indicates a strong linear relationship.
However, a few values lie far from the regression line, which shows that there are also some extreme observations. Below are the scatter plots for each XY relationship:

[Figure: Scatterplot of Total Vehicle Sales vs Employees non farm]
[Figure: Scatterplot of Total Vehicle Sales vs Saving Rate]

When researching X variables that help forecast Y, the correlation matrix is one of the forecaster's most important tools. It reports two values for each pair of variables:

- The Pearson correlation measures the strength of the linear relationship between the two variables.
- The p-value gives the probability of observing a correlation at least that large if the true correlation were zero. It is an important factor in deciding whether to use a given X variable. One wants at least 95% confidence, that is, a p-value below 0.05.

Both X variables have strong Pearson correlations with Y, and their p-values are reported as 0.000. This makes them significant and acceptable to use in the forecast. Furthermore, the correlation between the two X variables (-0.438) is weaker in absolute value than either X variable's correlation with Y (0.673 and -0.608). These correlations are logical and support the hypotheses made earlier, so I will go on with the forecast. Below is the correlation matrix for all variables:

Correlations: Total Vehicle Sales, Employees non-farm, Saving Rate

| | Total Vehicle Sales | Employees non-farm |
|---|---|---|
| Employees non-farm | 0.673 (0.000) | |
| Saving Rate | -0.608 (0.000) | -0.438 (0.000) |

Cell contents: Pearson correlation (P-Value)

Body

Exponential Smoothing

The correct exponential smoothing method depends on the characteristics of the Y data. As the time series plot below shows, the series has a negative trend and seasonality, visible as repeated annual spikes in the data.
[Figure: Time Series Plot of Total Vehicle Sales]

To analyze the characteristics of the Y data further, it is helpful to look at the autocorrelation function. The slowly decreasing autocorrelation coefficients indicate that the series has a trend, consistent with the negative trend visible in the time series plot. The spike at lag 12 shows some seasonality, although it is not significant. There also appears to be some kind of cycle, since the coefficients repeatedly rise and fall.

[Figure: Autocorrelation Function for Total Vehicle Sales (with 5% significance limits for the autocorrelations), lags 1 to 24]

The series has both trend and seasonality, so the best method to use is Winters' exponential smoothing. It is the only one of the standard exponential smoothing methods that models a seasonal component. The plot for Total Vehicle Sales using Winters' method is shown below:

[Figure: Winters' Method Plot for Total Vehicle Sales, Multiplicative Method (Actual vs. Fits). Smoothing constants: Alpha (level) 0.6, Gamma (trend) 0.1, Delta (seasonal) 0.8. Accuracy measures: MAPE 5.89, MAD 63.70, MSD 7698.66]

The smoothing constants that gave the lowest MAPE are alpha (level) = 0.6, gamma (trend) = 0.1, and delta (seasonal) = 0.8. A table showing the Y data (excluding the hold-out period), the fitted values, and the corresponding residuals is included in the appendix. This model attains the following goodness-of-fit measures: MAPE = 5.89%, MAD = 63.70, MSD = 7698.66, and RMSE = 87.74 (the square root of the MSD). These accuracy measures are good and indicate that an accurate forecast can be made. The fitted values track the Y data closely, which indicates that trend, cycle, and seasonality have been accounted for.
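The sketch below shows how the three smoothing constants work together in multiplicative Winters' (Holt-Winters) smoothing. It is not Minitab's implementation. The function name `winters_multiplicative` is hypothetical, and the simple initialization is an assumption: the level comes from the first season, the trend from the change between the first two seasons, and the seasonal factors from the first season. As a result, the sketch will not reproduce the reported MAPE of 5.89% exactly.

```python
def winters_multiplicative(y, season_len, alpha, gamma, delta, horizon):
    """Multiplicative Winters' (Holt-Winters) exponential smoothing.

    Returns (fits, forecasts): one-step-ahead fitted values for every
    observation in y, and forecasts for the next `horizon` periods.
    The initialization is a simple textbook choice (an assumption),
    not necessarily the one Minitab uses.
    """
    if len(y) < 2 * season_len:
        raise ValueError("need at least two full seasons to initialize")
    first, second = y[:season_len], y[season_len:2 * season_len]
    level = sum(first) / season_len
    # Average per-period change between the first two seasons.
    trend = (sum(second) - sum(first)) / season_len ** 2
    seasonals = [obs / level for obs in first]

    fits = []
    for t, obs in enumerate(y):
        # Most recent factor for this position in the season
        # (the initial factor during the first season).
        s = seasonals[t % season_len]
        fits.append((level + trend) * s)  # forecast made before seeing obs
        new_level = alpha * obs / s + (1 - alpha) * (level + trend)
        trend = gamma * (new_level - level) + (1 - gamma) * trend
        seasonals[t % season_len] = delta * obs / new_level + (1 - delta) * s
        level = new_level

    n = len(y)
    # Extend the last level and trend, reusing the latest seasonal factors.
    forecasts = [(level + h * trend) * seasonals[(n + h - 1) % season_len]
                 for h in range(1, horizon + 1)]
    return fits, forecasts
```

To mirror the constants chosen above, call it with `season_len=12, alpha=0.6, gamma=0.1, delta=0.8` and `horizon=12`. You can then compare the returned fits with the actual data using the MAPE and RMSE discussed in this report.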
Below are a time series plot of the Y data compared with the fitted values and a time series plot of the residuals:

[Figure: Time Series Plot of Total Vehicle Sales, FITS1]
[Figure: Time Series Plot of RESI1]

The time series plot of the residuals shows no significant signs of trend, cycle, or seasonality. Most values are around 0, which suggests randomness. To check for randomness, the autocorrelation function of the residuals is helpful:

[Figure: Autocorrelation Function for RESI1 (with 5% significance limits for the autocorrelations)]

The autocorrelation function shows no significant autocorrelation in the residuals, because no coefficients exceed the significance limits. In addition, the Ljung-Box Q (LBQ) statistic at lag 24 is 33.33, below the chi-square critical value of 36.41.

The histogram of the residuals shows a slight skew to the left. The residual mean of 5.351 is positive, and since residuals are actual minus fitted values, this points to a slight underestimation bias. Relative to the residual standard deviation of 88.23, however, the mean is very close to zero. The residuals can therefore be regarded as random and approximately normally distributed.

[Figure: Histogram of RESI1 with normal curve (Mean 5.351, StDev 88.23, N 68)]

These checks indicate that the residuals are random. The trend, cycle, and seasonality present in the original series do not appear in the residuals. This shows that the model successfully captures the systematic variation in Y, so it should be able to generate an accurate forecast.
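The Ljung-Box (LBQ) check above compares a weighted sum of squared residual autocorrelations against a chi-square critical value. The sketch below assumes the standard definition Q = n(n + 2) * sum of r_k^2 / (n - k) for k = 1 to m. The function names are hypothetical, and the critical values are hard-coded from a chi-square table rather than computed.

```python
def autocorrelation(x, lag):
    """Sample autocorrelation r_lag using the overall mean and variance (standard ACF estimator)."""
    n = len(x)
    mean = sum(x) / n
    denom = sum((v - mean) ** 2 for v in x)
    num = sum((x[t] - mean) * (x[t + lag] - mean) for t in range(n - lag))
    return num / denom


def ljung_box_q(residuals, max_lag):
    """Ljung-Box Q = n(n + 2) * sum_{k=1..max_lag} r_k^2 / (n - k)."""
    n = len(residuals)
    if not 0 < max_lag < n:
        raise ValueError("max_lag must be between 1 and n - 1")
    return n * (n + 2) * sum(autocorrelation(residuals, k) ** 2 / (n - k)
                             for k in range(1, max_lag + 1))


# Chi-square 95th percentiles from a standard table. Degrees of freedom
# equal the lag here, matching the checks in the text (no parameters subtracted).
CHI2_CRITICAL_95 = {10: 18.307, 24: 36.415}


def looks_random(residuals, max_lag):
    """True when Q stays below the tabulated 5% critical value for df = max_lag."""
    return ljung_box_q(residuals, max_lag) < CHI2_CRITICAL_95[max_lag]
```

A strongly alternating series such as `[1.0, -1.0] * 10` has a large Q and fails the check. The Winters' residuals (Q = 33.33 against 36.415 at lag 24) pass it.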
Below are the one-year forecast and a time series plot of the Y data including the hold-out period (index 69 to 80):

[Figure: Winters' Method Plot for Y Total Vehicle Sales, Multiplicative Method (Actual, Fits, Forecasts, 95.0% PI). Smoothing constants: Alpha (level) 0.6, Gamma (trend) 0.1, Delta (seasonal) 0.8. Accuracy measures: MAPE 5.89, MAD 63.70, MSD 7698.66]
[Figure: Time Series Plot of Y Forecast (index 1 to 80)]

For the hold-out period, the forecast has MAPE = 5.16809% and RMSE = 74.7947. The one-year forecast and the actual hold-out data are very close to each other and cross multiple times. There is therefore little systematic over- or underestimation.

[Figure: Time Series Plot of Vehicle HO, FORE1, UPPE1, LOWE1 (hold-out actuals, forecast, and upper and lower prediction limits)]

The forecast-period residuals appear fairly random, except at index 7, where the residual is a large negative value. The autocorrelation function shows no significant systematic pattern in the residuals, because all coefficients are well within the significance limits. Furthermore, the LBQ value of 7.13 at lag 10 is far below the chi-square critical value of 18.3070. Below are the time series plot of the forecast residuals and their autocorrelation function:

[Figure: Time Series Plot of Frcst Resid]
[Figure: Autocorrelation Function for Frcst Resid (with 5% significance limits for the autocorrelations)]

The error measures improved from the fit period to the hold-out period.
The MAPE improved from 5.89% to 5.17%, and the RMSE fell from 87.74 to 74.79. The forecast residuals also show that trend, cycle, and seasonality have been accounted for, so the forecast shows no evident bias. These results show that the forecast accuracy is acceptable and the model is successful.

Decomposition

The results table of the decomposition method is included in the appendix. To determine the seasonal component of the Y data, one needs to look at the seasonal indices. The seasonal indices for the 12 periods (monthly data) and a time series plot of them are displayed below:

Seasonal Indices

| Period | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Index | 1.14258 | 1.01495 | 1.05177 | 1.13192 | 0.94791 | 0.92322 | 0.84718 | 1.06925 | 0.79222 | 0.91807 | 1.12809 | 1.03285 |

[Figure: Time Series Plot of SeasInd (seasonal index by period 1 to 12)]

The seasonal indices show that vehicle sales follow a clear annual pattern. I assume the periods are counted from the first observation. Because the series begins in May 2006, period 1 then corresponds to May, period 8 to December, and period 9 to January. On that basis:

- Sales are relatively high in March, May, August, and December, with indices of about 1.07 to 1.14.
- Sales are relatively low in January (0.79), November (0.85), and October and February (about 0.92).

The seasonal analysis helps adjust the Y data for seasonality. Below is a time series plot comparing the Y data with the deseasonalized data from the decomposition:

[Figure: Time Series Plot of Y Total Vehicle Sales, DESE2]

The deseasonalized series contains far fewer spikes and extreme up-and-down movements than the original Y data. This shows that the strong seasonality in the original data has been removed. The goodness of fit as measured by the MAPE and RMSE, however, is much worse than for the previous model. The MAPE rose to 12.7% and the RMSE to 157.146.
Error measures this high might be unacceptable, but the forecast can be adjusted with cycle factors. To assess the residuals of the fit period, one needs to look at their time series plot, autocorrelation function, and histogram:

[Figure: Time Series Plot of RESI2]
[Figure: Autocorrelation Function for RESI2 (with 5% significance limits for the autocorrelations)]
[Figure: Histogram of RESI2 with normal curve (Mean -0.5784, StDev 158.3, N 68)]

Based on these graphs, the residuals are clearly not random. The autocorrelation function reveals significant trend and some cycle. The very high LBQ value of 245 at lag 12 confirms that the residuals are autocorrelated rather than random. The residual mean, however, is very close to 0 (-0.5784). Because it is negative, there is a slight tendency to over-forecast. The one-year forecast for the hold-out period using the decomposition model is displayed below:

[Figure: Time Series Decomposition Plot for Y Total Vehicle Sales, Multiplicative Model (Actual, Fits, Trend, Forecasts). Accuracy measures: MAPE 12.7, MAD 127.3, MSD 24694.8]

Because the error measures observed earlier were too high, I adjusted the forecast with the last cycle factor. The time series plot of the Y data including the adjusted one-year forecast is displayed below:

[Figure: Time Series Plot of Y w adj fcst (index 1 to 80)]

The adjustment was necessary because the decomposition model did not pick up the cycle. The last cycle factor in the Y data was 1.33, so the adjustment raised the forecast by about 33%.
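The seasonal indices and the cycle-factor adjustment can be sketched with a classical ratio-to-moving-average decomposition. This is a simplified stand-in, not Minitab's exact procedure. For example, Minitab may use medians and a fitted trend line when computing the indices, so the hypothetical functions below will not reproduce the reported indices exactly. The report also does not state how the cycle factor of 1.33 was computed, so here it is simply passed in as a given number.

```python
def centered_moving_average(y, period=12):
    """2 x period centered moving average for an even period; None where the window is incomplete."""
    if period % 2:
        raise ValueError("this sketch assumes an even period")
    half = period // 2
    cma = [None] * len(y)
    for t in range(half, len(y) - half):
        window = y[t - half:t + half + 1]  # period + 1 values centered on t
        cma[t] = (0.5 * window[0] + sum(window[1:-1]) + 0.5 * window[-1]) / period
    return cma


def seasonal_indices(y, period=12):
    """Average ratio of each observation to its moving average, normalized to mean 1."""
    if len(y) < 2 * period:
        raise ValueError("need at least two full seasons")
    cma = centered_moving_average(y, period)
    ratios = [[] for _ in range(period)]
    for t, (obs, avg) in enumerate(zip(y, cma)):
        if avg is not None:
            ratios[t % period].append(obs / avg)
    raw = [sum(r) / len(r) for r in ratios]
    scale = period / sum(raw)  # make the indices average exactly 1
    return [v * scale for v in raw]


def deseasonalize(y, indices):
    """Divide each observation by its seasonal index (multiplicative model)."""
    return [obs / indices[t % len(indices)] for t, obs in enumerate(y)]


def cycle_adjust(forecasts, cycle_factor):
    """Scale a decomposition forecast by a cycle factor; 1.33 raises it by 33%."""
    return [f * cycle_factor for f in forecasts]
```

With these helpers, `cycle_adjust(forecasts, 1.33)` reproduces the kind of 33% upward adjustment described above. The choice of factor is the forecaster's judgment, not something the decomposition itself estimates.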
The adjusted forecast improved the accuracy measures significantly: the MAPE fell to 8.22103% and the RMSE to 130.279. The following time series plot shows how close the new forecast is to the actual hold-out data:

[Figure: Time Series Plot of Vehicle HO, New Fcst]

Finally, consider the time series plot of the forecast residuals. They are much closer to 0 than before the cycle-factor adjustment. However, trend and cycle remain in the residuals.

[Figure: Time Series Plot of FcstResid]

ARIMA

[Figure: Time Series Plot of Y Total Vehicle Sales]
[Figure: Autocorrelation Function for Y Total Vehicle Sales (with 5% significance limits for the autocorrelations)]

The time series plot and autocorrelation function of Y show that the data must be differenced to make it stationary. Since the Y data has a significant trend, the first step is to take a first (regular) difference. The time series plot and autocorrelation function of the first difference are:

[Figure: Time Series Plot of 1 Tren Dif]
[Figure: Autocorrelation Function for 1 Tren Dif (with 5% significance limits for the autocorrelations)]

No significant trend remains, so one regular difference is sufficient for the non-seasonal part of the model.
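Regular and seasonal differencing are simple subtractions. The sketch below uses a hypothetical helper. It also confirms the observation count in the ARIMA output below: one regular difference and one seasonal difference of order 12 reduce the 68 fit-period observations to 55.

```python
def difference(y, lag=1):
    """Return y[t] - y[t - lag]; lag=1 is a regular difference, lag=12 a seasonal one."""
    if lag < 1:
        raise ValueError("lag must be at least 1")
    return [y[t] - y[t - lag] for t in range(lag, len(y))]


# First observations of Total Vehicle Sales from Appendix A (May to Aug 2006).
sales = [1533.8, 1545.3, 1531.1, 1530.1]
first_diff = difference(sales)  # regular difference: removes a linear trend
print([round(d, 1) for d in first_diff])  # [11.5, -14.2, -1.0]

# A 68-observation series, differenced regularly and then seasonally.
stationary = difference(difference(list(range(68))), lag=12)
print(len(stationary))  # 55
```

Applying the regular difference first and the seasonal one second matches the "1 regular, 1 seasonal of order 12" line in the model output. Because both operations are linear, the order does not change the result.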
To determine which model to use, we also need the PACF of the first difference:

[Figure: Partial Autocorrelation Function for 1 Tren Dif (with 5% significance limits for the partial autocorrelations)]

The ACF and PACF of the first difference indicate an MA(1) model: the ACF has one significant negative spike, and the PACF coefficients slowly approach zero. Since the data also has seasonality, we also take a seasonal difference of order 12. The time series plot and ACF of the seasonal difference are shown below:

[Figure: Time Series Plot of 1 Seas Dif]
[Figure: Autocorrelation Function for 1 Seas Dif (with 5% significance limits for the autocorrelations)]

One seasonal difference is enough to remove the significant seasonality and make the series stationary. To choose the seasonal model term, we look at the PACF below:

[Figure: Partial Autocorrelation Function for 1 Seas Dif (with 5% significance limits for the partial autocorrelations)]

The seasonal part of the model is also MA(1). The best ARIMA specification is therefore (0,1,1) for both the non-seasonal and the seasonal part, that is, ARIMA(0,1,1)(0,1,1) with a seasonal period of 12.
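The chosen ARIMA(0,1,1)(0,1,1) model with seasonal period 12 can be written out with the backshift operator B, where B Y_t = Y_{t-1}. This assumes Minitab's sign convention, in which moving-average terms enter with a minus sign. Under that convention, θ₁ and Θ₁ correspond to the MA 1 and SMA 12 estimates reported below (0.3938 and 0.8476). No constant term is included:

```latex
(1 - B)(1 - B^{12})\,Y_t = (1 - \theta_1 B)(1 - \Theta_1 B^{12})\,\varepsilon_t

% Expanding both sides gives the forecasting equation:
Y_t = Y_{t-1} + Y_{t-12} - Y_{t-13}
      + \varepsilon_t - \theta_1 \varepsilon_{t-1} - \Theta_1 \varepsilon_{t-12}
      + \theta_1 \Theta_1 \varepsilon_{t-13}
```

To forecast, future errors are set to zero and past errors are replaced by the model's residuals.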
Running this model gives the following results:

Final Estimates of Parameters

| Type | Coef | SE Coef | T | P |
|---|---|---|---|---|
| MA 1 | 0.3938 | 0.1263 | 3.12 | 0.003 |
| SMA 12 | 0.8476 | 0.1199 | 7.07 | 0.000 |

Differencing: 1 regular, 1 seasonal of order 12
Number of observations: Original series 68, after differencing 55
Residuals: SS = 451577 (backforecasts excluded), MS = 8520, DF = 53

Modified Box-Pierce (Ljung-Box) Chi-Square statistic

| Lag | Chi-Square | DF | P-Value |
|---|---|---|---|
| 12 | 10.8 | 10 | 0.371 |
| 24 | 31.8 | 22 | 0.081 |
| 36 | 44.0 | 34 | 0.116 |
| 48 | 51.2 | 46 | 0.277 |

The MA 1 and SMA 12 coefficients have t-values above 1.96 and p-values below 0.05 (0.003 and 0.000). The Ljung-Box p-values at all reported lags are also above 0.05. These results are very good: they indicate that the model coefficients are significant and that the residuals show no remaining autocorrelation. The ARIMA model should therefore produce good forecasts. To determine the accuracy for the fit period, we look at the MAPE and RMSE:

MAPE = 6.51131%
RMSE = 90.6118

The fit MAPE of 6.5% and the RMSE of 90.6118 are both decent, so the model is accurate based on its error measures. As noted above, the p-values for the LBQ statistics at lags 12, 24, 36, and 48 are all above 0.05, which allows us to declare the residuals random. Running the ARIMA model produces a 12-month forecast. A time series plot of the forecast residuals is displayed below:

[Figure: Time Series Plot of F Resid]

The LBQ values suggest that the forecast residuals are random, because they are below the chi-square critical value at lag 12. However, the time series plot does indicate a slight trend. The accuracy measures for the forecast (hold-out) period are displayed below:

MAPE = 6.49819%
RMSE = 96.7536

The MAPE is essentially unchanged, at 6.51% in the fit period and 6.50% in the forecast period. The RMSE rose from 90.61 to 96.75. A modest deterioration like this is normal out of sample, and the ARIMA model can still be considered accurate.
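All four models are compared on MAPE and RMSE, alongside Minitab's MAD and MSD. The sketch below computes these measures, assuming residuals are defined as actual minus predicted; the function name is hypothetical. It also shows that RMSE is the square root of MSD, which is how the Winters' RMSE of 87.74 follows from its MSD of 7698.66.

```python
import math


def accuracy_measures(actual, predicted):
    """Return MAPE (%), MAD, MSD, and RMSE for paired actual and predicted values."""
    if len(actual) != len(predicted) or not actual:
        raise ValueError("need two non-empty sequences of equal length")
    errors = [a - p for a, p in zip(actual, predicted)]  # residual = actual - predicted
    n = len(errors)
    mape = 100.0 * sum(abs(e / a) for e, a in zip(errors, actual)) / n
    mad = sum(abs(e) for e in errors) / n
    msd = sum(e * e for e in errors) / n
    return {"MAPE": mape, "MAD": mad, "MSD": msd, "RMSE": math.sqrt(msd)}


print(accuracy_measures([200.0, 400.0], [150.0, 350.0]))
# {'MAPE': 18.75, 'MAD': 50.0, 'MSD': 2500.0, 'RMSE': 50.0}

# RMSE reported for Winters' method, recovered from its MSD:
print(round(math.sqrt(7698.66), 2))  # 87.74
```

MAPE is scale-free, so it allows comparison across series. RMSE stays in the units of vehicle sales and penalizes large misses more heavily.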
A time series plot of the Y variable including the 12-month forecast is shown below:

[Figure: Time Series Plot of Y Total Vehicle Sales_1 (index 1 to 80, including the 12-month forecast)]

The forecast looks reasonable, because it follows the same pattern as the historical observations.

Multiple Regression

Before running a multiple regression model, it is important to look at the XY relationships using scatter plots and a correlation matrix. As discussed in the Introduction, both X variables have a moderate to strong linear relationship with Y. Employment is positively related to vehicle sales, and the personal saving rate is negatively related to it. Most values lie close to the fitted regression lines, with a few extreme observations. The scatter plots are repeated below:

[Figure: Scatterplot of Total Vehicle Sales vs Employees non farm]
[Figure: Scatterplot of Total Vehicle Sales vs Saving Rate]

For each pair of variables, the correlation matrix shows the Pearson correlation together with its p-value. A p-value below 0.05 corresponds to at least 95% confidence that the correlation is not zero.
Both X variables have strong Pearson correlations with Y, and their p-values are reported as 0.000. This makes them significant and acceptable to use in the forecast. Furthermore, the correlation between the two X variables (-0.438) is weaker in absolute value than either X variable's correlation with Y. These correlations are logical and support the hypotheses made earlier. Below is the correlation matrix for all variables:

Correlations: Total Vehicle Sales, Employees non-farm, Saving Rate

| | Total Vehicle Sales | Employees non-farm |
|---|---|---|
| Employees non-farm | 0.673 (0.000) | |
| Saving Rate | -0.608 (0.000) | -0.438 (0.000) |

Cell contents: Pearson correlation (P-Value)

To analyze the characteristics of the Y data further, it is helpful to look at the autocorrelation function. The slowly decreasing autocorrelation coefficients indicate a trend in the series. The spike at lag 12 shows some seasonality, although it is not significant. There also appears to be some cycle, since the coefficients repeatedly rise and fall. Below is the ACF graph:

[Figure: Autocorrelation Function for Total Vehicle Sales (with 5% significance limits for the autocorrelations)]

Based on the scatter plots and correlation matrix, neither variable needs a transformation. The XX relationship is weaker than both XY relationships, and there are no curvilinear relationships between the independent variables and the dependent variable. The model does require dummy variables, because the Y data has seasonality. After monthly dummy variables were created and included in the model, only m6 and m9 turned out to be significant, so only these two are used in the multiple regression model.
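Monthly dummy (indicator) variables can be generated from each observation's position in the year. The hypothetical helper below assumes two things:

- Months are numbered from the first observation.
- The last month serves as the baseline, so only 11 dummies are created. Including all 12 alongside a constant would make them perfectly collinear.

Under that numbering, and because the series starts in May 2006, m6 and m9 would correspond to October and January. The report does not state its numbering, so treat this mapping as an assumption.

```python
def monthly_dummies(n_obs, period=12):
    """Return one dict per observation with 0/1 indicators m1..m(period-1).

    Month positions are counted from the first observation (position 1), and
    position `period` is the baseline with all indicators equal to 0.
    """
    rows = []
    for t in range(n_obs):
        position = t % period + 1
        rows.append({f"m{j}": int(position == j) for j in range(1, period)})
    return rows


dummies = monthly_dummies(68)
# Keep only the two indicators that were significant in the report.
m6 = [row["m6"] for row in dummies]
m9 = [row["m9"] for row in dummies]
print(sum(m6), sum(m9))  # 6 5
```

With 68 fit-period observations, position 6 occurs six times and position 9 only five times, because the series ends in December 2011 (position 8).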
The best regression model, using both X variables and the dummy variables m6 and m9, is displayed below:

Regression Analysis: Y Total Vehicle Sales versus X1 Employees non farm, X2 Saving Rate, m6, m9

The regression equation is
Y Total Vehicle Sales = -3076 + 0.0338 X1 Employees non farm - 69.5 X2 Saving Rate - 174 m6 - 182 m9

| Predictor | Coef | SE Coef | T | P | VIF |
|---|---|---|---|---|---|
| Constant | -3076.4 | 821.5 | -3.74 | 0.000 | |
| X1 Employees non farm | 0.033822 | 0.005926 | 5.71 | 0.000 | 1.274 |
| X2 Saving Rate | -69.54 | 14.58 | -4.77 | 0.000 | 1.240 |
| m6 | -173.84 | 64.11 | -2.71 | 0.009 | 1.015 |
| m9 | -182.08 | 70.45 | -2.58 | 0.012 | 1.037 |

S = 148.859, R-Sq = 64.7%, R-Sq(adj) = 62.5%

Analysis of Variance

| Source | DF | SS | MS | F | P |
|---|---|---|---|---|---|
| Regression | 4 | 2559676 | 639919 | 28.88 | 0.000 |
| Residual Error | 63 | 1396018 | 22159 | | |
| Total | 67 | 3955694 | | | |

| Source | DF | Seq SS |
|---|---|---|
| X1 Employees non farm | 1 | 1791670 |
| X2 Saving Rate | 1 | 478522 |
| m6 | 1 | 141458 |
| m9 | 1 | 148027 |

Unusual Observations

| Obs | X1 Employees non farm | Y Total Vehicle Sales | Fit | SE Fit | Residual | St Resid |
|---|---|---|---|---|---|---|
| 9 | 134994 | 1124.2 | 1133.4 | 72.0 | -9.2 | -0.07 X |
| 21 | 135896 | 1063.4 | 1080.4 | 70.3 | -17.0 | -0.13 X |
| 25 | 138105 | 1420.6 | 1017.3 | 77.2 | 403.3 | 3.17 RX |
| 30 | 137038 | 859.0 | 988.2 | 70.3 | -129.2 | -0.98 X |
| 31 | 136355 | 763.9 | 1083.3 | 46.9 | -319.4 | -2.26 R |
| 33 | 131627 | 670.3 | 769.1 | 70.5 | -98.8 | -0.75 X |
| 34 | 131387 | 701.6 | 1005.7 | 25.3 | -304.1 | -2.07 R |
| 43 | 130787 | 761.7 | 1075.8 | 28.2 | -314.1 | -2.15 R |
| 45 | 127374 | 712.5 | 722.7 | 70.8 | -10.2 | -0.08 X |

R denotes an observation with a large standardized residual.
X denotes an observation whose X value gives it large leverage.

Durbin-Watson statistic = 1.57157

To determine whether the model is acceptable, we look at the R-squared value and the F statistic. R-squared is 64.7%, which is good: the model explains about 65% of the variation in Y. The F value of 28.88 far exceeds the tabulated critical value, which is roughly 2.5 for 4 and 63 degrees of freedom at the 5% level. All of the coefficients are also significant, because their p-values are below 0.05 and their absolute t-values exceed 1.96.
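The headline statistics in this output follow directly from the ANOVA table. The sketch below recomputes R-squared, adjusted R-squared, F, and S from the sums of squares as a check; the function name is hypothetical. It also computes the fit-period RMSE of 143.282 reported for this model. RMSE divides the error sum of squares by n, while S divides it by the residual degrees of freedom, which is why the two numbers differ slightly.

```python
import math


def regression_summary(ss_regression, ss_error, n_obs, n_predictors):
    """Recompute standard regression fit statistics from ANOVA sums of squares."""
    df_reg = n_predictors
    df_err = n_obs - n_predictors - 1
    ss_total = ss_regression + ss_error
    ms_reg = ss_regression / df_reg
    ms_err = ss_error / df_err
    return {
        "R2": ss_regression / ss_total,
        "R2_adj": 1 - ms_err / (ss_total / (n_obs - 1)),
        "F": ms_reg / ms_err,
        "S": math.sqrt(ms_err),                # standard error of the regression
        "RMSE": math.sqrt(ss_error / n_obs),   # root mean squared residual
    }


s = regression_summary(2559676, 1396018, n_obs=68, n_predictors=4)
print(round(s["R2"], 3), round(s["R2_adj"], 3))  # 0.647 0.625
print(round(s["F"], 2), round(s["S"], 3))        # 28.88 148.859
print(round(s["RMSE"], 3))                       # 143.282
```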
The signs of the coefficients also make logical sense and support my hypotheses from the proposal: employment has a positive coefficient and the saving rate a negative one. The model is therefore acceptable to use. The fit-period error measures are somewhat higher than for the other models: the MAPE is 10.56% and the RMSE is 143.282. However, using other predictors did not lower them. An investigation of the best model gave the following results.

There is no serial correlation. The Durbin-Watson statistic for this model is 1.57157, which lies between 1.55 and 2.45, the rule-of-thumb range used here for no serial correlation. With 68 observations and four predictors, however, the formal Durbin-Watson bounds would likely place this value in the inconclusive range.

There is no heteroscedasticity in this model, as the residual plots below show. In both the residuals-versus-fits and residuals-versus-order plots, the residuals bounce around a zero mean, and there is no noticeable megaphone effect.

[Figure: Residual Plots for Y Total Vehicle Sales (four-in-one: Normal Probability Plot, Versus Fits, Histogram, Versus Order)]

There is no multicollinearity. The VIF statistics for all predictors are between 1.015 and 1.274. These are far below 2.5, which supports the conclusion that there is no multicollinearity.

Below is the autocorrelation function of the fit-period residuals. Together with the four-in-one plot above, it is used to evaluate the randomness of the residuals.

[Figure: Autocorrelation Function for RESI1 (with 5% significance limits for the autocorrelations)]

In the four-in-one plot, the histogram of the residuals looks approximately normal. The residuals also appear random, because the residuals-versus-order plot shows them fluctuating around zero.
However, the autocorrelation function reveals that significant seasonality and cycle remain in the residuals. The normal probability plot looks good, because most of the values fall on a straight line. The forecast for the hold-out period, using the hold-out X values to forecast Y, produces the following error measures:

MAPE = 11.6749%
RMSE = 188.759

As expected out of sample, the error measures are somewhat worse than in the fit period, and they are still fairly high. The time series plot of the forecast residuals is displayed below:

[Figure: Time Series Plot of Fcst Resid]
[Figure: Time Series Plot of Y Total Vehicle Sales (index 1 to 80, including the hold-out forecast)]

In the time series plot, the residuals fluctuate around zero. There may be a slight trend, but it does not appear significant. The time series plot of the Y data including the hold-out forecast shows that the forecast looks reasonable compared with the historical observations.

Conclusion

Although all the forecasting techniques delivered acceptable results, Winters' exponential smoothing method produced the best error measures. Its relatively low fit-period MAPE of 5.89% even improved to 5.17% in the forecast period. ARIMA was another very successful model for forecasting Total Vehicle Sales. Its error measures were slightly higher, with a fit-period MAPE of 6.51%, but they remained nearly constant in the forecast period (6.50%).

The multiple regression results were somewhat unsatisfactory. The predictors were all significant, the R-squared was reasonably high, and there was no multicollinearity or heteroscedasticity. Even so, the model had the highest forecast-period error measures of the four.

Winters' method of exponential smoothing was the best model for forecasting Total Vehicle Sales, because it was very successful in picking up the trend and seasonality in the Y variable.
Below is a table of the error measures of all forecast methods for the fit period and the forecast period:

Forecast Model Error Comparison

| Forecast Model | Fit Period RMSE | Fit Period MAPE | Forecast Period RMSE | Forecast Period MAPE |
|---|---|---|---|---|
| Winters’ Exponential Smoothing | 87.74 | 5.89% | 74.79 | 5.17% |
| Decomposition | 157.146 | 12.70% | 130.279 | 8.22% |
| ARIMA | 90.6118 | 6.51% | 96.7536 | 6.50% |
| Multiple Regression | 143.282 | 10.56% | 188.759 | 11.67% |

Appendix A

Total Vehicle Sales Forecast Data Observations

| Date | Y Total Vehicle Sales | X1 Employment non-farm | X2 Personal Saving Rate |
|---|---|---|---|
| 5/1/2006 | 1533.8 | 136621 | 2.6 |
| 6/1/2006 | 1545.3 | 137121 | 2.9 |
| 7/1/2006 | 1531.1 | 135945 | 2.3 |
| 8/1/2006 | 1530.1 | 136149 | 2.5 |
| 9/1/2006 | 1394.1 | 136817 | 2.6 |
| 10/1/2006 | 1260.6 | 137516 | 2.8 |
| 11/1/2006 | 1236.5 | 137898 | 2.9 |
| 12/1/2006 | 1476.4 | 137786 | 2.7 |
| 1/1/2007 | 1124.2 | 134994 | 2.5 |
| 2/1/2007 | 1285.1 | 135683 | 2.6 |
| 3/1/2007 | 1574.9 | 136576 | 2.8 |
| 4/1/2007 | 1366.0 | 137381 | 2.5 |
| 5/1/2007 | 1590.2 | 138323 | 2.2 |
| 6/1/2007 | 1481.3 | 138825 | 2.1 |
| 7/1/2007 | 1331.2 | 137425 | 2.1 |
| 8/1/2007 | 1500.5 | 137534 | 2.0 |
| 9/1/2007 | 1335.8 | 138096 | 2.3 |
| 10/1/2007 | 1256.5 | 138835 | 2.5 |
| 11/1/2007 | 1200.4 | 139143 | 2.3 |
| 12/1/2007 | 1414.1 | 138929 | 2.6 |
| 1/1/2008 | 1063.4 | 135896 | 3.7 |
| 2/1/2008 | 1196.5 | 136414 | 4.4 |
| 3/1/2008 | 1378.6 | 137003 | 4.5 |
| 4/1/2008 | 1273.1 | 137535 | 3.9 |
| 5/1/2008 | 1420.6 | 138105 | 8.3 |
| 6/1/2008 | 1212.6 | 138296 | 6.1 |
| 7/1/2008 | 1156.0 | 136811 | 5.1 |
| 8/1/2008 | 1269.1 | 136697 | 4.5 |
| 9/1/2008 | 984.6 | 136748 | 5.0 |
| 10/1/2008 | 859.0 | 137038 | 5.7 |
| 11/1/2008 | 763.9 | 136355 | 6.5 |
| 12/1/2008 | 916.1 | 135321 | 6.5 |
| 1/1/2009 | 670.3 | 131627 | 6.1 |
| 2/1/2009 | 701.6 | 131387 | 5.2 |
| 3/1/2009 | 872.8 | 131249 | 5.2 |
| 4/1/2009 | 832.6 | 131429 | 5.6 |
| 5/1/2009 | 938.3 | 131697 | 6.7 |
| 6/1/2009 | 874.8 | 131510 | 5.0 |
| 7/1/2009 | 1011.8 | 129910 | 4.3 |
| 8/1/2009 | 1274.6 | 129786 | 3.1 |
| 9/1/2009 | 759.5 | 130144 | 4.0 |
| 10/1/2009 | 853.9 | 130741 | 3.5 |
| 11/1/2009 | 761.7 | 130787 | 3.9 |
| 12/1/2009 | 1049.3 | 130242 | 4.0 |
| 1/1/2010 | 712.5 | 127374 | 4.7 |
| 2/1/2010 | 793.2 | 127811 | 4.6 |
| 3/1/2010 | 1083.9 | 128646 | 4.6 |
| 4/1/2010 | 997.4 | 129770 | 5.3 |
| 5/1/2010 | 1117.5 | 130886 | 5.7 |
| 6/1/2010 | 1000.5 | 131004 | 5.8 |
| 7/1/2010 | 1065.7 | 129664 | 5.6 |
| 8/1/2010 | 1011.5 | 129728 | 5.4 |
| 9/1/2010 | 973.9 | 130221 | 5.2 |
| 10/1/2010 | 965.2 | 131195 | 4.9 |
| 11/1/2010 | 888.0 | 131502 | 4.6 |
| 12/1/2010 | 1162.9 | 131199 | 4.9 |
| 1/1/2011 | 834.8 | 128338 | 5.5 |
| 2/1/2011 | 1009.3 | 129154 | 5.2 |
| 3/1/2011 | 1267.8 | 130061 | 4.6 |
| 4/1/2011 | 1177.2 | 131279 | 4.5 |
| 5/1/2011 | 1083.4 | 131963 | 4.4 |
| 6/1/2011 | 1076.5 | 132453 | 4.7 |
| 7/1/2011 | 1080.2 | 131181 | 4.2 |
| 8/1/2011 | 1096.6 | 131457 | 4.0 |
| 9/1/2011 | 1077.6 | 132204 | 3.5 |
| 10/1/2011 | 1046.9 | 133125 | 3.6 |
| 11/1/2011 | 1018.0 | 133456 | 3.2 |
| 12/1/2011 | 1272.3 | 133292 | 3.4 |
| 1/1/2012 | 934.7 | 130657 | 3.7 |
| 2/1/2012 | 1172.7 | 131604 | 3.5 |
| 3/1/2012 | 1431.9 | 132505 | 3.7 |
| 4/1/2012 | 1209.6 | 133400 | 3.5 |
| 5/1/2012 | 1361.9 | 134213 | 3.9 |
| 6/1/2012 | 1311.6 | 134556 | 4.1 |
| 7/1/2012 | 1178.4 | 133368 | 3.9 |
| 8/1/2012 | 1310.5 | 133753 | 3.7 |
| 9/1/2012 | 1210.3 | 134374 | 3.3 |
| 10/1/2012 | 1116.1 | 135241 | 3.7 |
| 11/1/2012 | 1165.1 | 135636 | 4.7 |
| 12/1/2012 | 1382.9 | 135560 | 7.4 |

Citations

Y: http://research.stlouisfed.org/fred2/data/TOTALNSA
X1: http://research.stlouisfed.org/fred2/data/PAYNSA
X2: http://research.stlouisfed.org/fred2/series/PSAVERT

Description of variables

Y: Total Vehicle Sales in the United States, May 2006 to Dec 2012, thousands of units, monthly data, not seasonally adjusted
X1: All Employees (non-farm) in the United States, May 2006 to Dec 2012, thousands of persons, monthly data, not seasonally adjusted
X2: Personal Saving Rate, May 2006 to Dec 2012, in percent, monthly data, seasonally adjusted annual rate

Appendix B

Exponential Smoothing Data Table

| Y Total Vehicle Sales | FITS1 | RESI1 |
|---|---|---|
| 1533.8 | 1698.496172 | -164.6961722 |
| 1545.3 | 1470.592482 | 74.70751775 |
| 1531.1 | 1492.204222 | 38.89577837 |
| 1530.1 | 1601.384932 | -71.28493234 |
| 1394.1 | 1303.006613 | 91.09338685 |
| 1260.6 | 1284.940768 | -24.3407677 |
| 1236.5 | 1178.326783 | 58.17321719 |
| 1476.4 | 1493.689081 | -17.28908143 |
| 1124.2 | 1052.980873 | 71.2191265 |
| 1285.1 | 1232.190472 | 52.90952775 |
| 1574.9 | 1560.984218 | 13.91578233 |
| 1366.0 | 1429.945986 | -63.94598628 |
| 1590.2 | 1500.449202 | 89.75079784 |
| 1481.3 | 1523.304025 | -42.00402451 |
| 1331.2 | 1478.653961 | -147.4539608 |
| 1500.5 | 1437.70953 | 62.79047005 |
| 1335.8 | 1288.356752 | 47.44324806 |
| 1256.5 | 1219.158701 | 37.34129944 |
| 1200.4 | 1186.891921 | 13.5080795 |
| 1414.1 | 1452.777751 | -38.6777513 |
| 1063.4 | 1045.564573 | 17.83542716 |
| 1196.5 | 1181.90821 | 14.59178983 |
| 1378.6 | 1455.696427 | -77.09642735 |
| 1273.1 | 1257.16394 | 15.93606023 |
| 1420.6 | 1411.849564 | 8.750435704 |
| 1212.6 | 1346.901357 | -134.3013572 |
| 1156.0 | 1208.035608 | -52.03560781 |
| 1269.1 | 1270.601503 | -1.501502668 |
| 984.6 | 1097.47906 | -112.8790597 |
| 859.0 | 933.9126215 | -74.91262152 |
| 763.9 | 821.4632138 | -57.56321376 |
| 916.1 | 911.5968883 | 4.503111662 |
| 670.3 | 654.5066714 | 15.79332863 |
| 701.6 | 715.402073 | -13.80207298 |
| 872.8 | 812.3412269 | 60.4587731 |
| 832.6 | 748.5483182 | 84.05168179 |
| 938.3 | 865.8688387 | 72.43116129 |
| 874.8 | 816.0533407 | 58.74665929 |
| 1011.8 | 821.0821421 | 190.7178579 |
| 1274.6 | 1033.281023 | 241.3189767 |
| 759.5 | 1005.392701 | -245.8927006 |
| 853.9 | 795.9111855 | 57.98881448 |
| 761.7 | 790.9593759 | -29.25937585 |
| 1049.3 | 947.8603482 | 101.4396518 |
| 712.5 | 755.3343873 | -42.83438727 |
| 793.2 | 802.9868702 | -9.786870178 |
| 1083.9 | 978.8137012 | 105.0862988 |
| 997.4 | 966.6270408 | 30.77295918 |
| 1117.5 | 1096.081647 | 21.4183528 |
| 1000.5 | 1022.096204 | -21.59620413 |
| 1065.7 | 1039.155534 | 26.54446615 |
| 1011.5 | 1178.292827 | -166.7928274 |
| 973.9 | 775.2174272 | 198.6825728 |
| 965.2 | 952.0135485 | 13.18645152 |
| 888.0 | 896.6985774 | -8.698577382 |
| 1162.9 | 1161.222363 | 1.677637326 |
| 834.8 | 836.0672561 | -1.267256059 |
| 1009.3 | 946.4286047 | 62.87139532 |
| 1267.8 | 1275.674795 | -7.874794951 |
| 1177.2 | 1167.021903 | 10.17809712 |
| 1083.4 | 1313.351152 | -229.951152 |
| 1076.5 | 1067.389307 | 9.110692541 |
| 1080.2 | 1121.312971 | -41.11297129 |
| 1096.6 | 1150.439933 | -53.83993314 |
| 1077.6 | 908.090978 | 169.509022 |
| 1046.9 | 1006.57492 | 40.32507953 |
| 1018.0 | 955.8103638 | 62.18963618 |
| 1272.3 | 1303.625058 | -31.32505816 |

Appendix C

Decomposition Data Table

HO (hold-out observations, January to December 2012): 934.7, 1172.7, 1431.9, 1209.6, 1361.9, 1311.6, 1178.4, 1310.5, 1210.3, 1116.1, 1165.1, 1382.9

| Y Total Vehicle Sales | TREN2 | SEAS2 | CF |
|---|---|---|---|
| 1533.8 | 1361.694124 | 1.142579926 | 0.985831249 |
| 1545.3 | 1354.736483 | 1.014950259 | 1.123862601 |
| 1531.1 | 1347.778842 | 1.051765392 | 1.080105172 |
| 1530.1 | 1340.821201 | 1.131919943 | 1.008168758 |
| 1394.1 | 1333.86356 | 0.94791084 | 1.102592488 |
| 1260.6 | 1326.905919 | 0.923216552 | 1.029043157 |
| 1236.5 | 1319.948278 | 0.847179762 | 1.105761931 |
| 1476.4 | 1312.990637 | 1.069245167 | 1.051635193 |
| 1124.2 | 1306.032997 | 0.792215329 | 1.086541179 |
| 1285.1 | 1299.075356 | 0.918074702 | 1.077518063 |
| 1574.9 | 1292.117715 | 1.128087943 | 1.080458138 |
| 1366.0 | 1285.160074 | 1.032854185 | 1.029092613 |
| 1590.2 | 1278.202433 | 1.142579926 | 1.088843642 |
| 1481.3 | 1271.244792 | 1.014950259 | 1.148071873 |
| 1331.2 | 1264.287151 | 1.051765392 | 1.001102874 |
| 1500.5 | 1257.32951 | 1.131919943 | 1.054316927 |
| 1335.8 | 1250.371869 | 0.94791084 | 1.127028128 |
| 1256.5 | 1243.414228 | 0.923216552 | 1.094568834 |
| 1200.4 | 1236.456587 | 0.847179762 | 1.145965495 |
| 1414.1 | 1229.498946 | 1.069245167 | 1.075659125 |
| 1063.4 | 1222.541306 | 0.792215329 | 1.097968481 |
| 1196.5 | 1215.583665 | 0.918074702 | 1.072135873 |
| 1378.6 | 1208.626024 | 1.128087943 | 1.011121571 |
| 1273.1 | 1201.668383 | 1.032854185 | 1.025743727 |
| 1420.6 | 1194.710742 | 1.142579926 | 1.040692565 |
| 1212.6 | 1187.753101 | 1.014950259 | 1.005881063 |
| 1156.0 | 1180.79546 | 1.051765392 | 0.93081695 |
| 1269.1 | 1173.837819 | 1.131919943 | 0.955151 |
| 984.6 | 1166.880178 | 0.94791084 | 0.890155909 |
| 859.0 | 1159.922537 | 0.923216552 | 0.802159248 |
| 763.9 | 1152.964896 | 0.847179762 | 0.782068606 |
| 916.1 | 1146.007255 | 1.069245167 | 0.747615368 |
| 670.3 | 1139.049615 | 0.792215329 | 0.742819569 |
| 701.6 | 1132.091974 | 0.918074702 | 0.67504053 |
| 872.8 | 1125.134333 | 1.128087943 | 0.68764993 |
| 832.6 | 1118.176692 | 1.032854185 | 0.720919807 |
| 938.3 | 1111.219051 | 1.142579926 | 0.739018734 |
| 874.8 | 1104.26141 | 1.014950259 | 0.780534529 |
| 1011.8 | 1097.303769 | 1.051765392 | 0.876695804 |
| 1274.6 | 1090.346128 | 1.131919943 | 1.032746702 |
| 759.5 | 1083.388487 | 0.94791084 | 0.739564527 |
| 853.9 | 1076.430846 | 0.923216552 | 0.85924556 |
| 761.7 | 1069.473205 | 0.847179762 | 0.84069502 |
| 1049.3 | 1062.515564 | 1.069245167 | 0.923606704 |
| 712.5 | 1055.557924 | 0.792215329 | 0.852039163 |
| 793.2 | 1048.600283 | 0.918074702 | 0.823938345 |
| 1083.9 | 1041.642642 | 1.128087943 | 0.922417447 |
| 997.4 | 1034.685001 | 1.032854185 | 0.933302 |
| 1117.5 | 1027.72736 | 1.142579926 | 0.951662647 |
| 1000.5 | 1020.769719 | 1.014950259 | 0.965705169 |
| 1065.7 | 1013.812078 | 1.051765392 | 0.999444376 |
| 1011.5 | 1006.854437 | 1.131919943 | 0.88753091 |
| 973.9 | 999.8967962 | 0.94791084 | 1.027523349 |
| 965.2 | 992.9391553 | 0.923216552 | 1.052909621 |
| 888.0 | 985.9815144 | 0.847179762 | 1.063086542 |
| 1162.9 | 979.0238735 | 1.069245167 | 1.110891881 |
| 834.8 | 972.0662326 | 0.792215329 | 1.084035094 |
| 1009.3 | 965.1085916 | 0.918074702 | 1.139111067 |
| 1267.8 | 958.1509507 | 1.128087943 | 1.172934756 |
| 1177.2 | 951.1933098 | 1.032854185 | 1.198236246 |
| 1083.4 | 944.2356689 | 1.142579926 | 1.004203751 |
| 1076.5 | 937.278028 | 1.014950259 | 1.131620585 |
| 1080.2 | 930.3203871 | 1.051765392 | 1.103958527 |
| 1096.6 | 923.3627461 | 1.131919943 | 1.049204588 |
| 1077.6 | 916.4051052 | 0.94791084 | 1.240516637 |
| 1046.9 | 909.4474643 | 0.923216552 | 1.246878112 |
| 1018.0 | 902.4898234 | 0.847179762 | 1.331465421 |
| 1272.3 | 895.5321825 | 1.069245167 | 1.32871254 |

Comment: Dr. Holmes allowed the use of the seasonally adjusted Personal Saving Rate, according to an email from 7/15/2013.
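The columns of the Appendix C decomposition table appear to follow a multiplicative model, Y = TREN2 × SEAS2 × CF. In this reading, TREN2 is the trend, SEAS2 is the seasonal index, and CF is the remaining cycle factor. That interpretation is an assumption, inferred from the column names and from the fact that the product reproduces Y. The sketch below checks it on the first four rows of the table. The helper names `reconstruct` and `cycle_factor` are hypothetical.

```python
# (Y, TREN2, SEAS2, CF) copied from the first four rows of Appendix C
ROWS = [
    (1533.8, 1361.694124, 1.142579926, 0.985831249),
    (1545.3, 1354.736483, 1.014950259, 1.123862601),
    (1531.1, 1347.778842, 1.051765392, 1.080105172),
    (1530.1, 1340.821201, 1.131919943, 1.008168758),
]


def reconstruct(trend, seasonal, cycle):
    """Assumed multiplicative model: Y = trend * seasonal index * cycle factor."""
    return trend * seasonal * cycle


def cycle_factor(y, trend, seasonal):
    """What remains of Y after dividing out the trend and seasonal components."""
    return y / (trend * seasonal)


for y, t, s, c in ROWS:
    print(f"Y = {y:7.1f}  T*S*CF = {reconstruct(t, s, c):9.3f}  "
          f"Y/(T*S) = {cycle_factor(y, t, s):.6f}  CF = {c:.6f}")
```

If the multiplicative reading is right, applying `cycle_factor` to any other row of the table should reproduce its CF value up to rounding.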