Tutorial for quantitative gene traits from the brain cancer data set.

advertisement

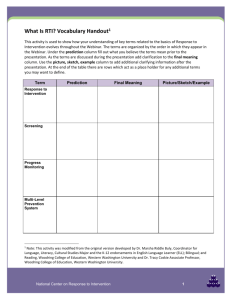

Tutorial for the randomGLM R package: prediction of gene traits Lin Song, Steve Horvath 1. Binary outcome prediction 1.1 Data preparation and RGLM prediction Here we use an empirical gene expression data set from the package to explain how RGLM [1] work. We start by loading required libraries and preparing data. The brain cancer data set contains a training set (55 samples across 5000 gene features), a test set (65 samples across 5000 features) but no outcomes. We use genes as outcomes instead. # load required library library(randomGLM) # load data data(brainCancer) # check data dim(brainCancer$train) dim(brainCancer$test) N = ncol(brainCancer$train) # sample a quantitative gene trait from all N genes (features), and exclude it from the feature space set.seed(1) traitIndx = sample(1:N, 1) y = brainCancer$train[, traitIndx] ytest = brainCancer$test[, traitIndx] x = brainCancer$train[, -traitIndx] xtest = brainCancer$test[, -traitIndx] # Since some of the column names are not unique we change them colnames(x) = make.names(colnames(x), unique = TRUE) colnames(xtest) = make.names(colnames(xtest), unique = TRUE); # generate binary outcomes by dichotomizing continuous outcomes at the median. Binary outcomes can be factors or numbers. y = as.factor(ifelse(y>median(y), 1, 0)) ytest = as.factor(ifelse(ytest>median(ytest), 1, 0)) In above, we randomly select a gene from all 5000 genes as the outcome. In practice, users can change the random seed to generate different gene traits and thus different data sets to play with. Now we have training data x, training outcome y, test data xtest and test outcome ytest. Prediction is done as follows. Make sure to set “classify=TRUE” for binary outcome prediction. This example should take less than 2 minutes. 1 # RGLM prediction using default parameter choices. To learn how to changer parameter values, look at the tutorial RGLMparameterTuningTutorial.docx. # nThreads= 1 assumes that the computer has only 1 core. RGLM = randomGLM(x,y,xtest,classify=TRUE, keepModels=TRUE, nThreads=1) # the following alternative code could be faster if your machine has 6 cores or more #RGLM = randomGLM(x,y,xtest,classify=TRUE, keepModels=TRUE, nThreads=6) Comment: Here we ignore the warning messages. The warnings indicate that there are problems with fitting a glm model. 1.2 Predictor outputs # check out-of-bag prediction in the training data predictedOOB = RGLM$predictedOOB table(predictedOOB, y) # y # predictedOOB 0 1 # 0 24 6 # 1 4 21 # check test prediction predictedTest = RGLM$predictedTest table(predictedTest, ytest) # ytest # predictedTest 0 1 # 0 28 3 # 1 5 29 # test set prediction can be obtained by the following as well, only works when setting keepModels=TRUE in randomGLM function predictedTest = predict(RGLM, newdata = xtest, type="class") Accuracy can be calculated by hand or using the accuracyMeasures function in the WGCNA R package. Here, the test set prediction accuracy is 0.88; sensitivity is 0.91; specificity is 0.85. ## test set accuracy measures library(WGCNA) # Note: some computer systems support multi-threading, but it is not #enabled within WGCNA in R. To allow multi-threading within WGCNA with #all available cores, use allowWGCNAThreads() # If you get an error message, please ignore it. out = accuracyMeasures(table(predictedTest==0, ytest==0)) out # Measure Value #1 Error.Rate 0.1230769 #2 Accuracy 0.8769231 #3 Specificity 0.8484848 #4 Sensitivity 0.9062500 #5 NegativePredictiveValue 0.9032258 2 #6 PositivePredictiveValue 0.8529412 #7 FalsePositiveRate 0.1515152 #8 FalseNegativeRate 0.0937500 #9 Power 0.9062500 #10 LikelihoodRatioPositive 5.9812500 #11 LikelihoodRatioNegative 0.1104911 #12 NaiveErrorRate 0.4923077 Users can extract GLMs constructed in each bag. For example, the following codes indicate that the 119th, 973rd and 2974th features are selected into the model of bag 1, and the model is p ln (1−p) = 7979.74 + 2.00𝐹𝑒𝑎𝑡𝑢𝑟𝑒119 + 5.22𝐹𝑒𝑎𝑡𝑢𝑟𝑒973 − 36.52𝐹𝑒𝑎𝑡𝑢𝑟𝑒2974 . # indices of features selected into the model of bag 1 RGLM$featuresInForwardRegression[[1]] Output X200660_at X202291_s_at X212145_at Feature.1 119 973 2974 # Model coefficients of bag 1. coef(RGLM$models[[1]]) Output (Intercept) X200660_at X202291_s_at 7979.738216 2.002009 5.220940 X212145_at -36.515803 We recommend the following variable importance measure of RGLM. It is the number of times each feature is selected into GLMs across bags. In this example, we use default total number of bags = 100. Most genes were not used at all for prediction in any bags (4830 out of 4999). There is one gene selected 10 times across 100 bags, and 3 genes each selected 9 times across 100 bags. These genes have the biggest variable importance, and they contribute most to outcome prediction. # check variable importance varImp = RGLM$timesSelectedByForwardRegression table(varImp) # 0 1 2 3 4 5 6 7 9 #4830 108 37 8 4 6 1 1 3 10 1 # number of features used for prediction sum(varImp>0) #169 # Create a data frame that reports the variable importance measure of each feature. datvarImp=data.frame(Feature=as.character(dimnames(RGLM$timesSelectedBy ForwardRegression)[[2]]),timesSelectedByForwardRegression= as.numeric(RGLM$timesSelectedByForwardRegression)) #Report the 20 most significant features datvarImp[rank(-datvarImp[,2],ties.method="first")<=20,] 3 # Barplot of the importance measures for the 20 most important features datVarImpSelected=datvarImp[rank(datvarImp[,2],ties.method="first")<=20, ] par(mfrow=c(1,1), mar=c(4,8,3,1)) barplot(datVarImpSelected[,2],horiz=TRUE,names.arg= datVarImpSelected[,1],xlab="Feature Importance",las=2,main="Most significant features for the RGLM",cex.axis=1,cex.main=1.5,cex.lab=1.5) Most significant features for the RGLM 38487_at 220980_s_at 220856_x_at 218232_at 218217_at 217362_x_at 215049_x_at 212203_x_at 209619_at 208306_x_at 205105_at 204829_s_at 204174_at 202953_at 202803_s_at 202625_at 202291_s_at 201280_s_at 200986_at 10 8 6 4 2 0 200839_s_at Feature Importance 1.3 RGLM thinning Previously, RGLM achieves prediction accuracy 0.87 with 169 features. Here we want to reduce the number of features used for prediction while remaining good predictive accuracy. RGLM thinning does this. This procedure removes features with small variable importance, and only keeps important features for prediction. As shown in the following, if we set thinning threshold as 4 1, only 61 features with variable importance>1 are kept for prediction. But prediction accuracy remains almost the same (0.86). threshold = 1 # thinned RGLM thinRGLM = thinRandomGLM(RGLM, threshold) # number of features remained in predictor thinN = sum(thinRGLM$timesSelectedByForwardRegression>0) thinN # [1] 61 # test set prediction after thinning predicted = predict.randomGLM(thinRGLM, xtest, type="class") accuracyMeasures(table(predicted, ytest)) # Measure Value #1 Error.Rate 0.1384615 #2 Accuracy 0.8615385 #3 Specificity 0.9062500 #4 Sensitivity 0.8181818 #5 NegativePredictiveValue 0.8285714 #6 PositivePredictiveValue 0.9000000 #7 FalsePositiveRate 0.0937500 #8 FalseNegativeRate 0.1818182 #9 Power 0.8181818 #10 LikelihoodRatioPositive 8.7272727 #11 LikelihoodRatioNegative 0.2006270 #12 NaiveErrorRate 0.4923077 2. Continuous outcome prediction We still use the same brain cancer data set for illustration. Note that gene traits are not dichotomized this time, and we set “classify=FALSE” in the randomGLM function. # start a new R session # load required library library(randomGLM) # load data data(brainCancer) # check data dim(brainCancer$train) dim(brainCancer$test) N = ncol(brainCancer$train) # sample a quantitative gene trait from all N genes (features) and exclude it from the feature space set.seed(1) 5 traitIndx = sample(1:N, 1) y = brainCancer$train[, traitIndx] ytest = brainCancer$test[, traitIndx] x = brainCancer$train[, -traitIndx] xtest = brainCancer$test[, -traitIndx] # RGLM prediction with 1 thread. # Consider choosing nThreads=NULL or a larger integer to speed it up. RGLM=randomGLM(x,y, xtest, classify=FALSE, keepModels=TRUE,nThreads=1) # define the out-of-bag prediction predictedOOB = RGLM$predictedOOB.response # What is the OOB estimate of the accuracy? Recall that for a continuous trait, the accuracy is defined as the correlation between OOB prediction and the true, observed training set outcome. as.numeric(cor(predictedOOB, y, use="p")) # [1] 0.8712458 # define the test set prediction predictedTest = RGLM$predictedTest.response # What is the test set estimate of the accuracy? Recall that for a continuous trait, the accuracy is defined as the correlation between test set prediction and the true, observed test set outcome. as.numeric(cor(predictedTest, ytest, use="p")) # [1] 0.870373 Both the OOB estimate and the test set estimate of the prediction accuracy show that the RGLM is quite accuracy (both around 0.87). References 1. Song L, Langfelder P, Horvath S (2013) Random generalized linear model: a highly accurate and interpretable ensemble predictor. BMC Bioinformatics 14:5 PMID: 23323760DOI: 10.1186/1471-2105-14-5. 6