ele12256-sup-0001-SuppInfo


Supplementary methods

Road map to statistical computation

A simple example of the Metropolis-Hastings algorithm

Figure S1

Supplementary references

Supplementary methods

The Markov chains iteratively sample parameters and update the latent states of the model. In our configuration, we proceed in the following order: (1) update the latent states (i.e. choose the winner for each position, given the current parameter estimates for the distribution of canopy extension and for the logistic regression relating the horizontal distance between contenders and the focal position to the probability of winning the position); (2) sample the parameters for canopy extension; (3) sample the parameters for the logistic regression. Together, these three steps complete a single iteration of the Gibbs sampler.

The latent states are sampled from

$$\mathrm{Multinom}(P_i),$$

where $P_i$ is the vector of probabilities that each contender is the winner within the 5 × 5 pixel neighborhood $i$, and a single winner is drawn per neighborhood. There is a different vector for each neighborhood. These probabilities can be calculated directly from the estimates of the parameters for canopy extension and the logistic regression at the current iteration of the Gibbs sampler.

For all models that assumed a gamma distribution for canopy extension, we placed gamma priors, with shape and rate hyperparameters of 0.01, on the shape and rate parameters. We estimated the shape and rate parameters using a Metropolis-Hastings step, where proposals were generated from

$$\mathrm{Gam}\left(a = 750,\ b = \frac{750}{b^{*}}\right),$$

where $b^{*}$ is the value of the shape or rate parameter being sampled, taken from the previous step of the Markov chain (Clark 2007). This proposal distribution has mean $b^{*}$ and variance $(b^{*})^{2}/a$, so larger values of $a$ produce smaller proposal steps.
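The sketch below checks these properties numerically by drawing many proposals from $\mathrm{Gam}(a, a/b^{*})$, parameterized by shape and rate as in R's `rgamma`. The helper name `propose_gamma` and the current value $b^{*} = 0.518$ are illustrative assumptions, not part of the authors' code.

```r
# Hypothetical helper: gamma proposals centered on the current value b_star.
# Shape a and rate a / b_star give mean b_star and variance b_star^2 / a.
propose_gamma <- function(n, b_star, a = 750) {
  rgamma(n, shape = a, rate = a / b_star)
}

set.seed(42)
b_star <- 0.518                      # illustrative current value of a gamma parameter
draws <- propose_gamma(1e5, b_star)

c(empirical_mean = mean(draws), theoretical_mean = b_star)
c(empirical_var = var(draws), theoretical_var = b_star^2 / 750)
```

Raising $a$ shrinks the proposal steps, which tends to increase the acceptance rate but slows movement of the chain; lowering $a$ has the opposite effect.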

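For models that assumed a log-normal distribution for canopy extension, we placed priors on the mean and variance of log-transformed canopy extension. Because log-transformed canopy extension is normally distributed, the mean and variance can be sampled directly using conjugate normal and inverse-gamma priors on the mean and variance, respectively (Clark 2007). We sampled from the conditional posteriors

$$N(\mu \mid \theta_1, \tau_1^2),$$

where

$$\tau_1^2 = \left(\frac{1}{\tau_0^2} + \frac{n}{\sigma^2}\right)^{-1},$$

and

$$\theta_1 = \tau_1^2 \left(\frac{\theta_0}{\tau_0^2} + \frac{n\bar{y}}{\sigma^2}\right),$$

and

$$IG\left(\sigma^2 \,\middle|\, \frac{n}{2} + \alpha + 1,\ \beta + \frac{1}{2}\sum_{i=1}^{n}(y_i - \mu)^2\right).$$

Here $y_i$ is the log-transformed canopy extension of observation $i$, $\bar{y}$ is their mean, and $n$ is the number of observations. The prior mean $\theta_0$ was 0 and the prior variance $\tau_0^2$ was 100; the inverse-gamma hyperparameters $\alpha$ and $\beta$ were both 0.01.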
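A minimal sketch of one Gibbs update for $\mu$ and $\sigma^2$, using the expressions above with $\theta_0 = 0$, $\tau_0^2 = 100$ and $\alpha = \beta = 0.01$. It assumes the variance is drawn as the reciprocal of a gamma variate in R's shape-rate parameterization; in that parameterization the conditional shape is $n/2 + \alpha$, while $n/2 + \alpha + 1$ is the corresponding exponent of $1/\sigma^2$ in the posterior density. The function name `update_lognormal_params` and the simulated data are hypothetical.

```r
# Hypothetical helper: one Gibbs update of (mu, sigma2) for y = log(canopy extension)
update_lognormal_params <- function(y, mu, sigma2,
                                    theta0 = 0, tau0_sq = 100,
                                    alpha = 0.01, beta = 0.01) {
  n <- length(y)
  # Conditional posterior for the mean: N(theta1, tau1_sq)
  tau1_sq <- 1 / (1 / tau0_sq + n / sigma2)
  theta1 <- tau1_sq * (theta0 / tau0_sq + n * mean(y) / sigma2)
  mu <- rnorm(1, mean = theta1, sd = sqrt(tau1_sq))
  # Conditional posterior for the variance: inverse gamma, drawn as 1 / gamma
  shape <- n / 2 + alpha
  rate <- beta + 0.5 * sum((y - mu)^2)
  sigma2 <- 1 / rgamma(1, shape = shape, rate = rate)
  c(mu = mu, sigma2 = sigma2)
}

set.seed(1)
y <- log(rlnorm(500, meanlog = 0.3, sdlog = 0.8))  # simulated log canopy extension
params <- c(mu = 0, sigma2 = 1)
draws <- matrix(NA, nrow = 2000, ncol = 2, dimnames = list(NULL, c("mu", "sigma2")))
for (g in 1:2000) {
  params <- update_lognormal_params(y, params[["mu"]], params[["sigma2"]])
  draws[g, ] <- params
}
colMeans(draws[-(1:500), ])  # should be near the simulated values 0.3 and 0.8^2 = 0.64
```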

The logistic regression requires priors on the intercept and slope parameters. We used normal priors on the logit scale. For the intercept, the prior was $N(\mathrm{logit}(0.95), \sqrt{0.5})$; for the slope parameters, the prior was $N(0, \sqrt{0.5})$. We sampled these parameters using a Metropolis step, where proposals were generated from

$$N(\mu^{*}, \sigma^{2}),$$

where $\mu^{*}$ is the estimate of the intercept or slope parameter at the current iteration of the Gibbs sampler, and the proposal variance $\sigma^{2}$ depended on the parameter being sampled.

We ran each statistical model in 16 parallel Markov chains. Models H1G, H3G, H1LN, H2LN and H3LN appeared to converge rapidly to their posterior distributions, and the chains were well mixed. We ran these simulations for 15,000 iterations, discarded the first 5,000, and then stored every 100th iteration. The gamma parameters for H2G mixed slowly, so we ran this model in 16 parallel chains for 100,000 iterations, discarded the first 25,000, and then stored every 750th iteration.
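The arithmetic behind these two schedules can be checked with a short R snippet: both store 100 draws per chain, or 1,600 draws per model across 16 chains. The helper `retained_draws` is hypothetical, and it assumes the first stored draw is the 100th (or 750th) iteration after burn-in; starting instead at the first post-burn-in iteration gives the same count.

```r
# Hypothetical helper: number of stored draws per chain after burn-in and thinning
retained_draws <- function(n_iter, burn_in, thin) {
  kept <- seq(from = burn_in + thin, to = n_iter, by = thin)  # iterations assumed to be stored
  length(kept)
}

n_chains <- 16
per_chain_fast <- retained_draws(n_iter = 15000, burn_in = 5000, thin = 100)   # H1G, H3G, H1LN, H2LN, H3LN
per_chain_h2g  <- retained_draws(n_iter = 100000, burn_in = 25000, thin = 750) # H2G
c(per_chain_fast, per_chain_h2g)             # 100 100
c(per_chain_fast, per_chain_h2g) * n_chains  # 1600 1600
```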

Road map to statistical computation

Below we explain the statistical computation using the simple example of a single neighborhood. We include code that can be used in the R programming environment for statistical computing (R Development Core Team 2012). R code is given on separate lines.

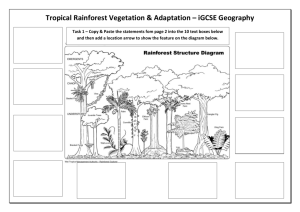

We begin by considering the fate of a hypothetical position that transitioned from 2 m to 20 m tall (Fig. 2 main text, Fig. S1). In this example, the heights of pixels in a 5 × 5 pixel neighborhood are indicated at two points in time. Pixels are squares whose centers are separated by 1.25 m. Panel A is the first time point, and panel B is the second time point. Panel C shows an arbitrary index that we use to refer to individual positions; for example, position 13 is the focal position. Panel D shows the straight-line horizontal distance (m) between the center of each position and the focal position. In this example, only the focal position, indicated in green, changed in height between the first and second measurements. The heights of the neighbors immediately to the left and right correspond to the graphical example in Fig. 2 of the main text.

The data indicate that the position increased in height, but the data alone are insufficient to infer whether this change was due to vertical growth of the vegetation that occupied the position (which would correspond to 18 m of vertical growth) or to lateral capture of the position by one of its neighbors. The data do provide some insight into, and constraints on, the possible outcomes (for example, we might conclude that vertical growth of this magnitude over a 2-year interval is unlikely), but deciding which outcome is most plausible, and which contender won the position, requires a framework for statistical inference.

The model described below proceeds in four steps.

(1) Calculate the probability that each contender was the winner, and randomly select the winner using a multinomial draw with the corresponding probabilities.

(2) Given the position and height of the winner, calculate the straight-line distance between the top of the winner at the time of the first measurement and the top of the focal position at the time of the second measurement. We refer to this distance as canopy extension (Fig. S1, panel E). When the winner is the focal position, canopy extension is simply vertical growth. When the winner is a neighbor that was exactly as tall at the first measurement as the focal position was at the second measurement, canopy extension is purely lateral growth. When the winner is a neighbor whose height at the first measurement differs from that of the focal position at the second measurement, canopy extension combines vertical and lateral growth; its magnitude is the hypotenuse of a right triangle that connects the height of the neighbor at the first measurement to the height of the focal position at the second measurement.

(3) Given the magnitude of canopy extension, update the parameter estimates for the distribution of canopy extension. In the example below we use the gamma distribution (i.e. model H1G from the main text). In a Bayesian framework, this step can be achieved using the Metropolis-Hastings algorithm (Metropolis et al. 1953; Hastings 1970).

(4) Given the covariates of the winner, update the parameters for the logistic regression. In the example below we use the horizontal distance between the focal position and the winner (Fig. S1, panel D). In the main text we considered the horizontal distance between the focal position and the winner (H1G and H1LN), the height of the winner at the time of the first measurement (H2G and H2LN), and both of these covariates together (H3G and H3LN).

This completes a single iteration of the Gibbs sampler.

Implementing the Gibbs sampler requires some summaries of the data. The probability that each position was the winner is proportional to the product of the probability of its canopy extension and its probability of winning the position according to the logistic regression. In R, the data are:

time1 <- matrix(c(7,6,10,8,19,16,14,8,8,10,10,20,2,5,5,2,22,8,9,10,8,10,6,22,4), 5, 5, byrow=T)

time2 <- time1

time2[3,3] <- 20 # Only the focal position changes in height

The horizontal distance between each position and the focal position (assuming 1.25 m pixels) is (Fig. S1, panel D):

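dx <- matrix(sort(rep(seq(1,(5*1.25),1.25),5)), nrow=5, ncol=5,byrow=F) # x coordinates (m) of pixel centers; constant within each column

dy <- matrix(sort(rep(seq(1,(5*1.25),1.25),5)), nrow=5, ncol=5,byrow=T) # y coordinates (m) of pixel centers; constant within each row

horizontal_distance <- sqrt(((dx-3.5)^2)+((dy-3.5)^2)) # the focal pixel center is at (3.5, 3.5)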

The matrix called horizontal_distance can be interpreted as the bases of a set of right triangles that connect each position to the focal position. We can calculate candidate canopy extension using the Pythagorean theorem, which requires the bases and heights of these triangles. The heights can be calculated like this:

delta_height <- time2[3,3] - time1

Candidate canopy extension is then (Fig. S1, panel E):

candidate_canopy_extension <- sqrt(delta_height^2 + horizontal_distance^2)

This matrix contains the distance from the focal position at the time of the second measurement to each contender at the time of the first measurement. One can think of these numbers as the growth that would have occurred had a given contender been the winner.

Examining the delta_height matrix shows that some positions were taller at the time of the first measurement than the focal position was at the time of the second measurement. We assume that these contenders did not win the position, because doing so would have required an apparent reduction in height. We therefore set each of these positions equal to -9 in the candidate_canopy_extension matrix, so that they have a gamma probability density of 0. Although we do not do so here, this assumption could be relaxed, for example, to allow winners to emerge from the subcanopy. These positions are blacked out in several panels of Fig. S1.

candidate_canopy_extension[which(delta_height<0)] <- -9

The probability of canopy extension is (Fig. S1, panel F):

p_canopy_extension <- dgamma(x=candidate_canopy_extension, shape=0.997, rate=0.518)

p_canopy_extension <- p_canopy_extension/sum(p_canopy_extension)

In this example and in Fig. S1, we calculate probabilities using the posterior medians for the shape and rate of the gamma distribution (H1G in the main text), which are 0.997 and 0.518, respectively. In practice, these would be the parameter values at the current iteration of the Gibbs sampler.

The probability of winning the position based on the logistic regression (Fig. S1, panel G) requires an inverse logit function, again using posterior medians for the slope and intercept of the logistic regression:

inv.logit <- function(x){p <- exp(x)/(1+(exp(x))); return(p)}

p_winning_logistic <- inv.logit(0.860-4.277*horizontal_distance)

p_winning_logistic <- p_winning_logistic/sum(p_winning_logistic)

The probability that each position was the winner (step 1) is in Fig. S1, panel H:

p_winner <- p_canopy_extension*p_winning_logistic

p_winner <- p_winner/sum(p_winner)

Next we use these probabilities to choose a winner.

the_winner <- rmultinom(n=1, size=1, t(p_winner))

The result of this multinomial draw is a matrix with 25 rows and 1 column that contains 24 entries of 0 and a single entry of 1 at the position of the randomly selected winner. The positions are indexed row by row, as in Fig. S1, panel C, which is why p_winner is transposed before the draw. Using this index, we extract the winner's candidate canopy extension, again transposing the matrix so that it is indexed in the same order:

location_of_the_winner <- which(the_winner==1)

canopy_extension <- t(candidate_canopy_extension)[location_of_the_winner]
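As a check on steps (1) and (2), the sketch below repeats the multinomial draw many times and tabulates how often each position is selected. It reuses the data and posterior medians from this section; the number of draws (10,000) and the random seed are arbitrary choices. Normalizing the two probability matrices before multiplying, as above, does not change the final normalized product, so the product is normalized only once here.

```r
inv.logit <- function(x) exp(x) / (1 + exp(x))

# Data and distances, as defined earlier in this section
time1 <- matrix(c(7,6,10,8,19,16,14,8,8,10,10,20,2,5,5,2,22,8,9,10,8,10,6,22,4),
                5, 5, byrow = TRUE)
time2 <- time1
time2[3, 3] <- 20
dx <- matrix(sort(rep(seq(1, 5 * 1.25, 1.25), 5)), nrow = 5, ncol = 5, byrow = FALSE)
dy <- matrix(sort(rep(seq(1, 5 * 1.25, 1.25), 5)), nrow = 5, ncol = 5, byrow = TRUE)
horizontal_distance <- sqrt((dx - 3.5)^2 + (dy - 3.5)^2)

# Candidate canopy extension; taller contenders are excluded (density 0)
delta_height <- time2[3, 3] - time1
candidate_canopy_extension <- sqrt(delta_height^2 + horizontal_distance^2)
candidate_canopy_extension[delta_height < 0] <- -9

# Step (1): probability that each position was the winner
p_winner <- dgamma(candidate_canopy_extension, shape = 0.997, rate = 0.518) *
  inv.logit(0.860 - 4.277 * horizontal_distance)
p_winner <- p_winner / sum(p_winner)

# Repeat the draw; rows of `draws` follow the row-major index of Fig. S1, panel C
set.seed(2024)
draws <- rmultinom(n = 10000, size = 1, prob = as.vector(t(p_winner)))
win_frequency <- rowSums(draws) / ncol(draws)

most_frequent <- which.max(win_frequency)
most_frequent                                  # position 12, as in the Fig. S1 caption
t(candidate_canopy_extension)[most_frequent]   # 1.25 m of lateral growth
```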

Step (3) is to update the parameter estimates for the distribution of canopy extension. We do this for the shape and rate using the Metropolis-Hastings algorithm. Assuming we are at the gth iteration of the Gibbs sampler, start by setting the new value of the parameter (at iteration g) equal to its previous value (at iteration g-1). This value is overwritten if the proposed new value is accepted by the Metropolis-Hastings algorithm; otherwise, we keep the previous value. Proceed sequentially (see the section below, ‘A simple example of the Metropolis-Hastings algorithm’):

1. Propose a new value for the shape, using the gamma proposal density with a small variance described above (Clark 2007).

2. Calculate the likelihood of the data multiplied by the prior density, evaluated at the current estimate of the shape.

3. Calculate the same quantity at the proposed estimate of the shape.

4. Accept or reject the proposal using the Metropolis-Hastings acceptance rule.

5. Repeat this procedure for the rate, using the current value of the shape (i.e. the updated value, if it was updated).
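A minimal sketch of this procedure, written as two hypothetical R functions (`mh_positive_update` and `update_gamma_params`) that follow the settings described in the Supplementary methods: gamma priors with shape and rate of 0.01, and a gamma proposal with $a = 750$. It works on the log scale and includes the Hastings correction for the asymmetric proposal. The simulated canopy extension data are illustrative only; in the full model, the data at each iteration are the canopy extensions implied by the current winners.

```r
# Hypothetical helper: one Metropolis-Hastings update of a positive parameter,
# using the gamma proposal Gam(a, a / current) centered on the current value
mh_positive_update <- function(current, log_post, a = 750) {
  proposal <- rgamma(1, shape = a, rate = a / current)
  log_ratio <- log_post(proposal) - log_post(current) +
    dgamma(current, shape = a, rate = a / proposal, log = TRUE) -  # log q(current | proposal)
    dgamma(proposal, shape = a, rate = a / current, log = TRUE)    # log q(proposal | current)
  if (log(runif(1)) < log_ratio) proposal else current
}

# Hypothetical helper: update the shape, then the rate given the updated shape
update_gamma_params <- function(extension, shape, rate, prior = 0.01, a = 750) {
  log_post_shape <- function(s) {
    sum(dgamma(extension, shape = s, rate = rate, log = TRUE)) +
      dgamma(s, shape = prior, rate = prior, log = TRUE)
  }
  shape <- mh_positive_update(shape, log_post_shape, a)

  log_post_rate <- function(r) {
    sum(dgamma(extension, shape = shape, rate = r, log = TRUE)) +
      dgamma(r, shape = prior, rate = prior, log = TRUE)
  }
  rate <- mh_positive_update(rate, log_post_rate, a)
  c(shape = shape, rate = rate)
}

set.seed(7)
extension <- rgamma(1000, shape = 1, rate = 0.5)   # simulated canopy extension (m)
params <- c(shape = 1, rate = 1)                   # deliberately poor starting values
chain <- matrix(NA, nrow = 5000, ncol = 2, dimnames = list(NULL, c("shape", "rate")))
for (g in 1:5000) {
  params <- update_gamma_params(extension, params[["shape"]], params[["rate"]])
  chain[g, ] <- params
}
colMeans(chain[-(1:2000), ])   # should lie near the simulated values (1 and 0.5)
```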

Step (4) is to update the parameters for the logistic regression. This is similar to the procedure for updating the parameters for the distribution of canopy extension. However, because we use a symmetric (normal) proposal density, the proposal densities cancel in the acceptance ratio, so these parameters can be updated using the Metropolis algorithm rather than Metropolis-Hastings.

1. Propose a new value for the intercept, using a normal proposal density with a small variance.

2. Calculate the likelihood of the data multiplied by the prior density, evaluated at the current estimate of the intercept.

3. Calculate the same quantity at the proposed estimate of the intercept.

4. Accept or reject the proposal using the Metropolis algorithm.

5. Repeat this procedure for the slope(s), using the current values of the other parameters (i.e. the updated values, if they were updated).
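A minimal sketch of this step for one intercept and one slope (horizontal distance), using the priors stated in the Supplementary methods and reading the second argument of $N(\cdot, \sqrt{0.5})$ as a standard deviation. The likelihood, in which each contender contributes a Bernoulli observation (1 for the winner, 0 otherwise), the function names, the proposal standard deviations, and the simulated data are all assumptions made for illustration. Because the normal proposal is symmetric, no Hastings correction appears.

```r
logit <- function(p) log(p / (1 - p))

# Assumed likelihood (hypothetical): one Bernoulli outcome per contender
log_lik_logistic <- function(beta, won, distance) {
  sum(dbinom(won, size = 1, prob = plogis(beta[1] + beta[2] * distance), log = TRUE))
}

# Priors on the logit scale: intercept ~ N(logit(0.95), sd = sqrt(0.5)), slope ~ N(0, sd = sqrt(0.5))
log_prior_logistic <- function(beta) {
  dnorm(beta[1], mean = logit(0.95), sd = sqrt(0.5), log = TRUE) +
    dnorm(beta[2], mean = 0, sd = sqrt(0.5), log = TRUE)
}

# Metropolis update: intercept first, then slope, each using the latest value of the other
update_logistic_params <- function(beta, won, distance, step_sd = c(0.1, 0.1)) {
  log_post <- function(b) log_lik_logistic(b, won, distance) + log_prior_logistic(b)
  for (k in seq_along(beta)) {
    proposal <- beta
    proposal[k] <- rnorm(1, mean = beta[k], sd = step_sd[k])
    if (log(runif(1)) < log_post(proposal) - log_post(beta)) beta <- proposal
  }
  beta
}

set.seed(11)
distance <- runif(1000, min = 0, max = 3.5)                     # simulated distances (m)
won <- rbinom(1000, size = 1, prob = plogis(1 - 2 * distance))  # simulated outcomes
beta <- c(intercept = 0, slope = 0)
chain <- matrix(NA, nrow = 4000, ncol = 2)
for (g in 1:4000) {
  beta <- update_logistic_params(beta, won, distance)
  chain[g, ] <- beta
}
colMeans(chain[-(1:2000), ])   # intercept and slope, near 1 and -2 up to prior shrinkage
```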

This completes a single iteration of the Gibbs sampler. Next, return to step (1) and repeat the entire process with the current parameter estimates. Repeat this process many times, until the Markov chains converge to the posterior distribution of the parameters.

A simple example of the Metropolis-Hastings algorithm

Here we provide a simple example of one implementation of the Metropolis-Hastings algorithm (Metropolis et al. 1953; Hastings 1970), so that readers can see the structure of a working version of the algorithm without the complexity of the full statistical model.

Begin by generating a simulated data set from a gamma distribution with a shape of 1 and a rate of 0.5.

x <- rgamma(1000,shape=1, rate=0.5)

hist(x)

Assume that the shape is known to be 1 but the rate is unknown, so that we can focus on a single parameter. We will estimate the rate using the Metropolis-Hastings algorithm.
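Written out, the quantity `a` computed in the loop below is the Metropolis-Hastings ratio for the rate. In our notation (not the original's), $\lambda$ is the current rate, $\lambda'$ the proposal, $p(\mathbf{x} \mid \lambda)$ the gamma likelihood with shape 1, $p(\lambda)$ the $\mathrm{Gam}(0.1, 0.1)$ prior, and $q$ the gamma proposal density:

$$
r = \frac{p(\mathbf{x} \mid \lambda')\, p(\lambda')\, q(\lambda \mid \lambda')}{p(\mathbf{x} \mid \lambda)\, p(\lambda)\, q(\lambda' \mid \lambda)},
\qquad
q(\lambda' \mid \lambda) = \mathrm{Gam}\left(\lambda' \,\middle|\, 500,\ \frac{500}{\lambda}\right).
$$

In the code, `pnew` and `pnow` are the logarithms of $p(\mathbf{x} \mid \lambda')\,p(\lambda')$ and $p(\mathbf{x} \mid \lambda)\,p(\lambda)$, `q2` is $\log q(\lambda' \mid \lambda)$ and `q1` is $\log q(\lambda \mid \lambda')$, so `a <- exp(pnew-pnow)/exp(q2-q1)` equals $r$. Accepting when a uniform draw falls below $r$ accepts the proposal with probability $\min(1, r)$.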

myRate <- matrix(NA, nrow=5000, ncol=1) # A matrix to hold parameter estimates

myRate[1,1] <- 1 # A starting guess of 1 (we start at the wrong value to test the algorithm)

for (i in 2:length(myRate)){ # A loop over iterations

  myRate[i,1] <- myRate[(i-1),1] # Set current = previous

  proposal <- rgamma(1, shape=500, rate=500/myRate[(i-1),1]) # A proposed value (the shape of 500 can be tuned)

  pnow <- sum(dgamma(x,shape=1,rate=myRate[(i-1),1],log=T))+dgamma(myRate[(i-1),1],0.1,0.1,log=T) # Log likelihood of the data plus log prior under the current (now) rate

  pnew <- sum(dgamma(x,shape=1,rate=proposal,log=T))+dgamma(proposal,0.1,0.1,log=T) # The same under the proposed (new) rate; the prior is a gamma with shape and rate of 0.1, an uninformative prior distribution

  q1 <- dgamma(myRate[(i-1),1], shape=500, rate=500/proposal, log=T) # Log proposal density of the current value, given the proposal

  q2 <- dgamma(proposal, shape=500, rate=500/myRate[(i-1),1], log=T) # Log proposal density of the proposal, given the current value

  a <- exp(pnew-pnow)/exp(q2-q1) # Acceptance ratio (Metropolis-Hastings)

  zz <- runif(1,0,1) # Uniform random number for the accept/reject decision

  if (zz < a) {myRate[i,1] <- proposal} # Accept the proposal with probability min(1, a)

}

par(mfrow=c(3,1))

plot(myRate, type="l")

abline(h=0.5, col="Red")
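Because the shape is fixed at 1 in this example, the gamma prior on the rate is conjugate, and the exact posterior is $\mathrm{Gam}(0.1 + n, 0.1 + \sum_i x_i)$, where $n$ is the number of observations. The sketch below uses this to check the sampler: it reruns a compact, log-scale version of the loop above (our restructuring, not the original code) and compares the mean of the retained draws with the exact posterior mean. The burn-in of 1,000 iterations and the random seed are arbitrary choices.

```r
set.seed(3)
x <- rgamma(1000, shape = 1, rate = 0.5)

# Exact conjugate posterior for the rate when the shape is known to be 1
prior_shape <- 0.1
prior_rate <- 0.1
post_shape <- prior_shape + length(x) * 1
post_rate <- prior_rate + sum(x)
post_mean <- post_shape / post_rate

# Log posterior (up to a constant): gamma log likelihood plus gamma log prior
log_post <- function(r) {
  sum(dgamma(x, shape = 1, rate = r, log = TRUE)) +
    dgamma(r, shape = prior_shape, rate = prior_rate, log = TRUE)
}

# Compact Metropolis-Hastings loop with the same gamma proposal as above
n_iter <- 5000
rate_chain <- numeric(n_iter)
rate_chain[1] <- 1
for (i in 2:n_iter) {
  current <- rate_chain[i - 1]
  proposal <- rgamma(1, shape = 500, rate = 500 / current)
  log_ratio <- log_post(proposal) - log_post(current) +
    dgamma(current, shape = 500, rate = 500 / proposal, log = TRUE) -
    dgamma(proposal, shape = 500, rate = 500 / current, log = TRUE)
  rate_chain[i] <- if (log(runif(1)) < log_ratio) proposal else current
}

c(mcmc_mean = mean(rate_chain[-(1:1000)]), exact_mean = post_mean)
```

Comparing `log(runif(1))` with `log_ratio` is equivalent to the `zz < a` test above, but it avoids overflow in `exp()` when the log posterior changes sharply between the current and proposed values.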

Figure S1. Inferring the fate of a single 5 × 5 pixel neighborhood at two points in time (panels A and B). Between the first and second measurements, the focal position (indicated in green) changed in height from 2 m to 20 m. The heights of the neighbors to the left and right of this position correspond to the graphical example in Fig. 2 of the main text. Panel C shows index numbers for each position. These numbers are arbitrary but are needed to refer to individual positions; for example, position 13 is the focal position. Panel D shows the horizontal distance between the center of each position and the focal position. This is the hypotenuse of a right triangle whose base and height are the distances between pixel centers along the X and Y axes, respectively. In this example, we assume that pixels are square with a side length of 1.25 m. Panel E shows the three-dimensional distance between each position and the focal position. This is the hypotenuse of a right triangle whose base is an element of panel D and whose height is the height difference between the given contender and the focal position. For example, position 14 was 10 m tall at the time of the first measurement, and to win the focal position by the time of the second measurement it needed to grow vertically and laterally to a height of 20 m. Thus, position 14 would have needed to extend its vegetation by 10 m vertically and 1.25 m laterally, which corresponds to √101.5625, or approximately 10.08 m. Panel F shows the probability associated with each candidate canopy extension from panel E. These values are a normalized gamma density, given the posterior median shape and rate parameters; in practice, parameter estimates are updated sequentially using a Gibbs sampler. Panel G shows the probability that each contender wins as a function of the horizontal distance between the contender and the focal position. These values were back-transformed from the logit scale using posterior medians for the slope and intercept of the logistic regression, and then normalized to sum to 1. Panel H is the normalized product of panels F and G. Note that the focal position has a high probability of winning based on distance alone (panel G), but a small probability of winning based on the observed height change (panel F; a change of 18 m is very unlikely if growth follows a gamma distribution with shape and rate equal to the posterior medians). Combining these two sets of probabilities indicates that the focal position was most likely captured laterally by position 12. If position 12 was the winner, the capture involved 1.25 m of lateral growth.

Supplementary references

Clark, J.S. (2007). Models for Ecological Data. Princeton University Press, Princeton, NJ.

Hastings, W.K. (1970). Monte Carlo Sampling Methods Using Markov Chains and Their Applications. Biometrika, 57, 97-109.

Metropolis, N., Rosenbluth, A.W., Rosenbluth, M.N., Teller, A.H. & Teller, E. (1953). Equation of State Calculations by Fast Computing Machines. J. Chem. Phys., 21, 1087-1092.

R Development Core Team (2012). R: A Language and Environment for Statistical Computing. R Foundation for Statistical Computing, Vienna, Austria.