Presentation1_report - Department of Computer Science

advertisement

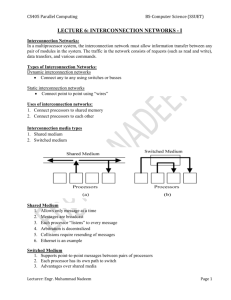

Parallel System Interconnections and Communications Western Michigan University CS 6260 (Parallel Computations II) Corse Professor: Elise de Doncker Presentation (1) Report Student’s Name: Abdullah Algarni February 26, 2009 Presentation’s abstract and goal: In this presentation, I will describe a comprehensive environment of parallel interconnection networks and communications. The need to interconnect the processing elements in computers solving many tasks in such a way that ensures optimal network performance is paramount. Of all architectures, massively parallel systems containing hundreds or more processors can be interconnected in a network of processors in a static ways (multicomputers) or dynamic ways (multiprocessors). I will describe many of possible topologies to build and assess the performance of multicomputers and multiprocessors such as Buses, Crossbars, Multistage Networks, Multistage Omega Network, Completely Connected Network, Star Connected Networks, and Linear Arrays. Also, I will give detailed descriptions of all important networks, hypercubes, and meshes and address all communication models and routing strategies. Finally, I will give evaluating to the static interconnection networks, and to the dynamic interconnection networks. In Addition, I will talk about “Grid Computing” which is a special case of parallel system. Grid computing (or the use of a computational grid) is the application of several computers to a single problem at the same time as a form of network-distributed parallel processing. Topics covered in the presentation: Parallel Architectures - SISD - SIMD - MIMD -Shared memory systems -Distributed memory machines -Hybrid systems Physical Organization of Parallel Platforms -Ideal Parallel Computer Interconnection Networks for Parallel Computers -Static and Dynamic Interconnection Networks -Switches -Network interfaces Network Topologies -Buses -Crossbars -Multistage Networks -Multistage Omega Network -Completely Connected Network -Linear Arrays -Meshes -Hypercubes -Tree-Based Networks -Fat Trees -Evaluating Interconnection Networks Grid Computing -Grid Concept and motivation -What it needs? -How it works? -Some existent grid projects “Today” 1. Classification of Parallel Architectures 1.1 Introduction: The traditional logical view of a sequential computer consists of a memory connected to a processor via a datapath. All three components – processor, memory, and datapath – present bottlenecks to the overall processing rate of a computer system. A number of architectural innovations over the years have addressed these bottlenecks. One of the most important innovations is multiplicity – in processing units, datapaths, and memory units. This multiplicity is either entirely hidden from the programmer, as in the case of implicit parallelism, or exposed to the programmer in different forms. In this section, I present an overview of important architectural concepts as they relate to parallel processing. 1.2 Types of Parallel Architectures We have three types of classification of parallel architectures: SISD: Single instruction single data – Classical von Neumann architecture SIMD: Single instruction multiple data MIMD: Multiple instructions multiple data – Most common and general parallel machine 1.3 Single Instruction Multiple Data (SIMD) : SIMD lets one microinstruction operate at the same time on multiple data items. This is especially productive for applications in which visual images or audio files are processed. What usually requires a repeated succession of instructions (a loop) can now be performed in one instruction. It is Also known as Array-processors. The concept of this architecture is as follow: A single instruction stream is broadcasted to multiple processors, each having its own data stream This type of architecture is still used in graphics cards today. Second: Multiple Instructions Multiple Data (MIMD): MIMD (Multiple Instruction stream, Multiple Data stream) is a technique employed to achieve parallelism. Machines using MIMD have a number of processors that function asynchronously and independently. At any time, different processors may be executing different instructions on different pieces of data. MIMD architectures may be used in a number of application areas such as computer-aided design/computer-aided manufacturing, simulation, modeling, and as communication switches. MIMD machines can be of either shared memory or distributed memory categories. These classifications are based on how MIMD processors access memory. Shared memory machines may be of the bus-based, extended, or hierarchical type. Distributed memory machines may have hypercube or mesh interconnection schemes. So, in this approach, each processor has its own instruction stream and input data. We can have further breakdown of MIMD usually based on the memory organization – Shared memory systems – Distributed memory systems Shared memory systems: The processors are all connected to a "globally available" memory, via either a software or hardware means. The operating system usually maintains its memory coherence. From a programmer's point-of-view, this memory model is better understood than the distributed memory model. Another advantage is that memory coherence is managed by the operating system and not the written program. Two known disadvantages are: scalability beyond thirty-two processors is difficult, and the shared memory model is less flexible than the distributed memory model. There are two versions of shared memory systems available today: 1) Symmetric multi-processors (SMPs): All processors share the same physical main memory. The Disadvantage of this approach is that the Memory bandwidth per processor is limited In this approach the typical size is : 2-32 processors. 2) Non-uniform memory access (NUMA): More than one memory but some memory is closer to a certain processor than other memory (The whole memory is still addressable from all processors) The Advantage: It Reduces the memory limitation compared to SMPs The Disadvantage: More difficult to program efficiently In this approach, To reduce effects of non-uniform memory access, caches are often used • Largest example of this type is : SGI Origin with10240 processors Distributed memory machines In distributed memory MIMD machines, each processor has its own individual memory location. Each processor has no direct knowledge about other processor's memory. For data to be shared, it must be passed from one processor to another as a message. Since there is no shared memory, contention is not as great a problem with these machines. It is not economically feasible to connect a large number of processors directly to each other. A way to avoid this multitude of direct connections is to connect each processor to just a few others. This type of design can be inefficient because of the added time required to pass a message from one processor to another along the message path. The amount of time required for processors to perform simple message routing can be substantial. Systems were designed to reduce this time loss and hypercube and mesh are among two of the popular interconnection schemes. Some protocols are used in this approach: – Sockets – Message passing – Remote procedure call / remote method invocation The performance of a distributed memory machine strongly depends on the quality of the network interconnect and the topology of the network interconnect There are two classes of distributed memory machines: 1) Massively parallel processing systems (MPPs) 2) Clusters 2. Physical Organization of Parallel Platforms In this section, I discuss the physical architecture of parallel machines. We start with an ideal architecture, outline practical difficulties associated with realizing this model, and discuss some conventional architectures. 2.1) Ideal Parallel Computer A natural extension of the serial model of computation (the Random Access Machine, or RAM) consists of p processors and a global memory of unbounded size that is uniformly accessible to all processors. All processors access the same address space. Processors share a common clock but may execute different instructions in each cycle. This ideal model is also referred to as a parallel random access machine (PRAM). Since PRAMs allow concurrent access to various memory locations, depending on how simultaneous memory accesses are handled, PRAMs can be divided into four subclasses. 1. Exclusive-read, exclusive-write (EREW) PRAM. In this class, access to a memory location is exclusive. No concurrent read or write operations are allowed. This is the weakest PRAM model, affording minimum concurrency in memory access. 2. Concurrent-read, exclusive-write (CREW) PRAM. In this class, multiple read accesses to a memory location are allowed. However, multiple write accesses to a memory location are serialized. 3. Exclusive-read, concurrent-write (ERCW) PRAM. Multiple write accesses are allowed to a memory location, but multiple read accesses are serialized. 4. Concurrent-read, concurrent-write (CRCW) PRAM. This class allows multiple read and write accesses to a common memory location. This is the most powerful PRAM model. Allowing concurrent read access does not create any semantic discrepancies in the program. However, concurrent write access to a memory location requires arbitration. Several protocols are used to resolve concurrent writes. The most frequently used protocols are as follows: Common, in which the concurrent write is allowed if all the values that the processors are attempting to write are identical. Arbitrary, in which an arbitrary processor is allowed to proceed with the write operation and the rest fail. Priority, in which all processors are organized into a predefined prioritized list, and the processor with the highest priority succeeds and the rest fail. Sum, in which the sum of all the quantities is written (the sum-based write conflict resolution model can be extended to any associative operator defined on the quantities being written). 2.2) Architectural Complexity of the Ideal Model Consider the implementation of an EREW PRAM as a shared-memory computer with p processors and a global memory of m words. The processors are connected to the memory through a set of switches. These switches determine the memory word being accessed by each processor. In an EREW PRAM, each of the p processors in the ensemble can access any of the memory words, provided that a word is not accessed by more than one processor simultaneously. To ensure such connectivity, the total number of switches must be (mp). (See the Appendix for an explanation of the notation.) For a reasonable memory size, constructing a switching network of this complexity is very expensive. Thus, PRAM models of computation are impossible to realize in practice. Is it realizable for big programs? Brain simulation: Imagine how long it takes to complete Brain Simulation? The human brain contains 100,000,000,000 neurons each neuron receives input from 1000 others. To compute a change of brain “state”, one requires 1014 calculations. If each could be done in 1s, it would take ~3 years to complete one calculation. Clearly, O(mp) for big values of p and m, a true PRAM is not realizable! 3. Interconnection Networks for Parallel Computers 3.1) Introduction: Interconnection networks provide mechanisms for data transfer between processing nodes or between processors and memory modules. A blackbox view of an interconnection network consists of n inputs and m outputs. The outputs may or may not be distinct from the inputs. Typical interconnection networks are built using links and switches. A link corresponds to physical media such as a set of wires or fibers capable of carrying information. A variety of factors influence link characteristics. For links based on conducting media, the capacitive coupling between wires limits the speed of signal propagation. This capacitive coupling and attenuation of signal strength are functions of the length of the link. There are two important metrics when we talk about networks: –Latency: • minimal time to send a message from one processor to another • Unit: ms, μs – Bandwidth: • amount of data which can be transferred from one processor to another in a certain time frame • Units: Bytes/sec, KB/s, MB/s, GB/s, Bits/sec, Kb/s, Mb/s, Gb/s Also its important to know some other terms such as: 3.2) Static and Dynamic Interconnection Networks Interconnection networks can be classified as static or dynamic. Static networks consist of pointto-point communication links among processing nodes and are also referred to as direct networks. Dynamic networks, on the other hand, are built using switches and communication links. Communication links are connected to one another dynamically by the switches to establish paths among processing nodes and memory banks. Dynamic networks are also referred to as indirect networks. Figure (a) bellow illustrates a simple static network of four processing elements or nodes. Each processing node is connected via a network interface to two other nodes in a mesh configuration. Figure (b) bellow illustrates a dynamic network of four nodes connected via a network of switches to other nodes. (a) a static network; and (b) a dynamic network. 3.3 Switches: Single switch in an interconnection network consists of a set of input ports and a set of output ports. Switches provide a range of functionality. The minimal functionality provided by a switch is a mapping from the input to the output ports. The total number of ports on a switch is also called the degree of the switch. Switches may also provide support for internal buffering (when the requested output port is busy), routing (to alleviate congestion on the network), and multicast (same output on multiple ports). The mapping from input to output ports can be provided using a variety of mechanisms based on physical crossbars, multi-ported memories, multiplexor-demultiplexors, and multiplexed buses. The cost of a switch is influenced by the cost of the mapping hardware, the peripheral hardware and packaging costs. The mapping hardware typically grows as the square of the degree of the switch, the peripheral hardware linearly as the degree, and the packaging costs linearly as the number of pins. 3.4 Network Interfaces: The connectivity between the nodes and the network is provided by a network interface. The network interface has input and output ports that pipe data into and out of the network. It typically has the responsibility of packetizing data, computing routing information, buffering incoming and outgoing data for matching speeds of network and processing elements, and error checking. The position of the interface between the processing element and the network is also important. While conventional network interfaces hang off the I/O buses, interfaces in tightly coupled parallel machines hang off the memory bus. Since I/O buses are typically slower than memory buses, the latter can support higher bandwidth. 4. Network Topologies A wide variety of network topologies have been used in interconnection networks. These topologies try to trade off cost and scalability with performance. While pure topologies have attractive mathematical properties, in practice interconnection networks tend to be combinations or modifications of the pure topologies discussed in this section. 4.1 Buses bus-based network is perhaps the simplest network consisting of a shared medium that is common to all the nodes. A bus has the desirable property that the cost of the network scales linearly as the number of nodes, p. This cost is typically associated with bus interfaces. Furthermore, the distance between any two nodes in the network is constant (O(1)). Buses are also ideal for broadcasting information among nodes. Since the transmission medium is shared, there is little overhead associated with broadcast compared to point-to-point message transfer. However, the bounded bandwidth of a bus places limitations on the overall performance of the network as the number of nodes increases. Typical bus based machines are limited to dozens of nodes. Sun Enterprise servers and Intel Pentium based shared-bus multiprocessors are examples of such architectures. The demands on bus bandwidth can be reduced by making use of the property that in typical programs, a majority of the data accessed is local to the node. For such programs, it is possible to provide a cache for each node. Private data is cached at the node and only remote data is accessed through the bus. There are two types of buses network: 1) Without cache memory The bounded bandwidth of a bus places limitations on the overall performance of the network as the number of nodes increases! The execution time is lower bounded by: TxKP seconds P: processors K: data items T: time for each data access 2) Second type, with cache memory If we assume that 50% of the memory accesses (0.5K) are made to local data, in this case: The execution time is lower bounded by:0.5x TxKP seconds That’s means that we made 50% improvement compared to the first type. 4.2 Crossbars: A simple way to connect p processors to b memory banks is to use a crossbar network. A crossbar network employs a grid of switches or switching nodes as shown in the figure bellow. The crossbar network is a non-blocking network in the sense that the connection of a processing node to a memory bank does not block the connection of any other processing nodes to other memory banks. Some specifications of this type of network: The cost of a crossbar of p processors grows as O(p2). This is generally difficult to scale for large values of p. Examples of machines that employ crossbars include the Sun Ultra HPC 10000 and the Fujitsu VPP500. 4.3 Multistage Networks: The crossbar interconnection network is scalable in terms of performance but unscalable in terms of cost. Conversely, the shared bus network is scalable in terms of cost but unscalable in terms of performance. An intermediate class of networks called multistage interconnection networks lies between these two extremes. It is more scalable than the bus in terms of performance and more scalable than the crossbar in terms of cost. The general schematic of a multistage network consisting of p processing nodes and b memory banks is shown in the figure bellow. A commonly used multistage connection network is the omega network. This network consists of log p stages, where p is the number of inputs (processing nodes) and also the number of outputs (memory banks). Each stage of the omega network consists of an interconnection pattern that connects p inputs and p outputs. Multistage Omega Network: An omega network has p/2 x log p switching nodes, and the cost of such a network grows as (p log p). Note that this cost is less than the (p2) cost of a complete crossbar network. The following figure shows an omega network for eight processors (denoted by the binary numbers on the left) and eight memory banks (denoted by the binary numbers on the right). Routing data in an omega network is accomplished using a simple scheme. Let s be the binary representation of a processor that needs to write some data into memory bank t. The data traverses the link to the first switching node. If the most significant bits of s and t are the same, then the data is routed in pass-through mode by the switch. If these bits are different, then the data is routed through in crossover mode. This scheme is repeated at the next switching stage using the next most significant bit. Traversing log p stages uses all log p bits in the binary representations of s and t. The perfect shuffle patterns are connected using 2×2 switches. The switches operate in two modes – crossover or passthrough. A complete Omega network with the perfect shuffle interconnects and switches can now be illustrated Multistage Omega Network – Routing: Let s be the binary representation of the source and d be that of the destination. The data traverses the link to the first switching node. If the most significant bits of s and d are the same, then the data is routed in pass-through mode by the switch else, it switches to crossover. This process is repeated for each of the log p switching stages using the next significant bit. Example: Routing from s= 010 , to d=111 Routing from s= 110 , to d=101 4.4 Completely Connected Network In a completely-connected network, each node has a direct communication link to every other node in the network. The following figure illustrates a completely-connected network of eight nodes. This network is ideal in the sense that a node can send a message to another node in a single step, since a communication link exists between them. Completely-connected networks are the static counterparts of crossbar switching networks, since in both networks, the communication between any input/output pair does not block communication between any other pair. While the performance scales very well, the hardware complexity is not realizable for large values of p. 4.5 Star Connected Networks In a star-connected network, one processor acts as the central processor. Every other processor has a communication link connecting it to this processor. The following figure shows a star-connected network of nine processors. The star-connected network is similar to bus-based networks. Communication between any pair of processors is routed through the central processor, just as the shared bus forms the medium for all communication in a bus-based network. The central processor is the bottleneck in the star topology. 4.6 Linear Arrays In a linear array, each node has two neighbors, one to its left and one to its right. If the nodes at either end are connected, we refer to it as a 1-D torus or a ring. a) with no wraparound links; (b) with wraparound link. Due to the large number of links in completely connected networks, sparser networks are typically used to build parallel computers. A family of such networks spans the space of linear arrays and hypercubes. A linear array is a static network in which each node (except the two nodes at the ends) has two neighbors, one each to its left and right. A simple extension of the linear array (a) is the ring or a 1-D torus (b). The ring has a wraparound connection between the extremities of the linear array. In this case, each node has two neighbors. 4.7 Meshes A two-dimensional mesh illustrated in the following figure (a) is an extension of the linear array to twodimensions. Each dimension has square P nodes with a node identified by a two-tuple (i, j). Every node (except those on the periphery) is connected to four other nodes whose indices differ in any dimension by one. A 2-D mesh has the property that it can be laid out in 2-D space, making it attractive from a wiring standpoint. Furthermore, a variety of regularly structured computations map very naturally to a 2-D mesh. For this reason, 2-D meshes were often used as interconnects in parallel machines. Two dimensional meshes can be augmented with wraparound links to form two dimensional tori illustrated in the following figure (b). The three-dimensional cube is a generalization of the 2-D mesh to three dimensions, as illustrated in the following figure (c). Each node element in a 3-D cube, with the exception of those on the periphery, is connected to six other nodes, two along each of the three dimensions. A variety of physical simulations commonly executed on parallel computers (for example, 3D weather modeling, structural modeling, etc.) can be mapped naturally to 3-D network topologies. For this reason, 3-D cubes are used commonly in interconnection networks for parallel computers (for example, in the Cray T3E). 4.8 Hypercubes The hypercube topology has two nodes along each dimension and log p dimensions. The construction of a hypercube is illustrated in the following figure. A zero-dimensional hypercube consists of 20, i.e., one node. A one-dimensional hypercube is constructed from two zero-dimensional hypercubes by connecting them. A two-dimensional hypercube of four nodes is constructed from two one-dimensional hypercubes by connecting corresponding nodes. In general a d-dimensional hypercube is constructed by connecting corresponding nodes of two (d - 1) dimensional hypercubes. The following figure illustrates this for up to 16 nodes in a 4-D hypercube. Properties of Hypercubes: - The distance between any two nodes is at most log p. - Each node has log p neighbors. 4.9 Tree-Based Networks A tree network is one in which there is only one path between any pair of nodes. Both linear arrays and star-connected networks are special cases of tree networks. The following figure shows networks based on complete binary trees. Static tree networks have a processing element at each node of the tree see the following figure. Tree networks also have a dynamic counterpart. In a dynamic tree network, nodes at intermediate levels are switching nodes and the leaf nodes are processing elements. Complete binary tree networks: (a) a static tree network; and (b) a dynamic tree network. To route a message in a tree, the source node sends the message up the tree until it reaches the node at the root of the smallest subtree containing both the source and destination nodes. Then the message is routed down the tree towards the destination node. Fat Trees: Tree networks suffer from a communication bottleneck at higher levels of the tree. For example, when many nodes in the left subtree of a node communicate with nodes in the right subtree, the root node must handle all the messages. This problem can be alleviated in dynamic tree networks by increasing the number of communication links and switching nodes closer to the root. This network, also called a fat tree, is illustrated in the following figure. 5. Evaluating Static Interconnection Networks We now discuss various criteria used to characterize the cost and performance of static interconnection networks. We use these criteria to evaluate static networks introduced in the previous subsection. Diameter: The distance between the farthest two nodes in the network. Bisection Width: The minimum number of wires you must cut to divide the network into two equal parts. Cost: The number of links or switches Degree: Number of links that connect to a processor A number of evaluation metrics for dynamic networks follow from the corresponding metrics for static networks. Since a message traversing a switch must pay an overhead, it is logical to think of each switch as a node in the network, in addition to the processing nodes. The diameter of the network can now be defined as the maximum distance between any two nodes in the network. This is indicative of the maximum delay that a message will encounter in being communicated between the selected pair of nodes. In reality, we would like the metric to be the maximum distance between any two processing nodes; however, for all networks of interest, this is equivalent to the maximum distance between any (processing or switching) pair of nodes. The connectivity of a dynamic network can be defined in terms of node or edge connectivity. The node connectivity is the minimum number of nodes that must fail (be removed from the network) to fragment the network into two parts. As before, we should consider only switching nodes (as opposed to all nodes). However, considering all nodes gives a good approximation to the multiplicity of paths in a dynamic network. The arc connectivity of the network can be similarly defined as the minimum number of edges that must fail (be removed from the network) to fragment the network into two unreachable parts. 6. Grid Computing Can we make Sharing between different organizations? “Yes we can” .. By using Grid computing we can make Computational Resources sharing Across the World. What is the relationship between parallel computing and grid computing? Grid computing is a special case of parallel computing 6.1 Introduction: Grid computing (or the use of a computational grid) is the application of several computers to a single problem at the same time – usually to a scientific or technical problem that requires a great number of computer processing cycles or access to large amounts of data Grid computing depends on software to divide and apportion pieces of a program among several computers, sometimes up to many thousands. Grid computing can also be thought of as distributed and large-scale cluster computing, as well as a form of network-distributed parallel processing [citation needed]. It can be small confined to a network of computer workstations within a corporation, for example or it can be a large, public collaboration across many companies or networks. It is a form of distributed computing whereby a "super and virtual computer" is composed of a cluster of networked, loosely coupled computers, acting in concert to perform very large tasks. This technology has been applied to computationally intensive scientific, mathematical, and academic problems through volunteer computing, and it is used in commercial enterprises for such diverse applications as drug discovery, economic forecasting, seismic analysis, and backoffice data processing in support of e-commerce and Web services. 6.2 GRID CONCEPT 6.3 Goals of Grid Computing Reduce computing costs Increase computing resources Reduce job turnaround time Reduce Complexity to Users Increase Productivity 6.4 What does the Grid do for you? You submit your work And the Grid ◦ Finds convenient places for it to be run ◦ Organises efficient access to your data Caching, migration, replication ◦ Deals with authentication to the different sites that you will be using ◦ Interfaces to local site resource allocation mechanisms, policies ◦ Runs your jobs, Monitors progress, Recovers from problems, Tells you when your work is complete 6.5Grids versus conventional supercomputers: "Distributed" or "grid" computing in general is a special type of parallel computing that relies on complete computers (with onboard CPU, storage, power supply, network interface, etc.) connected to a network (private, public or the Internet) by a conventional network interface, such as Ethernet. This is in contrast to the traditional notion of a supercomputer, which has many processors connected by a local high-speed computer bus. The primary advantage of distributed computing is that each node can be purchased as commodity hardware, which when combined can produce similar computing resources to a multiprocessor supercomputer, but at lower cost. This is due to the economies of scale of producing commodity hardware, compared to the lower efficiency of designing and constructing a small number of custom supercomputers. The primary performance disadvantage is that the various processors and local storage areas do not have high-speed connections. This arrangement is thus well suited to applications in which multiple parallel computations can take place independently, without the need to communicate intermediate results between processors. The high-end scalability of geographically dispersed grids is generally favorable, due to the low need for connectivity between nodes relative to the capacity of the public Internet. There are also some differences in programming and deployment. It can be costly and difficult to write programs so that they can be run in the environment of a supercomputer, which may have a custom operating system, or require the program to address concurrency issues. If a problem can be adequately parallelized, a "thin" layer of "grid" infrastructure can allow conventional, standalone programs to run on multiple machines (but each given a different part of the same problem). This makes it possible to write and debug on a single conventional machine, and eliminates complications due to multiple instances of the same program running in the same shared memory and storage space at the same time 6.6 Examples of Grids - - TeraGrid (www.teragrid.org) ◦ USA distributed terascale facility at 4 sites for open scientific research Information Power Grid (www.ipg.nasa.gov) NASAs high performance computing grid GARUDA Department of Information Technology (India Gov.). It connect 45 institutes in 17 cities in the country at 10/100 Mbps bandwidth. Data grid A data grid is a grid computing system that deals with data — the controlled sharing and management of large amounts of distributed data. These are often, but not always, combined with computational grid computing systems. Current large-scale data grid projects include the Biomedical Informatics Research Network (BIRN), the Southern California Earthquake Center (SCEC), and the Real-time Observatories, Applications, and Data management Network (ROADNet), all of which make use of the SDSC Storage Resource Broker as the underlying data grid technology. These applications require widely distributed access to data by many people in many places. The data grid creates virtual collaborative environments that support distributed but coordinated scientific and engineering research. References: [1] Introduction to Parallel Computing. By Ananth Grama, Anshul Gupta, George Karypis, and Vipin Kumar. [2] Parallel System Interconnections and Communications. By D. Grammatikakies, D. Frank Hsu, and Miro Kraetzl [3] Wikipedia, the free encyclopedia [4] Introduction to Grid Computing with Globus (ibm.com/redbooks) [5] Network and Parallel Computing: Ifip International Conference Npc 2008 Shanghai China Octob. By Jian (EDT)/ Li Cao [6] Network and Parallel Computing . By Jian (EDT) Cao & Minglu (EDT) Li & Min-you (EDT) Wu & Jinjun (EDT) Chen