Coding a Categorical Predictor


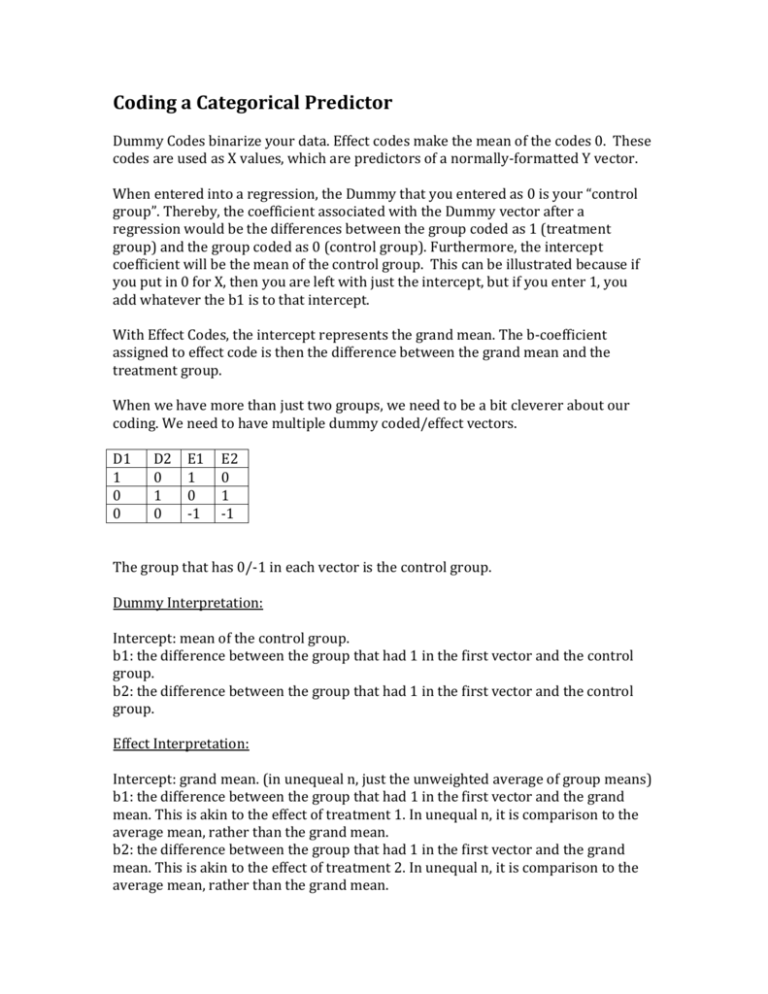

Dummy codes binarize group membership (1 = in the group, 0 = not). Effect codes are chosen so that each coding vector has a mean of 0. Either set of codes serves as the X values (predictors) for an ordinary continuous outcome vector Y.

When dummy codes are entered into a regression, the group coded 0 is the reference ("control") group. The coefficient for the dummy vector is therefore the difference between the group coded 1 (treatment group) and the group coded 0 (control group), and the intercept is the mean of the control group. To see why, plug X = 0 into the equation: only the intercept remains. Plug in X = 1 and you add $b_1$ to that intercept.

With effect codes, the intercept represents the grand mean, and the coefficient for an effect code is the difference between the treatment group mean and the grand mean.

When we have more than two groups, we need to be a bit cleverer about our coding: we need several dummy or effect vectors (one fewer than the number of groups). For three groups:

| Vector | Treatment 1 | Treatment 2 | Control |
|---|---|---|---|
| D1 | 1 | 0 | 0 |
| D2 | 0 | 1 | 0 |
| E1 | 1 | 0 | -1 |
| E2 | 0 | 1 | -1 |

The group that has 0 (dummy) or -1 (effect) in every vector is the control group.

Dummy interpretation:
- Intercept: mean of the control group.
- b1: the difference between the group that has 1 in the first vector and the control group.
- b2: the difference between the group that has 1 in the second vector and the control group.

Effect interpretation:
- Intercept: grand mean (with unequal n, the unweighted average of the group means).
- b1: the difference between the group that has 1 in the first vector and the grand mean. This is akin to the effect of treatment 1. With unequal n, the comparison is to the unweighted mean of the group means rather than to the grand mean.
- b2: the difference between the group that has 1 in the second vector and the grand mean. This is akin to the effect of treatment 2, with the same caveat for unequal n.
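To see the two interpretations side by side, here is a minimal sketch in plain Python (no libraries assumed). The three groups and their scores are hypothetical, chosen so the group means are 7 (treatment 1), 9 (treatment 2), and 2 (control); `ols` is a small helper written for this example, not a library function.

```python
# Dummy vs effect coding for a hypothetical 3-group design.
# Plain Python only; `ols` is a small helper written for this sketch.

def ols(X, y):
    """Least-squares coefficients from the normal equations (X'X) b = X'y,
    solved by Gauss-Jordan elimination with partial pivoting."""
    k = len(X[0])
    a = [[sum(r[i] * r[j] for r in X) for j in range(k)]
         + [sum(r[i] * v for r, v in zip(X, y))] for i in range(k)]
    for c in range(k):
        p = max(range(c, k), key=lambda r: abs(a[r][c]))
        a[c], a[p] = a[p], a[c]
        for r in range(k):
            if r != c:
                f = a[r][c] / a[c][c]
                a[r] = [u - f * w for u, w in zip(a[r], a[c])]
    return [a[i][k] / a[i][i] for i in range(k)]

# Made-up scores: group means are 7 (T1), 9 (T2), and 2 (control).
groups = {"T1": [6, 7, 8], "T2": [8, 9, 10], "C": [1, 2, 3]}

# Codes on (vector 1, vector 2) for each group.
dummy = {"T1": (1, 0), "T2": (0, 1), "C": (0, 0)}
effect = {"T1": (1, 0), "T2": (0, 1), "C": (-1, -1)}

def fit(codes):
    X = [[1, *codes[g]] for g, scores in groups.items() for _ in scores]
    y = [s for scores in groups.values() for s in scores]
    return ols(X, y)

b_dummy = fit(dummy)    # [control mean, T1 - control, T2 - control]
b_effect = fit(effect)  # [grand mean, T1 - grand mean, T2 - grand mean]
print("dummy: ", [round(b, 3) for b in b_dummy])
print("effect:", [round(b, 3) for b in b_effect])
```

With these scores the dummy fit should give 2, 5, 7 (the control mean, then 7 - 2 and 9 - 2) and the effect fit 6, 1, 3 (the grand mean 6, then 7 - 6 and 9 - 6).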
If we think of effect codes in terms of ANOVA, each subject's score is the sum of the grand mean, a treatment effect, and error:

$$Y = b_0 + b_1 E_1 + b_2 E_2 + e$$

This one equation describes any subject's score, and it simplifies depending on the group, because a group's codes zero out the terms that do not apply to it. A member of treatment 1 ($E_1 = 1$, $E_2 = 0$) has $Y = b_0 + b_1 + e$. For the control group, both codes are -1, so

$$Y = b_0 - (b_1 + b_2) + e$$

What if our ns are unequal? For dummy codes, nothing changes. For effect codes, however, the interpretation above requires each vector to have a mean of 0 across subjects, and with unequal n the plain 1/0/-1 codes do not. The codes still work, but the interpretation shifts (the intercept becomes the unweighted mean of the group means). To keep the grand-mean interpretation, weight the effect codes: instead of using -1 for the control group, use values based on the sample sizes of the groups involved. Set up the binary codes as usual, then for the control group use the negative of the ratio of the treatment group's n to the control group's n. Repeat for the second vector. If we had 6 in treatment 1, 5 in treatment 2, and 10 in the control group:

$$\text{control code on } E_1 = -\frac{n_{\text{treatment 1}}}{n_{\text{control}}} = -\frac{6}{10} = -0.6, \qquad \text{control code on } E_2 = -\frac{5}{10} = -0.5$$

We also aim for orthogonality (independence) among our vectors. A zero correlation is necessary but not sufficient for independence: two vectors can be uncorrelated and still be related curvilinearly. Orthogonal vectors can be used to code a priori comparisons among group means. A full set of orthogonal comparisons contains (number of levels - 1) comparisons, which together exhaust the available information. The codes in each contrast vector must sum to 0, and a 0 in a vector means that group is not involved in that contrast. To confirm that two contrasts are orthogonal, multiply their codes group by group and sum the products; the sum must equal 0.
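A minimal sketch of the weighted effect codes, using the sample sizes from the example (6, 5, and 10) with hypothetical scores whose group means are 10, 14, and 5. It fits the same data with weighted and unweighted codes to show where the intercept lands, then runs the sum-of-products orthogonality check on two contrast pairs. The `ols` helper is written for this sketch; the data are made up.

```python
# Weighted effect codes for unequal n (6 / 5 / 10), plus an orthogonality check.

def ols(X, y):
    """Least-squares coefficients from the normal equations (X'X) b = X'y,
    solved by Gauss-Jordan elimination with partial pivoting."""
    k = len(X[0])
    a = [[sum(r[i] * r[j] for r in X) for j in range(k)]
         + [sum(r[i] * v for r, v in zip(X, y))] for i in range(k)]
    for c in range(k):
        p = max(range(c, k), key=lambda r: abs(a[r][c]))
        a[c], a[p] = a[p], a[c]
        for r in range(k):
            if r != c:
                f = a[r][c] / a[c][c]
                a[r] = [u - f * w for u, w in zip(a[r], a[c])]
    return [a[i][k] / a[i][i] for i in range(k)]

groups = {
    "T1": [8, 9, 10, 10, 11, 12],          # n = 6, mean 10
    "T2": [12, 13, 14, 15, 16],            # n = 5, mean 14
    "C": [3, 4, 4, 5, 5, 5, 5, 6, 6, 7],   # n = 10, mean 5
}
n = {g: len(s) for g, s in groups.items()}

# Control gets -n_treatment / n_control on each vector (-0.6 and -0.5 here).
weighted = {"T1": (1, 0), "T2": (0, 1),
            "C": (-n["T1"] / n["C"], -n["T2"] / n["C"])}
unweighted = {"T1": (1, 0), "T2": (0, 1), "C": (-1, -1)}

def fit(codes):
    X = [[1, *codes[g]] for g, s in groups.items() for _ in s]
    y = [v for s in groups.values() for v in s]
    return ols(X, y)

# Each weighted vector sums to 0 across all 21 subjects.
for j in range(2):
    assert abs(sum(n[g] * weighted[g][j] for g in groups)) < 1e-12

b_w = fit(weighted)    # intercept = mean of all 21 scores (180 / 21)
b_u = fit(unweighted)  # intercept = unweighted mean of group means (29 / 3)

def dot(u, v):
    """Sum of products of codes, group by group."""
    return sum(p * q for p, q in zip(u, v))

print(dot((1, 1, -2), (1, -1, 0)))   # 0: these two contrasts are orthogonal
print(dot((1, 0, -1), (0, 1, -1)))   # 1: effect codes E1 and E2 are not
```

Note that the ordinary effect codes E1 and E2 are not orthogonal to each other, which is why a priori comparisons use contrast codes instead.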
In other words, for two vectors, take their dot product (multiply element by element and sum); the result must be 0 for the contrasts to be orthogonal.

When we build contrast codes, each b-coefficient reflects the difference between the groups being contrasted, rescaled by the codes themselves: with equal n and a full set of orthogonal contrast codes $c_g$, $b = \sum_g c_g \bar{Y}_g / \sum_g c_g^2$.

If we are doing specific contrasts AND our n is unequal, we should use the numbers of subjects per group as the codes. For a contrast of both treatments against the control, give each treatment group the control group's n as its code, and give the control group the negative of the summed treatment ns (with 6, 5, and 10 subjects, the codes are 10, 10, and -11). For a contrast between the two treatment groups only, set the control to 0 and give each group the n of the opposite group, making one of them negative so the weighted codes sum to 0. For example, if Democrats have 8 subjects and Republicans have 6, the code for Democrats is 6 and the code for Republicans is -8.

Be sure to incorporate sample size when checking the orthogonality of these contrasts: make a third vector holding the n for each group, multiply all three vectors element by element, and sum. That number should be 0. With this setup, the intercept is still the grand mean, and the contrasts are now between weighted means of groups.

With criterion scaling, we use a single coded vector to represent group membership: each subject's code is simply their group's mean on the DV. This produces the correct $R^2$, but the df are wrong because only one vector was used, so MS and F must be calculated by hand. The intercept and $b_1$ are uninformative because they are always the constants 0 and 1.

Interactions

An interaction means that a predictor accounts for variance in the outcome differently depending on the level of another variable: "the effect of one variable depends on the level of another."
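As a concrete case of an effect that depends on the level of another variable, here is a minimal sketch assuming a hypothetical 2×2 design with dummy-coded factors A and B and made-up scores. The interaction is entered as the product of the two dummies, and the coefficients are read off as a reference-cell mean, simple effects, and a difference in differences.

```python
# Hypothetical 2x2 design: dummies a (factor A) and b (factor B), 3 scores per cell.

def ols(X, y):
    """Least-squares coefficients from the normal equations (X'X) b = X'y,
    solved by Gauss-Jordan elimination with partial pivoting."""
    k = len(X[0])
    m = [[sum(r[i] * r[j] for r in X) for j in range(k)]
         + [sum(r[i] * v for r, v in zip(X, y))] for i in range(k)]
    for c in range(k):
        p = max(range(c, k), key=lambda r: abs(m[r][c]))
        m[c], m[p] = m[p], m[c]
        for r in range(k):
            if r != c:
                f = m[r][c] / m[c][c]
                m[r] = [u - f * w for u, w in zip(m[r], m[c])]
    return [m[i][k] / m[i][i] for i in range(k)]

cells = {            # (a, b): scores
    (0, 0): [4, 5, 6],      # mean 5  (reference cell)
    (1, 0): [7, 8, 9],      # mean 8
    (0, 1): [5, 6, 7],      # mean 6
    (1, 1): [12, 13, 14],   # mean 13
}
X = [[1, a, b, a * b] for (a, b), s in cells.items() for _ in s]
y = [v for s in cells.values() for v in s]
b0, bA, bB, bAB = ols(X, y)

print("intercept (cell a=0, b=0):", round(b0, 3))   # 5
print("A effect when b = 0:", round(bA, 3))         # 8 - 5
print("B effect when a = 0:", round(bB, 3))         # 6 - 5
print("difference in differences:", round(bAB, 3))  # (13 - 6) - (8 - 5)
```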
The most straightforward way to model an interaction is to multiply the two predictor vectors and include all three vectors (both predictors and their product) in a multiple regression.

In a regression with interactions that uses dummy codes, the intercept is the mean of the cell coded 0 on every dummy. The coefficient for the first dummy for factor A (not the interaction term) is the difference between levels of A when everything else in the model is set to 0, that is, within the control (reference) level of the other factor. Interaction terms capture a difference in differences: with two levels of factor A and two levels of factor B, the interaction coefficient tells us how much the B1 vs. B2 difference changes from A1 to A2. With more levels, each additional dummy gets its own interaction term.

If there is no interaction, drop the product term and test the main effects. If there is an interaction, split the data into subsamples, regress the outcome on the treatment variable within each subsample to obtain the appropriate $MS_{\text{regression}}$ for treatment, and test it against the $MS_{\text{residual}}$ from the full model. With a continuous moderator, you can do this at particular levels by subsetting (thresholding the continuous variable to include only certain ranges of values).

You can also compute regions of significance with the Potthoff extension of the Johnson-Neyman procedure, which requires the variance/covariance matrix of the coefficients. It yields two X values: beyond one of them the treatment group scores significantly lower than the control (T < C), and beyond the other significantly higher (T > C).

You can also use a recentering strategy. Subtract a value of interest from every X score, recompute the interaction term from the recentered X, and refit; the coefficient on the dummy variable ($b_2$) then tests the group difference at X = 0, which now represents your level of interest. Centering also matters because it reduces the correlation between X and XZ (X makes up part of XZ). So center X, center Z, and then form the cross-product of the two centered vectors.
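A minimal sketch of why centering helps, assuming a hypothetical balanced 3×3 grid of X and Z values and made-up outcomes. It compares the correlation between X and XZ before and after centering, and checks that centering leaves the interaction coefficient unchanged while the X coefficient becomes the slope of X at the mean of Z. `ols` and `corr` are helpers written for this example.

```python
from math import sqrt

def ols(X, y):
    """Least-squares coefficients from the normal equations (X'X) b = X'y,
    solved by Gauss-Jordan elimination with partial pivoting."""
    k = len(X[0])
    a = [[sum(r[i] * r[j] for r in X) for j in range(k)]
         + [sum(r[i] * v for r, v in zip(X, y))] for i in range(k)]
    for c in range(k):
        p = max(range(c, k), key=lambda r: abs(a[r][c]))
        a[c], a[p] = a[p], a[c]
        for r in range(k):
            if r != c:
                f = a[r][c] / a[c][c]
                a[r] = [u - f * w for u, w in zip(a[r], a[c])]
    return [a[i][k] / a[i][i] for i in range(k)]

def corr(u, v):
    """Pearson correlation of two equal-length lists."""
    mu, mv = sum(u) / len(u), sum(v) / len(v)
    suv = sum((p - mu) * (q - mv) for p, q in zip(u, v))
    suu = sum((p - mu) ** 2 for p in u)
    svv = sum((q - mv) ** 2 for q in v)
    return suv / sqrt(suu * svv)

# Hypothetical predictors (every X paired with every Z) and made-up outcomes.
x = [1, 1, 1, 2, 2, 2, 3, 3, 3]
z = [1, 2, 3, 1, 2, 3, 1, 2, 3]
y = [4.1, 5.0, 6.4, 5.2, 7.1, 8.9, 6.3, 9.0, 11.8]

xz = [p * q for p, q in zip(x, z)]
xc = [p - sum(x) / len(x) for p in x]      # center X
zc = [q - sum(z) / len(z) for q in z]      # center Z
xzc = [p * q for p, q in zip(xc, zc)]      # product of the centered vectors

r_raw = corr(x, xz)           # about 0.68: X and XZ overlap heavily
r_centered = corr(xc, xzc)    # 0 for this balanced grid

b_raw = ols([[1, p, q, pq] for p, q, pq in zip(x, z, xz)], y)
b_cen = ols([[1, p, q, pq] for p, q, pq in zip(xc, zc, xzc)], y)

print("corr(X, XZ) raw:", round(r_raw, 3), " centered:", round(r_centered, 3))
print("interaction b3 raw vs centered:", round(b_raw[3], 4), round(b_cen[3], 4))
# After centering, b1 is the slope of X at the mean of Z (here Z = 2).
print("centered b1 vs raw b1 + 2*b3:", round(b_cen[1], 4), round(b_raw[1] + 2 * b_raw[3], 4))
```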
We interpret a significant interaction as "the expected change in the relationship between Z and Y for a 1-unit increase in X," or equivalently "the expected change in the relationship between X and Y for a 1-unit increase in Z." Centering gives our zero points meaning: "the coefficient of X when Z = 0" says much more when we know that 0 is a particular value we deliberately made 0 by recentering.

The interaction coefficient is exactly the same whether or not we recenter: the highest-order term is invariant to centering (adding or subtracting constants from the predictors).

If we do have an interaction, we want to dissect it to find what drives it: at which values of Z does X change its relationship with Y? Choose particularly meaningful values, such as the mean, ±1 SD, or percentiles of the distribution (e.g., the 90th percentile). Then plug each value into the rearranged regression equation (with all variables centered):

$$\hat{Y} = (b_0 + b_2 Z) + (b_1 + b_3 Z)X$$

Next, obtain the standard error of each simple slope so we can test its significance:

$$s_b = \sqrt{s_{X}^{2} + 2Z\,s_{X,XZ} + Z^{2} s_{XZ}^{2}}$$

Here $s_X^2$ is the variance of the coefficient for X, Z is the value at which you are testing the effect (e.g., +1 SD), $s_{X,XZ}$ is the covariance between the coefficients for X and XZ, and $s_{XZ}^2$ is the variance of the interaction coefficient. Repeat this for every level of Z you are interested in and test each slope with a t-test on n - k - 1 df, where k is the number of predictors. For example:

$$t_{+1SD \text{ on } Z} = \frac{b_1 + b_3 Z}{s_{b \text{ for } +1SD \text{ on } Z}}$$

We can also rearrange these equations the other way to look at levels of X that interest us; which direction to use depends on the research question.
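The simple-slope arithmetic can be sketched as follows, assuming hypothetical estimates from a centered model $\hat{Y} = b_0 + b_1 X + b_2 Z + b_3 XZ$ (n = 100, k = 3, SD of Z = 2). With real data, the variances and covariance come from the coefficient covariance matrix $MS_{\text{residual}}(X'X)^{-1}$; a p-value would additionally need a t-distribution function, which the Python standard library does not provide, so only t is computed here.

```python
from math import sqrt

def simple_slope(b1, b3, var_b1, var_b3, cov_b1_b3, z):
    """Simple slope of X at moderator value z, its standard error, and t."""
    slope = b1 + b3 * z
    se = sqrt(var_b1 + 2 * z * cov_b1_b3 + z ** 2 * var_b3)
    return slope, se, slope / se

# Hypothetical estimates (not from any real study).
b1, b3 = 0.40, 0.15
var_b1, var_b3, cov_b1_b3 = 0.010, 0.0025, 0.001
sd_z = 2.0                  # Z is centered, so its mean is 0
n, k = 100, 3
df = n - k - 1              # 96

results = {}
for label, z in [("-1 SD", -sd_z), ("mean", 0.0), ("+1 SD", sd_z)]:
    results[label] = simple_slope(b1, b3, var_b1, var_b3, cov_b1_b3, z)
    slope, se, t = results[label]
    print(f"{label:>6}: slope={slope:.3f}  se={se:.3f}  t({df})={t:.2f}")
```

To probe levels of X instead, swap the roles: use $b_2$, the variance of $b_2$, and its covariance with $b_3$, and plug in values of X.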
To do so, rearrange the regression equation and the standard error as:

$$\hat{Y} = (b_0 + b_1 X) + (b_2 + b_3 X)Z$$

$$s_b = \sqrt{s_{Z}^{2} + 2X\,s_{Z,XZ} + X^{2} s_{XZ}^{2}}$$

ANCOVA

An ANCOVA aims to remove extraneous error variance for a more powerful test of group (treatment) differences. A bad ANCOVA uses the covariate to adjust away pre-existing group differences on the covariate. ANCOVA assumes that the covariate slope is the same in all treatment groups (no treatment × covariate interaction). Test this interaction, hope it is non-significant, and then proceed to test the main effects.

Don't forget about the adjusted means, where b is the pooled within-group slope of the covariate:

$$\bar{Y}_{j(\text{adj})} = \bar{Y}_j - b(\bar{X}_j - \bar{X})$$

Differences among adjusted means are the same as differences among the intercepts of the groups' parallel regression lines. If you dummy coded the groups, these difference tests are built into the coefficient output.

Curvilinear Regression

Sometimes a straight line just won't do. A polynomial has at most as many bends as its highest-order exponent minus 1. We need to make sure our curves fit our hypothesis and that we are not overfitting. With 20 unique X values, you could fit up to a 19th-order polynomial; at that point the prediction line simply passes through the mean of Y at each X value.

The functions of the variables are nonlinear, but the model is still linear in the coefficients (we are still multiplying each term by a coefficient and adding the terms into one linear equation). A good plan is to start with a higher order than you hypothesize, hope the extra term is non-significant, and return to your hypothesized model (e.g., start with cubic, then reduce to quadratic). A key note is that any variance shared among the terms belongs to the lower-order term, so only the highest-order term in the model can be described as accounting for variance "above and beyond" the other terms.

Interpreting our coefficients is now a bit more complex. We normally think of a coefficient as the change in Y for a one-unit increase in X.
However, with X, X², and X³ in the model, the higher-order terms depend on X. (The intercept is unaffected, because X = 0 makes the higher-order terms 0 as well.) In a quadratic model, the b for X² is known as the acceleration (strictly, the second derivative is $2b_2$): how much is the change changing? If we expect Y to change with X but the magnitude of that change itself changes, the coefficient for X² tells us by how much. The b for X is, technically, the instantaneous linear change at X = 0 (the first derivative at that point), that is, the slope at the start before the acceleration kicks in. We do not interpret lower-order effects in the presence of a significant higher-order effect.

Centering in a curvilinear regression helps interpretation and also reduces collinearity. After centering, b1 gives the predominant direction of the curve (the slope at the mean of X), and b2 gives the concavity (positive for opening upward, negative for opening downward). If b1 is 0, the curve is a U (or inverted U) with its turning point at the mean of X.

If X can take on only a limited number of values, use orthogonal polynomials: variables structured to capture specific curve components (linear, quadratic, and so on). The weights for each orthogonal polynomial sum to 0, and the sum of the products of corresponding weights for any pair of polynomials is 0. These u-point scales, as they are known, show the number of turns in the curve through how many times their series of weights switches between increasing and decreasing.

If the relationship between X and Y is monotonic, we can use the bulging rule to decide how to transform X or Y depending on which quadrant the curve's bulge points toward. This REPLACES the original X or Y variable with a transformed one.
| Quadrant | I | II | III | IV |
|---|---|---|---|---|
| Transform | X up or Y up | Y up or X down | X down or Y down | X up or Y down |

By "up" or "down" we mean raising X or Y to a power further up or down the ladder [3, 2, 1, .5, 0, -.5, -1, -2, -3], where 1 leaves the variable unchanged and 0 stands for the log. Transforming Y may change the error structure, so if nonlinearity is the only issue, just transform X.

So we can either add terms or transform terms. Which to use when? Transformation is appropriate only for monotonic relationships; the polynomial approach works for both monotonic and non-monotonic ones. The polynomial approach also uses additional model degrees of freedom, whereas a transformation does not. Other options are exponential and power functions: for an exponential model we use $e^{b_1 X}$, and for a power model $X^{b_1}$.

Predicted values generated by a trend can be applied to X values not in the original data but within its range (interpolation). Extrapolating to X values outside that range is risky: the trend may reverse at other ranges of X, just as a curve can already bend within the observed data.

Variables that are nonlinearly transformed can also interact with other variables. In a quadratic model with a treatment dummy D,

$$\hat{Y} = b_0 + b_1 X + b_2 X^2 + b_3 D + b_4 XD + b_5 X^2 D$$

b1 is the instantaneous slope (at X = 0) for the control group, b2 is the acceleration in the control group, b3 is the difference in intercepts between control and treatment, b4 (X × dummy) is the difference in instantaneous change between control and treatment, and b5 (quadratic term × dummy) is the difference in quadratic acceleration between control and treatment.

Mediation

A mediator tells us about the mechanism through which the predictor gives rise to its effect on the outcome. The following must hold:
1) The predictor must be related to the mediator (path a).
2) The predictor must be related to the outcome (path c, the total effect).
3) The mediator must be related to the outcome when the predictor is in the model (path b; the predictor's coefficient in that model is c').
4) The predictor's relation to the outcome must be weaker when the mediator is in the model.
$c' < c$

Total effect: $c = c' + ab$. Mediated effect = total effect - direct effect, so mediation $= c - c'$, or mediation $= a \times b$ (with a single mediator in OLS, the two are equal).

We then need to test whether the mediated effect is substantial. First calculate its standard error:

$$s_{\text{med}} = \sqrt{b^2 s_a^2 + a^2 s_b^2}$$

then the z statistic:

$$z = \frac{\text{mediation}}{s_{\text{med}}}$$

We can also bootstrap the mediated effect, since the z-test assumes normality and the sampling distribution of $a \times b$ is often not normal.

If we have multiple mediators, estimate a separate a path for each mediator, then fit the outcome model with the predictor and all mediators together to obtain c' and each mediator's b. Each specific indirect effect is its own $a_i b_i$, and the total indirect effect is their sum.

A phenomenon known as suppression occurs when adding a variable strengthens the predictive validity of another variable by increasing that predictor's coefficient. Classical suppression occurs when the suppressor is related to the predictor but not to the outcome; it suppresses the variance in the predictor that is irrelevant to the outcome. (Verbal ability may serve as a suppressor in the association between a paper-and-pencil test of job skills and a measure of job performance.) We can see suppression if c' > c.

Be wary of common-cause models (where the presumed mediator actually causes both X and Y) when interpreting any hint of "causality" in a mediation model.

Piecewise Models

A continuous piecewise model is similar to an interaction in that the relationship between the predictor and the outcome differs across ranges of the predictor. We add a dummy variable that interacts with X recentered at the split point: the dummy is a conditional, $D_1 = 0$ if $X < c$ and 1 otherwise, multiplied by $(X - c)$, where c is the same value used for recentering.
Say the value we want to recenter at is 10, so the model is $Y = b_0 + b_1 X + b_2 D_1(X - 10) + e$. For X at or above 10, we can rearrange the terms to get

$$Y = (b_0 - 10b_2) + (b_1 + b_2)X + e$$

- $b_0$ is the first segment's intercept.
- $b_1$ is the first segment's slope.
- $b_2$ is the difference between the first and second segments' slopes, so $b_1 + b_2$ is the second segment's slope.

If the interaction between the dummy (the if statement) and the recentered X is significant, you need two slopes.

A discontinuous piecewise model is useful for something like an intervention, where we expect the line to change so much at some point that we need a new line from there on, because a) the intercept changes (if the new line could be extended back to the start of the study, which it can't) and b) the slope changes. We set up the dummy code as a conditional statement based on the time at which we expect the intervention to take effect, recenter the time predictor at that time, and also include the dummy as its own separate term. If 3 is the value in the if statement, the model is $Y = b_0 + b_1 X + b_2 D(X - 3) + b_3 D + e$, and after the intervention the terms rearrange to

$$Y = (b_0 - 3b_2) + (b_1 + b_2)X + b_3 + e$$

- $b_0$ is the pre-intervention intercept, the expected value when the raw X = 0.
- $b_1$ is the pre-intervention slope.
- $b_2$ is the difference between the pre- and post-intervention slopes. If it is significant, we need two separate lines.
- $b_3$ is the immediate intervention effect: the difference between where the original (pre-intervention) line would have continued and where the new, discontinuous line actually is. If it is significant, we need a discontinuous model.

We can add another dummy variable to represent a control group that underwent the same testing at the same times but without the intervention.
We would add terms in which the new group dummy interacts with time, with the if-statement dummy, and with both time and the if-statement dummy together. The terms of interest are the three-way interaction and the interaction between the group dummy and the if-statement dummy: their coefficients capture the difference between the control and intervention groups AFTER the intervention point (the if statement).

In this discontinuous piecewise model, the intercept, X, the interaction between the recentered X and the condition dummy, and the condition dummy itself all describe the control group. The group (intervention) dummy describes the treatment group, as does every term that interacts with it; these show the treatment group's differences from the control.

In summary:
- Continuous piecewise: intercept + X + D1 (if statement) × X (recentered at the if-statement value)
- Discontinuous piecewise: the continuous model + D1

Knots are the locations in a regression where one spline segment ends and the next begins, marking a general shift in the function.
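A minimal sketch of both piecewise models, assuming a hypothetical knot at time 3 and noise-free made-up outcomes generated from $b_0 = 10$, $b_1 = 1$, $b_2 = 2$, and (for the discontinuous case) $b_3 = 4$, so the fit should recover those values exactly. The names `row`, `gen`, and `CUT` are illustrative only.

```python
# Continuous and discontinuous piecewise fits on hypothetical, noise-free data.

def ols(X, y):
    """Least-squares coefficients from the normal equations (X'X) b = X'y,
    solved by Gauss-Jordan elimination with partial pivoting."""
    k = len(X[0])
    a = [[sum(r[i] * r[j] for r in X) for j in range(k)]
         + [sum(r[i] * v for r, v in zip(X, y))] for i in range(k)]
    for c in range(k):
        p = max(range(c, k), key=lambda r: abs(a[r][c]))
        a[c], a[p] = a[p], a[c]
        for r in range(k):
            if r != c:
                f = a[r][c] / a[c][c]
                a[r] = [u - f * w for u, w in zip(a[r], a[c])]
    return [a[i][k] / a[i][i] for i in range(k)]

CUT = 3  # hypothetical knot / intervention time (the "if statement" value)

def row(t, jump):
    d = 1 if t >= CUT else 0        # D = 0 before the knot, 1 from the knot on
    r = [1, t, d * (t - CUT)]       # intercept, X, D * (X recentered at the knot)
    if jump:
        r.append(d)                 # discontinuous model: D as its own term
    return r

def gen(t, b0, b1, b2, b3=0):
    """Outcome implied by the piecewise model, with no error term."""
    d = 1 if t >= CUT else 0
    return b0 + b1 * t + b2 * d * (t - CUT) + b3 * d

times = list(range(7))              # measurement occasions 0..6

# Discontinuous model.
y_jump = [gen(t, 10, 1, 2, 4) for t in times]
b0, b1, b2, b3 = ols([row(t, jump=True) for t in times], y_jump)
post_slope = b1 + b2                  # second segment's slope
post_intercept = b0 - CUT * b2 + b3   # second segment's line at raw X = 0

# Continuous model: same generator without the jump term.
y_cont = [gen(t, 10, 1, 2) for t in times]
bc = ols([row(t, jump=False) for t in times], y_cont)

print("discontinuous b:", [round(v, 3) for v in (b0, b1, b2, b3)])
print("post-knot line: intercept", round(post_intercept, 3), "slope", round(post_slope, 3))
print("continuous b:", [round(v, 3) for v in bc])
```

Here the post-knot line has slope 1 + 2 = 3 and raw-X intercept 10 - 3(2) + 4 = 8, matching the rearranged equation; with real data you would also test whether $b_2$ and $b_3$ differ from 0.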