Categorical Variables in Multiple Regression

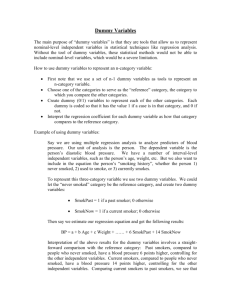

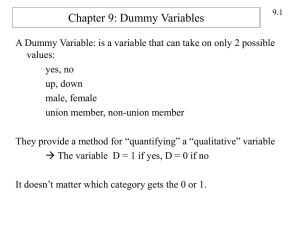

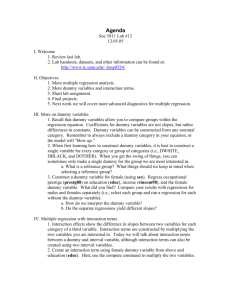

Statistical Analysis: SC504/HS927 Handout Week 22: OLS 3: Multiple Regression and Dummy Coding

So far we have mainly used continuous variables (interval/ratio data) as predictors in our multiple regression models. Multiple regression can also be extended to ‘control’ for (or include) categorical variables. If these are binary variables, with values of 0 and 1 only, they can simply be entered into the model. The coefficient for such a variable is then the shift in the predicted value of Y (a change in the intercept, not in the slope) when the condition is true (X=1), e.g. Y = a + b(1).

For example, if in a hypothetical data set we wanted to introduce variables for age and for sex (coded M=0 and F=1), the equations would take the form:

Y = a + b1(age) + b2(0), i.e. Y = a + b1(age), when respondents were male; and

Y = a + b1(age) + b2(1), i.e. Y = a + b1(age) + b2, when respondents were female.

NB: You have to represent this by writing two equations, one for males and one for females.

However, in many cases the categorical variable is not already coded 0 and 1, and we have to transform it into a ‘dummy variable’, or a series of ‘dummy variables’, that take the values 0 or 1.

Returning to the data set alcohol.sav that we used in week 20, we might introduce sex as a predictor alongside household income to investigate whether they account for a significant amount of variance in the number of units of alcohol consumed. First we transform sex into a binary (0,1) variable to enable correct interpretation. Note that although there are two sexes, you need just one dummy variable: one sex is compared with the other, which serves as the reference and is given the value 0.

Transform → Compute → name the new sex variable under target variable (e.g. sex2) = enter ‘0’ in the numeric expression box → If → sex = 1 (make sure you have clicked ‘include if case satisfies condition’) → Continue → OK

Transform → Compute → sex2 = enter ‘1’ in the numeric expression box → If → sex = 2 (make sure you have clicked ‘include if case satisfies condition’) → Continue → OK

Then run the regression:

Analyze → Regression → Linear → Dependent: insert d7unit → Independent: insert eqvinc and sex2 → Statistics: tick confidence intervals in addition to the default tick for estimates → Continue → OK

Coefficients(a)

| Model 1 | Unstandardized B | Std. Error | Standardized Beta | t | Sig. | 95% CI for B: Lower Bound | 95% CI for B: Upper Bound |
|---|---|---|---|---|---|---|---|
| (Constant) | 9.329 | 1.012 | | 9.222 | .000 | 7.333 | 11.325 |
| annual household income in £000s | .024 | .031 | .056 | .798 | .426 | -.036 | .085 |
| sex2 | -4.805 | .981 | -.344 | -4.896 | .000 | -6.741 | -2.868 |

a. Dependent Variable: units of alcohol drunk

The negative value of the coefficient for sex2 indicates that being female (value=1) reduces the estimated alcohol consumption, by 4.805 units, compared to being male (value=0). Thus our estimate for alcohol (d7unit) will look like this:

Ŷ = constant + b1*eqvinc + b2*sex2 = 9.329 + 0.024*eqvinc - 4.805*sex2

Therefore the estimate for a man will be:

Ŷ = 9.329 + 0.024*eqvinc

And the estimate for a woman will be:

Ŷ = 9.329 + 0.024*eqvinc - 4.805

NB: The net estimates would have ended up the same had we coded 0 for female and 1 for male, although the coefficients would have been different. If you don’t believe me, try it! Recode your sex variable so that Male = 1 and Female = 0 and then work out the regression equation. You will see that the intercept has changed (it becomes 9.329 - 4.805 = 4.524, the value for women at zero income) and that the sex coefficient has changed sign, but all other absolute values have remained the same, resulting in the same predicted Y values.

The value of the coefficient for income is now the effect of each additional unit of income (£1,000) controlling for sex, or holding sex constant (i.e. comparing women with women and men with men).
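The arithmetic behind the two prediction equations, and the claim that reversing the 0/1 coding leaves the predictions unchanged, can be checked with a short sketch. It uses only the coefficients reported in the SPSS output above. The function names and the example income of £20,000 are illustrative assumptions, not part of the handout.

```python
# Coefficients copied from the SPSS coefficients table in the handout.
CONSTANT = 9.329   # intercept: men (sex2 = 0) at zero household income
B_INCOME = 0.024   # change in units per extra 1,000 pounds of household income
B_SEX = -4.805     # shift for women (sex2 = 1) relative to men


def predict_units(eqvinc, sex2):
    """Predicted units of alcohol with sex2 coded 0 = male, 1 = female."""
    return CONSTANT + B_INCOME * eqvinc + B_SEX * sex2


# Equivalent model with the coding reversed (1 = male, 0 = female).
# Women are now the reference group, so the intercept absorbs the shift
# and the sex coefficient changes sign.
CONSTANT_REV = CONSTANT + B_SEX   # 9.329 - 4.805
B_SEX_REV = -B_SEX


def predict_units_reversed(eqvinc, male):
    """Same predictions, with the dummy coded 1 = male, 0 = female."""
    return CONSTANT_REV + B_INCOME * eqvinc + B_SEX_REV * male


income = 20  # hypothetical example: 20,000 pounds (eqvinc is in thousands)
man = predict_units(income, sex2=0)
woman = predict_units(income, sex2=1)
print(f"man: {man:.3f}, woman: {woman:.3f}, difference: {woman - man:.3f}")
print(f"reversed coding, man: {predict_units_reversed(income, male=1):.3f}")
print(f"reversed coding, woman: {predict_units_reversed(income, male=0):.3f}")
```

Whatever income is chosen, the gap between the two predictions stays at the sex coefficient, because the dummy moves the regression line up or down without changing its slope.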
If you are more interested in sex differences, the value of the coefficient for sex is the amount by which being a woman reduces the amount drunk compared to men, controlling for household income. The value of the constant (intercept) is now the predicted value where both income and sex are 0, i.e. it is the value for men at zero household income (even if zero household income is not inherently very meaningful).

If the variable is not binary, we need to turn it into a series of binary ‘dummy’ variables. For example, suppose you want to investigate the effect of housing tenure and you have a variable coded:

1 = owns outright
2 = owns with a mortgage
3 = part owns, part rents
4 = rents
5 = rent free

NB: The coding is arbitrary. You could equally have 5 = owns outright, 3 = owns with a mortgage, 1 = rents, 2 = rent free, 4 = part owns, part rents.

If the categorical variable has 5 categories, create 4 dummy variables, e.g.

d1 = 1 if owns with a mortgage, 0 otherwise
d2 = 1 if part owns, part rents, 0 otherwise
d3 = 1 if rents, 0 otherwise
d4 = 1 if rent free, 0 otherwise

The omitted category is known as the ‘reference’ category. Each case will have a maximum of one dummy coded 1; outright owners will have them all coded 0, and each dummy's coefficient is then interpreted relative to outright owners.

As a rule, when using models with dummy variables you should include all the dummies created for that variable. Even if some coefficients become non-significant once we introduce further variables into a model, we might want to retain them to indicate that we have taken them into account. In many analyses in social science we are interested in the effect of one particular predictor/explanatory variable on the outcome variable but need to control for the effects of other variables.

Using ‘stats sceli.sav’ data

1. Create dummy variables for qual3 (highest qualification) where ‘none’ is your reference category.
You can do this by going to Transform → Compute → name your new dummy variable in the target variable box. You will then have to work out how to assign values so that each dummy variable consists only of 0s and 1s. Then repeat the procedure for your second dummy variable (remember that qual3 has 3 categories, so we need to create 2 new dummy variables).
2. Label your new dummy variables by going to ‘variable view’ and inserting the value labels. From now on, when you create tables, the value labels should appear, telling you which category you are inspecting.
3. Once you have created both dummy variables, create a table of frequencies including ‘qual3’ plus both your new dummy variables. Check the table to ensure you have correctly assigned 0s and 1s within both dummy variables.
4. Now run a regression analysis (Analyze → Regression → Linear) with ‘weekly household income’ as your dependent variable and both your dummy variables as independent variables.
5. Report the R² value, the F test and its significance level, and the individual standardised beta coefficients and their significance levels. What can you conclude from this analysis?
6. Now re-run the analysis, keeping the same predictors and dependent variable, but add ‘hourly wage’ as a new predictor in a second block by clicking the ‘Next’ button and adding the variable into this box. Don’t forget to go to Statistics and tick R² change before running the analysis. Does this new predictor explain a significant amount of variance in the dependent variable after accounting for the variance explained by the two dummy variables? What can you conclude from this analysis?
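The dummy-coding scheme described earlier for housing tenure, which is also the logic needed for qual3 in step 1, can be sketched outside SPSS as follows. This is a minimal illustration rather than the course's own procedure. The function name `dummy_code` and the six sample values are made up for the example, and only the tenure codes and the d1 to d4 definitions come from the handout.

```python
from collections import Counter

# Tenure codes as listed in the handout; code 1 is the reference category.
TENURE_LABELS = {
    1: "owns outright",
    2: "owns with a mortgage",
    3: "part owns, part rents",
    4: "rents",
    5: "rent free",
}
REFERENCE = 1


def dummy_code(tenure):
    """Return d1..d4 for one case: 1 for the matching category, else 0."""
    non_reference = [code for code in sorted(TENURE_LABELS) if code != REFERENCE]
    return {f"d{i}": int(tenure == code)
            for i, code in enumerate(non_reference, start=1)}


# Made-up tenure values for six hypothetical respondents (not real data).
sample = [1, 2, 2, 4, 5, 3]
coded = [dummy_code(t) for t in sample]

for tenure, dummies in zip(sample, coded):
    print(tenure, TENURE_LABELS[tenure], dummies)

# Frequency check, analogous to the frequencies table in step 3:
# the number of 1s in each dummy should equal the count of its category,
# and no case should have more than one dummy coded 1.
category_counts = Counter(sample)
dummy_totals = {name: sum(row[name] for row in coded) for name in coded[0]}
print(dict(category_counts))
print(dummy_totals)
```

Outright owners (the reference category) come out with all four dummies at 0, which is why only four variables are needed to represent five categories.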