Liz Salesky Math 20, Project Paper

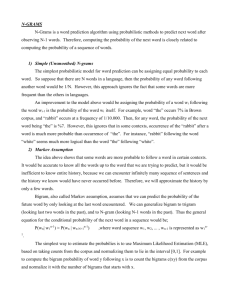

N-gram Language Models

If someone asked you to fill in the blank in the sentence ‘How do we predict what word

comes ____’ you would undoubtedly answer with ‘next.’ Even for a more ambiguous sentence

like ‘I need to study for my Probability ____’ we can immediately limit our responses to

semantically similar words like ‘final,’ ‘exam,’ or ‘test.’ Why is this? Why can we listen to music

and be able to roughly sing along, though we might not have heard the song before? Our

brains think probabilistically, though we may not consciously realize it. This is particularly

obvious when it comes to our language usage. Native speakers of a language seem to have a

built-in grammar; we may not be able to explain why we can use certain words in some

situations, and not others, or why a certain preposition needs to come after a given word or

phrase, but somehow we’ve been trained from a young age to realize how to sequence words.

Searching for the right word, or even correct letter when we’re spelling, we are looking for

words that fit a certain context. A popular application is reading text without vowels; ‘w cn stll

rd wtht ny vwls,’ because after seeing and hearing so many examples of the English language,

we intuitively know the probability of certain vowels in certain locations and can fill in the

gaps. Computational linguistics has found hosts of ways to use statistically organized

sequences of words: machine translation, speech recognition, and text prediction, to just

name a few. Language models comprising sequences of n words, or n-grams, can be used to

describe certain aspects of a corpus, but can also predict new forms based on what has come

before. The n-gram models discussed in this paper can predict the most probable next word

given a history, using simple conditional probability, as well as tell us how predictable a large

text is given a unit length of n words.

At base, to compute probability, we must count something. In computational

linguistics, we are counting words! In talking about n-grams, we’re concerned with the

frequencies of words in a corpus, and as the value of n gets larger, we’re also concerned with

the relative frequencies of words. We want to know, given a history of n-1 words, how

probable it is that the nth is a particular word. This practice has many very prominent

applications, like machine translation, text prediction, spelling correction, and more, which

we will look at later. Let us now look at how we determine the probability of the nth word. At

the heart of the matter is relative frequency – out of all these possible outcomes, what is the

probability that we have outcome x? So, if we have a text, what is the probability of the word

‘the?’ Intuitively, we know that it is just the counts of ‘the’ out of the total number of words in

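the text: $p(\text{the}) = c(\text{the})/N$, where $c(\text{the})$ is the number of times ‘the’ occurs and $N$ is the total number of words. Now we already know how to construct a unigram language model! We are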

looking at the probability of a single word, or gram, within a language model, which is our

corpus, constructed via probability. A unigram model is a 0th order Markov approximation; it

actually involves no context at all, since we are looking at a single word. The probability of any

word is just its relative frequency within the corpus, as the above formula suggests. Looking

at a 7-word sentence like ‘the girl is going to the store’ with a unigram language model, we’re

asking ‘Given this sentence, what is the likelihood of this single word?’ So, we may create a

chart with each of the 7 words, marking their probabilities as the number of times they

appear in the sentence out of the total number of words in the sentence. We see that every

word has a probability of 1/7, then, except for ‘the,’ which has a probability of 2/7. Note that

the total number of words in the sentence is not the total number of unique words, or types,

but rather the number of word occurrences, or tokens. Progressing, though, we want to know

how likely it is, based on the text we’re looking at, that we see both the words ‘the’ and ‘and.’

Note that we’re not yet talking about distributions; we don’t need to see them next to each

other, we just need to see both of them throughout the text. This alone is actually quite useful

in language identification! Seeing patterns of words and word structure can separate related

languages, like Dutch and Afrikaans, even though the two languages are mutually intelligible.

This joint probability is given by

, and we can infer what will happen as we

increase the number of words we’re asking about. Let us put these into context – literally. If

we’re also concerned about the context a word is in, we’re asking ‘what is the probability of

word x given that word y precedes it?’ But, we recognize that this is just conditional

probability! Given our definition above about the probability of x, the definition of conditional probability tells us that this question translates to $p(w_n \mid w_{n-1}) = c(w_{n-1}, w_n)/c(w_{n-1})$.¹ So, we now know how to construct a

bigram language model! A bigram model is a 1st order Markov approximation; as the formula

above shows us, it asks for the probability of a word x, given its predecessor y in the text. This

is the number of times that we see both words, out of the total number of times we see the

predecessor; we’re looking for the percentage of times that x follows y out of all the times that

something follows y. Continuing on, we note that a trigram model could be constructed using $p(w_n \mid w_{n-2}, w_{n-1}) = c(w_{n-2}, w_{n-1}, w_n)/c(w_{n-2}, w_{n-1})$, and so we can generalize our cases. A unigram model gives us $p(X_n \mid X_{n-1}, \ldots, X_1) = p(X_n)$, while a bigram model is represented by $p(X_n \mid X_{n-1}, \ldots, X_1) = p(X_n \mid X_{n-1})$, a trigram by $p(X_n \mid X_{n-1}, \ldots, X_1) = p(X_n \mid X_{n-1}, X_{n-2})$, and so a model that uses a history of $k$ words by $p(X_n \mid X_{n-1}, \ldots, X_1) = p(X_n \mid X_{n-1}, X_{n-2}, \ldots, X_{n-k})$. Each of these is a Markov assumption: the full history is approximated by its most recent words. Before we move on to the significance of such models, let us note the helpful chain rule. In performing the calculations we just listed, as n increases, we aren’t losing information; if we’re looking at a trigram model, we first need the word x to consider the probability of y given x, which in turn needs to occur in order to have z given x and y. So, the probability of a trigram, or three-word sequence, expands to $p(x, y, z) = p(x)\,p(y \mid x)\,p(z \mid x, y)$, and our generalized case is $p(x_1, x_2, \ldots, x_n) = p(x_1)\,p(x_2 \mid x_1)\,p(x_3 \mid x_1, x_2) \cdots p(x_n \mid x_1, x_2, \ldots, x_{n-1})$.
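The counting described above can be sketched in a few lines of Python. This is only an illustration on the 7-word example sentence, not the code of 20project.py; the function names are hypothetical, and one assumption is made that the text does not state: the bigram denominator counts a word only when something follows it, so that the conditional probabilities for each history sum to 1.

```python
from collections import Counter


def unigram_probs(tokens):
    """Relative frequency of each type: c(w) / N."""
    counts = Counter(tokens)
    total = len(tokens)
    return {w: c / total for w, c in counts.items()}


def bigram_probs(tokens):
    """Conditional relative frequency: c(prev, w) / c(prev)."""
    pair_counts = Counter(zip(tokens, tokens[1:]))
    # Assumption: the final token has no successor, so it is not a history.
    history_counts = Counter(tokens[:-1])
    return {(prev, w): c / history_counts[prev]
            for (prev, w), c in pair_counts.items()}


def sequence_prob(words, p1, p2):
    """Chain rule under the bigram approximation: p(x1) * p(x2|x1) * ..."""
    prob = p1.get(words[0], 0.0)
    for prev, w in zip(words, words[1:]):
        prob *= p2.get((prev, w), 0.0)
    return prob


tokens = "the girl is going to the store".split()
p1 = unigram_probs(tokens)
p2 = bigram_probs(tokens)

print(p1["the"])                                  # 2/7
print(p2[("the", "girl")])                        # 0.5
print(sequence_prob(["the", "girl", "is"], p1, p2))  # 2/7 * 1/2 * 1 = 1/7
```

Here ‘the’ is followed once by ‘girl’ and once by ‘store,’ so each continuation gets probability 1/2, while every other word in the sentence has a single continuation with probability 1.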

In using n-grams, we must ask ourselves how much history we should use. What is the

perfect balance between added information and additional computation? So, while it is actually quite useful to know the probability of a certain sequence, we need to ask ourselves what n-grams are actually telling us about a text as a whole. In fact, constructing an n-gram model for

a text can answer many, varied questions! How complex is English? How compressible? How

predictable? How redundant? How efficient? All of these can be answered by the probabilities

of the n-grams a text contains. A particular measure for this is entropy. Entropy is broadly, as Merriam-Webster puts it, ‘the degree of disorder or uncertainty in a system.’² Here, our

system is the n-gram model trained on our text. Any text is going to be uncertain to some

degree – there isn’t an infinite history, with all words distributed as they are in grammatical

speech around the world; that would be impossible. But, looking at the entropy of a text for

different values of n in n-gram models can allow us to formulate hypotheses about the

predictability and uniformity of a text, and comparing these different entropy values can

1 Manning, Chris, and Hinrich Schütze. Foundations of Statistical Natural Language Processing. Cambridge, MA: MIT Press, May 1999.

2 http://www.merriam-webster.com/dictionary/entropy

further those intuitions. So, what is entropy in language and how do we calculate it? Entropy

is ‘a convenient mathematical way to estimate the uncertainty, or difficulty of making

predictions, under some probabilistic model.’³ It’s telling us how hard it is to predict the word that comes next. Its formula is $H(p(X)) = -\sum_i p(X = x_i)\log_2 p(X = x_i)$.⁴ Looking at this closely, we notice that it is a negative weighted average of the log probabilities of our words – the

negative is there because the log gives us a negative value. The log inside the sum shows us

that the entropy of a text actually reflects the text’s uncertainty in the number of bits it would

take to store the text, if we did so in the most efficient way possible (by grouping sequences of

words of length n that occur frequently, so that they only need to be stored once). So, entropy

is also a lower bound on the number of bits it would take to encode information. Let’s look at

a unigram model. The entropy of a unigram language model is calculated by summing the

weighted probability of each type occurring in the document, i.e. $-\sum_i p(x_i)\log_2 p(x_i)$. The maximum possible entropy occurs when the possibilities are uniformly distributed, as we saw when we said the maximum value of p*q in calculating confidence intervals was ¼. So, for a unigram model, this means that each type occurs the same number of times, not dependent on order. A unigram model, as we’ve said, does not use context. Even with entropy, we see that the value is independent of order; we might as well have just had a list with the same words. Taking our 7-word example sentence from above, with the same probabilities, we sum over its six types to get $H(p(X)) = -[p(x_1)\log_2 p(x_1) + p(x_2)\log_2 p(x_2) + \ldots + p(x_6)\log_2 p(x_6)]$, or $H(p(X)) = -[5 \cdot (0.1429 \log_2 0.1429) + 0.2857 \log_2 0.2857]$, or approximately 2.52 bits. The entropy of a

bigram model is a little trickier; we’re weighting the number of times that a word x occurs

first in a bigram out of all the words in the text, but then we have the weighted probabilities of

each of the ‘next words.’ So, if our first set of bigrams were ‘the’ followed by ‘dursleys’ ½ of

the time, ‘cupboard’ ¼ of the time, and ‘stairs’ the other ¼, we would need to know as well

that ‘the_x’ occurs 16 times in a text with 40 words, and then we would be able to begin to

compute the entropy as such: $H(p(X)) = -[\tfrac{16}{40}(\tfrac{1}{2}\log_2 \tfrac{1}{2} + \tfrac{1}{4}\log_2 \tfrac{1}{4} + \tfrac{1}{4}\log_2 \tfrac{1}{4}) + \ldots]$, with one such term for every word that begins a bigram; in general, $H = -\sum_x p(x) \sum_y p(y \mid x)\log_2 p(y \mid x)$. We’re taking a weighted average of the weighted averages of the bits to encode each bigram. Discovering the number of bits necessary to encode all bigrams that start with a certain word has replaced the number of bits necessary to encode a unigram, as we might have suspected. To put these calculations into context, let us look at the results for a larger text, computed in Python.
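Both entropy calculations can be sketched as follows. This is a hedged illustration rather than the program used for the results below: the function names are hypothetical, and the weight on each history is taken here as its share of all bigrams in the text, which is an assumption about how the weighting is normalized.

```python
import math
from collections import Counter, defaultdict


def unigram_entropy(tokens):
    """H = -sum over types of p(x) * log2 p(x)."""
    total = len(tokens)
    return -sum((c / total) * math.log2(c / total)
                for c in Counter(tokens).values())


def bigram_entropy(tokens):
    """Weighted average, over histories, of the next-word entropy."""
    followers = defaultdict(Counter)
    for prev, word in zip(tokens, tokens[1:]):
        followers[prev][word] += 1
    n_bigrams = len(tokens) - 1
    entropy = 0.0
    for nexts in followers.values():
        n_prev = sum(nexts.values())
        weight = n_prev / n_bigrams  # assumed normalization: share of all bigrams
        inner = -sum((c / n_prev) * math.log2(c / n_prev)
                     for c in nexts.values())
        entropy += weight * inner
    return entropy


tokens = "the girl is going to the store".split()
print(round(unigram_entropy(tokens), 4))  # 2.5216
print(round(bigram_entropy(tokens), 4))   # 0.3333
```

In the example sentence only ‘the’ has more than one possible successor, so it alone contributes to the bigram entropy: 2 of the 6 bigrams start with ‘the,’ and choosing between its two equally likely successors costs 1 bit.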

The sample text that I used for my computations was the second chapter of the first

Harry Potter book, Harry Potter and the Sorcerer’s Stone, file sschap2.txt. It is approximately

18 pages in the book, depending on the edition. It contains 3821 words, 972 of which are

unique. When speaking about the English language, we know that some words will occur

more often than others, but until we sit down and see numbers like these two, which are

vastly different, we don’t realize quite how large the disparity is. Of the 972 unique words in

this text, only 393 occur more than once, meaning 579 words occur only once out of 3821

total words in this chapter. They account for only 15% of the text! As well, only 50 words

occur more than 10 times, and only 26 occur more than 20 times. What does this mean for our

n-grams? The vast majority of the words we see are going to be repeated at some point in the

chapter; so, if we are looking at bigrams or larger models, there are going to be multiple

options to predict after a given word. Every such option increases the entropy, or uncertainty,

3 Irvine, Ann. "Information Theory." Hanover, NH. 18 Oct. 2011. Lecture.

4 Shannon, C. E. "Prediction and Entropy of Printed English." Bell System Technical Journal 30.1 (1951): 50-64.

of the text. This might lead one to conjecture that the bigram model will have a higher entropy

than the unigram model, which does not use context, but really, each unigram in a unigram

model is just as likely to precede any other unigram! Conditioning on the preceding word makes the bigram model much less uncertain, which is reflected in the entropies of the unigram and bigram models for Harry Potter, which are 8.131 bits and 2.830 bits, respectively. Before describing the models more

thoroughly, though, let us note that context does not just constitute the history before a word,

but also the location of a word in a sentence. Certain words are bound to be more likely at the

beginnings or ends of sentences than others, and similarly some words are more likely to

occur around punctuation, which marks boundaries. For this project, I stripped and did not

make use of punctuation, but did choose to note the beginnings of sentences by inserting the

word BOS at the beginnings of sentences. In fact, if we look at the top six most frequent words,

we see that they are ‘BOS,’ ‘the,’ ‘a,’ ‘he,’ ‘and,’ and ‘Harry.’ These occur 196, 180, 93, 90, 84 and

73 times in this text, respectively. Remembering that entropy is a weighted average, we notice

that words with higher frequencies will be weighted more heavily, because they occur more

often in the text. This means that their high entropies, which arise because many different bigrams start with the same word, will influence the text’s entropy more than the 579 words that occur only once; the word following each of those has a probability of 1, so their contribution may be overshadowed. This trend ties into my choice to use only unigram and bigram models in my

program; first, the calculation of entropy for a bigram model already takes several seconds,

and secondly, part of the pull of these models is that they can be used to make predictions! In

my program, because bigrams are the largest n-gram stored, and the history used to calculate

the probability of a bigram is a unigram, the prediction in the program can only take in a

single word, like ‘aunt,’ which predicts ‘petunia.’ To ask what would come after an n-gram

would be only to ask what would come after the last word. However, we can ask a model to

evaluate the probability of a sentence that it hasn’t seen yet; an n-gram model will break the

sentence into sequences of length n, but the probability of a sentence given an n-gram model

will be 0 if any of the n-grams that make up the sentence are not in the model. Lambda-smoothing can be used to rectify this, as can using a lower order model or more data,⁵ but for

this project, I wanted to keep calculations a little more straightforward, since this is about the

probability rather than the linguistics.
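The prediction and sentence-probability steps described above can be sketched as below. This is an illustration under stated assumptions, not the code of 20project.py: the toy text is invented for the example and is not taken from the chapter, the function names and the regular-expression tokenizer are hypothetical, and the optional `lam` parameter shows add-lambda smoothing, which the project itself did not use.

```python
import re
from collections import Counter, defaultdict


def tokenize(text):
    """Lowercase, strip punctuation, and mark each sentence start with BOS."""
    tokens = []
    for sentence in re.split(r"[.!?]+", text):
        words = re.findall(r"[a-z']+", sentence.lower())
        if words:
            tokens.append("BOS")
            tokens.extend(words)
    return tokens


def train_bigrams(tokens):
    """Map each history word to a Counter of the words that follow it."""
    followers = defaultdict(Counter)
    for prev, word in zip(tokens, tokens[1:]):
        followers[prev][word] += 1
    return followers


def predict_next(followers, word):
    """Most frequent follower of `word`, or None if it was never a history."""
    if word not in followers:
        return None
    return followers[word].most_common(1)[0][0]


def sentence_prob(followers, words, lam=0.0, vocab_size=0):
    """Product of bigram probabilities; lam > 0 applies add-lambda smoothing."""
    prob = 1.0
    for prev, word in zip(["BOS"] + words, words):
        nexts = followers.get(prev, Counter())
        denom = sum(nexts.values()) + lam * vocab_size
        if denom == 0:
            return 0.0
        prob *= (nexts[word] + lam) / denom
    return prob


# Invented toy text, used only to exercise the functions.
text = "Aunt Petunia was awake. Harry saw Aunt Petunia. Aunt Marge was not there."
tokens = tokenize(text)
followers = train_bigrams(tokens)

print(predict_next(followers, "aunt"))                    # petunia
print(sentence_prob(followers, ["harry", "was", "awake"]))  # 0.0, 'harry was' is unseen
print(sentence_prob(followers, ["harry", "was", "awake"],
                    lam=0.1, vocab_size=len(set(tokens))) > 0)  # True
```

With `lam=0`, a single unseen bigram drives the whole product to zero, which is the behavior described above; a small positive `lam` reserves some probability for unseen bigrams at the cost of slightly lowering the probabilities of the bigrams that were observed.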

One clear and seemingly ever-present application of n-gram modeling is text

prediction. The text prediction or T9 option on a lot of phones is really just modeled on n-gram probabilities! When you’re typing with certain keys, the algorithm is suggesting the

most likely combination of the characters you’ve pressed given the previous word typed, or

possibly multiple words typed. My phone, for example, uses a bigram model – I can tell

because it currently suggests ‘nog’ as the next word no matter what word I enter before ‘egg.’

In a similar vein, n-grams are used in spelling correction. A lot of the time, one can catch

spelling errors by having an algorithm consult a stored dictionary that will notice when a

word has been typed that it doesn’t recognize. For example, getting a red underline in

Microsoft Word is because an entered word hasn’t been entered into Word’s own particular

built-in dictionary yet. However, perhaps a more efficient (storage-wise, at the very least)

method is to use n-grams! Context-sensitive spelling error correction, though, uses probability to detect mistakes and suggest alternatives based on the previous words;

5 Manning, Chris, and Hinrich Schütze. Foundations of Statistical Natural Language Processing. Cambridge, MA: MIT Press, May 1999.

this involves distinguishing between ‘he’s sitting over their,’ ‘he’s sitting over they’re,’ and ‘he’s

sitting over there.’ Another similar application is one of the most groundbreaking aspects of

the computational linguistics field: statistical machine translation. Google Translate, for

example, is one of the best statistical machine translators out there. N-grams here are perhaps

most obviously useful in providing not just good matches to the English text or the foreign

language text, but both! This means being able to distinguish word order, prepositions, affixes

and various other language features to be able to say that ‘TV in Jon appeared’ and ‘TV on Jon

appeared’ are much less likely than ‘Jon appeared on TV.’ N-grams are also useful in

augmentative communication systems for the disabled, speech recognition, and other

language processes with noisy data in which words’ context can be used to determine the

most likely intended input, as well as more fundamental natural language processing

applications like part-of-speech tagging and language generation.⁶
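To make the keypad prediction idea concrete, here is a minimal sketch of how a bigram model might rank the words that share one key sequence. It is not how any particular phone implements T9; the toy corpus is invented, the function names are hypothetical, and the tie-breaking by overall frequency is an assumption.

```python
from collections import Counter, defaultdict

# Standard phone keypad layout.
KEYPAD = {"2": "abc", "3": "def", "4": "ghi", "5": "jkl",
          "6": "mno", "7": "pqrs", "8": "tuv", "9": "wxyz"}
LETTER_TO_DIGIT = {ch: d for d, letters in KEYPAD.items() for ch in letters}


def to_digits(word):
    """Key sequence that types `word` (lowercase letters only)."""
    return "".join(LETTER_TO_DIGIT[ch] for ch in word)


def suggest(tokens, prev_word, digits):
    """Rank known words matching `digits` by how often they follow prev_word."""
    unigrams = Counter(tokens)
    followers = defaultdict(Counter)
    for prev, word in zip(tokens, tokens[1:]):
        followers[prev][word] += 1
    candidates = [w for w in unigrams if to_digits(w) == digits]
    # Bigram count first; overall frequency breaks ties (an assumption).
    return sorted(candidates,
                  key=lambda w: (followers[prev_word][w], unigrams[w]),
                  reverse=True)


# Invented toy corpus: 'gone', 'home', and 'good' all share the keys 4663.
tokens = "he has gone home she is at home that is good".split()
print(suggest(tokens, "has", "4663")[0])  # gone
print(suggest(tokens, "at", "4663")[0])   # home
```

The same three candidates are ranked differently depending on the previous word, which is exactly the context sensitivity that a dictionary lookup alone cannot provide.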

The famous linguist Noam Chomsky once said that “it must be recognized that the

notion ‘probability of a sentence’ is an entirely useless one, under any known interpretation of

this term.”⁷ Chomsky almost certainly over-generalized, but as we have come to see, n-gram

models are just approximations of language, because we can’t possibly feed them infinite

vocabulary and grammar. This is not to demean their use, though, since people compose

language in much the same way models do – probabilistically. This means that not only can

probability be used to describe language, but it can also be used to tell us something about

language itself! N-gram models can be used to intimate patterns about a single language or

cross-linguistically which it would be much harder to realize without such programs.

Language is systematic, but not completely. There is no universal theory of language, but we

can’t get from any word to any other word, necessarily, depending on our position within a

sentence. Language is not ergodic. Probability and statistics can do much more than just

describe the rules that regulate these changes. The program 20project.py can accurately tell

us that ‘petunia’ should follow ‘aunt,’ or that ‘had’ will most likely follow ‘harry.’ Different

values of n give us very different models, though. A unigram language model will just as soon

suggest ‘the’ follow ‘the’ because of its frequency, whereas a bigram model might predict that

‘dursleys’ comes next. I think that n-grams are a really fascinating application of conditional

probability because of this; they use something relatively simple to describe a very

complex entity, language, in a very approachable way and allow us to learn new things, like

the uncertainty of a text, that we might not have been able to see as easily otherwise.

6 Language generation – This program generates text in multiple languages based on n-gram character and word based models trained on prominent novels and other large texts. http://johno.jsmf.net/knowhow/n-grams/index.php

7 Jurafsky, Dan, and James H. Martin. Speech and Language Processing: an Introduction to Natural Language Processing, Computational Linguistics, and Speech Recognition. Upper Saddle River, NJ: Pearson Prentice Hall, 2008. Print. 57.

The entropy of the unigram model for the file is 8.131 bits.

The entropy of the bigram model for the file is: 2.830 bits.

The 10 most frequent unigrams and their frequencies in this text are:

BOS 196

(blank) 186

the 180

a 93

he 90

and 84

harry 73

was 70

had 65

to 63

The number of tokens (words) in this text is 3821 and the number of types (unique words) is

972. Of those 972 types, only 393 occur more than once, only 50 occur more than 10 times,

and only 26 occur more than 20 times!

The 10 most frequent bigrams and their frequencies in this text are:

BOS_ 93

aunt_petunia 22

on_the 17

in_the 17

uncle_vernon 16

it_was 14

the_dursleys 13

he_had 13

of_the 12

had_been 12

The word entered was aunt, and the word the bigram model predicts will come next is

petunia.
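Summary figures like the token, type, and frequency counts reported above could be gathered with a short helper along these lines. This is a sketch only; the function name and the returned dictionary keys are hypothetical, and it is demonstrated on the 7-word example sentence rather than on sschap2.txt.

```python
from collections import Counter


def corpus_stats(tokens, top_k=10):
    """Token and type counts, single-occurrence types, and the top_k types."""
    counts = Counter(tokens)
    return {
        "tokens": len(tokens),
        "types": len(counts),
        "occur_once": sum(1 for c in counts.values() if c == 1),
        "occur_more_than_once": sum(1 for c in counts.values() if c > 1),
        "top": counts.most_common(top_k),
    }


stats = corpus_stats("the girl is going to the store".split(), top_k=2)
print(stats["tokens"], stats["types"], stats["occur_once"])  # 7 6 5
print(stats["top"][0])                                       # ('the', 2)
```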