paperAzadeh_MM2

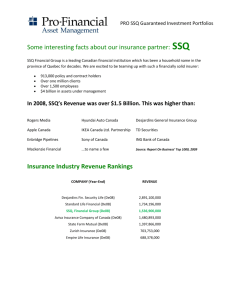

advertisement

The resolution of rotational ambiguity for threecomponent systems Azadeh, Hamid, Marcel Introduction The model-free analysis or soft-modelling of multivariate data is a core area of research in chemometrics [1]. Its principles are astonishingly simple: the analysis is based on the decomposition of a matrix of data into the product of two smaller matrices which are interpretable in chemical terms. Generally, one resulting matrix is related to the concentrations of the species involved in the process and the other matrix contains their response vectors. Typically, but not exclusively, we deal with multivariate spectroscopic data of a series of solutions, the spectra are written as rows of a data matrix D of dimensions nspectra nwavelengths. According to Beer-Lambert’s law D can be written as a product of two matrices, eq. (1), C containing as columns the concentrations profiles of the species, giving it the dimensions nspectra nspecies, and A containing as rows the molar absorptivities for the species as the measured wavelengths, giving it the dimensions nspecies nwavelengths. D = CA (1) Model-free analysis of such a data matrix D consists of decomposing the matrix into the product of the two meaningful matrices C and A. Equation (1) really is a system of nspectra nwavelengths equations, one equation for each element in D. The unknowns are the nspectra nspecies elements of C and the nspecies nwavelengths elements of A. The number of equations is generally much larger than the number of unknowns, thus there is no equality but there must be a solution where the product CA represents D as well as possible, as defined by a minimal sum of squares of the residuals R, the matrix of differences between D and its decomposition CA. R = D - CA; ssq Rij (2) A very prominent decomposition of D is the Singular Value Decomposition, SVD, equation (3). Here the columns of U are the lefthand eigenvectors, the rows of V are the right-had eigenvectors and S is a diagonal matrix of singular values. For any number of columns of U and S and rows of S and V, the decomposition is ideal according to eq. (2), i.e. SVD results in the minimal ssq for any chosen number of rows and columns, 1 D = USV (3) SVD is usually unique (unless two or more singular values are accidentally equal), but unfortunately this decomposition is chemically not useful, the matrices U and V are abstract eigenvectors and contain no directly chemically meaningful information. However, the matrices U and V are related to the useful matrices C and A. After having reduced the columns/rows of U, S and V to the correct number of species, nspecies, we can combine equations (1) and (3) D = CA = USV (4) and introduce a non-singular square transformation matrix T: CA = UST -1TV with C = UST -1 (5) and A TV The matrix T is often referred to a ‘rotation’ matrix, this is not correct as it is not strictly a rotation, the expression transformation matrix is clearly preferable (later). The task now can be formulated at finding a matrix T for which the products TV and UST-1 result in matrices A and C which are chemically meaningful and which minimises the sum of squares as defined in eq. (2). It is important to note that any non-singular matrix T will result in the same ssq as the original SVD. However, the computed matrices C and A will generally not be chemically meaningful, i.e. they may contain elements or combination of elements that are not possible. There are natural restrictions that always apply to the matrices C and A, most prominent is the restrictions that all elements have to be positive (≥0). Other restrictions apply for certain cases, e.g. concentration profiles in chromatography are always unimodal, in certain cases model based restrictions to some concentrations can be imposed [Combining hardand soft-modelling to solve kinetic problems, Anna de Juan, Marcel Maeder, Manuel Martínez and Romà Tauler, Chemometrics and Intelligent Laboratory Systems, 2000, 54, 123-141. Application of a novel combination of hard- and soft-modeling for equilibrium systems to the quantitative analysis of pH modulated mixture samples, Josef Diewok, Anna de Juan, Marcel Maeder, Romà Tauler, Bernhard Lendl, Anal. Chem. 2003, 75, 641-647]. Generally, there are no restrictions in addition to non-negativity that apply to the spectra in the matrix A. Under certain, well defined conditions [2] the restrictions result in a unique solution. But, whatever the restrictions that can be applied, there is no guarantee for a unique solution; as a matter of fact nonunique solutions are probably more common. If there is a unique solution there are two families of algorithms that will find this solution: (a) the well-known alternating least squares, ALS, algorithm which has been introduces a long time ago [3] and which has found numerous applications [4-8]; and (b) resolving factor analysis, 2 RFA, which treats the elements of T as nonlinear parameters and uses the Newton-Gauss algorithm to minimise ssq[9]. Both algorithms in principle can deal with any number of components, however practical aspects limit the number to 3-5 components. If the solution is not unique, there will always be a range of feasible solutions within which the conditions that are imposed on the elements of C and A are met and all of which result in the minimal ssq. The ranges of feasible solutions for C and A are directly related to ranges of feasible elements in the matrix T. For 2-component systems there is a simple and intuitive method published in 1971 [10] which defines the ranges of feasible solutions. This is one of the earliest ‘chemometrics’ publications! Considering the simplicity of solution for the 2-component system it is not surprising that there are a good number of publications that attempt at extending the idea to three or more components. [11-14]. However, the 3-component problem is dramatically more complex and is presently intensely discussed [Calculation and Meaning of Feasible Band Boundaries in Multivariate Curve Resolution of a Two-Component System, Hamid Abdollahi, Marcel Maeder and Rom Tauler, Anal. Chem., 2009, 81 (6), pp 2115–2122. refs 14 and 16] In a recent publication, we have re-formulated the 2-component problem [15], defining ssq as a function of the elements of T. Due to the multiplicative ambiguity (see later) a normalised matrix T is defined by only 2 elements and is possible to represent the quality of the resolution as a function of these 2 elements, resulting in illustrative error surfaces. The ranges of feasible solutions are then visible as a flat, extended minima, their borders represent the ranges. It is an alternative to the Lawton-Sylvestre method at arriving at the ranges of feasible solutions. In this contribution we will expand the basic idea and apply it to 3component systems. First, a sum of squares can be defined as a function of the values of the transformation matrix T. Eq. (6) summarises the procedure: for a given T the matrices A and C are computed, to both matrices the restrictions are applied (e.g. setting negative values to zero), resulting in the matrices A’ and C’, next the product C’A’ is subtracted from the measurement D to result in a matrix R of residuals and thus sum of squares is computed over all elements of R. 1) A = TV, apply restsrictions A' 2) C = UST -1, apply restsrictions C' 3) R = D - C'A' 4) ssq = (6) R (i , j ) For a three component system the matrix T is a 33 matrix, thus at first sight it appears the problem of finding the large matrices C and A is 3 reduced to finding only 9 numbers! It is even better: due to the multiplicative ambiguity each concentration profile can be multiplied by any number (0), and the corresponding spectrum in A is multiplied with its inverse. Further, multiplying a spectrum in A with a factor is equivalent to the multiplication of the corresponding row of T with the same number. For each spectrum or for each row of T we can use any number, for this paper we multiply each row with the inverse of its first element resulting in a matrix T which has a first column of 1s. Thus T is defined by only 6 elements! This normalization can also be considered as the projection of each row vector of T through the origin on a plane defined by the condition that the first coordinate is equal to one. As a result we can reduce the dimension from 3 to 2 allowing visualisation of the rows of T and thus the spectra they represent in a 2D plane. 1 t12 t13 T 1 t22 t23 1 t32 t33 (7) The obvious initial idea is to expand the approach developed for the 2component system [15] by scanning a range of values for each of the 6 unknown elements. However this is out of questions, if the range of each element comprises 100 values, the total number of required calculations is 1006 or 1012! An alternative and efficient method is presented in this contribution Algorithms The error surface The idea is to define the sum of squares, defining ssq as in eq. (6), as a function of only 2 parameters while independently computing the remaining 4 parameters. E.g. we chose the elements t12 and t13 of the first row. The remaining 4 unknown elements of T are fitted using a standard simplex algorithm (fminsearch in Matlab). If the pair t12 and t13 is part of a range of feasible solutions, the fitting of the others will result in the minimal ssq. If the initial pair is outside the range, the result of the fit will be a larger ssq. Using a Newton-Gauss algorithm fir the fitting would be faster but if the parameters are within a feasible range, the numerical derivatives of the residuals with respect to the parameters is zero and the algorithm crashes. The Newton-Gauss algorithm only works only for unique solutions [9]. A scan of t12 and t13 over an appropriate range and plotting the resulting ssq as a function of t12 and t13 results in a 3-D graph as represented in Fig 1. 4 Figure 1: A 3-dimensional representation of ssq as a function of the elements t12 and t13; three Areas of Feasible Solutions are revealed [Azadeh, we need to improve this Figure, one thing is to fix the bathtub as the level defined as 2 x ssq(SVD). Probably also better colors or contour lines. Can you give me the data so I can play] There are several observations to be made: in Figure 1 three independent areas with minimal ssq appear. This is a consequence of the fact that the order of the 3 components in C and A is not defined, all 3 species can be in the first column of C and row of A and thus result in such a minimal area in the figure. As long the range of t12 and t13 is wide enough, the three minima for all three components are covered. We will call these Areas of Feasible Solutions, or AFS. The shapes of the AFSs are irregular, in contrast to the situation with 2component systems where the equivalent AFSs are rectangular and orthogonal to the axes. This is explained by the fact that the inversion of T, as in eq. (5), for the 3-component case is relatively complex and all elements of the 33 matrix T are mixed. The inversion of the 22 matrix T for the 2-component system does not mix the elements. The error surface reveals the same information as the ‘Borgen plots’ as developed in [11, 13, 14, 16]. The crucial difference is that the algorithm can deal with real data with any amount of random noise, the Borgen plots are essentially only defined for perfect, noise-free data. Further additional restrictions such as unimodality are straightforwardly implemented here but not in Borgen plots. For each pair (t12,t13) the four other elements of T have to be determined. This is an iterative calculation and as with any iterative procedure the quality of the initial guesses for the parameters is crucially important for fast computation. Further, careful inspection reveals that the ssq in the AFS is not perfectly constant, mainly due to 5 numerical problems resulting from limited precision termination criteria of the iterative computations. In the algorithm used to produce Figure 1, the result of the previous fit is used as initial guess for the next fit. The computation times for 100x100 range for t12 and t13 is approx 10 min on a typical PC in 2009. Determination of Area of Feasible Solutions, AFS The calculation of complete error surfaces as in Figure 1 is possible but time consuming. A substantial amount of irrelevant information is calculated as only the border of the AFS is relevant, points inside and outside the AFS do not convey any additional useful information. In order to compute the border of the AFS we devised the following algorithm, which, while still depending on reasonable initial guesses for the process, these guesses do not need to be close to the optimum and thus the complete procedure is surprisingly robust and fast. a) Determine the minimal ssq-level for the AFSs. As mentioned before the ssq is not uniform throughout the AFS and as a rule of thumb we define the relevant ssqAFS as twice the ssq calculated for 3 eigenvectors, see (6). While this is a conservative estimate but the increase of the size of the AFS is small and as a result to overestimation of the range of solutions is small as well. b) Decide on size of simplex and run a basic simplex algorithm, i.e. constant size. Naturally, a smaller simplex will results in improved resolution and increased computation time. An acceptable compromise is required. c) Start with initial guess for t12 and t13 and define a simplex of the appropriate size. There are three kinds of position for the starting simplex: it can sit on the border of the AFS, identified by one or two vertices with minimal ssqAFS while two or one has a larger ssq; The simplex can be inside the AFS, recognised by all vertices with minimal ssqAFS; or the simplex can be outside, recognised by three vertices with large and usually different ssq. d) The starting simplex is outside the AFS: continue down towards AFS using the normal simplex algorithm until border of AFS is reached, as defined in point c) above. e) The starting simplex is inside the AFS: toggle simplex in any constant direction till border of AFS is reached f) The simplex is on border of the AFS, either starting there or reached form inside or outside: Toggle simplex along the border. The principle of the algorithm is represented in Figure 2. The blue curve represents the border of the AFS. We start with the triangle on the left. For any triangle straddling the border there are 2 vertices on one side and one vertex on the other side of the border. For the straddling starting triangle there are 2 vertices inside the AFS and one outside. Reflect one of the inside vertices at the side opposite of the triangle; in the example this is the top 6 vertex. (if the other vertex is reflected the path around the AFS is in the opposite direction but otherwise identical.) The reflection results in the next triangle, just below. The rule for the second and all following triangle is to reflect the old vertex of the pair on one side, in the example the vertex to the left. The process is continued. We discuss the process with a later triangle indicated in grey, the sequence of the calculation of its vertices is indicated by the numbers. As vertex 1 and 3 are on the same side, vertex 1 (the ‘old’ one) is reflected, resulting in the new triangle indicated by the dashed lines. g) The AFS is circumscribed once the first simplex has been reached again. As each AFS is an enclosed area, the continuation of the toggling simplexes will result in the complete circumscription of the border. start 3 1 2 Figure 2: The blue line is the border of the AFS; the triangles are toggled along the border, as described in the text. The AFS determined in this way represents the range of feasible solutions for the spectrum of one component. The other two components need to be determined in two analogous computations. A vertex inside the AFS defines the two elements t12 and t13 of the transformation matrix T. However, the other 4 elements of T have been calculated and each of the two rows will represent a feasible solution for the spectrum of the two remaining components. The situation is represented in Figure 3 7 AFS 1 AFS 2 AFS 3 Figure 3: The triangle represents one complete solution, i.e. transformation matrix T. The top vertex defines the border of one AFS, the other vertices are somewhere in the other AFS’s but it is not defined where in the AFS The crucial aspect is that the exact locations of the two vertices in AFS2 and AFS3 are not known, they can be anywhere inside the AFS or on the border. In fact, the whole collection of solutions found while determining the border of the first AFS will be somewhere within the AFSs. As only the border of the AFSs are useful in representing the ranges feasible spectra, these borders have to be determined independently in computations analogous to the ones described for the first AFS. The only advantage is that we can start with any of the already obtained solutions, knowing it must be in the AFS. Any triangle, as represented in Figure 3 defines a completer matrix T and thus the matrix A of absorption spectra and after its inversion the matrix C of concentration profiles. A triangle on the border of the i-th AFS will define a limiting absorption spectrum for the i-th component. The matrix T, represented by the triangle, results in a set of concentration profiles, but generally not in limiting ones. The collection of T-matrices will give a good indication of the range of feasible concentration profiles but if the range of feasible solutions has to be computed the procedure has to be repeated for the concentration profiles. Probably the best way is to adapt (5) and write CA = UTT -1SV with C = UT (8) and A T -1SV The equivalent definition of the transformation matrix as given in equation (7) would be 8 1 1 1 T t21 t22 t23 t31 t32 t33 (9) The pair t21 and t31 replaces the pair t12 and t13 in the computations above, e.g. those represented in Figure 1. As mentioned before, the size of the original triangle defines the resolution of the border and the computation time. As the triangles do not change size in the process the resolution will be uniform along the border. In summary, the border of each AFS for either the spectra of the concentration profiles has to be determined independently from all the others. This has the advantage that only those required need to be computed. The concomitant disadvantage is that the all computations has to be performed for each component for a complete analysis. Numerical Experiments Figure 4: Concentration profiles, absorption spectra and data matrix D used for the computation of Figures 1, …[Azadeh, no markers for C and black for D] Figure 5: (a) AFSs for the absorption spectra for different noise levels. The smallest AFSs are for data without noise, increasing areas for noise levels of 1%, 2%, 3% and 4% of averaged magnitude of data. (b) Enlarged areas, indicating vertices of simplexes and approximate border of AFS as lines. 9 Figure 6: (a) Range of feasible absorption spectra and (b) range of feasible concentration profiles. Each absorption spectrum and concentration profile is normalised to a maximum of one. [Azadeh, are these calculated as described with all six profiles calculated individually? Normalise all to max of one. Which noise level?] I think we do not need many more figures, at least I don’t know which ones. Conclusion A completely general method for the computation of the feasible bands for the concentration profiles and absorption spectra for 3-component systems has been presented. The problem is significantly more complex and difficult than the 2-component system. This is reflected in the long time span between the two publications. The fact that the computation is time consuming is not relevant as the requirements are in the range of minutes. More difficult is the representation of the results of the computations. The clusters of spectra and concentration profiles as represented in Figure 6 are useful but are mainly of graphical value and are not easy to use otherwise. Additionally, the traces in that Figure only are those on the border of the AFS, all inside points are also feasible solutions, plotting them in a Figure will result in an unstructured band, thus loosing the information of the individual shapes of the profiles. This issue has already been identified for the 2-component system. Future development of 4-component system. Not much about it other than it is theoretically possible: AFS defined by three elements in row of T and simplex is tetrahedron, with fitting of 6 parameters. 10 References 1. Delaney, M., Anal. Chem. 1984, 56, 261R–277R. 2. Manne, R., Chemom. Intell. Lab. Syst. 1995, 27, 89-94. 3. Kvalheim, O. M.; Liang, Y. Z., Anal. Chem. 1992, 64, 936- 945. 4. 58. Tauler, R.; Smilde, A.; Kowalski, B. R., J. Chemom. 1995, 9, 31- 5. de Juan, A.; Vander Heyden, Y.; Tauler, R.; Massart, D. L., Anal. Chim. Acta 1997, 346, 307-318. 6. Tauler, R.; Barcelo, D.; Thurman, E. M., Environ. Sci. Technol. 2000, 34, 3307. 7. Navea, S.; de Juan, A.; Tauler, R., Anal. Chem. 2002, 74, 60316039. 8. Jaumot, J.; Gargallo, R.; Escaja, N.; Gonzalez, C.; Pedroso, E.; Tauler, R., Nucleic Acids Res. 2002, 30, (17), e-92,1-10. 9. Mason, C.; Maeder, M.; Whitson, A., Anal. Chem. 2001, 73, 1587– 1594. 10. Lawton, W. H.; Sylvestre, E. A., Technometrics 1971, 13, 617633. 11. Borgen, O. S.; Kowalski, B. R., Anal. Chim. Acta 1985, 174, 1-26. 12. Borgen, O.; Davidsen, N.; Mingyang, Z.; Øyen, Ø., Microchim. Acta 1986, 11, 63-73. 13. Rajko, R.; Istvan, K., J. Chemom. 2005, 19, 1-16. 14. Rajko, R., Anal. Chim. Acta 2009, 645, 18-24. 15. Vosough, M.; Mason, C.; Tauler, R.; Heravi, M.; Maeder, M., J. Chemom. 2006, 20, 302- 310. 16. Rajko, R., J. Chemom. 2009, 23, 172-178. 11