Use case from DFC

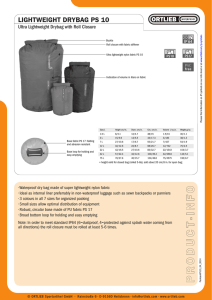

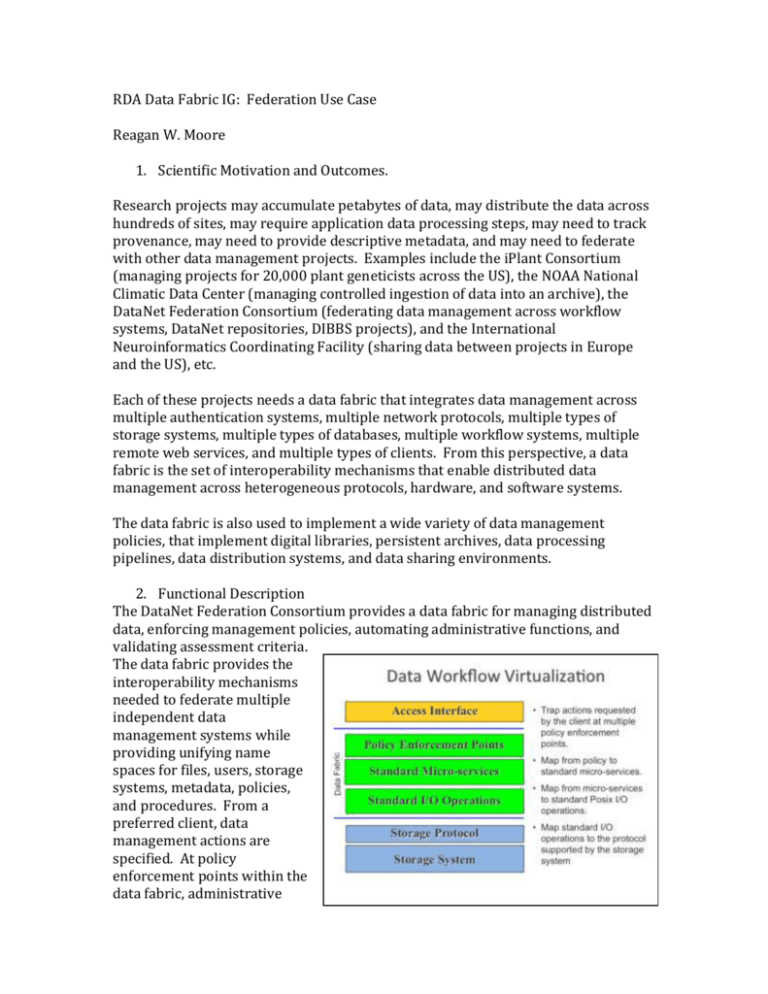

RDA Data Fabric IG: Federation Use Case

Reagan W. Moore

1. Scientific Motivation and Outcomes

Research projects may accumulate petabytes of data, distribute the data across hundreds of sites, require application-level data processing steps, track provenance, provide descriptive metadata, and federate with other data management projects. Examples include the iPlant Collaborative (managing projects for 20,000 plant geneticists across the US), the NOAA National Climatic Data Center (managing controlled ingestion of data into an archive), the DataNet Federation Consortium (federating data management across workflow systems, DataNet repositories, and DIBBs projects), and the International Neuroinformatics Coordinating Facility (sharing data between projects in Europe and the US). Each of these projects needs a data fabric that integrates data management across multiple authentication systems, network protocols, types of storage systems, types of databases, workflow systems, remote web services, and types of clients.

From this perspective, a data fabric is the set of interoperability mechanisms that enables distributed data management across heterogeneous protocols, hardware, and software systems. The data fabric is also used to implement a wide variety of data management policies, which in turn implement digital libraries, persistent archives, data processing pipelines, data distribution systems, and data sharing environments.

2. Functional Description

The DataNet Federation Consortium (DFC) provides a data fabric for managing distributed data, enforcing management policies, automating administrative functions, and validating assessment criteria. The data fabric provides the interoperability mechanisms needed to federate multiple independent data management systems while providing unifying name spaces for files, users, storage systems, metadata, policies, and procedures.
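A unifying name space for files can be pictured as a catalog that maps each logical path to the physical copies held by different storage systems, so clients never need to know where the bytes actually live. The Python sketch below is illustrative only: the classes `Replica`, `DataObject`, and `LogicalNamespace`, the resource names, and the simple permission check are assumptions for the example, not the DFC catalog schema.

```python
from dataclasses import dataclass, field


@dataclass
class Replica:
    """One physical copy of a data object (hypothetical model)."""
    resource: str       # logical name of a storage system, e.g. "tape-archive"
    physical_path: str  # location in that storage system's own namespace


@dataclass
class DataObject:
    logical_path: str
    owner: str
    replicas: list[Replica] = field(default_factory=list)
    acl: dict[str, str] = field(default_factory=dict)  # user -> "read" / "write" / "own"


class LogicalNamespace:
    """Maps logical paths to data objects, independent of where copies are stored."""

    def __init__(self) -> None:
        self._objects: dict[str, DataObject] = {}

    def register(self, logical_path: str, owner: str, replica: Replica) -> DataObject:
        # The first registration creates the object; later ones add replicas.
        obj = self._objects.get(logical_path)
        if obj is None:
            obj = DataObject(logical_path, owner, acl={owner: "own"})
            self._objects[logical_path] = obj
        obj.replicas.append(replica)
        return obj

    def locate(self, logical_path: str, user: str) -> list[Replica]:
        # Access control is checked against the logical object, not the storage system.
        obj = self._objects[logical_path]
        if user not in obj.acl:
            raise PermissionError(f"{user} has no access to {logical_path}")
        return list(obj.replicas)


ns = LogicalNamespace()
ns.register("/dfc/home/alice/run1.h5", "alice", Replica("disk-cache", "/vault1/run1.h5"))
ns.register("/dfc/home/alice/run1.h5", "alice", Replica("tape-archive", "/arch/run1.h5"))
print([r.resource for r in ns.locate("/dfc/home/alice/run1.h5", "alice")])
# ['disk-cache', 'tape-archive']
```

The same logical path resolves to both copies, which is what lets replication and migration happen without changing how users name their data.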
Users specify data management actions from their preferred client. At policy enforcement points within the data fabric, administrative policies are selected and applied to each action. The policies control the execution of procedures, which are composed by chaining basic functions (micro-services) into a workflow. State information generated by the workflow is saved as metadata in one of the unifying name spaces, and the actions of the workflow are mapped to the protocol used by the target storage system.

The components of the data fabric include storage servers, a metadata catalog server, a messaging server, and a queue for outstanding rules. The mechanisms used for federation include drivers that map from the DFC access protocol to the protocol of the external resource, micro-services that can encapsulate web service interactions, and messaging through an external message queue. These mechanisms enable tightly coupled, loosely coupled, and asynchronous federation of data management systems.

3. Describe essential Components and their Services

The data fabric is composed by integrating existing technologies. Components are selected from the following categories: storage systems, databases, authentication environments, networks, micro-services, policies, and message buses. Each component is pluggable, and new components can be added to the data fabric dynamically. The services provided on top of the data fabric are specific to each user community, but include web browsers, web services, digital libraries, file systems, data synchronization tools, Unix shell commands, message queues, and scripting languages. The data fabric can be viewed as the software that captures the knowledge needed to interact with data from multiple resources.
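The flow described in this section (a client action reaching a policy enforcement point, policies running as chains of micro-services, workflow state saved as metadata, and the storage operation delegated to a pluggable driver) can be sketched in Python as follows. Everything named here is hypothetical, including the enforcement point names `pre_put` and `post_put`, the `DataFabric` and `InMemoryDriver` classes, and the size-based placement policy; it is a minimal model under those assumptions, not the DFC implementation.

```python
import hashlib
from typing import Callable, Protocol

Context = dict                          # state shared by one workflow's micro-services
MicroService = Callable[[Context], None]


class StorageDriver(Protocol):
    """Maps the fabric's generic operations onto one storage system's protocol."""
    def put(self, physical_path: str, data: bytes) -> None: ...


class InMemoryDriver:
    """Stand-in driver; a real one would speak a specific storage protocol."""
    def __init__(self) -> None:
        self.blobs: dict[str, bytes] = {}

    def put(self, physical_path: str, data: bytes) -> None:
        self.blobs[physical_path] = data


class DataFabric:
    def __init__(self) -> None:
        self.drivers: dict[str, StorageDriver] = {}
        self.policies: dict[str, list[list[MicroService]]] = {}
        self.metadata: dict[str, dict[str, str]] = {}

    def add_driver(self, resource: str, driver: StorageDriver) -> None:
        # Pluggable components: a new storage resource can be added at run time.
        self.drivers[resource] = driver

    def add_policy(self, pep: str, chain: list[MicroService]) -> None:
        # A policy is a procedure composed by chaining micro-services.
        self.policies.setdefault(pep, []).append(chain)

    def _enforce(self, pep: str, ctx: Context) -> None:
        # Run every policy registered at this enforcement point, in order.
        for chain in self.policies.get(pep, []):
            for micro_service in chain:
                micro_service(ctx)

    def put(self, logical_path: str, data: bytes, resource: str) -> None:
        ctx: Context = {"data": data, "resource": resource, "state": {}}
        self._enforce("pre_put", ctx)
        # For brevity the logical path doubles as the physical path.
        self.drivers[ctx["resource"]].put(logical_path, ctx["data"])
        self._enforce("post_put", ctx)
        # State generated by the workflow is saved as metadata on the file name space.
        self.metadata.setdefault(logical_path, {}).update(ctx["state"])


def route_large_files_to_archive(ctx: Context) -> None:
    if len(ctx["data"]) > 1024:         # hypothetical size threshold in bytes
        ctx["resource"] = "archive"


def record_checksum(ctx: Context) -> None:
    ctx["state"]["sha256"] = hashlib.sha256(ctx["data"]).hexdigest()


fabric = DataFabric()
fabric.add_driver("cache", InMemoryDriver())
fabric.add_driver("archive", InMemoryDriver())
fabric.add_policy("pre_put", [route_large_files_to_archive])
fabric.add_policy("post_put", [record_checksum])
fabric.put("/dfc/small.txt", b"hello", "cache")    # stays on the cache
fabric.put("/dfc/big.bin", b"x" * 2048, "cache")   # policy reroutes it to the archive
```

Keeping policies outside the storage call is the point of the design: the same client request can be routed, checksummed, or replicated differently simply by registering different micro-service chains.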
Through the creation of workflows, processing can be applied to the data to transform data formats, extract metadata, generate derived data products, and enforce management policies. Specific user tools, such as the HDF5 viewer, can be used to visualize data sets accessed through the data fabric, and workflow systems such as Kepler can be applied to data retrieved through the data fabric. Relevant data sets can be discovered through queries on descriptive and provenance metadata. The name space used to describe data objects supports unique identifiers, access controls, arrangement in collections, descriptive and provenance metadata, and access restrictions (time period, number of accesses). Mechanisms are provided to register data in other name spaces, such as the CNRI Handle System and the DataONE registry.

4. Describe optional/discipline specific components and their services

The plug-in framework enables the addition of clients, policies, and micro-services that are specific to a discipline. Examples include:

- Hydrology: workflows that capture the knowledge needed to acquire data sets from NOAA repositories, transform the data to a desired coordinate system, and conduct a watershed analysis
- Micro-services for interacting with the DataONE registry, the SEAD repository, and the TerraPop repository
- Micro-services that support data access within Kepler workflows
- Micro-services that interact with the NCSA Cyberintegrator to capture output from workflows run on XSEDE resources
- Micro-services that interact with NCSA Polyglot to transform data formats
- Micro-services that support external indexing of textual material
- Workflow structured objects that capture the provenance (input parameters, output files) of workflows
- Micro-services that use pattern recognition to extract descriptive metadata
- Synchronization tools that track the loading of files onto the data fabric
- Micro-services that summarize audit trails
- Procedures to verify data replication and data integrity
- Procedures to aggregate data from a cache and manage the migration of data from the cache to an archive
- Procedures to manage data staging
- Clients that support user-level file systems
- Clients that support dynamic rule modification and execution
- Clients that implement web services
- Clients that support application interaction with remote data (C I/O library, Fortran I/O library)

5. Describe essentials of the underlying data organization

The researcher chooses the type of data organization. Projects have chosen to:

- Put 5 million files in a single logical directory
- Store files in a logical collection hierarchy of arbitrary depth
- Use tags (valueless attributes) to label digital objects, and find objects by searching on the tags
- Use standard metadata (such as Dublin Core) to label digital objects, and search on metadata values
- Organize data objects by type or format
- Distribute data objects across storage systems based on data type or size
- Deposit data into a data cache and then migrate the data to a tape archive
- Automate the conversion of data objects to a standard data format, while saving the original

To enable these multiple approaches, the data fabric uses schema indirection: the name of each metadata attribute is stored along with the attribute's value.
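Schema indirection can be illustrated with a single metadata table whose rows hold an object path, an attribute name, and an attribute value, so that adding a new attribute requires no schema change. This Python sketch uses the standard `sqlite3` module; the table layout, paths, and attribute names are hypothetical, and an empty value stands in for a tag (valueless attribute).

```python
import sqlite3

# Every metadata item is a row that stores the attribute *name* next to its
# value, instead of one table column per attribute (hypothetical layout).
db = sqlite3.connect(":memory:")
db.execute("CREATE TABLE objects (path TEXT PRIMARY KEY, collection TEXT)")
db.execute("CREATE TABLE metadata (path TEXT, attr_name TEXT, attr_value TEXT)")

db.executemany(
    "INSERT INTO objects VALUES (?, ?)",
    [("/dfc/survey/a.csv", "/dfc/survey"),
     ("/dfc/survey/b.csv", "/dfc/survey"),
     ("/dfc/images/c.tif", "/dfc/images")],
)


def add_meta(path, name, value=""):
    """Attach one attribute; an empty value acts as a tag (valueless attribute)."""
    db.execute("INSERT INTO metadata VALUES (?, ?, ?)", (path, name, value))


def add_meta_to_collection(collection, name, value):
    """Assign the same attribute value to every object in a logical collection."""
    db.execute(
        "INSERT INTO metadata SELECT path, ?, ? FROM objects WHERE collection = ?",
        (name, value, collection),
    )


def find(name, value=""):
    """Return the paths of objects carrying the given attribute name and value."""
    rows = db.execute(
        "SELECT path FROM metadata WHERE attr_name = ? AND attr_value = ? ORDER BY path",
        (name, value),
    )
    return [path for (path,) in rows]


add_meta("/dfc/images/c.tif", "instrument", "camera-7")           # one object
add_meta_to_collection("/dfc/survey", "dc:creator", "Survey Team")  # whole collection
add_meta("/dfc/survey/b.csv", "reviewed")                           # a tag

print(find("dc:creator", "Survey Team"))  # ['/dfc/survey/a.csv', '/dfc/survey/b.csv']
print(find("reviewed"))                   # ['/dfc/survey/b.csv']
```

Because attribute names are stored as data, one project can label objects with tags while another applies Dublin Core elements, both against the same catalog.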
Schema indirection makes it possible to assign unique descriptive metadata to a single digital object, or to assign values for a metadata attribute to all digital objects within a logical collection. The data fabric uses 318 attributes to record state information about users, files, storage systems, metadata, policies, and micro-services. This set has proven sufficient to handle the requirements of digital libraries, data sharing environments, archives, and data processing systems.

6. Indicate the type of APIs being used

The APIs are differentiated into development libraries (used to port new clients), a DFC surface that supports standard access protocols, and clients required by user communities. The APIs provide access through:

- File system interfaces: WebDAV, FUSE
- Digital library interfaces: Fedora, DSpace, VIVO, Dataverse
- RESTful interfaces
- Web browsers: iDrop-web, MediaWiki
- Message buses: AMQP, STOMP
- Grid tools: GridFTP
- Open Data Access Protocol: THREDDS
- Unix shell commands: iCommands
- Workflow systems: Kepler, NCSA Cyberintegrator
- Text search: Elasticsearch
- Java: ModeShape

7. Achieved results

The DFC data fabric has been applied to data management applications ranging from digital libraries to data grids, archives, and processing pipelines. Each application implements a different set of policies, uses a different set of technology components, and applies a different set of clients.
Disciplines that build upon the technology include:

| Discipline | Projects |
|---|---|
| Atmospheric Science | NASA Langley Atmospheric Sciences Center |
| Biology | Phylogenetics at CC IN2P3 |
| Climate | NOAA National Climatic Data Center |
| Cognitive Science | Temporal Dynamics of Learning Center |
| Computer Science | GENI experimental network |
| Cosmic Ray | AMS experiment on the International Space Station |
| Earth Science | NASA Center for Climate Simulations |
| Ecology | CEED Caveat Emptor Ecological Data |
| Engineering | CIBER-U |
| High Energy Physics | BaBar / Stanford Linear Accelerator |
| Hydrology | Institute for the Environment, UNC-CH; HydroShare |
| Genomics | Broad Institute, Wellcome Trust Sanger Institute, NGS |
| Neuroscience | International Neuroinformatics Coordinating Facility |
| Neutrino Physics | T2K and dChooz neutrino experiments |
| Optical Astronomy | National Optical Astronomy Observatory |
| Plant Genetics | the iPlant Collaborative |
| Radio Astronomy | Cyber Square Kilometer Array, TREND, BAOradio |
| Social Science | Odum, TerraPop |