Review1-2014


Dr. Eick

COSC 6342 Solutions Homework1 Spring 2013

Due date: Problems 1-3, 5-6 are due Thursday, Feb. 7 11p, and all other problems are due Friday,

Feb. 15, 11p.

Last updated: Feb. 13, 4:30p (change in problem 9, following the discussions in the lecture on

Feb. 13!)

1. Compare decision trees with kNN; what do they have in common; what are the main

differences between the two approaches?

Answer:

Commonality: Decision trees and kNN are both supervised learning methods that assign a class to an object based on its features.

Main Differences: Decision trees define a hierarchy of rules in the form of a tree, and these rules are learned from training data. The trees give priority to more informative features. A decision tree can become very complex as it grows, and it then requires pruning to improve its performance.

a) Compare decision trees with kNN to solve classification problems. What are the main

differences between these two approaches? [5]

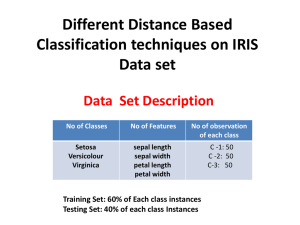

| kNN | Decision Tree |
|---|---|
| Lazy learner | Learns a model (tree) |
| Local model | Global model |
| Distance based | Based on attribute order |
| Voronoi convex polygon decision boundaries | Rectangular decision boundaries |
| | Hierarchical learning strategy |

2. Show that the VC dimension of the triangle hypothesis class is 7 in 2 dimensions (Hint: For

best separation place the points equidistant on a circle). Generalizing your answer to the

previous question, what is the VC dimension of a (non-intersecting) polygon of p points?

Do some reading on the internet if you are having difficulties approaching this problem!

Answer:

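Intuitively, place 2p+1 points equidistant on a circle. Any labeling of these points can be separated by a p-sided polygon: if at most p points are positive, the polygon can use the positive points as its vertices; otherwise at most p points are negative, and each side of the polygon can cut one negative point off from the circle. With 2p+2 points on the circle and alternating labels (no two points of the same class next to each other), p+1 sides would be needed, so a p-sided polygon cannot separate them. Therefore, the VC dimension of a (non-intersecting) polygon of p points is 2p+1 (VC-dimension = 2p+1); for the triangle (p=3) this gives 7.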

3. One major challenge when learning prediction and classification models is to avoid

overfitting. What is overfitting? What factors contribute to overfitting? What is the

generalization error? What is the challenge in determining the generalization error? Briefly

describe one approach to determine the generalization error. 8-12 sentences!

Answer:

Overfitting: The model H is more complex than the underlying function f; when the model is too complex, the test errors are large although the training errors are small.

Factors that contribute to overfitting are:

1. Noise in the training examples that is not part of the general pattern in the data.

2. Selecting a hypothesis that is more complex than necessary.

3. Lack of training examples.

...

Generalization error is the error of the model on new data examples.

The main challenge in determining the generalization error is that we don't actually have new data examples. The cross-validation approach is often used to estimate the generalization error. It splits the training data into a number of subsets; one subset is used as the testing set and the rest of the data are used as the training set. The experiment is repeated until every subset has been used as the testing set. The generalization error is estimated by the average of the testing errors, and usually its standard deviation is also reported.
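The cross-validation procedure described above can be sketched as follows. This is only an illustration under stated assumptions: the model (a least-squares line), the synthetic data, and the helper names `fit_line` and `k_fold_cv` are hypothetical and not part of the homework.

```python
import random
import statistics


def fit_line(xs, ys):
    """Least-squares fit of y = w1*x + w0 (illustrative model choice)."""
    x_mean, y_mean = statistics.fmean(xs), statistics.fmean(ys)
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
    sxx = sum((x - x_mean) ** 2 for x in xs)
    w1 = sxy / sxx
    return w1, y_mean - w1 * x_mean


def k_fold_cv(xs, ys, k=5):
    """Return (mean, standard deviation) of the per-fold test MSE."""
    n = len(xs)
    fold_errors = []
    for fold in range(k):
        # Every k-th example forms the test subset; the rest is training data.
        test_idx = set(range(fold, n, k))
        train = [(xs[i], ys[i]) for i in range(n) if i not in test_idx]
        test = [(xs[i], ys[i]) for i in sorted(test_idx)]
        w1, w0 = fit_line([x for x, _ in train], [y for _, y in train])
        fold_errors.append(
            statistics.fmean((w1 * x + w0 - y) ** 2 for x, y in test)
        )
    return statistics.fmean(fold_errors), statistics.stdev(fold_errors)


# Synthetic data (assumption): a noisy line y = 2x + 1.
random.seed(0)
xs = [random.uniform(0, 10) for _ in range(50)]
ys = [2.0 * x + 1.0 + random.gauss(0, 1) for x in xs]
mean_err, std_err = k_fold_cv(xs, ys, k=5)
print(f"estimated generalization error: {mean_err:.3f} +/- {std_err:.3f}")
```

Each example is used for testing exactly once, so the averaged test error never comes from data the model was trained on.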

4. Derive equation 2.17; the values for w0 and w1 which minimize the squared prediction error!

Answer:

In last year’s solution.

5. A lot of decision making systems use Bayes’ theorem relying on conditional independence

assumptions—what are those assumptions exactly? Why are they made? What is the problem

with making those assumptions? 3-6 sentences!

Answer:

Missing: List Assumptions!

It assumes that the presence of a particular feature (symptom) is unrelated to the presence of any other feature, and that the presence of a feature (symptom) given a class (disease) is unrelated to the presence of other features given that class. The assumption is made to simplify decision making by simplifying computations and by dramatically reducing knowledge acquisition cost. The problem with making those assumptions is that features are often correlated, and making this assumption in the presence of correlation leads to errors in probability computations, and ultimately to making the wrong decision.

6. Assume we have a problem in which you have to choose between 3 decisions D1, D2, D3. The loss function is: λ11=0, λ22=0, λ33=0, λ12=1, λ13=1, λ21=1, λ31=10, λ23=1, λ32=8; write the optimal decision rule! (λik is the cost of choosing Ci when the correct answer is Ck.) If you visualize the decision rule by Feb. 20, you get 50% extra credit; send your visualization and a brief description of how you obtained it to Dr. Eick.

Answer:

Visualization of the Decision Rule by Audrey Cheong


$$P(C_1|x) + P(C_2|x) + P(C_3|x) = 1$$

$$R(\alpha_i|x) = \sum_{k \neq i} \lambda_{ik} P(C_k|x)$$

$$R(\alpha_1|x) = \lambda_{12} P(C_2|x) + \lambda_{13} P(C_3|x) = P(C_2|x) + P(C_3|x) = 1 - P(C_1|x)$$

$$R(\alpha_2|x) = \lambda_{21} P(C_1|x) + \lambda_{23} P(C_3|x) = P(C_1|x) + P(C_3|x) = 1 - P(C_2|x)$$

$$R(\alpha_3|x) = \lambda_{31} P(C_1|x) + \lambda_{32} P(C_2|x) = 10 P(C_1|x) + 8 P(C_2|x)$$

The optimal decision rule is:

Choose 𝐷𝑖 𝑖𝑓 𝑅(𝛼𝑖 |𝑥) < 𝑅(𝛼𝑘 |𝑥) for all 𝑘 ≠ 𝑖

Choose D1 if

𝑅(𝛼1 |𝑥) < 𝑅(𝛼2 |𝑥)

1 − 𝑃(𝐶1 |𝑥) < 1 − 𝑃(𝐶2 |𝑥)

𝑃(𝐶1 |𝑥) > 𝑃(𝐶2 |𝑥)

and

𝑅(𝛼1 |𝑥) < 𝑅(𝛼3 |𝑥)

1 − 𝑃(𝐶1 |𝑥) < 10𝑃(𝐶1|𝑥) + 8𝑃(𝐶2 |𝑥)

11𝑃(𝐶1|𝑥) + 8𝑃(𝐶2 |𝑥) > 1

Choose D2 if

𝑅(𝛼2 |𝑥) < 𝑅(𝛼1 |𝑥)

1 − 𝑃(𝐶2 |𝑥) < 1 − 𝑃(𝐶1 |𝑥)

𝑃(𝐶2 |𝑥) > 𝑃(𝐶1 |𝑥)

and

𝑅(𝛼2 |𝑥) < 𝑅(𝛼3 |𝑥)

1 − 𝑃(𝐶2 |𝑥) < 10𝑃(𝐶1 |𝑥) + 8𝑃(𝐶2|𝑥)

10𝑃(𝐶1|𝑥) + 9𝑃(𝐶2 |𝑥) > 1

Choose D3 if

𝑅(𝛼3 |𝑥) < 𝑅(𝛼1 |𝑥)

10𝑃(𝐶1|𝑥) + 8𝑃(𝐶2 |𝑥) < 1 − 𝑃(𝐶1 |𝑥)

11𝑃(𝐶1|𝑥) + 8𝑃(𝐶2 |𝑥) < 1

and

𝑅(𝛼3 |𝑥) < 𝑅(𝛼2 |𝑥)

10𝑃(𝐶1|𝑥) + 8𝑃(𝐶2 |𝑥) < 1 − 𝑃(𝐶2 |𝑥)

10𝑃(𝐶1|𝑥) + 9𝑃(𝐶2 |𝑥) < 1

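[Figure 1: plot of the decision regions D1, D2, and D3, with P(C1) on the horizontal axis and P(C2) on the vertical axis, each running from 0 to 1; the values 1/8 and 1/9 are marked.]

Figure 1. The optimal decision rule based on the probability of C1 and C2. Choose D1 if in the blue region, D2 if in the red region, or D3 if in the yellow region. (Not to scale for visibility purposes.)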

This visualization was obtained by locating the decision boundaries implied by the conditions listed above. D1 is bounded by the lines 𝑃(𝐶1|𝑥) = 𝑃(𝐶2|𝑥), 11𝑃(𝐶1|𝑥) + 8𝑃(𝐶2|𝑥) = 1, and 1 − 𝑃(𝐶1|𝑥) = 𝑃(𝐶2|𝑥). D2 is bounded by the lines 𝑃(𝐶1|𝑥) = 𝑃(𝐶2|𝑥), 10𝑃(𝐶1|𝑥) + 9𝑃(𝐶2|𝑥) = 1, and 1 − 𝑃(𝐶1|𝑥) = 𝑃(𝐶2|𝑥). D3 is bounded by the lines 11𝑃(𝐶1|𝑥) + 8𝑃(𝐶2|𝑥) = 1, 10𝑃(𝐶1|𝑥) + 9𝑃(𝐶2|𝑥) = 1, 𝑃(𝐶1|𝑥) = 0, and 𝑃(𝐶2|𝑥) = 0.
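The decision rule derived for problem 6 amounts to picking the decision with the smallest expected risk. A minimal sketch, assuming the posteriors P(C1|x), P(C2|x), P(C3|x) are already available; the function names and the sample posteriors are illustrative only:

```python
# Loss matrix from the problem: LOSS[i][k] is the cost of choosing D(i+1)
# when the correct class is C(k+1).
LOSS = [
    [0, 1, 1],
    [1, 0, 1],
    [10, 8, 0],
]


def expected_risks(posteriors):
    """R(alpha_i | x) = sum_k lambda_ik * P(C_k | x) for each decision i."""
    return [sum(l * p for l, p in zip(row, posteriors)) for row in LOSS]


def optimal_decision(posteriors):
    """Return the decision with minimum expected risk (ties go to the lower index)."""
    risks = expected_risks(posteriors)
    best = min(range(len(risks)), key=lambda i: risks[i])
    return f"D{best + 1}"


# Hypothetical posteriors, one inside each region of the decision rule.
for post in [(0.6, 0.3, 0.1), (0.2, 0.5, 0.3), (0.05, 0.05, 0.9)]:
    print(post, optimal_decision(post))
```

Because choosing D3 wrongly is so expensive (losses 10 and 8), D3 is only selected when P(C1|x) and P(C2|x) are both very small, which matches the small D3 region near the origin.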

7. What does bias measure; what does variance measure? Assume we have a model with a high

bias and a low variance—what does this mean? 3-4 sentences!

Answer:

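Bias: measures the difference between the estimator’s expected value and the true parameter value.

Variance: measures how much the estimator fluctuates around its expected value.

A high bias and low variance model is a simple model that underfits the dataset: its estimates are stable across samples but systematically off. Conversely, a low bias and high variance model is a complex model that overfits the dataset.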

8. Maximum likelihood, MAP, and the Bayesian approach all measure parameters of models.

What are the main differences between the 3 approaches? 3-6 sentences!

Answer:

Maximum likelihood estimates the parameter by choosing the value under which the observed data are most likely; it does not use a prior. MAP and the Bayesian approach both take into account the prior density of the parameter. MAP replaces the whole posterior density with a single point (its mode) to avoid evaluating the integral, whereas the Bayesian approach uses an approximation method to evaluate the full integral.
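A small worked example may make the difference concrete. The sketch below assumes a coin-flip (Bernoulli) model with a Beta(a, b) prior, a case where the integral has a closed form; the model, the prior, and the function names are assumptions chosen for illustration, not part of the homework.

```python
def ml_estimate(heads, n):
    """Maximum likelihood: the value that makes the observed data most likely."""
    return heads / n


def map_estimate(heads, n, a, b):
    """MAP: mode of the Beta(heads + a, n - heads + b) posterior.

    Valid when both posterior parameters are greater than 1.
    """
    return (heads + a - 1) / (n + a + b - 2)


def bayes_estimate(heads, n, a, b):
    """Bayesian: posterior mean, i.e. the integral of theta * p(theta | data)."""
    return (heads + a) / (n + a + b)


# 3 heads in 4 tosses with a Beta(2, 2) prior (hypothetical numbers).
print(ml_estimate(3, 4))           # 0.75
print(map_estimate(3, 4, 2, 2))    # 4/6
print(bayes_estimate(3, 4, 2, 2))  # 0.625
```

With little data the prior pulls the MAP and Bayesian estimates toward 0.5, while the maximum likelihood estimate ignores it.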

9. Assume we have a single attribute classification problem involving two classes C1 and C2 with the following priors: P(C1)=0.6 and P(C2)=0.4. Give the decision rule (see footnote 1) assuming: p(x|C1)~N(0,4); p(x|C2)~N(1,1) Decision rule!

Answer:

Normal Distribution

$$p(x|C_i) = \frac{1}{\sqrt{2\pi}\,\sigma_i} \exp\left[-\frac{(x-\mu_i)^2}{2\sigma_i^2}\right]$$

$$p(x|C_1) = \frac{1}{\sqrt{2\pi}\cdot 2} \exp\left[-\frac{x^2}{2 \cdot 4}\right]$$

and

$$p(x|C_2) = \frac{1}{\sqrt{2\pi}} \exp\left[-\frac{(x-1)^2}{2}\right]$$

Using Bayes' theorem, assume P(C1) = 0.6 and P(C2) = 0.4:

Equate the posterior probabilities to find their intersections:

$$p(x|C_1)P(C_1) = p(x|C_2)P(C_2)$$

$$\frac{1}{\sqrt{2\pi}\cdot 2} \exp\left[-\frac{x^2}{2 \cdot 4}\right] \cdot P(C_1) = \frac{1}{\sqrt{2\pi}} \exp\left[-\frac{(x-1)^2}{2}\right] \cdot P(C_2)$$

Taking the log of both sides gives,

$$-\frac{1}{2}\log 2\pi - \log 2 - \frac{x^2}{8} + \log P(C_1) = -\frac{1}{2}\log 2\pi - \frac{(x-1)^2}{2} + \log P(C_2)$$

$$-\log 2 - \frac{x^2}{8} + \log P(C_1) = -\frac{(x-1)^2}{2} + \log P(C_2)$$

$$-\log 2 - \frac{x^2}{8} + \log P(C_1) = -\frac{x^2}{2} + x - \frac{1}{2} + \log P(C_2)$$

$$-8\log 2 - x^2 + 8\log P(C_1) = -4x^2 + 8x - 4 + 8\log P(C_2)$$

$$3x^2 - 8x + 4 - 8\log 2 + 8\log P(C_1) - 8\log P(C_2) = 0$$

$$3x^2 - 8x + 1.6985 = 0$$

Using the quadratic formula,

$$point_1 = \frac{8 - \sqrt{64 - 4 \cdot 3 \cdot 1.6985}}{6} = 0.2326$$

$$point_2 = \frac{8 + \sqrt{64 - 4 \cdot 3 \cdot 1.6985}}{6} = 2.4341$$

Decision rule:

If x > 2.4341

then choose C1

else if x < 0.2326

then choose C1

else

choose C2.

Footnote 1: Write the rule in the form: If x>…then…else if …else…!
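The two boundary points of problem 9 can be checked numerically. The sketch below recomputes the roots of the quadratic from the derivation and classifies a few sample values by comparing p(x|Ci)P(Ci) directly; the function names and the sample values of x are illustrative assumptions.

```python
import math

P1, P2 = 0.6, 0.4        # priors from the problem statement
MU1, SIGMA1 = 0.0, 2.0   # p(x|C1) ~ N(0, 4), so sigma = 2
MU2, SIGMA2 = 1.0, 1.0   # p(x|C2) ~ N(1, 1)


def gaussian(x, mu, sigma):
    """Univariate normal density."""
    return math.exp(-((x - mu) ** 2) / (2 * sigma ** 2)) / (
        math.sqrt(2 * math.pi) * sigma
    )


def classify(x):
    """Choose the class with the larger p(x|Ci) * P(Ci)."""
    g1 = gaussian(x, MU1, SIGMA1) * P1
    g2 = gaussian(x, MU2, SIGMA2) * P2
    return "C1" if g1 > g2 else "C2"


# Roots of 3x^2 - 8x + c = 0 with c = 4 - 8 log 2 + 8 log(P1 / P2).
c = 4 - 8 * math.log(2) + 8 * math.log(P1 / P2)
disc = math.sqrt(64 - 4 * 3 * c)
low, high = (8 - disc) / 6, (8 + disc) / 6
print(round(low, 4), round(high, 4))

for x in (0.0, 1.0, 3.0):
    print(x, classify(x))
```

C1 wins on both tails because its variance is larger, so its density decays more slowly away from the means.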

10. Assume we have a dataset with 3 attributes and the following covariance matrix:

9 0 0

0 4 -1

0 -1 1

a) What are the correlations between the three attributes?

b) Assume we construct a 3-dimensional normal distribution for this dataset by using equation 5.7, assuming that the mean is μ=(0,0,0). Compute the probability of the three vectors: (1,1,0), (1,0,1) and (0,1,1)!

c) Compute the Mahalanobis distance between the vectors (1,1,0), (1,0,1) and (0,1,1). Also compute the Mahalanobis distance between (1,1,-1) and the three vectors (1,0,0), (0,1,0), (0,0,-1). How do these results differ from using Euclidean distance? Try to explain why particular pairs of vectors are closer/further away from each other when using Mahalanobis distance. What advantages do you see in using Mahalanobis distance over Euclidean distance?

Answer:

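a) Given a dataset with three attributes X, Y, and Z, the correlations are

$$\rho_{XY} = \frac{\sigma_{XY}}{\sigma_X \sigma_Y} = \frac{0}{3 \times 2} = 0$$

$$\rho_{YZ} = \frac{\sigma_{YZ}}{\sigma_Y \sigma_Z} = \frac{-1}{2 \times 1} = -0.5$$

$$\rho_{XZ} = \frac{\sigma_{XZ}}{\sigma_X \sigma_Z} = \frac{0}{3 \times 1} = 0$$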
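The correlations can be read off the covariance matrix mechanically. A minimal sketch (the helper name `correlation` is illustrative):

```python
import math

# Covariance matrix from the problem statement (attributes X, Y, Z).
SIGMA = [
    [9, 0, 0],
    [0, 4, -1],
    [0, -1, 1],
]


def correlation(cov, i, j):
    """rho_ij = cov_ij / (sigma_i * sigma_j), with sigma_i = sqrt(cov_ii)."""
    return cov[i][j] / (math.sqrt(cov[i][i]) * math.sqrt(cov[j][j]))


print("rho_XY =", correlation(SIGMA, 0, 1))
print("rho_YZ =", correlation(SIGMA, 1, 2))
print("rho_XZ =", correlation(SIGMA, 0, 2))
```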

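b) Assuming that the mean is μ = (0,0,0), the probabilities of the three vectors (1,1,0), (1,0,1), and (0,1,1) are

$$p((1,1,0)) = \frac{1}{(2\pi)^{3/2} |\Sigma|^{1/2}} \exp\left[-\frac{1}{2} \left(\begin{pmatrix}1\\1\\0\end{pmatrix} - \begin{pmatrix}0\\0\\0\end{pmatrix}\right)^T \Sigma^{-1} \left(\begin{pmatrix}1\\1\\0\end{pmatrix} - \begin{pmatrix}0\\0\\0\end{pmatrix}\right)\right] = 0.0098$$

$$p((1,0,1)) = \frac{1}{(2\pi)^{3/2} |\Sigma|^{1/2}} \exp\left[-\frac{1}{2} \left(\begin{pmatrix}1\\0\\1\end{pmatrix} - \begin{pmatrix}0\\0\\0\end{pmatrix}\right)^T \Sigma^{-1} \left(\begin{pmatrix}1\\0\\1\end{pmatrix} - \begin{pmatrix}0\\0\\0\end{pmatrix}\right)\right] = 0.0059$$

$$p((0,1,1)) = \frac{1}{(2\pi)^{3/2} |\Sigma|^{1/2}} \exp\left[-\frac{1}{2} \left(\begin{pmatrix}0\\1\\1\end{pmatrix} - \begin{pmatrix}0\\0\\0\end{pmatrix}\right)^T \Sigma^{-1} \left(\begin{pmatrix}0\\1\\1\end{pmatrix} - \begin{pmatrix}0\\0\\0\end{pmatrix}\right)\right] = 0.0038$$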
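The three values in part b) can be reproduced with a short script. This is a sketch using only the standard library; the helpers `det3`, `inv3`, `quad_form`, and `density` are hypothetical names written out here for a 3x3 matrix rather than taken from any library.

```python
import math

# Covariance matrix from the problem statement.
SIGMA = [[9, 0, 0], [0, 4, -1], [0, -1, 1]]


def det3(m):
    """Determinant of a 3x3 matrix."""
    (a, b, c), (d, e, f), (g, h, i) = m
    return a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)


def inv3(m):
    """Inverse of a 3x3 matrix via the adjugate."""
    (a, b, c), (d, e, f), (g, h, i) = m
    det = det3(m)
    adj = [
        [e * i - f * h, c * h - b * i, b * f - c * e],
        [f * g - d * i, a * i - c * g, c * d - a * f],
        [d * h - e * g, b * g - a * h, a * e - b * d],
    ]
    return [[v / det for v in row] for row in adj]


def quad_form(v, m):
    """Return v^T m v for a 3-vector v and a 3x3 matrix m."""
    return sum(v[i] * m[i][j] * v[j] for i in range(3) for j in range(3))


def density(x, cov, mean=(0, 0, 0)):
    """Multivariate normal density in 3 dimensions."""
    diff = [xi - mi for xi, mi in zip(x, mean)]
    norm = (2 * math.pi) ** 1.5 * math.sqrt(det3(cov))
    return math.exp(-0.5 * quad_form(diff, inv3(cov))) / norm


for v in [(1, 1, 0), (1, 0, 1), (0, 1, 1)]:
    print(v, round(density(v, SIGMA), 4))
```

Note that these are density values rather than probabilities in the strict sense.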

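c) The Mahalanobis distances between the vectors $\vec{x} = (1,1,0)$, $\vec{y} = (1,0,1)$, and $\vec{z} = (0,1,1)$ are

$$d(x, y) = \sqrt{(x - y)^T \Sigma^{-1} (x - y)}$$

$$\vec{x} - \vec{y} = \begin{pmatrix}1-1\\1-0\\0-1\end{pmatrix} = \begin{pmatrix}0\\1\\-1\end{pmatrix}$$

$$d(x, y) = \sqrt{\begin{bmatrix}0 & 1 & -1\end{bmatrix} \begin{bmatrix}1/9 & 0 & 0\\0 & 1/3 & 1/3\\0 & 1/3 & 4/3\end{bmatrix} \begin{bmatrix}0\\1\\-1\end{bmatrix}} = 1$$

$$d(y, z) = \sqrt{(y - z)^T \Sigma^{-1} (y - z)}$$

$$\vec{y} - \vec{z} = \begin{pmatrix}1-0\\0-1\\1-1\end{pmatrix} = \begin{pmatrix}1\\-1\\0\end{pmatrix}$$

$$d(y, z) = \sqrt{\begin{bmatrix}1 & -1 & 0\end{bmatrix} \begin{bmatrix}1/9 & 0 & 0\\0 & 1/3 & 1/3\\0 & 1/3 & 4/3\end{bmatrix} \begin{bmatrix}1\\-1\\0\end{bmatrix}} = \sqrt{\frac{4}{9}} = 2/3 \approx 0.667$$

$$d(x, z) = \sqrt{(x - z)^T \Sigma^{-1} (x - z)}$$

$$\vec{x} - \vec{z} = \begin{pmatrix}1-0\\1-1\\0-1\end{pmatrix} = \begin{pmatrix}1\\0\\-1\end{pmatrix}$$

$$d(x, z) = \sqrt{\begin{bmatrix}1 & 0 & -1\end{bmatrix} \begin{bmatrix}1/9 & 0 & 0\\0 & 1/3 & 1/3\\0 & 1/3 & 4/3\end{bmatrix} \begin{bmatrix}1\\0\\-1\end{bmatrix}} = \frac{\sqrt{13}}{3} \approx 1.202$$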

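The Mahalanobis distances between $\vec{w} = (1,1,-1)$ and the three vectors $\vec{p} = (1,0,0)$, $\vec{q} = (0,1,0)$, and $\vec{r} = (0,0,-1)$ are

$$d(w, p) = \sqrt{(w - p)^T \Sigma^{-1} (w - p)}$$

$$\vec{w} - \vec{p} = \begin{pmatrix}1-1\\1-0\\-1-0\end{pmatrix} = \begin{pmatrix}0\\1\\-1\end{pmatrix}$$

$$d(w, p) = \sqrt{\begin{bmatrix}0 & 1 & -1\end{bmatrix} \begin{bmatrix}1/9 & 0 & 0\\0 & 1/3 & 1/3\\0 & 1/3 & 4/3\end{bmatrix} \begin{bmatrix}0\\1\\-1\end{bmatrix}} = 1$$

$$d(w, q) = \sqrt{(w - q)^T \Sigma^{-1} (w - q)}$$

$$\vec{w} - \vec{q} = \begin{pmatrix}1-0\\1-1\\-1-0\end{pmatrix} = \begin{pmatrix}1\\0\\-1\end{pmatrix}$$

$$d(w, q) = \sqrt{\begin{bmatrix}1 & 0 & -1\end{bmatrix} \begin{bmatrix}1/9 & 0 & 0\\0 & 1/3 & 1/3\\0 & 1/3 & 4/3\end{bmatrix} \begin{bmatrix}1\\0\\-1\end{bmatrix}} = \frac{\sqrt{13}}{3} \approx 1.202$$

$$d(w, r) = \sqrt{(w - r)^T \Sigma^{-1} (w - r)}$$

$$\vec{w} - \vec{r} = \begin{pmatrix}1-0\\1-0\\-1+1\end{pmatrix} = \begin{pmatrix}1\\1\\0\end{pmatrix}$$

$$d(w, r) = \sqrt{\begin{bmatrix}1 & 1 & 0\end{bmatrix} \begin{bmatrix}1/9 & 0 & 0\\0 & 1/3 & 1/3\\0 & 1/3 & 4/3\end{bmatrix} \begin{bmatrix}1\\1\\0\end{bmatrix}} = \sqrt{\frac{4}{9}} = 2/3 \approx 0.667$$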

For Euclidean distance, the distances from the three points to the point (1,1,-1) are all √2. But as we have seen from the computation, the Mahalanobis distances are different for all three points. The vector pairs (x, y) and (w, p) are closer together because attributes Y and Z are correlated. The vector pairs (x, z) and (w, q) are closer together because attribute X has a larger variance than the other attributes, which outweighs the impact of attribute Z having a smaller variance than the others. The vector pairs (y, z) and (w, r) have the smallest Mahalanobis distance because attributes X and Y have variances greater than one, which means the vectors are "closer" to the reference than if the variances were one.

The advantage of using Mahalanobis distance over Euclidean distance is that the Mahalanobis distance is normalized by the variance of the attributes and the correlation between attributes.
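The comparison between the two distance measures can be reproduced with a few lines. The sketch below hard-codes the inverse covariance matrix used in the solution above; the function names are illustrative.

```python
import math

# Inverse covariance matrix as used in the solution above.
SIGMA_INV = [
    [1 / 9, 0, 0],
    [0, 1 / 3, 1 / 3],
    [0, 1 / 3, 4 / 3],
]


def mahalanobis(a, b):
    """sqrt((a - b)^T Sigma^-1 (a - b)) for 3-vectors a and b."""
    d = [ai - bi for ai, bi in zip(a, b)]
    q = sum(d[i] * SIGMA_INV[i][j] * d[j] for i in range(3) for j in range(3))
    return math.sqrt(q)


pairs = {
    "(x, y)": ((1, 1, 0), (1, 0, 1)),
    "(y, z)": ((1, 0, 1), (0, 1, 1)),
    "(x, z)": ((1, 1, 0), (0, 1, 1)),
    "(w, p)": ((1, 1, -1), (1, 0, 0)),
    "(w, q)": ((1, 1, -1), (0, 1, 0)),
    "(w, r)": ((1, 1, -1), (0, 0, -1)),
}
for name, (a, b) in pairs.items():
    print(name, round(mahalanobis(a, b), 3), round(math.dist(a, b), 3))
```

All six pairs have the same Euclidean distance, so any difference in the first column comes entirely from the variances and the Y-Z correlation encoded in the covariance matrix.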

3) Reinforcement Learning [14] Problem from the 2014 Final Exam

a) What are the main differences between supervised learning and reinforcement learning? [4]

SL: static world[0.5], availability to learn from a teacher/correct answer[1]

RL: dynamic changing world[0.5]; needs to learn from indirect, sometimes delayed

feedback/rewards[1]; suitable for exploring unknown worlds[1]; temporal analysis/worried

about the future/interested in an agent’s long term wellbeing[0.5], needs to carry out actions to

find out if they are good—which actions/states are good is (usually) not known in advance1[0.5]

b) Answer the following questions for the ABC world (given at a separate sheet). Give the

Bellman equation for states 1 and 4 of the ABC world! [3]

U(1) = 5 + γ*U(4) [1]

U(4) = 3 + γ*max(U(2)*0.3 + U(3)*0.1 + U(5)*0.6, U(1)*0.4 + U(5)*0.6) [2]

No partial credit!

c) Assume you use temporal difference learning in conjunction with a random policy which

chooses actions randomly assuming a uniform distribution. Do you believe that the estimations

obtained are a good measurement of the “goodness” of states, that tell an intelligent agent

(assume the agent is smart!!) what states he/she should/should not visit? Give reasons for your

answer! [3]

Not really; we assume an intelligent agent will take actions that lead to good states and avoid bad states. An agent that uses the random policy might not recognize that a state is a good state if both good and bad states are successors of this state; for example, consider a state S1 (R=-1) with two successor states, S2 (R=+100) and S3 (R=-100).

Due to the agent’s policy, the agent will fail to realize that S1 is a good state, as the agent’s average reward for visiting the successor states of S1 is 0; an intelligent agent would almost always go from S1 to S2, making S1 a high utility state with respect to TD-learning.

d) What role does the learning rate α play in temporal difference learning; how does running temporal difference learning with low values of α differ from running it with high values of α? [2]

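It determines how quickly our current beliefs/estimations are updated based on new evidence. With a low learning rate the estimates change slowly and each new observation has little influence; with a high learning rate the estimates closely follow the most recent observations.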

e) Assume you run temporal difference learning with high values of γ; what are the implications of doing that? [2]

If γ is high, the agent will focus more on its long-term wellbeing, and will shy away from taking actions that yield immediate rewards but lead to medium- and long-term suffering of the agent.
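The roles of the learning rate and the discount factor can be seen in a single temporal difference update. This is a minimal sketch, assuming the update form U(s) ← U(s) + α(R(s) + γU(s') − U(s)); the two-state example and the name `td_update` are hypothetical and unrelated to the ABC world.

```python
def td_update(utilities, state, reward, next_state, alpha, gamma):
    """One TD update: U(s) <- U(s) + alpha * (R(s) + gamma * U(s') - U(s))."""
    target = reward + gamma * utilities[next_state]
    utilities[state] += alpha * (target - utilities[state])
    return utilities[state]


# Hypothetical example: U(s1) = 0, U(s2) = 10, reward 5 in s1.
for alpha in (0.1, 0.9):
    u = {"s1": 0.0, "s2": 10.0}
    new_value = td_update(u, "s1", reward=5, next_state="s2", alpha=alpha, gamma=0.5)
    print(f"alpha={alpha}: U(s1) moves from 0.0 to {new_value:.2f}")

for gamma in (0.0, 1.0):
    u = {"s1": 0.0, "s2": 10.0}
    new_value = td_update(u, "s1", reward=5, next_state="s2", alpha=1.0, gamma=gamma)
    print(f"gamma={gamma}: target for U(s1) is {new_value:.2f}")
```

A larger learning rate moves the estimate further toward the target in one step, while a larger discount factor makes the target depend more on the utility of the successor state.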

13) Non-Parametric Density Estimation1 (Ungraded)

Assume we have a one dimensional dataset containing values {2.1, 3, 7, 8.1, 9, 12}

i. Assume h=2 for all questions (formula 8.3); compute p(x) using equation 8.3 for x=6.5 and x=9.9.

Assuming origin = 0: p(6.5) = 1/(6×2) = 1/12, p(9.9) = 2/(6×2) = 2/12

ii. Now compute the same densities using Silverman’s naïve estimator (formula 8.4)!

p(6.5) = 1/(6×2) = 1/12, p(9.9) = 1/12

iii. Now assume we use a Gaussian Kernel Estimator (equation 8.7); give a verbal description and a formula how this estimator measures the density for x=10.

p(10) = 1/12 * (K(7.9/2) + K(7/2) + K(3/2) + K(1.9/2) + K(1/2) + K(2/2))

with K being: K(u) = 1/sqrt(2π) * e^(-u²/2)

iv.

Compare the 3 density estimation approaches; what are the main differences and

advantages for each approach?

i) uses fixed bins; ii) uses bins that are defined by a sliding window with respect to the query point; in iii) the contribution of a point to the density of a query point decreases with the distance between the two points.

i) is sensitive to the bin origin whereas ii) is independent of it.

i) and ii) are hard techniques; a point either contributes or does not contribute to the density of the query point; iii) is a soft technique in which points contribute to a certain degree to the density of the query point.

i) is less smooth than ii), which is less smooth than iii); iii) is more precise than ii), and ii) is more precise than i), in approximating the actual density function.

Histograms are the easiest to interpret as they indicate actual numbers of observations in a range, whereas the other two approaches are slightly more difficult to interpret.
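The three estimators from parts i) to iii) can be compared side by side. The sketch below is written under assumptions: the naive estimator counts points in the window (x − h, x + h] and divides by 2Nh, which reproduces the values given above, and the function names are illustrative.

```python
import math

DATA = [2.1, 3, 7, 8.1, 9, 12]
H = 2


def histogram(x, origin=0.0):
    """Histogram estimate: points in the same fixed bin as x, divided by N*h."""
    def bin_index(v):
        return math.floor((v - origin) / H)

    count = sum(1 for xt in DATA if bin_index(xt) == bin_index(x))
    return count / (len(DATA) * H)


def naive(x):
    """Naive estimate (assumed form): points in (x - h, x + h], divided by 2*N*h."""
    count = sum(1 for xt in DATA if x - H < xt <= x + H)
    return count / (2 * len(DATA) * H)


def gaussian_kernel(x):
    """Gaussian kernel estimate: every point contributes, weighted by K((x - xt)/h)."""
    def kernel(u):
        return math.exp(-u * u / 2) / math.sqrt(2 * math.pi)

    return sum(kernel((x - xt) / H) for xt in DATA) / (len(DATA) * H)


for x in (6.5, 9.9):
    print(x, histogram(x), naive(x))
print(10, gaussian_kernel(10))
```

Shifting `origin` changes the histogram estimate but leaves the other two untouched, which is the sensitivity to the bin origin noted above.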