peak 0

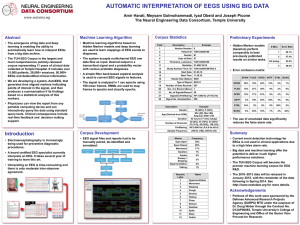

advertisement

Brain-EEG coherence. The EEG signal can have responses to sound onsets within the millisecond range, as evidenced by the auditory brainstem response, which involves more than a thousand repetitions. http://en.wikipedia.org/wiki/Auditory_brainstem_response The EEG signal can also respond in a very fast time scale to speech or complex signals, as evidenced by the complex auditory brainstem response (CABR). http://www.ncbi.nlm.nih.gov/pmc/articles/PMC2868335/pdf/nihms-181042.pdf http://www.nature.com/nrn/journal/v11/n8/full/nrn2882.html CABR involves thousands of repetitions of the stimulus. These techniques show that the brain can lock to sound, speech in particular, at more than 100 Hz. This locking should be observed in continuous (not averaged) paradigms by calculating the coherence between the EEG signal and the sound. I tested this in a dataset downloaded from: http://sourceforge.net/projects/aespa/?source=navbar as part of a demo for using Ed Lalor’s lab software. The speech signal consists of binaurally presented speech. The signal downloaded from the site was already Hilbert filtered and resampled at 512 Hz. The EEG signal was referenced to mastoid. The resulting signal has high frequencies corresponding to the speech envelope and to the fundamentals of the vowels, here is its Fourier transform: Single-Sided Amplitude Spectrum of y(t) 0.03 0.025 |Y(f)| 0.02 0.015 0.01 0.005 0 0 50 100 150 Frequency (Hz) 200 250 The coherence below (in blue) was calculated between this signal and the EEG. In order to estimate the significance I “circularly rotated” the speech signal thus creating a surrogate coherence distribution. The red band represents its mean an standard deviation. The data suggests that the brain synchronizes with the speech signal at several frequencies, corresponding both to phonemes and fundamentals. The following graphs represent the cross correlation of the speech and the EEG signal filtered around the first frequency peak, between 4 and 12 Hz. Peaks to the right of 0 indicates that speech precedes EEG. And here is the same zoomed in around 0. This suggests an N1-P2 complex, perhaps locked to the onset of the silables. The following graph represents the cross-correlation of speech and EEG, the signals were filtered between 160 and 240 Hz corresponding to the high frequency peaks in the coherence graph. I don’t know what to make of this. The first clear peak is around 0 and is much narrower and clearly different than the others. The fact that there is no peak before 0 indicates that speech influences EEG. All the activity to the right of 0 might be an artifact. Or maybe the whole thing is an artifact. Here is the 0 peak zoomed in. It peaks at around 0.01 seconds and it is to the right of 0 indicating speech precedence. Also, the EEG signal had been previously filtered (not by me) at 15 Hz, so there was very little power remaining in the high frequencies. More meaningful sound signal Since the brain does not care much about sustaind sounds I figured that it might be good to correlate the EEG activity to a transformed version of the sound that is larger at onsets. Following is the broad-band cross-correlation between EEG and speech. There is a clear peak at 0, however the signal to noise is not very good and the peak does not resemble a traditional auditory evoked response. In order to detect sound onsets (weighted by the strenght of the onset) I low-pass filtered the Hilbert transformed speech signal at 40 hz, and then I derived it by taking the running difference over 5 samples. We then rectified the resulting function. The follwing figure represents the Hilbert transformed speech signal (top) and the “onset” signal, bottom. It can be seen that the size of the transformed peaks are commesurate with the size of the envelope, but the sustains are not represented. Following is the cross-correlation between the transformed speech signal and the EEG. The signal to noise appears much better than that of the original signal. Follwing is the same graph zoomed in around 0. And N1-P2 complex typical of an auditory evoked response is apparent. This breaches the gap between ERP and continuous based analises. Extension to other data set The same analyses were applied to data from Lalor’s lab. Data consisted of 5 repetitions of binaurally presented speech. Each two minutes long. No clear coherence peak was observed at any frequency. As shown in the previous data-set, the onset transformed signal presented a clearly higher signal to noise ratio, considered as the size of the peak right after 0 in relation with the size of the noise far from the peak. Here are the cross-correlations for each repetition. It can be seen that in the 4th repetition the raw evoked peak is barely visible, but is clearly detected when using the onset signal. It is interesting that although 3 of the 5 repetitions have clear peaks the other two don’t really seem to exhibit peaks at all, this might reflect differences in attentional modulation across trials or perhaps artifacts that make the brain response more noisy.