

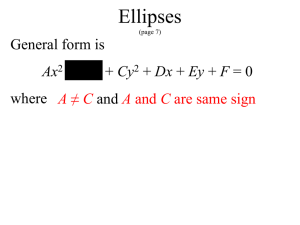

Detection and Segmentation of Human Faces in Color Images with Complex Backgrounds

Manika Singhal#1, Somya Garg#2, Nidhi Garg#3
Department of CSE, Raj Kumar Goel Institute of Technology for Women, Ghaziabad, India
1manikasinghal241@gmail.com 2mastsomya@gmail.com 3nidhigarg@rkgitw.edu.in

Abstract-This paper proposes a novel, fast approach to the detection, segmentation, and localization of human faces in color images with complex backgrounds. First, a number of evolutionary agents are uniformly distributed in the 2-D image environment to detect skin-like pixels and to segment each face-like region by activating their evolutionary behaviors. Wavelet decomposition is then applied to each region to detect possible facial features, and a three-layer BP neural network is used to detect the eyes among those features. Experimental results show that the proposed approach is fast and has a high detection rate.

Keywords-Face detection; Face location; Image segmentation; Evolutionary computation; Agent; Wavelet decomposition; Neural network.

I. INTRODUCTION

Face detection is one of the most popular research topics in computer vision. It has many applications, including face recognition, crowd surveillance, and human-computer interaction. The task is to detect all faces in an input image and to determine their locations and sizes. It is complicated by complex backgrounds, varying illumination conditions, a wide range of facial expressions, and so on. We therefore have to find an effective approach that is largely invariant to these changes.

It may seem that face detection is a trivial task. After all, human beings do it every day without any effort. The human visual system can easily detect a human face and differentiate it from its surroundings, but it is not easy to train a computer to do so. Detecting a face in a scene is easy for the human brain because of the high degree of parallelism it achieves. Present-day computational systems have not been able to reach that level of parallelism in processing information.

II. FACE DETECTION

In this step, morphological operations with a structuring element are applied to the image; such operations are generally used on binary images. Image regions and holes are then filled based on morphological reconstruction. Since this stage aims to find the exact face region, morphological closing and opening are applied to the reconstructed image. Finally, a morphological dilation with a square structuring element is used to eliminate the effects of slightly slanted edges, and a vertical linear structuring element is then employed in a closing operator to force the strong vertical edges closed.

III. FACE LOCALIZATION

When candidate face areas are screened, overlapping foreheads, as well as connections between the forehead and other parts such as clothing, make the screening difficult. A closing operation should therefore be applied first to separate the connections before further processing. In this step, non-face regions are then segmented out by heuristic filtering based on the major-to-minor axis ratio. Only regions whose area is greater than or equal to 1/250 of the area of the largest region are retained, and regions with a Width/Height ratio < 6 are removed.

IV. FACE FEATURE CLASSIFICATION

The purpose of this stage is to place a bounding box around each face in the localized region. Since the main color in a human face is skin color, the threshold should be large enough. On the other hand, a face region also contains eyes, mouth, hair, nose, and other background colors, so the threshold cannot be too high.

V. FACE DETECTION ALGORITHM

Face detection contains two major modules: (i) face localization for finding face candidates and (ii) facial feature detection for verifying detected face candidates.
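The closing and opening steps of Section II can be illustrated with a minimal sketch in plain Python. It is not the authors' implementation: the function names, the list-of-lists binary image format, the odd-sized rectangular structuring element, and the choice to treat pixels outside the image as background are all assumptions made for illustration, and the hole filling by morphological reconstruction is not included.

```python
def _morph(img, se_h, se_w, reducer):
    """Apply a rectangular se_h x se_w structuring element (odd sizes assumed).

    Pixels outside the image are treated as background (0); this border
    rule is an assumption of the sketch, not something the paper states.
    """
    rows, cols = len(img), len(img[0])
    rh, rw = se_h // 2, se_w // 2
    out = [[0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            window = [
                img[rr][cc] if 0 <= rr < rows and 0 <= cc < cols else 0
                for rr in range(r - rh, r + rh + 1)
                for cc in range(c - rw, c + rw + 1)
            ]
            out[r][c] = reducer(window)
    return out


def dilate(img, se_h, se_w):
    """Binary dilation: a pixel is set if any pixel under the element is set."""
    return _morph(img, se_h, se_w, max)


def erode(img, se_h, se_w):
    """Binary erosion: a pixel is set only if all pixels under the element are set."""
    return _morph(img, se_h, se_w, min)


def closing(img, se_h, se_w):
    """Closing (dilation then erosion) fills small gaps and holes."""
    return erode(dilate(img, se_h, se_w), se_h, se_w)


def opening(img, se_h, se_w):
    """Opening (erosion then dilation) removes small isolated specks."""
    return dilate(erode(img, se_h, se_w), se_h, se_w)
```

With these hypothetical helpers, `closing(mask, 3, 3)` corresponds to a closing with a square structuring element, while `closing(mask, 3, 1)` uses a vertical linear element of height 3, the kind of operator the text uses to join strong vertical edges. The element sizes are placeholders and would need tuning on real images.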
The eye and mouth candidates are verified by checking (i) luma variations of the eye and mouth blobs; (ii) geometry and orientation constraints of the eyes-mouth triangles; and (iii) the presence of a face boundary around the eyes-mouth triangles.

Fig. 1 Face Detection

A. Lighting compensation and skin tone detection

The appearance of skin-tone color can change under different lighting conditions. We introduce a lighting compensation technique that uses “reference white” to normalize the color appearance. We regard the pixels with the top 5 percent of the luma (nonlinear gamma-corrected luminance) values as the reference white, provided that the number of these reference-white pixels is larger than 100. The R, G, and B components of the color image are then adjusted by the same scaling that brings these reference-white pixels to the gray level of 255.

Fig. 2 Example of Face Detection Technique

B. Localization of Facial Features

Among the various facial features, the eyes and mouth are the most suitable for recognition and for estimating 3D head pose. Most approaches to eye and face localization are template based. Our approach, however, is able to locate the eyes, mouth, and face boundary directly, based on measurements derived from the color-space components of an image.

Based on the locations of the eye and mouth candidates, our algorithm first constructs a face boundary map from the luma and then uses a Hough transform to extract the best-fitting ellipse. The fitted ellipse is associated with a quality measurement used for computing the eyes-and-mouth triangle weights. An ellipse in a plane has five parameters: an orientation angle, the two coordinates of the center, and the lengths of the major and minor axes. Since we know the locations of the eyes and mouth, the orientation of the ellipse can be estimated from the direction of the vector that runs from the midpoint between the eyes to the mouth. The location of the ellipse center is estimated from the face boundary. Hence, we only require a two-dimensional accumulator for an ellipse in a plane, and the ellipse with the highest vote is selected. Each eye-mouth triangle candidate is associated with a weight computed from its eye and mouth maps, its ellipse vote, and a face orientation term that favors vertical faces and symmetric facial geometry.

Fig. 3 Localization of Facial Features

Fig. 4 Example of Face Capture

VI. CONCLUSION

We have presented a face detection algorithm for color images that uses a skin-tone color model and facial features. Our method first corrects the color bias with a novel lighting compensation technique that automatically estimates the reference-white pixels. We overcome the difficulty of detecting low-luma and high-luma skin tones by applying a nonlinear transform to the YCbCr color space. Our method detects skin regions over the entire image and then generates face candidates based on the spatial arrangement of these skin patches. The algorithm constructs eye, mouth, and boundary maps for verifying each face candidate. Detection results for several photo collections have been presented. Our goal is to design a system that detects faces and facial features, allows users to edit detected faces, and uses the facial features as indices for retrieval from image and video databases.
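The reference-white lighting compensation described in Section V-A can be sketched as follows. This is a minimal illustration under stated assumptions rather than the authors' implementation: the function names, the flat list of (R, G, B) tuples, the Rec. 601 luma weights, and the use of a per-channel mean over the reference-white pixels to derive the scale factors are all choices made here. Only the 5 percent fraction, the minimum count of 100, and the target level of 255 come from the text.

```python
def luma(r, g, b):
    """Approximate luma with Rec. 601 weights (an assumption of this sketch)."""
    return 0.299 * r + 0.587 * g + 0.114 * b


def compensate_lighting(pixels, top_fraction=0.05, min_count=100):
    """Scale R, G, B so that the reference-white pixels map to level 255.

    pixels: list of (r, g, b) tuples with values in 0..255.
    Returns a new list; the input is returned unchanged (as a copy) when
    there are not more than min_count reference-white pixels.
    """
    if not pixels:
        return []
    # Luma threshold that separates the brightest top_fraction of pixels.
    lumas = sorted((luma(*p) for p in pixels), reverse=True)
    k = max(1, int(len(pixels) * top_fraction))
    threshold = lumas[k - 1]
    # Ties at the threshold may make this set slightly larger than 5 percent.
    reference = [p for p in pixels if luma(*p) >= threshold]
    if len(reference) <= min_count:
        return list(pixels)
    # Hypothetical scaling rule: per-channel mean of reference white -> 255.
    means = [sum(p[ch] for p in reference) / len(reference) for ch in range(3)]
    scales = [255.0 / m if m > 0 else 1.0 for m in means]
    return [
        tuple(min(255, round(p[ch] * scales[ch])) for ch in range(3))
        for p in pixels
    ]
```

Values are clipped at 255 after scaling, so pixels brighter than the reference-white average saturate instead of overflowing. Scaling all three channels by a single factor derived from the mean luma would be an equally plausible reading of the text.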