18Mar11 - MiraCosta College

advertisement

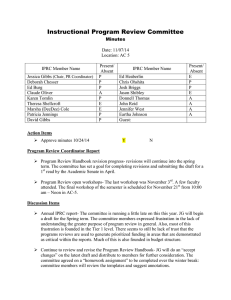

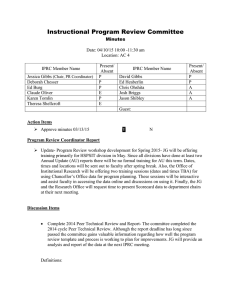

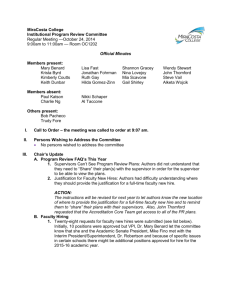

IPRC Meeting Minutes, 18Mar11, 3 to 5pm

Members in attendance: JAustin, JFerris, DRobertson, DSmith, ATaccone, MFino, KCleveland, PPerry, MWhitney, YBalcazar, LLevel, JRomaine, CDwyer

Members absent: KCoutts, PDeegan, LKurokawa, NSchaper, HGomez-Zinn, LLambert, JMoore, KReyes, TFore, GShirley

Persons wishing to address the committee: None

As a preface to the meeting, MFino shared the items officially routed to IPRC from Steering Council. Items 3 through 9 were just approved by Steering on 18Mar11:

1. Working with BPC in a task force to form the Integrated Planning Manual (to be recommended by BPC to ASC for approval)
2. Working with C&P on biennial program review requirements for CTE programs (AP 4103), to be approved by ASC
3. Changes to collegewide program review standards: IPRC to Admin Council
4. Changes to academic program review process: IPRC and AAC to ASC
5. Changes to collegewide program review process: IPRC to Admin Council
6. Academic Program Review Validation: IPRC to ASC
7. Nonacademic Program Review Validation: IPRC, then to Admin Council
8. Incorporation of SLOs into Academic Program Review: IPRC and AAC to ASC
9. Incorporation of SLOs into Nonacademic Program Review: IPRC to Admin Council

II. Integrating SLOs and assessments into program review

MWhitney led the discussion and provided handouts that included: (a) ACCJC rubrics for SLOs and Program Review, (b) an overview of SLOs and PR written by Mark, (c) an Institutional Learning Outcomes matrix, and (d) an excerpt from a guidance document written by the statewide academic senate. For academic programs, we want to be aware of how users reflect on performance in TracDat and exactly what information flows into Program Review. Specifically, we want to ensure that the way SLOs are reported in Program Review does not stifle faculty members' ability to “think out loud” in the information they record in TracDat.
Discussion broadened to similar performance metrics that exist in other areas of the college (some are called Administrative Unit Outcomes).

Program review and integrated planning

An Integrated Planning Manual draft is forthcoming. IPRC needs academic committee members to work on a task force with BPC members.

MFino presented the possible use of Blackboard (Bb) as a tool to manage the Program Review process. A “Program Review” class was created in Blackboard. All program review authors would be enrolled as “students” in the class, and all members of IPRC and all management (Dept Chairs, deans, VPs, managers) would be enrolled as “instructors.” If we continue with the form-driven approach as previously presented, Bb can house the PR packets and serve as the database of all Program Reviews. Bb can also document how IPRC chooses to “validate” programs.

As a starting point for the discussion, Mike showed the following possible timeline for the Program Review process. It includes steps that encourage discussion of the program review between department members and deans/managers before the final program review is submitted. Authors and Managers (e.g., Dept Chairs, deans, managers, VPs) would give the program a rating (example given below). IPRC, as a committee, would then assign the final program ratings, based primarily on the work of the Authors and Managers. The expectation is that IPRC will move validation to a consent calendar whenever the program ratings all agree; in the (expected) small number of cases where they do not agree, IPRC will make the final judgment. IPRC committee members would also be a resource throughout the entire process. Bb can document all of these ratings as well as the final validation ratings. With a defined process flow, we can better determine the right tool (e.g., Bb, PERCY, ??) to manage this process and best link it to the next step: resource allocation for program development plans.

| Stage Name | Stage Completion Date | Time at This Stage | Reviewers | Result |
|---|---|---|---|---|
| Review-Reflect-Plan | 16Sep11 | months | Program Author (IPRC) | Program Review Packet |
| Local Revision | 30Sep11 | 2 weeks | Program/Dept members (IPRC) | Discussion/Revision |
| Mgmt Revision | 28Oct11 | 4 weeks | Department Chairs, Deans/Managers (IPRC) | Discussion/Revision |
| Program Evaluation | 18Nov11 | 3 weeks | Department Chairs, Deans/Managers, Division Leadership (IPRC) | Recommend Scoring |
| Program Validation | 16Dec11 | 4 weeks | | Reconcile scoring; Validate |
| Transition to Planning and Budget | End of Spring Flex week | 4 weeks | | Program authors translate their development plans into budget requests |

IPRC Program Review Scoring (example)

1. Program is effectively meeting the mission of the college in all areas of review. Program development plans appropriately address areas to improve or expand.
2. Program is effectively meeting the mission of the college. In three or more areas of review, the program needs significant improvement in performance against standards. Program development plans appropriately address areas to improve.
3. Program is not effectively meeting the mission of the college in three or more areas of review. Program development plans do not sufficiently address areas to improve.

V. Subcommittee breakouts on data and standards for program reviews

Saved for next meeting. Committee members were asked to look at their own programs and use the worksheets developed to see whether the consolidated standards work for their programs and what measures exist (or should exist) to support measuring performance against standards.

Future Meetings and Agenda Items: None

Adjournment