Online Object Tracking using L1/L2 Sparse Coding and Multi Scale

International Journal of Electrical, Electronics and Computer Systems (IJEECS)

________________________________________________________________________________________________

Online Object Tracking using L1/L2 Sparse Coding and Multi Scale

Max Pooling

1 V.S.R Kumari, 2 K.Srinivasarao

1 Professor & HOD, Dept. of ECE, Sri Mittapalli College of Engineering, Guntur, A.P, India.

2 PG Student (M. Tech), Dept. of ECE, Sri Mittapalli College of Engineering, Guntur, A.P, India.

Abstract: Online object tracking is a major challenge problem in computer vision due difficulties to account for appearance changes of object. For efficient and robust tracking, we propose a new novel online object tracking algorithm is, SPCA (Sparse prototype based Principal

Component Analysis). In order to achieve PCA reconstruction to represent an object by sparse prototype, we introduce l1/l2 sparse coding and multi-scale max pooling. In order to reduce tracking drift, we present a method that takes occlusion and motion blur into account rather than simply includes image observations for model update and compare the proposed method with several existing methods like IVT, MIL, PN, VTD, Frag, l1 minimization by considering Overlap Rate, Center Error parameters.

Keywords: PCA, Frag Tracker, l1/l2 sparse coding, object

Representation, Object Tracking, Sparse Prototype,

Overlap Rate and Center Error.

I. INTRODUCTION

Email : mrksr4u@gmail.com visual information. Novel Tracking Algorithm is used to report the appearance variations.

The rest of the paper is summarized as follows as

II. RELATED WORK

Dong Wang, et al, proposed a novel Incremental

Multiple Principal Component Analysis (IMPCA) method for online learning dynamic tensor streams.

When newly added tensor set arrives, the mean tenor and the covariance matrices of different modes can be updated easily, and then projection matrices can be effectively calculated based on covariance matrices.[2]

Amit Adam and Ehud Rivlin proposed every patch votes on the possible positions and scales of the object in the current frame, by comparing its histogram with the corresponding image patch histogram. They tried to minimize a robust statistic in order to combine the vote maps of the multiple patches. [3]

Tracking is simply, a problem of estimating the trajectory of an object in the image plane as it moves around a scene. The achievement of object tracking is one of the major problems in the field of computer vision. Object tracking is plays a critical role in various applications like Motion - based recognition, Automated

Surveillance, Video indexing, HCI, Traffic Monitoring,

Vehicle navigation.[1].

Xiaoyu Wang, et al proposed the occlusion likelihood map is then segmented by Meanshift approach. The segmented portion of the window with a majority of negative response is inferred as an occluded region. If partial occlusion is indicated with high likelihood in a certain scanning window, part detectors are applied on the unclouded regions to achieve the final classification on the current scanning window. [4]

A tracking system consists of three components there are, 1. Appearance or Observation Model: This evaluates the state of the observed image patch i.e.,

Thang Ba Dinh, et al proposed the generative model encodes all of the appearance variations using a low whether observed image patch is belonging to the object class or not. 2. Dynamic or Motion Model: This describes the state of the objects over a time. 3. Search

Strategy Model: which aims to finding the likely states in current frame when compared with previous frame. dimension subspace, which helps provide strong reacquisition ability. Meanwhile, the discriminative classifier, an online support vector machine, focuses on separating the object from the background using a

Histograms of Oriented Gradients (HOG) feature set.[5]

In this paper, we propose a new robust and efficient appearance models by considering the occlusion and motion blur.

The main challenge in developing a robust tracking algorithm is to account for large appearance variations of the target object and background over time. In this paper, we tackle this problem with both prior and online

Jakob Santner, et al proposed semi supervised or multiple-instance learning, which shows augmenting an on-line learning method with complementary, tracking approaches, can lead to more stable results. In particular, they used a simple template model as a non adaptive and thus stable component, a novel optical-flow based meanshift tracker as highly adaptive element and an on-line

________________________________________________________________________________________________

ISSN (Online): 2347-2820, Volume -2, Issue-7, 2014

7

International Journal of Electrical, Electronics and Computer Systems (IJEECS)

________________________________________________________________________________________________ random forest as moderately adaptive appearance based learner.[6]

Where y denotes an observation vector, C represents a matrix of templates, z indicates the corresponding coefficients, and ∈ is the error term which can be viewed as the coefficients of trivial templates.

Mark Everingham, et al proposed describes the dataset and evaluation procedure. Review the state-of-the-art in evaluated methods for both classification and detection, analyse whether the methods are statistically different, what they are learning from the images (e.g. the object or its context), and what the methods find easy or confuse.[7]

By assuming that each candidate image patch is sparsely represented by a set of target and trivial templates, Eq. 2 can be solved via l min

1

2

‖y − De‖ 2

2

1

minimization as

+ λ ‖e‖

1

(ii)

Where ‖. ‖

1 respectively.

and ‖. ‖

2

denote the l

1

and l

2

norms J. Kwon a novel, et al proposed tracking algorithm that can work robustly in a challenging scenario such that several kinds of appearance and motion changes of an object occur at the same time.Algorithm is based on a visual tracking de-composition scheme for the efficient design of observation and motion models as well as trackers.[8]

The underlying assumption of this approach is that error ∈ can be modeled by arbitrary but sparse noise, and therefore it can be used to handle partial occlusion.

However, the l

1 tracker has two main drawbacks.

1.

l

1 tracker is computationally expensive due to that speed of the operation will be reduced.

J. Mairal, et al proposed Modeling data with linear combinations of a few elements from a learned dictionary has been the focus of much recent research in machine learning, neuroscience, and signal processing.

For signals such as natural images that admit such sparse

2.

It does not exploit rich and redundant image properties which can be captured compactly with subspace representations. representations, it is now well established that these models are well suited to restoration tasks. In this context, learning the dictionary amounts to solving a large-scale matrix factorization problem, which can be done efficiently with classical optimization tools.[9]

To overcome these problems we introduce a new algorithm we introduce a new algorithm based on

SPCA.

III. TRACKING ALGORITHM

J. Yang, et al proposed Research on image statistics suggests that image patches can be well represented as a sparse linear combination of elements from an appropriately chosen over complete dictionary. Inspired by this observation, we seek a sparse representation for each patch

In this paper, Object tracking is considered as a

Bayesian inference task in a Markov model with hidden state variables. Given a set of observed images Y

T

[y

1

, y

2

… … y

T

=

] at the t th frame the hidden state variable x t

is estimated recursively as, p ( x t

Y t

) ∝ p ( y t x t

) ∫ p ( x t x t−1

) p ( x t−1

Y t−1

) dx t−1

--- (iii) of the low-resolution input, and then use the coefficients of this representation to generate the high-resolution output. Theoretical results from compressed sensing suggest that under mild conditions, the sparse representation can be correctly recovered from the down sampled signals.[10]

Where p(x t

⁄ x t−1

) represents the dynamic (motion) model between two consecutive states, and p(y t

⁄ ) denotes observation model that estimates the likelihood of observing y t

at state x t

. The optimal state of the tracked target given all the observations up to t th frame is obtained by the maximum a posteriori estimation over N samples at time t by

J. Wright, et al proposed the choice of dictionary plays a key role in bridging this gap: unconventional dictionaries consisting of, or learned from, the training samples them selves provide the key to obtaining stateof-the-art results and to attaching semantic meaning to sparse signal representations. Understanding the good performance of such unconventional dictionaries in turn demands new algorithmic and analytical techniques.[11]. x̂ = arg

Where x i t max p (y i t x i t p ( x i t x t−1

) for i = 1,2, . . N ------- (iv)

indicates the i-th sample of the state x t

, and y t i denotes the image patch predicated by

A. Dynamic Model

x i t

.

1.

Object Tracking With Sparse Representation

In this paper, we apply an affine image warp to model the target motion between two consecutive frames. The

Sparse representation is mainly applied in pattern recognition, and computer vision like face recognition super-resolution and image in painting [12].Sparse

Representation in tracking can be described six parameters of the affine transform are used to model p(x t

{x t

⁄

, y t x t−1

, θ t

)

, s t

, α

of t

, φ t

} tracked target. Let X t

=

where x, y translations, rotation angle, scale, aspect ratio, and skew respectively. The state transition is formulated by, approximated by the linear combination of known objects as p(x t

⁄ x t−1

) = N(X t

, X t−1

, φ) (v) y = Cz+ ∈= [C I] [ z

∈

] = De (i)

B. Observation Model

________________________________________________________________________________________________

ISSN (Online): 2347-2820, Volume -2, Issue-7, 2014

8

International Journal of Electrical, Electronics and Computer Systems (IJEECS)

________________________________________________________________________________________________

C. Update of Observation Model If no occlusion occurs, an image observation yt can be assumed to be generated from a subspace of the target object spanned by U and centered at μ. However, it is necessary to account for partial occlusion in an

The change in target object for visual tracking can be obtained by updating the observation model. The trivial coefficients are acquired for occlusion detection and appearance model for robust object tracking. We assume that a centered image observation y t

- μ of the tracked object can be represented by a linear combination of the

PCA basis vectors U and few elements of the identity matrix I (i.e., trivial templates). If there is no occlusion, the most likely image patch can be effectively represented by the PCA basis vectors and coefficients tend to be zeros. If partial occlusion occurs, the most likely image patch can be represented as a linear combination of PCA basis vectors and very few numbers of trivial templates. [13].

For each observation corresponding to a predicted state, we solve the following equation efficiently using the proposed algorithm each trivial coefficient vector corresponds to a 2D map as a result of reverse raster scan of an image patch. The occlusion map is referred as non-zero element of this map indicates that pixel is occluded. The ratio ή of the number of nonzero pixels and the number of occlusion map pixels is calculated. Two thresholds tr1and tr2 are used to describe the degree of occlusion. If the ratio, ή < tr1, the model can be updated directly. If tr1 < ή < tr2, it indicates that the target is partially occluded. Then the occluded pixels are replaced by its corresponding parts of the average observation µ, and use this recovered sample for update. Otherwise if ή > tr2, it means that a significant part of the target object is occluded, and this sample can be discarded without update.[14].

L(z i , e i ) = min z t

,e t

1

2

|||y −i − u i z

− e i || + λ |e i || ---- (vi)

And obtain z i and e i , where i denote the i-th sample of the state x.

The observation likelihood can be measured by their construction error of each observed image patch p ( y

−i x i

) = exp (− ||y −i − u i z

2

||

2

) ----- (vii)

However, Eq. 3.4 does not consider occlusion. Thus, we use a mask to factor out non-occluding and occluding parts, p ( y

−i x i w i

) = exp (−(w

)) ------(viii) i . (y −i − U i z

)| | 2

2

+ β ∑(1 −

IV. EXPERIMENTAL RESULTS

The proposed model is simulated using MATLAB and its performance is evaluated by comparing with several tracking algorithms, i.e., the incremental visual tracker

(IVT), L1 tracker (L1T), MIL tracker (MILT), the visual tracking decomposition tracker (VTD), PN , Frag

Tracker algorithms.

A.

Occlusion

The IVT, L1T , MILT , VTD , methods do not perform well, whereas the Frag algorithm performs slightly better. In contrast, our proposed method is better as shown in below figures

________________________________________________________________________________________________

ISSN (Online): 2347-2820, Volume -2, Issue-7, 2014

9

International Journal of Electrical, Electronics and Computer Systems (IJEECS)

________________________________________________________________________________________________

Figure 1: Qualitative evaluation: objects undergo heavy occlusion and pose change. Similar objects also appear in the scenes

________________________________________________________________________________________________

ISSN (Online): 2347-2820, Volume -2, Issue-7, 2014

10

International Journal of Electrical, Electronics and Computer Systems (IJEECS)

________________________________________________________________________________________________

120 200

100

150

80

60 100

40

20

50

0

0 100 200

Frame Number

300 400

0

0 100 200

Frame Number

300 400

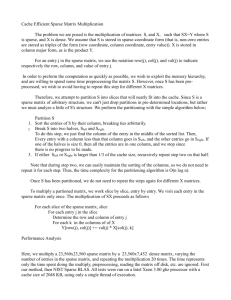

Data base

Person 1

Person 2

Person 3

IVT

11.45

7.95

46.675

Person 4

Person 5

11.867

8.534

Car scene 1 10.75

Car scene 2 13.11

Car scene 3 8.514

Car scene 4 16.764

Average 15.06

Figure 2: Center error for video clips/image series tested

Table 1: Average Center Error (Pixels) l1

8.75

8.85

121.475

6.467

9.734

6.85

9.21

4.614

12.864

20.97

PN

19.75

16.35

7.175

11.767

24.434

34.95

37.31

32.714

40.964

25.046

VTD

13.35

8.15

5.475

7.967

17.934

6.35

8.71

4.114

12.364

9.37

Table 2: Overlap Rate of tracking

MIL

34.55

11.85

50.075

73.567

65.734

17.45

19.81

15.214

23.464

34.63

Frag Track SPCA

7.85 6.65

13.25

7.275

8.15

50.775

8.867

184.634

24.25

26.61

33.767

8.734

98.25

100.61

22.014

30.264

36.11

96.014

104.264

56.35

Data base

Person 1

Person 2

Person 3

IVT

0.5

0.28

0.82

Person 4

Person 5

0.5

0.92

Car scene 1 0.85

Car scene 2 0.59

Car scene 3 0.28

Car scene 4 0.45

Average 0.54

C. Quantitative Evaluation l1

0.7

0.28

0.8

0.7

0.84

0.88

0.67

0.28

0.81

0.48

PN

0.45

0.70

0.56

0.45

0.64

0.65

0.49

0.70

0.66

0.54

Performance evaluation is an important issue that requires sound criteria in order to fairly assess the strength of tracking algorithms. Quantitative evaluation of object tracking typically involves computing the difference between the predicated and the ground truth center locations, as well their average values. Table II summarizes the results in terms of average tracking errors. Our algorithm achieves lowest tracking errors in almost all the sequences.

VTD

0.5

0.83

0.72

0.5

0.73

0.77

0.59

0.83

0.67

0.56

MIL

0.64

0.25

0.52

0.64

0.34

0.59

0.61

0.25

0.25

0.39

Frag Track SPCA

0.67 0.45

0.68

0.82

0.67

0.22

0.90

0.60

0.68

0.56

0.33

0.28

0.85

0.45

0.94

0.92

0.49

0.28

0.30

0.51 strength of subspace model and sparse representation.

Several experiments are carried out on different image sequences and compared with several state-of art techniques with proposed tracking algorithm; our algorithm is better tracking results. In future, our representation schemes extended for other vision problems including object recognition, and develop other online orthogonal subspace methods with the proposed model.

V. CONCLUSION

This paper presents a robust tracking algorithm based on sparse prototype PCA representation. In this work, we taken partial occlusion and motion blur into account for appearance update and object tracking by exploiting the

REFERENCES

[1] Thang Ba Dinh,” Co-training Framework of

Generative and Discriminative Trackers with

Partial Occlusion Handling”, Applications of

Computer Vision (WACV), 2011 IEEE

Workshop, 5-7 Jan. 2011, 642 - 649

________________________________________________________________________________________________

ISSN (Online): 2347-2820, Volume -2, Issue-7, 2014

11

International Journal of Electrical, Electronics and Computer Systems (IJEECS)

________________________________________________________________________________________________

[2] Dong Wang, Huchuan Lu, “Incremental MPCA for Color Object Tracking”, 2010 IEEE, 1051-

4651.

[9] B. Babenko, M.-H.Yang, and S. Belongie,

“Visual tracking with online multiple instance learning,” in Proc. IEEE Conf. Comput. Vis.

Pattern Recog., 2009, pp. 983–990

[3] M. Everingham, L. Van Gool, C. Williams, J.

Winn, and A. Zisserman, “The Pascal visual object classes (voc) challenge,” Int. J. Comput.

Vis., vol. 88, no. 2, pp. 303–338, Jun. 2010.

[10] Xiaoyu Wang,”An HOG-LBP Human Detector with Partial Occlusion Handling”, 2009 IEEE

12th International Conference on Computer

Vision (ICCV) 978-1-4244-4419-9.

[4] J. Kwon and K. Lee, “Visual tracking decomposition,” in Proc. IEEE Conf. Comput.

Vis. Pattern Recog., 2010, pp. 1269–1276

[11]

X. Li and W. Hu, “Robust visual tracking based on incremental tensor subspace learning,” in

Proc. IEEE Int. Conf. Comput. Vis., 2007, pp. 1–

8.

[5] Jakob Santner, Christian Leistner,” PROST:

Parallel Robust Online Simple Tracking”,

Computer Vision and Pattern Recognition

(CVPR), 2010 IEEE Conference, 13-18 June

2010, Page(s):723 – 730

[6]

[7]

[8]

J. Mairal, F. Bach, and J. Ponce, “Task-driven dictionary learning,”INRIA,

France, Tech. Rep. 7400, 2010.

Rocquencourt,

J. Yang, J. Wright, T. S. Huang, and Y. Ma,

“Image super-resolution via

19, no. 11, pp. 2861–2873, Nov. 2010. sparse representation,” IEEE Trans. Image Process., vol.

J. Wright, Y. Ma, J. Maral, G. Sapiro, T. Huang, and S. Yan, “Sparse representation for computer vision and pattern recognition,” Proc. IEEE, vol.

98, no. 6, pp. 1031–1044, Jun. 2010.

[12]

[13]

[14]

[15]

Amit Adam and Ehud Rivlin,” Robust

Fragments-based Tracking using the Integral

Histogram”, Computer Vision and Pattern

Recognition, 2006 IEEE Computer Society

Conference, 17-22 June 2006, Page(s): 798 – 805

S. Avidan, “Ensemble tracking,” in Proc. IEEE

Conf. Comput. Vis. Pattern Recog., 2005, vol. 2, pp. 494–501.

D. Comaniciu, V. R. Member, and P. Meer.

Kernel-based object tracking. IEEE Transactions on Pattern Analysis and Machine Intelligence,

25(5):564–575, 2003.

M. Black andA. Jepson, “Eigentracking: Robust matching and trackingof articulated objects using a view-based representation,” in Proc. Eur.Conf.

Comput. Vis., 1996, pp. 329–342

________________________________________________________________________________________________

ISSN (Online): 2347-2820, Volume -2, Issue-7, 2014

12