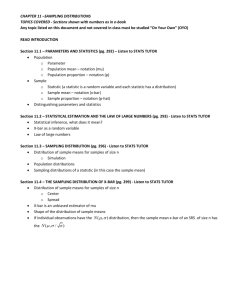

Chapter 7 Sampling Distributions

We want to estimate population parameters, such as μ (population mean), σ (population standard deviation), and p (population proportion).

We use the sample statistics x-bar, s, and p-hat (p^).

Estimates are numbers we get from specific samples.

Estimators are rules (functions) we use to get the estimates.

For example, the estimator X-bar says to sum the values and divide by n, the sample size.

Why the distinction?

Estimates are numbers and will change from sample to sample.

Estimators can be looked at as random variables.

Therefore we may know something about their distribution.

Let us look at a small example to illustrate how the distribution of X, the original RV, differs from the distribution of X-bar, the sample mean.

See file clt4.xlsx

Suppose that we have a DRV X with five values {0, 1, 2, 3, 4} and each value is

equally likely. Then the probability distribution is given in the first two columns.

Then suppose that we take a random sample of size 2 with replacement and we

take the sample mean (x-bar) of each of these samples. These are listed in columns

D – F. The probability distribution of X-bar for these samples is given in columns H

and I. The probability histograms are given on sheet 2. Note for X each bar is the

same height. For X-bar there are more bars and the histogram looks mound shaped!
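
The with-replacement case can also be enumerated directly. The following is a minimal Python sketch, not a copy of the spreadsheet: the variable names (`values`, `samples`, `xbar_dist`) are illustrative, and it assumes ordered samples, so (0, 1) and (1, 0) are counted separately.

```python
from collections import Counter
from fractions import Fraction
from itertools import product

values = [0, 1, 2, 3, 4]          # equally likely values of X

# All ordered samples of size 2 drawn with replacement (25 of them).
samples = list(product(values, repeat=2))

# Count how often each sample mean occurs, then convert counts to probabilities.
counts = Counter(Fraction(a + b, 2) for a, b in samples)
xbar_dist = {m: Fraction(c, len(samples)) for m, c in sorted(counts.items())}

for m, prob in xbar_dist.items():
    print(float(m), prob)
```

X has five equally likely values, while X-bar takes nine values whose probabilities rise to a peak at 2 and then fall, which is the mound shape noted above.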

What if we sample without replacement?

See columns K – M and O and P and blue bars on sheet 2.
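
A rough sketch of the without-replacement case in Python, assuming ordered samples of size 2 in which the same value cannot be drawn twice. The names (`samples`, `xbar_dist_wor`) are illustrative and do not come from the spreadsheet.

```python
from collections import Counter
from fractions import Fraction
from itertools import permutations

values = [0, 1, 2, 3, 4]          # equally likely values of X

# Ordered samples of size 2 with no repeated value (20 of them).
samples = list(permutations(values, 2))

# Distribution of the sample mean over those samples.
counts = Counter(Fraction(a + b, 2) for a, b in samples)
xbar_dist_wor = {m: Fraction(c, len(samples)) for m, c in sorted(counts.items())}

for m, prob in xbar_dist_wor.items():
    print(float(m), prob)
```

Under these assumptions the extreme means 0 and 4 can no longer occur, because they would require drawing the same value twice.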

What if the probabilities for X were not all equal?

See sheet 3

Recall the definition of the sample standard deviation:

$$ s = \sqrt{\frac{\sum (x_i - \bar{x})^2}{n - 1}} $$
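
As a quick check of the definition, here is a short Python sketch that computes s by hand and compares it with the standard library's `statistics.stdev`. The data values are made up for illustration.

```python
import math
import statistics

data = [2, 4, 4, 5, 7, 8]         # hypothetical sample, not from the notes
n = len(data)

xbar = sum(data) / n              # sample mean
# Sum of squared deviations from the mean, divided by n - 1, then square root.
s = math.sqrt(sum((x - xbar) ** 2 for x in data) / (n - 1))

print(xbar, s, statistics.stdev(data))
```

`statistics.stdev` also divides by n - 1, so the two results should agree.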

What you should know.

X-bar is a good estimator of μ because:

E(X-bar) = μ (unbiased)

The average of all the sample averages is μ.

The standard deviation of X-bar is small, compared to other estimators.

σ x-bar = σ / √n (the standard error of the sample mean).

Also, as n gets bigger, X-bar tends to get closer to μ.
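
These two facts can be checked exactly on the small example with values {0, 1, 2, 3, 4} and samples of size 2 taken with replacement. The sketch below is illustrative only; the variable names are assumptions, not taken from the spreadsheet.

```python
import math
from itertools import product

values = [0, 1, 2, 3, 4]          # equally likely values of X
n = 2                             # sample size, sampling with replacement

# Population mean and standard deviation of X.
mu = sum(values) / len(values)
sigma = math.sqrt(sum((v - mu) ** 2 for v in values) / len(values))

# Every possible sample mean (all 25 ordered samples are equally likely).
means = [sum(sample) / n for sample in product(values, repeat=n)]

mean_of_means = sum(means) / len(means)
sd_of_means = math.sqrt(sum((m - mean_of_means) ** 2 for m in means) / len(means))

print(mu, mean_of_means)                  # E(X-bar) compared with mu
print(sigma / math.sqrt(n), sd_of_means)  # sigma / sqrt(n) compared with sd of X-bar
```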

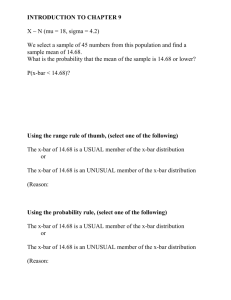

Most important: Central Limit Theorem (CLT). If Q is an estimator that has mean μ

and standard deviation σ(Q) then:

(Q – μ) / σ(Q) is approximately N(0,1) as n gets big.

For X-bar this becomes:

$$ Z = \frac{\bar{X} - \mu}{\sigma / \sqrt{n}} $$

What is a big n? For most cases n ≥ 30 will work very nicely.
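
One way to get a feel for the n ≥ 30 guideline is a small simulation. The sketch below assumes a skewed population (exponential with mean 1 and standard deviation 1), which is a choice made here for illustration and not part of the notes. The seed and the number of repetitions are arbitrary.

```python
import random
from statistics import NormalDist, fmean

rng = random.Random(0)            # fixed seed so the run is repeatable
n = 30                            # sample size
reps = 5000                       # number of simulated samples (arbitrary)

# Assumed population: exponential with rate 1, so mu = 1 and sigma = 1.
mu, sigma = 1.0, 1.0
se = sigma / n ** 0.5             # standard error of the sample mean

# Standardize each simulated sample mean: Z = (x-bar - mu) / (sigma / sqrt(n)).
z_values = [
    (fmean([rng.expovariate(1.0) for _ in range(n)]) - mu) / se
    for _ in range(reps)
]

# Share of standardized means within 1.96 of zero, versus the N(0,1) value.
share = sum(abs(z) <= 1.96 for z in z_values) / reps
target = NormalDist().cdf(1.96) - NormalDist().cdf(-1.96)
print(round(share, 3), round(target, 3))
```

If the normal approximation is working, the two printed numbers should be close even though the population itself is far from normal.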

If a random sample is taken from a population with mean µ and standard deviation σ, and either n ≥ 30 or the population is normally distributed, then X̄ will be (approximately) normally distributed with mean µ and standard deviation σ / √n.

This is really nice because it is true for almost all X, and working with the Normal Distribution is really easy. Use a table or calculator.

Ex. Let μ = 200 and σ = 20.

Take a random sample of 100, n = 100

Then σ x-bar = σ / √n = 20 / 10 = 2.

What is the probability that the sample mean is less than or equal to 205?

P[X-bar ≤ 205] = normalcdf(-1000, 205, 200, 2) = .9938

Equivalently, standardizing:

P[X-bar ≤ 205] = P[Z ≤ (205 - 200)/2] = P[Z ≤ 2.5] = normalcdf(-10, 2.5) = .9938
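
For readers without a graphing calculator, the same probability can be sketched in Python with the standard library's `statistics.NormalDist`. This is an alternative to the calculator's normalcdf, not the method used in these notes. The variable names are illustrative.

```python
from statistics import NormalDist

mu, sigma, n = 200, 20, 100
se = sigma / n ** 0.5             # standard error of X-bar: 20 / 10 = 2

# Distribution of the sample mean under the CLT: N(mu, sigma / sqrt(n)).
xbar = NormalDist(mu, se)
p_direct = xbar.cdf(205)          # P(X-bar <= 205)

# Same probability after standardizing to Z = (205 - mu) / se.
z = (205 - mu) / se
p_standardized = NormalDist().cdf(z)

print(round(p_direct, 4), round(p_standardized, 4))
```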

Ex. Let μ = 200 and σ = 20

n = 100 and σ / √n = 2.

What is the probability that the sample mean is greater than 203?

P[X-bar > 203] = normalcdf(203, 1000, 200, 2) = .0668

P[Z > (203 - 200)/2] =

P[Z > 1.5] = .0668

What is the probability that the sample mean is between 197 and 202?

P(197 < X-bar < 202) = P(-1.50 < Z < 1.00)

= normalcdf(197, 202, 200, 2)

= .8413 - .0668 = .7745
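
The upper-tail and interval probabilities for this setup (μ = 200, σ = 20, n = 100) can be sketched in Python as follows, using `statistics.NormalDist` as a stand-in for the calculator's normalcdf. The variable names are illustrative.

```python
from statistics import NormalDist

mu, sigma, n = 200, 20, 100
xbar = NormalDist(mu, sigma / n ** 0.5)     # X-bar is approximately N(200, 2)

# Upper tail: P(X-bar > 203) = 1 - P(X-bar <= 203).
p_above = 1 - xbar.cdf(203)

# Interval: P(197 < X-bar < 202) = P(X-bar < 202) - P(X-bar < 197).
p_between = xbar.cdf(202) - xbar.cdf(197)

print(round(p_above, 4), round(p_between, 4))
```

An interval probability is a difference of two cumulative probabilities, which is the .8413 - .0668 step in the hand calculation.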

Ex. Let μ = 200 and σ = 20

n = 100 and σ / √n = 2.

Find a such that 97.5% of sample means fall below a.

(Find a such that 2.5% of sample means fall above a.)

P(X-bar < a) = .9750

P(Z < 1.96 ) = .9750

a = 1.96 * 2 + 200 = 203.92

invNorm(.975,200,2) = 203.92
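
A Python sketch of the same inverse calculation, using `NormalDist.inv_cdf` from the standard library in place of the calculator's invNorm. The variable names are illustrative.

```python
from statistics import NormalDist

mu, sigma, n = 200, 20, 100
se = sigma / n ** 0.5                       # 2.0

# Direct route: the 97.5th percentile of X-bar, which is N(200, 2).
a_direct = NormalDist(mu, se).inv_cdf(0.975)

# Two-step route: find z with P(Z < z) = .975, then unstandardize.
z = NormalDist().inv_cdf(0.975)             # about 1.96
a_from_z = z * se + mu

print(round(a_direct, 2), round(z, 2), round(a_from_z, 2))
```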

Calculator steps are the same as before; you just need to use σ / √n instead of σ, because we are interested in X-bar, not X.

You can use the CLT to calculate probabilities for the sample mean if you have a

sample bigger than or equal to 30 or you know that the underlying population (RV)

is normally distributed.

The CLT works for other estimators also, such as:

T = ∑ X ~ N(n * μ, √n * σ), where the second value is the standard deviation of the sum.

Ex. Let μ = 200 and σ = 20.

Take a random sample of 100, n = 100

T ~ N(μT = 20000, σT = 200)

Find the probability that the sum is greater than 20350.

P(T > 20350) = P(Z > 1.75) = normalcdf(20350,10000000,20000,200) = 0.040

normalcdf(1.75, 10) = 0.040

Find the probability that the sum is less than 20222.

P(T < 20222) = P(Z < 1.11) = normalcdf(0, 20222,20000,200) = 0.867

normalcdf(-10, 1.11) = 0.867
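
The two probabilities for the sum can be sketched in Python in the same way, treating T as approximately normal with mean n * μ and standard deviation √n * σ. `statistics.NormalDist` stands in for normalcdf here, and the variable names are illustrative.

```python
from statistics import NormalDist

mu, sigma, n = 200, 20, 100

mean_t = n * mu                   # 100 * 200 = 20000
sd_t = n ** 0.5 * sigma           # sqrt(100) * 20 = 200

total = NormalDist(mean_t, sd_t)  # approximate distribution of the sum T

p_greater = 1 - total.cdf(20350)  # P(T > 20350)
p_less = total.cdf(20222)         # P(T < 20222)

print(round(p_greater, 3), round(p_less, 3))
```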

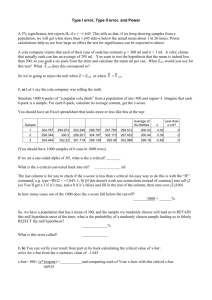

Say we want to estimate p = the population proportion of defective widgets. Since p

is a parameter it might be hard to obtain. We could take a random sample of size n

and find the number of defective widgets in the sample, call this number X, then we

let:

p^ = X / n

We read this as p-hat.

p-hat is then an estimator of p.

Using the Binomial RV one can show that E(p^) = p and the standard error of p^ = √(p * q/n), where q = 1 - p.

Using the CLT we can see that

$$ \frac{\hat{p} - p}{\sqrt{p q / n}} $$

will have an approximate standard normal distribution as n gets big.

Note that this is more an academic exercise now, since we can calculate exact

probabilities for p^ using the binomial RV directly. More on this later.
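
To make the comparison concrete, here is a small Python sketch that computes a probability for p^ both ways: exactly from the binomial distribution, and approximately from the normal distribution with standard error √(p * q/n). The values p = 0.10, n = 100 and the cutoff of 13 defectives are hypothetical, chosen only for illustration.

```python
from math import comb, sqrt
from statistics import NormalDist

p, n = 0.10, 100                  # hypothetical defect rate and sample size
q = 1 - p
x = 13                            # hypothetical cutoff: p-hat <= 13/100

# Exact: P(X <= 13) where X ~ Binomial(n, p), so P(p-hat <= 0.13).
exact = sum(comb(n, k) * p ** k * q ** (n - k) for k in range(x + 1))

# CLT approximation: p-hat is approximately N(p, sqrt(p * q / n)).
se = sqrt(p * q / n)
approx = NormalDist(p, se).cdf(x / n)

print(round(exact, 4), round(approx, 4))
```

The two answers will generally differ somewhat, since the normal curve is only an approximation to the discrete binomial distribution.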

Chapter 7 Illustrated.

The scores on the Math SAT are normally distributed with a mean of 420 and a

standard deviation of 65.

1. A random person is selected. What is the probability that he/she scored

above 500?

2. A random sample of 25 people is selected. What is the probability that the

mean of the sample is greater than 500?

You should know how to answer question 1.

X = score on the Math SAT. X ~ N(420, 65)

P (X > 500) = normalcdf(500, 10000, 420, 65) = .109

For question 2 you need to understand that X-bar is a random variable with mean 420 and standard deviation 65/√25 = 65/5 = 13.

P(X-bar > 500) = normalcdf(500, 10000, 420, 13) = 3.796 * 10^-10 ≈ 0

The sample mean varies much less than the original variable (in this case, Math SAT score).

You can use this because, although n = 25 < 30, the population is normally distributed.
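
Both SAT questions can be sketched in Python with `statistics.NormalDist`, used here as a substitute for the calculator's normalcdf. The variable names are illustrative.

```python
from statistics import NormalDist

mu, sigma = 420, 65               # Math SAT mean and standard deviation
n = 25                            # sample size for question 2

# Question 1: one randomly selected person, X ~ N(420, 65).
p_single = 1 - NormalDist(mu, sigma).cdf(500)

# Question 2: mean of 25 people, X-bar ~ N(420, 65 / sqrt(25)) = N(420, 13).
se = sigma / n ** 0.5
p_mean = 1 - NormalDist(mu, se).cdf(500)

print(round(p_single, 3), p_mean)
```

A score of 500 is about 1.2 standard deviations above the mean for one person, but more than 6 standard errors above it for the mean of 25 people, which is why the second probability is essentially zero.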

Page 297 (307): 7.7

In Class: 1, 2, 6, 8 , 12

Homework: 3, 5, 7, 11