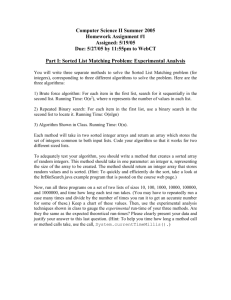

Lecture Note - Towson University


Advanced Data Structures and Algorithms

Chapter 2 Notes

ALGORITHM ANALYSIS

In order to write a program for a problem, we need DATA STRUCTURES and an ALGORITHM:

PROGRAM=DATA STRUCTURES + ALGORITHM

ALGORITHM:

An algorithm is a clearly specified set of simple instructions to be followed to

solve a problem.

There are 3 important features that an algorithm should possess:

Correctness

Robustness

Efficiency

The cost of a program can be described by its execution time T(N), where N is the problem size.

The time f(N) is defined as the duration it takes to execute N statements.

Assuming each statement takes the same time to execute, analyzing an algorithm amounts to counting the statements it executes.

There are 2 types of running time:

Worst case running time

Average case running time

ASYMPTOTIC NOTATION:

A problem may have numerous algorithmic solutions. In order to choose the

best algorithm for a particular task, you need to be able to judge how long a

particular solution will take to run. Or, more accurately, you need to be able to

judge how long two solutions will take to run, and choose the better of the two.

You don't need to know how many minutes and seconds they will take, but you

do need some way to compare algorithms against one another.

Asymptotic Notation is a way of expressing the main component of the cost of

an algorithm, using idealized units of computational work.

The four main types of Asymptotic Notations are:

Big-Oh notation-O(N)

Big-Omega notation-Ω(N)

Big-Theta notation- Θ(N)

Little-Oh notation-o(N)

Now let us look at these notations in detail.

Big-Oh notation O(f(N)): Asymptotic upper bound

T(N) = O(f(N))

Definition: T(N) = O(f(N)) if there are positive constants C and N0 such that T(N) ≤ C·f(N) whenever N ≥ N0.

That is, beyond N0 we have C·f(N) ≥ T(N), so f(N) is an upper bound on the growth of T(N).

Example:

$T(N) = 50N^2 + 100N^2 - 5N + 500$

$= O(N^3)$, so $f(N) = N^3$
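The definition can be spot-checked numerically. The sketch below is illustrative only: the constants C = 1 and N0 = 150 are choices made here, not values from the notes, and a finite check is evidence rather than a proof. The helper name `holds_from` is hypothetical.

```python
def T(n):
    # The example function from the notes, term by term.
    return 50 * n**2 + 100 * n**2 - 5 * n + 500


def f(n):
    # The claimed upper-bound function.
    return n**3


def holds_from(c, n0, upto):
    """Check T(n) <= c * f(n) for every n with n0 <= n <= upto."""
    return all(T(n) <= c * f(n) for n in range(n0, upto + 1))


# With C = 1 the inequality holds once N >= 150 ...
print(holds_from(1, 150, 10_000))
# ... but N0 = 100 is too small, since T(100) exceeds 100**3.
print(holds_from(1, 100, 10_000))
```

Any larger C would allow a smaller N0; the definition only asks that some pair of constants exists.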

Big-Omega (Asymptotic Lower Bound):

T(N) = Ω(g(N)) if there are positive constants C and N0 such that T(N) ≥ C·g(N) whenever N ≥ N0.

That is, beyond N0 we have C·g(N) ≤ T(N).

Example: $T(N) = 5(\log N)^{100} + 500$

$= \Omega(1)$

$= \Omega(1/N)$

$= \Omega(\log N)$

Big-Theta (Asymptotic Tight Bound):

Definition:

h(N) is a tight bound of T(N), written T(N) = Θ(h(N)), if and only if T(N) = O(h(N)) and T(N) = Ω(h(N)); that is, h(N) is both an upper and a lower bound of T(N).

Example: $T(N) = 3N^2 + 100N - 500$

$= O(N^3)$

$= O(N^2)$

$= \Omega(N)$

$= \Omega(\log N)$

$= \Omega(N^2)$

$\Rightarrow \Theta(N^2)$

Little-Oh

T(N) = o(P(N)) if T(N) = O(P(N)) and T(N) ≠ Θ(P(N)).

Example: $T(N) = 3N^2 + 100N - 500$

$= O(N^3)$, and also $= o(N^3)$

$= O(N^2)$, but $\neq o(N^2)$, because

$T(N) = \Omega(N^2)$

RULES:

The important things to know are

Rule 1.

If T1(N) = O(f (N)) and T2(N) = O(g(N)), then

(a) T1(N) + T2(N) = O(f (N) + g(N)) (intuitively and less formally it is

O(max(f (N), g(N))) ),

(b) T1(N) ∗ T2(N) = O(f (N) ∗ g(N)).

Rule 2.

If T(N) is a polynomial of degree k, then $T(N) = \Theta(N^k)$.

Rule 3.

$\log^k N = O(N)$ for any constant k. This tells us that logarithms grow very slowly.

This information is sufficient to arrange most of the common functions by

growth rate.

L’Hôpital’s Rule:

We can always determine the relative growth rates of two functions f (N)

and g(N) by computing limN→∞ f (N)/g(N), using L’Hôpital’s rule if necessary.

The limit can have four possible values:

The limit is 0: This means that f (N) = o(g(N)).

The limit is c ≠ 0: This means that f (N) = Θ(g(N)).

The limit is ∞: This means that g(N) = o(f (N)).

The limit oscillates: there is no relation.
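As a worked illustration of the first case (the pair f(N) = log N and g(N) = N is chosen here for illustration and is not an example from the notes), one application of L’Hôpital’s rule, taking log as the natural logarithm, gives:

$$\lim_{N \to \infty} \frac{\log N}{N} = \lim_{N \to \infty} \frac{1/N}{1} = 0$$

so log N = o(N), which agrees with Rule 3 for k = 1.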

Eg.

$n^2 + O(n) = O(n^2)$

$\sqrt{n} = n^{1/2} = O(n) = \Omega(\log n)$

$\tfrac{1}{2}n^2 - 100n = \Theta(n^2)$

Eg1.

    Statement 0;                  ---- T1(n) = c (constant)

    for(i=0;i<n;i++)
    {
        St-1;
        St-2;
        St-3;
    }                             ---- T2(n) = 3n

    for(j=0;j<n*n;j++)
    {
        St-4;
        St-5;
    }                             ---- T3(n) = 2n^2

Therefore $T(n) = 2n^2 + 3n + c = O(n^2) = \Theta(n^2)$
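The count can be confirmed by instrumenting the fragment. This is a minimal sketch, assuming each statement costs one unit and the constant c is 1; the function name `count_statements` is hypothetical.

```python
def count_statements(n):
    """Count the unit-cost statements executed by the Eg1 fragment."""
    count = 1                  # Statement 0 runs once (c = 1 assumed)
    for _ in range(n):         # first loop: n iterations
        count += 3             # St-1, St-2, St-3
    for _ in range(n * n):     # second loop: n*n iterations
        count += 2             # St-4, St-5
    return count


for n in (1, 10, 100):
    # The measured count matches the closed form 2n^2 + 3n + 1.
    assert count_statements(n) == 2 * n * n + 3 * n + 1
    print(n, count_statements(n))
```

For large n the 2n^2 term dominates, which is why the linear and constant terms are dropped in the asymptotic bound.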

Eg 2:

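    for(i=0;i<n;i++)
    {
        for(j=0;j<=i;j++)
        {
            Stmt 0;
            .
            .
            .
            (three constant-time statements in all)
        }
    }

The inner body runs 1 + 2 + . . . + n = n(n+1)/2 times, so

$T(n) = 3n(n+1)/2$

$= O(n^2)$

$= \Theta(n^2)$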

Binary Search Case:

For binary search, the array should be arranged in ascending or descending

order. In each step, the algorithm compares the search key value with the key

value of the middle element of the array. If the keys match, then a matching

element has been found and its index, or position, is returned. Otherwise, if the

search key is less than the middle element's key, then the algorithm repeats its

action on the sub-array to the left of the middle element or, if the search key is

greater, on the sub-array to the right.

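Let A1, A2, ..., An be sorted. Each step costs a constant and halves the remaining range:

$T(n) = T(n/2) + C_0$

$= T(n/2^2) + C_1 + C_0$

$= T(n/2^3) + C_2 + C_1 + C_0$

. . .

This continues until there is only one element left, that is, after about $\log_2 n$ halvings,

which implies $T(n) = C \cdot \log n = \Theta(\log n)$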
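The description above can be sketched as an iterative routine that also counts its steps, so the logarithmic bound can be observed. This is one possible implementation, assuming ascending order; the name `binary_search` and the returned step counter are choices made here.

```python
def binary_search(a, key):
    """Search sorted list `a` (ascending) for `key`.

    Returns (index, steps); index is -1 if the key is absent.
    """
    lo, hi = 0, len(a) - 1
    steps = 0
    while lo <= hi:
        mid = (lo + hi) // 2       # middle element of the current range
        steps += 1
        if a[mid] == key:
            return mid, steps
        if key < a[mid]:
            hi = mid - 1           # continue in the left sub-array
        else:
            lo = mid + 1           # continue in the right sub-array
    return -1, steps


data = list(range(0, 2048, 2))     # 1024 sorted even numbers
print(binary_search(data, 10))     # key present
print(binary_search(data, 3))      # key absent
```

With 1024 elements the loop can run at most 11 times, since each iteration at least halves the range.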

Divide and conquer

A divide and conquer algorithm works by recursively breaking down a problem

into two or more sub-problems of the same (or related) type, until these become

simple enough to be solved directly. The solutions to the sub-problems are then

combined to give a solution to the original problem.

General form of T(n)

$T(n) = a\,T(n/b) + \Theta(n^k)$, where a, b, and k are all integers with a ≥ 1, b > 1, and k ≥ 0.

Master Theorem

$T(n) = O(n^{\log_b a})$ if $a > b^k$

$T(n) = O(n^k \log n)$ if $a = b^k$

$T(n) = O(n^k)$ if $a < b^k$
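The three cases translate directly into a small lookup. The helper below is a hypothetical illustration (the name `master_bound` and the string output format are choices made here); it only compares a with b^k and reports the matching bound.

```python
import math


def master_bound(a, b, k):
    """Return the bound for T(n) = a*T(n/b) + Theta(n^k) as a string."""
    if a > b**k:
        # Exponent is log base b of a, shown to three significant digits.
        return f"O(n^{math.log(a, b):.3g})"
    if a == b**k:
        return f"O(n^{k} log n)"
    return f"O(n^{k})"


print(master_bound(1, 2, 0))   # binary search: T(n) = T(n/2) + c
print(master_bound(2, 2, 1))   # merge sort: T(n) = 2T(n/2) + Theta(n)
print(master_bound(4, 2, 1))   # a > b^k
```

Here n^0 log n is simply log n, so the binary search recurrence gives O(log n) as derived earlier.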

Merge Sort

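The two halves are sorted recursively and then merged in linear time:

$T(n) = T(n/2) + T(n/2) + \Theta(n)$

$= 2T(n/2) + \Theta(n)$

$= O(n \log n)$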
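A compact sketch of merge sort shows where each term of the recurrence comes from: two recursive calls on halves, then a linear merge. This version returns a new list rather than sorting in place, which is a simplification chosen here.

```python
def merge_sort(a):
    """Return a new ascending-sorted list with the elements of `a`."""
    if len(a) <= 1:
        return list(a)
    mid = len(a) // 2
    left = merge_sort(a[:mid])      # T(n/2)
    right = merge_sort(a[mid:])     # T(n/2)

    # Linear-time merge of the two sorted halves: Theta(n).
    merged = []
    i = j = 0
    while i < len(left) and j < len(right):
        if left[i] <= right[j]:
            merged.append(left[i])
            i += 1
        else:
            merged.append(right[j])
            j += 1
    merged.extend(left[i:])
    merged.extend(right[j:])
    return merged


print(merge_sort([15, 18, 88, 80, 99, 9, 81, 11, 70, 20]))
```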

Typical Functional Growth Rate

The functions are listed in order of increasing growth rate (the further down the list, the faster the growth).

C

log N

$\log^2 N$

$N^{1/4}$

$N^{1/3}$

$N^{1/2}$

N

N log N

$N^{3/2}$

. . .

$A^N$ where A > 1

Examples

Let A be an array of positive numbers, i.e. A[0], A[1], . . . , A[n].

To find the maximum value of A[j] + A[i], where n >= j >= i >= 0:

    for (i = 0; i <= n; i++) {
        for (j = i; j <= n; j++) {
            O = A[j] + A[i];
        }}

Thus $T(n) = (n+1) + n + \dots + 2 + 1 = O(n^2)$

Example

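| A[0] | A[1] | A[2] | A[3] | A[4] | A[5] | A[6] | A[7] | A[8] | A[9] |
|------|------|------|------|------|------|------|------|------|------|
| 15   | 18   | 88   | 80   | 99   | 9    | 81   | 11   | 70   | 20   |
|      |      | A[i] |      | A[j] |      |      |      |      |      |

Therefore j = 4 and i = 2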

To find the maximum value of A[j] - A[i], where n >= j >= i >= 0:

    for (i = 0; i <= n; i++) {
        for (j = i; j <= n; j++) {
            O = A[j] - A[i];
        }}

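| A[0] | A[1] | A[2] | A[3] | A[4] | A[5] | A[6] | A[7] | A[8] | A[9] |
|------|------|------|------|------|------|------|------|------|------|
| 15   | 18   | 88   | 80   | 99   | 9    | 81   | 11   | 70   | 20   |
|      |      |      |      | A[j] | A[i] |      |      |      |      |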

Taking the largest and smallest entries would give j = 4 and i = 5 (99 - 9 = 90), which is not allowed in the above case

because n >= j >= i >= 0.

We should instead have j = 4 and i = 0 (99 - 15 = 84).

Maximum Subsequence Sum Problem

1st Approach:

For a given set of integers $A_1$ to $A_n$, find the maximum value of $\sum_{i=k}^{l} A_i$.

Example: -2, 11, -4, 13, -5, -2

    for (i = 0; i < n; i++) {
        for (j = i; j < n; j++) {
            SUM = 0;
            for (k = i; k <= j; k++) {
                SUM = SUM + A[k]; }
            if (SUM > MAX) MAX = SUM; }}

which is $O(n^3)$.

2nd approach: we don’t use k, because the sum for (i, j) is the sum for (i, j-1) plus A[j]; thus $O(n^2)$.
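A sketch of the quadratic approach is shown below. It assumes the common convention that the empty subsequence is allowed, so the answer is 0 when every entry is negative; that convention and the function name are assumptions made here.

```python
def max_subsequence_sum_quadratic(a):
    """Maximum contiguous subsequence sum in O(n^2) time."""
    best = 0                       # assumption: empty subsequence has sum 0
    for i in range(len(a)):
        running = 0                # sum of a[i..j], extended as j advances
        for j in range(i, len(a)):
            running += a[j]
            best = max(best, running)
    return best


print(max_subsequence_sum_quadratic([-2, 11, -4, 13, -5, -2]))
```

For the example sequence the best run is 11, -4, 13, whose sum is 20.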

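3rd approach: Recursive approach

Divide and Conquer method

Here the array is divided into two halves. The maximum subsequence lies entirely in the left half, entirely in the right half, or crosses the center; the three candidates are computed and compared.

$= O(n \log n)$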
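The recursive approach can be sketched as follows. The crossing case is handled by adding the best sum ending at the middle to the best sum starting just after it. The function name, the default arguments, and the empty-subsequence convention (sum 0) are assumptions made here.

```python
def max_subsequence_sum_dc(a, lo=0, hi=None):
    """Maximum contiguous subsequence sum of a[lo..hi] by divide and conquer."""
    if hi is None:
        hi = len(a) - 1
    if lo > hi:                    # empty range
        return 0
    if lo == hi:                   # one element: take it only if positive
        return max(a[lo], 0)

    mid = (lo + hi) // 2
    left_best = max_subsequence_sum_dc(a, lo, mid)
    right_best = max_subsequence_sum_dc(a, mid + 1, hi)

    # Best sum of a run that ends exactly at mid.
    total = left_border = 0
    for i in range(mid, lo - 1, -1):
        total += a[i]
        left_border = max(left_border, total)

    # Best sum of a run that starts exactly at mid + 1.
    total = right_border = 0
    for i in range(mid + 1, hi + 1):
        total += a[i]
        right_border = max(right_border, total)

    return max(left_best, right_best, left_border + right_border)


print(max_subsequence_sum_dc([-2, 11, -4, 13, -5, -2]))
```

The two border scans together take linear time, so the cost follows the same recurrence as merge sort.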

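4th approach:

Linear time maximum

Here, if the running sum of a sequence becomes negative, that sequence is thrown away, as it cannot be the start of the larger sequence. A single pass over the array is enough, which gives O(n).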
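A minimal sketch of the single-pass idea is given below; the function name and the convention that an empty subsequence has sum 0 are assumptions made here.

```python
def max_subsequence_sum_linear(a):
    """Maximum contiguous subsequence sum in one pass over `a`."""
    best = running = 0
    for x in a:
        running += x
        if running < 0:
            running = 0            # a negative prefix can never help; drop it
        elif running > best:
            best = running
    return best


print(max_subsequence_sum_linear([-2, 11, -4, 13, -5, -2]))
```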

GCD problem

Greatest common divisor.

Calculating $x^n$, where $x^n = x \cdot x \cdot x \cdots x$ with n factors of x.

For all other languages we use the following, which ends up at $O(2 \log n) = O(\log n)$.
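The routine the notes refer to is not reproduced in this copy. One common technique that meets a bound of about 2 log n multiplications is exponentiation by repeated squaring; the sketch below uses that technique as an assumption, and the name `power` and the multiplication counter are choices made here.

```python
def power(x, n):
    """Return (x**n, multiplications) for a non-negative integer n."""
    result = 1
    mults = 0
    while n > 0:
        if n % 2 == 1:             # lowest bit set: fold x into the result
            result *= x
            mults += 1
        n //= 2
        if n > 0:                  # square x for the next bit
            x *= x
            mults += 1
    return result, mults


value, mults = power(3, 13)
print(value, mults)
```

Each loop iteration halves n and performs at most two multiplications, which is where the 2 log n figure comes from.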

Binary Search

A search algorithm used to find some item in a group of n data items.

If the data is in no particular order, we need to scan the array once (as we would to calculate the maximum), so the order is O(n).

Now if the array is sorted in some order, then we just compare the value to be found with the center item of the array, and with each comparison we eliminate half of the remaining array, leaving the order to be O(log n).

Which is as shown in the below algorithm.

Now suppose we have a two-dimensional n × n array.

If we scan each row and column, the order is $n \cdot n = O(n^2)$.

But the order is O(n log n) if the two-dimensional array is sorted (a binary search in each row), which can still be reduced to O(n) by using the zig-zag pattern to scan.

In the worst case we still end up scanning 2n - 1 entries, which is O(n).
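The zig-zag scan can be sketched as below. The notes do not spell out what "sorted" means for the two-dimensional array, so this sketch assumes every row and every column is in ascending order; the function name `staircase_search` is hypothetical.

```python
def staircase_search(grid, key):
    """Find `key` in a grid whose rows and columns are sorted ascending.

    Returns (row, col), or None if the key is absent.
    """
    if not grid or not grid[0]:
        return None
    row, col = 0, len(grid[0]) - 1     # start at the top-right corner
    while row < len(grid) and col >= 0:
        value = grid[row][col]
        if value == key:
            return (row, col)
        if value > key:
            col -= 1                   # everything below in this column is larger
        else:
            row += 1                   # everything to the left in this row is smaller
    return None


grid = [
    [1, 4, 7],
    [2, 5, 8],
    [3, 6, 9],
]
print(staircase_search(grid, 6))
print(staircase_search(grid, 10))
```

Each step discards one row or one column, so an n × n grid needs at most 2n - 1 probes.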