Practitioners User Guide


Futurewise Profile: Practitioner’s Guide

Third Draft

10/17/2012


Table of Contents

About this Guide
Part 1: Futurewise Profile
1.0 Introduction
1.1 Assessment suite
1.2 Administering tests and questionnaires
1.3 Scoring tests and questionnaires
1.4 Futurewise Profile reports
1.5 Generating job suggestions
1.6 Review and feedback process
Part 2: Additional Resources
2.0 Answers to practice questions
2.1 Type summaries
2.2 Learning approaches
2.3 Futurewise job families
2.4 Sample reports
2.5 Supporting manuals
2.6 Conversion tables between test levels

About this Guide

This guide is principally about the assessments and reports that make up the psychometric aspect of the Futurewise Profile system. It also provides advice on the feedback and review process. It mentions aspects of system set-up and online administration, but these topics are covered in depth in the User Guide.

Part 1: Futurewise Profile

1.0 Introduction

Futurewise Profile is a comprehensive online careers guidance tool. It combines a range of contemporary reasoning tests and questionnaires with a powerful management and reporting system. The tests measure verbal, numerical and abstract aptitude, and memory and attention; the questionnaires measure work-based personality and career interests.

Verbal Reasoning Test: the ability to understand written information and determine what follows logically from that information.

Numerical Reasoning Test: the ability to use numerical information to solve everyday problems.

Abstract Reasoning Test: the ability to identify patterns in abstract shapes and to generate and test hypotheses.

Memory and Attention Test: the ability to remember and follow complex sets of instructions and to respond quickly and accurately.

Type Dynamics Indicator: the pattern of personality in terms of the four dimensions of Type psychology.

Career Interests Inventory: the level and pattern of interest in Holland's six career themes.

Assessment sessions can be conducted flexibly, in either a supervised or an unsupervised manner. All the assessments can be supervised, all can be unsupervised, or a mixture of both can be used. The two methods of administration use different sets of aptitude tests: supervised sessions use 'closed' tests and unsupervised sessions use 'open' tests. All the assessments are delivered online and can be completed in formal assessment sessions, as part of careers or other lessons, or during homework periods.

Within supervised sessions, four versions of the timed verbal, numerical and abstract tests are available. Within unsupervised sessions, two (broader) versions of equivalent timed tests are available. A single version of the timed Memory and Attention Test is suitable for all students.

Two versions of the untimed personality questionnaire are available. The pictorial version is appropriate for all ages. The text-based version may be more appropriate for older students, e.g. those in the Sixth Form or its equivalent. The text-based version may also be more suitable in international settings, because some of the pictures may be interpreted differently across cultures. A single version of the untimed Career Interests Inventory is suitable for all students.

Despite the range of versions available, the most typical supervised administration comprises:
- the verbal, numerical and abstract reasoning tests at Version-2 (suitable for those considering A-Level or equivalent qualifications)
- the Memory and Attention Test
- the pictorial version of the Type Dynamics Indicator
- the Career Interests Inventory

The average completion time for this combination of assessments is 80-90 minutes. The total time may be greater because of any introductory sessions run by advisors or administrators. Students also need time to read the various sets of instructions, view example items and complete practice material. In practice, however, 120 minutes is more than sufficient to complete the assessments in one session.

The Futurewise Profile system produces two reports: a student's report and an advisor's report. These are discussed in detail in this guide.

In addition, once the profiling process and guidance interview are complete, students can access the MyFuturewise website. This contains a record of their results. It also allows them to explore their career suggestions in greater depth using the careers database. Other careers-related tools and information are also provided.

1.1 Assessment suite

This section provides more detail on each of the assessments.

1.1.1 Verbal Reasoning Test

Verbal aptitude is measured using a Verbal Reasoning Test (VRT). The verbal tests consist of passages of information, each followed by a number of statements. Students have to judge whether each statement is true or false on the basis of the information in the passage. Alternatively, they may judge that the passage does not contain enough information to decide either way. In that case, the correct answer option is ‘can’t tell’.

Students come to the testing situation with different experiences and knowledge. For this reason, the instructions state that responses should be based only on the information contained in the passages, not on any prior knowledge students may have.

These instructions also reflect the situation faced by many employees who have to make decisions on the basis of information presented to them. In these circumstances decision-makers are often not experts in the particular area. They have to assume the information is correct, even if they do not know this for certain.

The passages in the verbal tests cover a broad range of subjects. As far as possible, these have been selected so that they do not reflect particular occupational areas. Passages were also written to cover both emotionally neutral areas and areas in which students may hold opinions. Again, this was considered to make the Verbal test a valid analogy of real decision-making processes. In those processes, individuals have to reason logically with both emotionally neutral and personally involving material.

Each statement has three possible answer options – true, false and can’t tell – giving students a one-in-three or 33% chance of guessing the answer correctly.
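The guessing probabilities quoted for each test follow from the number of answer options: with k options, a pure guess is correct with probability 1/k. The minimal sketch below illustrates this. It computes the per-item probability and the expected number correct from random guessing alone. The option counts come from this guide. The 30-item test length is a hypothetical figure chosen for illustration, not the length of any Futurewise test, and the function names are likewise illustrative.

```python
from fractions import Fraction


def guess_probability(num_options: int) -> Fraction:
    """Probability of answering one item correctly by pure guessing (1/k)."""
    if num_options < 1:
        raise ValueError("an item needs at least one answer option")
    return Fraction(1, num_options)


def expected_chance_score(num_items: int, num_options: int) -> Fraction:
    """Expected number correct if every item is answered at random."""
    return num_items * guess_probability(num_options)


# Option counts as described in this guide: Verbal and Abstract items have
# three options, Numerical items have five.
OPTIONS = {"Verbal": 3, "Numerical": 5, "Abstract": 3}

if __name__ == "__main__":
    HYPOTHETICAL_ITEMS = 30  # illustrative test length, not a real Futurewise figure
    for test, k in OPTIONS.items():
        p = guess_probability(k)
        chance = expected_chance_score(HYPOTHETICAL_ITEMS, k)
        print(f"{test}: {float(p):.0%} per item, "
              f"about {float(chance):.1f} of {HYPOTHETICAL_ITEMS} correct by chance")
```

Using exact fractions keeps 1/3 from being rounded until it is printed. On a hypothetical 30-item test, chance alone would yield about 10 correct answers with three options and about 6 with five.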

The fairly generous time limits suggest that guessing is unlikely to be a major factor in the verbal test. So do the 'not reached' figures, i.e. the number of people who do not attempt all the questions.

The proportion of true, false and can’t tell answers was balanced in both the trial and final versions of the verbal tests. The same answer option is never the correct answer for more than three consecutive statements.
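The two answer-key constraints described above can be checked mechanically: the balance of options, and a maximum run of identical correct answers. The sketch below is a minimal illustration under stated assumptions. It is not the test developers' actual procedure. The function names and example keys are hypothetical. The default run limit of three matches the verbal and numerical tests. The abstract test, described later, allows runs of up to four.

```python
from collections import Counter
from itertools import groupby
from typing import Sequence


def longest_run(answer_key: Sequence[str]) -> int:
    """Length of the longest run of identical consecutive correct answers."""
    return max((sum(1 for _ in group) for _, group in groupby(answer_key)), default=0)


def satisfies_run_limit(answer_key: Sequence[str], max_run: int = 3) -> bool:
    """True if no option is the correct answer for more than max_run items in a row."""
    return longest_run(answer_key) <= max_run


def option_balance(answer_key: Sequence[str]) -> Counter:
    """How often each option is the correct answer, to check the balance."""
    return Counter(answer_key)


# Hypothetical answer keys using the verbal test's three options.
ok_key = ["true", "true", "false", "can't tell", "can't tell", "can't tell", "true"]
bad_key = ["false", "false", "false", "false", "true"]

print(satisfies_run_limit(ok_key))              # True
print(satisfies_run_limit(bad_key))             # False: four 'false' in a row
print(satisfies_run_limit(bad_key, max_run=4))  # True under a limit of four
print(option_balance(ok_key))
```

`itertools.groupby` groups only adjacent equal items, which makes it a natural fit for measuring consecutive runs.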

The score for the verbal tests is based on the total number of questions answered correctly. Additional information is also gathered on the number attempted versus the number correct, and the speed and accuracy with which the test is completed.
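The distinction between the raw score and the additional information can be sketched as follows. The raw score is the number correct. The number attempted is shown separately. This guide does not say how the system combines attempts, accuracy and speed. Treating accuracy as "correct divided by attempted" is therefore an assumption made for illustration, and timing is omitted. `ScoreSummary`, `summarise` and the example data are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass
class ScoreSummary:
    correct: int      # raw score: number of items answered correctly
    attempted: int    # number of items the student actually answered
    accuracy: float   # assumed measure for illustration: correct / attempted


def summarise(responses: Sequence[Optional[str]], key: Sequence[str]) -> ScoreSummary:
    """Summarise one test attempt.

    responses[i] is None when item i was not attempted (e.g. not reached in time).
    """
    if len(responses) != len(key):
        raise ValueError("responses and key must have the same length")
    attempted = sum(1 for r in responses if r is not None)
    correct = sum(1 for r, k in zip(responses, key) if r is not None and r == k)
    accuracy = correct / attempted if attempted else 0.0
    return ScoreSummary(correct=correct, attempted=attempted, accuracy=accuracy)


# Hypothetical four-item verbal key and one student's responses.
key = ["true", "false", "can't tell", "true"]
responses = ["true", "true", "can't tell", None]  # last item not reached
print(summarise(responses, key))
```

Here two students with the same raw score could differ sharply in accuracy. One might answer every item with some errors, while another answers fewer items but gets all of them right. This is why the number attempted is worth reporting alongside the number correct.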

In practice, students may use rough paper during the test and are issued with two sheets and a pencil at the beginning. When the test is completed remotely, or in an unsupervised manner, students should also be told that they can use rough paper.

The test is designed to measure verbal reasoning ability (aptitude). This is important for activities or occupations that require the analysis and interpretation of written material, or the accurate communication of verbal ideas. Well-developed verbal aptitude is essential for many types of work:
- professional jobs, such as law, medicine and teaching
- administrative and managerial jobs
- sales, marketing and related activities
- those aspects of science and technology in which accurate communication is important, for example engineering and computing

1.1.2 Numerical Reasoning Test

Numerical aptitude is measured using a Numerical Reasoning Test (NRT). The numerical tests present students with numerical information and ask them to solve problems using that information. Some of the harder questions introduce additional information which also has to be used to solve the problem. Students have to select the correct answer from the list of options given with each question.

Numerical items require only basic mathematical knowledge to solve them. All mathematical operations used are covered in the GCSE mathematics syllabus, with problems reflecting how numerical information may be used in work-based contexts.

Areas covered include:
- basic mathematical operations (+, -, x, ÷)
- fractions, decimals, ratios and powers
- time, money, area, volume, weight and angles
- approximations and basic algebra

The tests also present information in a variety of formats, again to reflect the skills needed to extract appropriate information from a range of sources. Formats for presentation include text, tables, bar graphs, pie charts and plans.

Each question in the numerical test is followed by five possible answer options, giving students a one-in-five or 20% chance of obtaining a correct answer through guessing.

The distracters (incorrect answer options) were developed to reflect the kinds of errors typically made when performing the calculations needed for each problem. For some problems, ‘can’t tell’ is included as the last option. It assesses students’ ability to recognise when they have insufficient information to solve a problem. As with the verbal tests, the same answer option is never the correct answer for more than three consecutive questions.

The score for the numerical tests is based on the total number of questions answered correctly. Additional information is also gathered on the number attempted versus the number correct, and the speed and accuracy with which the test is completed.

In practice, students may use rough paper during the test and are issued with two sheets and a pencil at the beginning. When the test is completed remotely, or in an unsupervised manner, students should also be told that they can use rough paper. Students are not allowed to use calculators.

The test is designed to measure numerical reasoning ability (aptitude). This is important for activities or occupations that require any of the following:
- the analysis and interpretation of different forms of numerical information
- the precise communication of quantitative or numerical ideas
- accurate measurements

Well-developed numerical aptitude is essential in many commercial or related jobs:
- accounting, banking and finance
- numerate administrative work or project management jobs
- numerate professional-technical activities such as the sciences, statistics, IT and all forms of surveying

1.1.3 Abstract Reasoning Test

Abstract aptitude is measured using an Abstract Reasoning Test (ART). The abstract tests are based on a categorisation task. Students are shown two sets of shapes, labelled ‘Set A’ and ‘Set B’. All the shapes in Set A share a common feature or features, as do the shapes in Set B. Students have to identify the theme linking the shapes in each set. They then decide whether further shapes belong to Set A, Set B or neither set.

The abstract test requires a holistic, inductive approach to problem-solving and hypothesis-generation. It does not simply ask students to decide what the next shape in a linear sequence of shapes might be.

People in professional or managerial positions are often required to focus on different levels of detail and to switch between these rapidly, e.g. understanding budget details and how these relate to longer-term strategy. The Abstract test assesses these skills because it requires test takers to see patterns at varying levels of detail and abstraction.

The test can also be a particularly valuable tool for spotting potential in young people or those with less formal education, as it relies very little on educational attainment and language. In exceptional circumstances this test can provide a guide to a student's overall capability (general aptitude). If it is used to produce an 'estimate' of other aptitudes, however, these estimates must be confirmed with an assessment expert.

Students are required to identify whether each shape belongs to Set A, Set B or neither set. This gives three possible answer options, meaning test takers have a one-in-three or 33% chance of guessing answers correctly.

As with the other tests, the proportion of items for which each option is the correct answer has been balanced. The same answer option is never the correct answer for more than four consecutive shapes.

The score for the abstract tests is based on the total number of questions answered correctly. Additional information is also gathered on the number attempted versus the number correct, and the speed and accuracy with which the test is completed.

In practice, students may use rough paper during the test and are issued with two sheets and a pencil at the beginning. When the test is completed remotely, or in an unsupervised manner, students should also be told that they can use rough paper.

The test is designed to measure abstract reasoning ability (aptitude). This is important for activities or occupations that require the generation of hypotheses or, as mentioned above, the ability to switch rapidly between different levels of information. Well-developed abstract aptitude is important in several areas:
- a broad range of science, mathematics, engineering, IT and design activities
- technical jobs that require problem solving or fault identification
- managerial jobs where an appreciation of the tactical and strategic implications of a course of action is essential

1.1.4 Test versions

The verbal, numerical and abstract tests are available in a number of different 'closed' and 'open' versions.

Closed versions of tests are only for use in supervised assessment sessions.

Open versions of tests can be used in supervised sessions, but they are designed to be completed 'remotely', e.g. by a student in an unsupervised setting at school or home.

A guide to the versions of the various tests is provided below. In most circumstances closed Version-2 tests will be used. If a decision is made to use different versions, note that versions cannot be mixed for an individual student. For example, one student cannot complete a Version-2 Verbal test and a Version-3 Numerical test.

The closed Version-3 tests should only be used with high-performing students or groups of students. Closed Version-4 tests are also available, but they are unlikely to be appropriate for the students served by the Futurewise Profile system.

For those who do not have English as a first language, which may include students at International Schools, the open Version-1 tests are probably the most appropriate. They cover a greater educational range than the closed Version-1 tests (see the table below).

| Closed test version | Approximate educational level |
|---|---|
| Version 1 | Covers the top 95% of the population and is broadly representative of the general population. |
| Version 2 | Covers the top 60% of the population and is broadly representative of people who study for A/AS Levels (or equivalent), GNVQ Advanced, NVQ Level 3 and professional qualifications below degree level. |
| Version 3 | Covers the top 40% of the population and is broadly representative of people who study for a degree at a UK university or for the BTEC Higher National Diploma/Certificate, NVQ Level 4 and other professional qualifications at degree level. |
| Version 4 | Covers the top 10% of the population and is broadly representative of people who have a postgraduate qualification, NVQ Level 5 and other professional qualifications above degree level. |

Open test versions: Version 11 and Version 12. Each open version spans a broader educational range than a single closed version.

Note: The number of items and the time limits vary between tests. See Part 2 of this guide for further details.

1.1.5 Memory and Attention Test

The Memory and Attention Test (MAT) is designed to assess a student's ability to follow, and retain in memory, sets of complex instructions. It also assesses their ability to respond to these instructions rapidly and accurately. The test consists of a number of panels (computer screens) containing shapes of different colours. The respondent is asked to click on specific shapes according to a particular set of instructions.

As the student progresses through the panels, the instructions become more complex. The student therefore has to hold a relatively large amount of information in memory in order to respond correctly.

The student can refer to the current instruction set at any time during the test. However, each reference to the instructions counts against them in the assessment of the memory component of the task. The test is timed and respondents are asked to complete it as quickly as they can.

The memory score is based on the number of times the student referred to the instructions. In addition, the test is scored on the accuracy with which they clicked on the correct shapes and on the time taken to complete the test.

The test simulates one of the most important aspects of the workplace. Workers often need to memorise and retain information quickly in order to apply rules or procedures in a timely and accurate manner, and also to multitask.

The MAT provides useful information about performance as students respond to increasingly complex instructions and screens of information. There are 50 screens in total to attempt.

The version of the MAT used in the Futurewise Profile system produces scores for:
- memory (remembering sets of instructions)
- accuracy (applying instructions precisely)
- overall decision making (the ability to make decisions quickly and accurately)

Students should not use rough paper during this test. Nor should they use anything else likely to assist their memory, e.g. the voice recorder on a mobile phone.

The test is designed to measure memory and attention. These are important for activities or occupations that require rules or sets of instructions to be remembered accurately, so that decisions can be made in a precise and timely way. More generally, memory helps to structure thought and is the basis for effective learning.

Well-developed memory and attention are essential in jobs that require the rapid acquisition of job-relevant information, such as financial trading. They are also essential where multi-tasking (simultaneously applying different sets of rules) is needed for safe or effective performance. This is required in many time-critical and attention-dependent jobs. It also matters in jobs where using the right set of rules can have profound effects on others, such as air traffic control, the police and the armed forces.

1.1.6 Type Dynamics Indicator

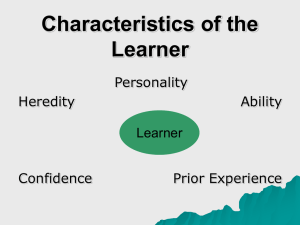

Personality is assessed using the Type Dynamics Indicator (TDI). This is an untimed self-report questionnaire that is available in pictorial or word versions. The pictorial version is composed of 56 items (pictures and words), whereas the word version has 64 items (phrases and word pairs).

The TDI is an up-to-date measure of Type psychology based on the theories of Carl Jung. Globally, the Type model is the most widely researched and popular theory of personality used in individual development. It is estimated that over 4 million people complete Type-based personality questionnaires every year.

The TDI identifies a person's most natural style. This covers how they view the world and make sense of it, and the ways in which they prefer to interact with other people. It does this by exploring the interaction of four dynamic preferences:

What attracts and energises us: Extraversion - Introversion
How we see the world: Sensing - Intuition
How we make decisions: Thinking - Feeling
The way we manage the world around us: Judging - Perceiving
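The arithmetic behind "sixteen basic types" is that each of the four dimensions contributes one of two letters, giving 2 × 2 × 2 × 2 = 16 combinations. The sketch below generates the codes. It illustrates only how the four preferences combine into a type code. It is an assumption-free combinatorial example, not a description of how the TDI scores questionnaire responses, and the function names are hypothetical. iNtuition is coded as N, following the convention noted later in this section.

```python
from itertools import product
from typing import List

# The four dimensions, each a pair of opposite preferences keyed by letter.
# iNtuition uses "N" because "I" is already used for Introversion.
DIMENSIONS = [
    {"E": "Extraversion", "I": "Introversion"},
    {"S": "Sensing", "N": "Intuition"},
    {"T": "Thinking", "F": "Feeling"},
    {"J": "Judging", "P": "Perceiving"},
]


def all_type_codes() -> List[str]:
    """Every combination of one preference per dimension: 2 ** 4 = 16 codes."""
    # Iterating over a dict yields its keys, so product() combines the letters.
    return ["".join(letters) for letters in product(*DIMENSIONS)]


def describe(code: str) -> List[str]:
    """Expand a four-letter code such as 'ENFP' into its preference names.

    Raises KeyError if a letter does not belong to its dimension.
    """
    code = code.upper()
    if len(code) != len(DIMENSIONS):
        raise ValueError("a type code has exactly four letters")
    return [dim[letter] for dim, letter in zip(DIMENSIONS, code)]


print(len(all_type_codes()))  # 16
print(describe("ENFP"))       # ['Extraversion', 'Intuition', 'Feeling', 'Perceiving']
```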

These preferences are then summarised as one of sixteen basic types. Full descriptions of these basic types are provided in a separate publication, 'Understanding Personality Type', which is part of the Team Focus Essential Guide Series.

However, it is important to realise that people's reported Type can and does change over time. People grow, become more insightful and learn to express their personality in different ways in different circumstances. The descriptions in this guide, and those in the reports, therefore summarise what the person believes about themselves. This is the most important place to start a discussion.

A flavour of the four key preferences is given below. For a more extensive description of the implications of a person's preferences, please refer to 'Understanding Personality Type'.

Extraversion and Introversion (E-I) – a different focus for energy and reality

People differ in terms of the kind of environment they enjoy. Some people thrive when there is a lot going on, with plenty of chance for discussion, interaction and activity to keep them busy. Others enjoy a much quieter environment where there is a chance to internalise, reflect and create their own internal reality.

This sometimes leads to an apparent anomaly: introverts may function better in a busy environment than extraverts do. This is usually because introverts are more effective at shutting that world out when they need to concentrate. Extraverts, by contrast, may let themselves get too involved in, or distracted by, what is going on. Everyone finds their own way of balancing these fundamental differences, which are reflected in their basic character.

Sensing and iNtuition[1] (S-N) – different ways of seeing the world

People exposed to the same information and experience can recount quite different versions of events. Jung’s model of Type suggests that this is not just a question of responding differently. We actually see differently. We seem to notice different elements, and remember and extract different meanings from them. It is as though we all wear a set of spectacles which filter and highlight differently.

To use a food analogy, imagine a pile of ingredients. One person may see a pile of eggs, flour and sugar; another may see a potential cake. Some people are very tuned in to practical details and specific facts (the individual ingredients of eggs, flour and sugar). This is the Sensing preference. Others seem to make broad and abstract links, seeing patterns and possibilities without a great grasp of the details (the potential cake). This is the iNtuitive preference.

Thinking and Feeling (T-F) – different ways of making decisions

People argue, persuade, form their opinions and make judgements in quite different ways. Some people are impressed by rational argument. They like to have logical reasons and do not feel comfortable with any decision until they have a clear rationale. They need to make connections and build a logical framework which justifies the decisions they make. This is the Thinking preference.

Others are less impressed with these kinds of reasons. They tend to match their decisions to their underlying values and seem to have a more direct way of judging and valuing. This does not mean that they are illogical. Logic is simply less important in their decision-making process. This is the Feeling preference.

Judging and Perceiving (J-P) – different ways of managing the world around us

People display fundamental differences when it comes to managing or responding to the world around them. People with a Judging preference like to know what is coming. They anticipate, plan and organise the world, and may treat surprises as a nuisance to be managed.

Others, with a Perceiving preference, have a more responsive approach. They remain open to new ideas and information, which they happily incorporate into their plans and schedules. In fact they often welcome or await new information, and this sometimes means that they delay decisions until the last minute. Viewing surprises as a welcome change enables them to show flexibility and spontaneity.

In Futurewise Profile, personality information is presented not only in terms of descriptions of the basic Types but also in relation to work-based competencies:
- 'working with people'
- 'persuading people'
- 'planning style'
- 'making decisions'
- 'getting results'
- 'being creative'

[1] Note: the abbreviation for iNtuition is N, the second letter, because I is used for Introversion.

These competencies are widely recognised as important by employers and form part of many competency profiling systems.

In addition, elements of Type are used to identify preferred learning approaches and the parts of the 'learning cycle' to which someone is attracted. There is more detail on what this means in the publication 'Understanding Learning Styles'.

1.1.7 Career Interests Inventory

The Career Interests Inventory (CII) is an untimed self-report questionnaire based on John Holland’s widely used model of vocational preferences. It explores interests, competencies and work styles to provide a tool for supporting career choice.

Holland's model, which splits the world of work into six broad themes, is the most widely accepted theory of work interests. It underpins all the major career interest inventories and career exploration products.

The inventory is divided into four sections, which cover the six RIASEC career themes (see below for details):
- Section One consists of 36 pictorial normative items.
- Section Two consists of 15 pictorial ipsative items.
- Section Three relates to work areas for which a student may express a particularly strong preference or dislike.
- Section Four relates to a small number of natural abilities or talents (e.g. musical talent) which are required for certain career areas.

The normative and ipsative items ask how interested the student is in an activity or career-related environment, or which of two options they would prefer. The skills items ask how proficient the student is at a very specific skill, e.g. music, art, performance or sport.

The additional items are designed to discover whether particular job features are very important to a student, or whether they would prefer not to have a job with those features. The features include using particular skills and working in a particular context or environment. For example:
- a job requiring the use of numerical skills for much of the time
- directly caring for others in a health or medical setting
- being part of the armed forces

The use of normative (compared to other people) and ipsative (compared to self) items provides alternative benchmarks for interpretation. These are discussed later in this guide.
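The difference between the two benchmarks can be illustrated with a small sketch. A normative reading places one scale score against a norm group; here it is expressed as a percentile rank, with tied scores counted as half below, a common convention. An ipsative reading ranks a student's own themes against each other. This is a general illustration of the two kinds of comparison only. This guide does not describe how the CII actually scores or norms its items, and strictly "ipsative" refers to the forced-choice item format. All scores, norm data and function names below are hypothetical.

```python
from bisect import bisect_left, bisect_right
from typing import Dict, List, Sequence


def percentile_rank(score: float, norm_scores: Sequence[float]) -> float:
    """Normative view: percentage of a norm group scoring below `score`,
    counting tied scores as half below."""
    if not norm_scores:
        raise ValueError("norm group is empty")
    ordered = sorted(norm_scores)
    below = bisect_left(ordered, score)
    ties = bisect_right(ordered, score) - below
    return 100.0 * (below + 0.5 * ties) / len(ordered)


def ipsative_order(profile: Dict[str, float]) -> List[str]:
    """Ipsative view: the student's own themes ranked strongest to weakest."""
    return sorted(profile, key=profile.get, reverse=True)


# Hypothetical RIASEC scale scores for one student.
profile = {"Realistic": 12, "Investigative": 18, "Artistic": 9,
           "Social": 15, "Enterprising": 7, "Conventional": 10}
# Hypothetical norm-group scores on the Social scale.
social_norms = [8, 10, 12, 15, 15, 18, 20, 22]

print(ipsative_order(profile))
print(percentile_rank(profile["Social"], social_norms))  # 50.0
```

In this made-up example, Social is the student's second-strongest theme when compared with their own other themes. Compared with other people, however, it is only average (50th percentile). This is why the two benchmarks can tell different stories.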


The interest themes (scales) covered by the inventory are described below.

Realistic

Jobs which fall into this area are practical occupations that usually require physical or manual activity. They include skilled and technical trades, and some of the service occupations. They generally have a 'hands-on' element and may involve working outdoors. Realistic work activities may involve using tools, equipment and machinery; IT; building and repairing things; and/or work related to nature, agriculture and animals.

Those with realistic interests are often motivated by the outdoors and by physical, adventurous and sometimes risky activities. They are interested in action rather than thought. They generally prefer practical problems to those that are ambiguous, theoretical or abstract.

Investigative

Jobs which fall into this area are concerned with finding out about things. They centre on science, medicine, social concerns, theories, ideas and data, with the aim of understanding, predicting or controlling these things. Investigative work activities have a strong 'analytical' element. They include researching, exploring, observing, evaluating, analysing, learning and solving abstract problems. This may be in a laboratory, medical or academic establishment, or in the computer industry.

Those with investigative interests are generally motivated by the desire to probe, question and enquire. They tend to need space and calm to reflect and think, and often dislike selling and repetitive activities.

Artistic

Jobs which fall into this area have a strong 'expressive' element and are concerned with creating or appreciating art, drama, music or writing. Artistic work activities include composing, writing, creating, designing, cooking, performing and entertaining. This theme is not necessarily about having a personal interest in painting or drawing. It also includes occupations where people appreciate some kind of creative expression.

Those with artistic interests enjoy being 'spectators' or 'observers', and their artistic side is often reflected in leisure and recreational activities. They also tend to be content in academic environments, as artistic interests are often associated with verbal or linguistic abilities.

Social

Jobs which fall into this area involve working with people in a helpful or facilitating way. They are concerned with human welfare and community services. Work activities include caring, teaching and educating, treating, helping, listening, counselling and discussing.

Those with social interests are motivated by an impetus to help or care. They tend to solve problems by discussing values and feelings and by interacting directly with others. In addition, they are often particularly team-minded.

NOTE: 'Teaching' occurs across most of the themes, but each one tends to attract people with an interest in that theme. So 'realistic' teaching incorporates hands-on or technical activities, whereas ‘social’ teaching is more concerned with the interpersonal and pastoral elements.

Enterprising

Jobs which fall into this area are concerned with business and leadership. They seek to attain personal or organisational goals, or economic gain. Work activities include selling, marketing, managing, influencing, persuading, directing and manipulating others. Being self-employed (running your own business) falls into this category, as does work in politics.

Those with enterprising interests are frequently motivated by taking

financial or interpersonal risks, and often like to participate in competitive

activities. Whilst they can be systematic in their approach they are

generally unlike those with investigative interests as they tend to dislike

scientific activities, or those which require intellectual application.

Conventional

Jobs which fall into this area are concerned with organisation, data and

finance. They involve working with information, numbers or machines, to

meet organisational demands and standards. Work activities include

setting up procedures, maintaining orderly routines, organising,

operating, accounting and processing.

Those with conventional interests often enjoy mathematics and activities

that involve the management of resources. They tend to work well in

large organisations and are often as happy dealing with people as they

are with data or ideas.


1.2 Administering tests and questionnaires

1.2.1 Setting up the system

The process of setting up the Futurewise Profiling programme within a

school is described in the User Guide. This guide explains in detail how

to use the Futurewise Profiling system and covers such areas as

programme setup, registering students in the system, adjusting the

general settings for a school account, making adjustments to the

assessment settings for individual students, preparing for and running

assessments, viewing results, generating reports and so on.

It is essential that those users of the system who have a major

responsibility for running the Futurewise Profiling System in a

school are familiar with those parts of the guide that are relevant

to the tasks which they undertake or for which they are

responsible. Users should also consult the online help which is

available from all screens of the system and covers much of the same

content as the User Guide.

Although much of the basic programme setup will normally be

undertaken by Futurewise staff, there are certain essential procedures

which would usually be undertaken by school staff. These include:

Checking the default assessment settings to be used for each

student group

Setting assessment deadlines for each group

Inviting students to register in the system

Making adjustments to the assessment settings for individual

students (e.g. entering ability assessments for disabled students

who are unable to take certain assessments, adjusting aptitude test

versions for particularly bright or less able students, etc.)

Planning classroom sessions for supervised assessments

Finally, enabling the assessments once all set-up procedures have been finalised.

It is particularly important to ensure that:

the correct default settings for each assessment group have been made before students are invited to register; and

any settings required for individual students have been made before the assessments are enabled.


School-based staff who are new to the Futurewise Profiling system will

be able to call upon the assistance of Futurewise staff for help and

advice during the programme setup process.

1.2.2 Supervised versus unsupervised test administration

The Futurewise Profile assessments can be taken either under

supervised or unsupervised conditions and, in the case of the verbal

reasoning, numerical reasoning and abstract reasoning tests, different

versions of the tests must be used depending on whether the tests are

to be supervised or not.

In the case of supervised assessments, once students have logged in to their Futurewise Profiling Home Page, they will also need to enter an additional password whenever they begin an assessment. This password ensures that students cannot take the assessment at home or under other unsupervised conditions. The passwords required for supervised assessments will always be provided to students only at the time of the assessment itself. Details of how to obtain the assessment passwords can be found in the User Guide.

Wherever possible, schools should use the supervised versions

of the three reasoning tests. Although this requires additional effort

in setting up and supervising classroom assessment sessions, the

supervised assessments will normally provide a more valid and reliable

means of assessing a student's aptitudes.

With unsupervised

assessments, there is of course no way of being certain whether the

student has completed the assessments entirely by themselves or with

the help of others. Furthermore, with a supervised assessment, in

addition to being able to ensure that the setting and circumstances for

the assessment are optimal, it is also possible for the administrator to

introduce the assessment to the students before they begin and to be

on hand should there be any difficulties or problems encountered by

students. Notwithstanding this, when assessments are used for

development purposes such as careers guidance, authenticity is not

usually a major issue.

If supervised assessments cannot be arranged, then it is good practice

to ensure that students have received an introduction to the

assessments in a class setting, during which the nature of the

assessments can be discussed and any concerns raised by students

can be addressed.


1.2.3 Preparing for a supervised assessment session

The assessment room needs to be suitably heated and ventilated (with

blinds if glaring sunlight is likely to be a problem) for the number of

people taking the assessments and for the length of the session. All

the computer screens need to be clear and easy to read. The room

should be free from noise and interruption, as any disturbances can

affect performance.

Also check that the computers on which the assessments are to be taken meet the minimum requirements set out in the User Guide.

Leave enough space between students' computer screens so that students cannot see each other's progress or answers, and so that the administrator can walk around to monitor progress and spot difficulties, especially during the practice examples, when misunderstandings can be dealt with.

If the assessments are to be taken as part of an assessment day, remember that performance tends to deteriorate towards the end of a long day. If a number of sessions are being planned for different groups of students, those who take the assessments towards the end of the day may be disadvantaged. If the day includes other mentally demanding activities, remember to organise appropriate breaks.

A notice to the effect of ‘Testing in progress – Do not disturb’ should be

displayed on the door of the assessment room. Ensure that chairs and

desks are correctly positioned and that rough paper and pencils are

available for the verbal, numerical and abstract tests. Also remember

that calculators are not permitted for any of the Futurewise Profile

assessments.

1.2.4 Procedure for a supervised assessment session

Please note that the instructions for test administration which follow

below assume that students have already registered within the system

and have been provided with their logins and instructions on how to

access their home page.

Prior to the first assessment session, whether supervised or unsupervised, students should already have been provided with a general introduction to the Futurewise Profiling system. This introduction should cover at least the following areas:

the objectives of the Futurewise Profiling system and, briefly, how it

all works

why they are being asked to take the assessments


what assessments they have to take

how the assessments will be used in generating their Futurewise

Profile

what feedback they will receive on their results

who will have access to their results

the date by which any unsupervised assessments should be

completed

what will happen when the assessments have been completed

the details of who they should speak to in case of queries or

difficulties

The assessment session itself should then proceed as follows:

Invite students into the assessment room and direct them where to sit.

When all students are seated, you should give an informal introduction

to the session. You should prepare the points you wish to cover in

advance, but should nevertheless deliver the introduction informally in

your own words.

The aim of the introduction is to explain clearly to the students what to

expect and to give them some background information about the

assessments and why they are being used. This will help to reduce

anxiety levels and create a calm environment. The administrator

should aim for a relaxed, personable, efficient tone, beginning by

thanking the students for attending.

During your introduction, you should:

ask students not to touch the computers until they are told to do so.

advise students which assessment they will be taking in the current

session and explain how that assessment fits into the programme

of Futurewise assessments which they are taking.

explain to students that all the instructions they need will be provided on-screen and, if the test is timed, that the timing will only begin once they have read the instructions and completed the practice items. Explain that each test starts with practice questions before the test proper begins.

ask the students if there are any questions and deal with these

accordingly. Explain to students that you will be unable to give any

specific advice during the assessment on how they should answer

specific questions, but that they should let you know if they have

any particular difficulties (for example, difficulties with their

computer).

explain to students the policy you wish to adopt as to what they

should do when they have finished the assessment. In some


cases, you may wish students to remain seated once they have

completed an assessment so as not to disturb other students. In

other cases, for example when taking non-aptitude tests such as

the personality and career interests questionnaires, you may permit

them to leave as soon as they have submitted the assessment. If

the students are due to take more than one assessment, you may

wish to tell them to continue with the second assessment as soon

as they have finished the first.

Following the introduction, you should then provide the students with the passwords they will need for each assessment they are due to take (see the User Guide for details of how to obtain the passwords). The passwords should be displayed to the students on the board, overhead projector or similar. You should not distribute sheets containing the passwords, as this could allow a student to pass the password on to other students who are due to take the assessment at a later session.

Depending on what is appropriate for the assessments to be taken, instruct students as to whether they should begin the test immediately once they have logged in or wait for your instruction before clicking on the first assessment on the Home Page dashboard. When all students are ready, tell them to log in to their Home Page in Futurewise Profiling (see detailed instructions in the User Guide).

If you have told students they can begin

immediately, they will now do so. Otherwise, wait until all students

are at their Home Page and then give the instruction to click on the

assessment to be taken in their Home Page dashboard.

Once the assessment session has begun, your principal task will

be to ensure that students are working quietly, are not disturbing

other students and are not conferring with each other. Should

difficulties occur, advice is provided in the User Guide as to how

these can be dealt with. Note that for all assessments except the

Memory and Attention Test, the students’ responses are saved as

they go through the test. If a student's computer fails for technical reasons, the student can immediately log in on another computer, restart the assessment and be taken straight to the question they were previously working on, with their previous responses preserved. In the case of the Memory and Attention Test, if students experience a technical problem, they will need to log in on a different computer and start the test again from the beginning.


1.2.5 Unsupervised assessments

Unsupervised assessment is typically used for those assessments such

as the personality and career interest assessments where it is not so

important to ensure that students are not conferring with each other.

Indeed, with these assessments, there is little to be gained by a student

seeking help from another person. Nevertheless, if a school has the

resources to supervise the personality and career assessments, then

this would help to ensure that students complete these assessments

with care and without distraction.

Unsupervised assessments may take place at home or elsewhere, at the students' convenience, though they could also be part of a classroom session. The latter might be the case, for example, where you would like the students to take the assessments in programmed class time but do not have the resources to arrange for a staff member to supervise the session. In either case, passwords will not be required for accessing the assessments. Further details of how the programme setup and the test versions selected determine the requirement for passwords can be found in the User Guide.

In the case of the four ability tests (Numerical Reasoning, Verbal

Reasoning, Abstract Reasoning and the Memory and Attention Test),

unsupervised administration is less desirable than supervised

administration for the reasons given above.

However, where

unsupervised administration is necessary for resource or logistical

reasons, then it is important to encourage students to undertake these

assessments without seeking help from others. They should be helped

to understand that the results of the assessments will not be 'used

against them' in any way and will be used only to provide them with

accurate information about which jobs their skills and abilities are suited

to. It is therefore in their interests to undertake the assessments

without help from other people.


1.3 Scoring tests and questionnaires

1.3.1 Automatic scoring and manual input of scores

Once a student has completed the online tests and questionnaires the

results are generated automatically. The system then uses the results

to produce the Student's and Advisor's Reports. Thus under most

circumstances there is no requirement for the Advisor or system

administrator to input scores, or deal with any aspect of the scoring

process.


However there may be occasions when a student cannot take the

online versions of the assessments and paper-and-pencil materials are

used. These are available for all the assessments except the Memory

and Attention Test.

If paper-and-pencil materials are used the responses made by a

student can be entered manually into the system - see the User Guide

for further details.

1.3.2 Scaling scores and reporting results

The system automatically converts all test scores so that they are reported at the Version-2 level, i.e. the Student's Report will explain that the results of the aptitude tests are relative to students considering A-level, Scottish Higher, IB or similar qualifications.

It is important to note that the Student's Report always uses a Version-2 benchmark, irrespective of the tests that have been completed, i.e. even if the student has completed Version-1 or Version-3 tests. Note also that the TDI and CII results are always reported by reference to the UK general population.

The Advisor's Report contains information that allows results to be compared with other benchmark groups (norms): essentially, it shows what the results would look like if a student were compared to a higher (or lower) standard. See the Conversion Tables between test levels in Part 2 (Section 2.6).

In the reports, the test and questionnaire results are presented on 10-point 'Standard Ten' (STEN) scales. On a STEN scale a result of 1-3 is described as 'low', 4-7 as 'medium' and 8-10 as 'high'.

If necessary, finer distinctions can be made with regard to aptitude test results by using the percentile results that are presented in the Advisor's Report. The percentile scale is a 'better than' or 'as good as' scale: for example, a student scoring at the 65th percentile has achieved a higher score than 65% of the population, or can be described as being in the top 35%.
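The 'better than' reading of a percentile can be illustrated with a short Python sketch. It assumes that a percentile is the percentage of the norm group scoring strictly below the student; the guide does not say how the system itself computes percentiles or treats tied scores, and both the norm data and the `percentile_rank` function are hypothetical.

```python
from bisect import bisect_left


def percentile_rank(score: float, norm_scores: list[float]) -> float:
    """Return the percentage of the norm group scoring strictly below `score`."""
    ordered = sorted(norm_scores)
    below = bisect_left(ordered, score)  # number of norm scores lower than `score`
    return 100 * below / len(ordered)


# Hypothetical norm group of 100 raw scores: 0, 1, 2, ..., 99.
norm_group = list(range(100))

rank = percentile_rank(65, norm_group)
print(f"{rank:.0f}th percentile, i.e. in the top {100 - rank:.0f}%")
```

With this invented norm group a raw score of 65 sits at the 65th percentile, matching the 'top 35%' description. Counting equal scores as well as lower ones (for example with `bisect_right`) would give the 'as good as' variant instead.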

Note: Statistically a STEN scale has a mean of 5.5 and a standard

deviation of 2. STENs of 1-3 or 8-10 would each be achieved by ~16%

of the norm group; and a STEN of 4-7 by ~68% of the norm group.
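The figures in the note above can be checked with a short Python sketch. It assumes, as is conventional for STEN scales but not stated in this guide, that scores are normally distributed in the norm group and that each STEN is the continuous value 2z + 5.5 rounded to the nearest whole number and clipped to 1-10. The function names are illustrative only and are not part of the Futurewise system.

```python
import math
from statistics import NormalDist

STANDARD_NORMAL = NormalDist(mu=0.0, sigma=1.0)


def z_to_sten(z: float) -> int:
    """Round the continuous value 2z + 5.5 to the nearest STEN, clipped to 1-10.

    Each STEN band is half a standard deviation wide, so rounding 2z + 5.5
    to the nearest whole number is the same as taking floor(2z + 6).
    """
    return max(1, min(10, math.floor(2 * z + 6)))


def percentile_to_sten(percentile: float) -> int:
    """Convert a percentile (strictly between 0 and 100) to a STEN."""
    return z_to_sten(STANDARD_NORMAL.inv_cdf(percentile / 100))


def sten_band(sten: int) -> str:
    """Label a STEN with the bands used in this guide."""
    if sten <= 3:
        return "low"
    if sten <= 7:
        return "medium"
    return "high"


# Share of a normally distributed norm group falling in each band:
# STEN 1-3 corresponds to z < -1 and STEN 8-10 to z >= 1.
low_share = STANDARD_NORMAL.cdf(-1.0)
high_share = 1 - STANDARD_NORMAL.cdf(1.0)
medium_share = 1 - low_share - high_share

print(f"low {low_share:.1%}, medium {medium_share:.1%}, high {high_share:.1%}")
print(percentile_to_sten(65), sten_band(percentile_to_sten(65)))
```

Under these assumptions the bands hold roughly 15.9%, 68.3% and 15.9% of the norm group, and a student at the 65th percentile would receive a STEN of 6 ('medium').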


1.4 Futurewise Profile reports

1.4.1 Introduction to reports

At the end of the assessment process the Futurewise Profile system produces PDF reports. These are generated in three stages:

the Student's Pre-interview Report containing the results and

interpretation of the assessments completed by the student. This

is sent when all the assessments have been completed by the

student, and is used by the student to prepare for the guidance

interview.

the Advisor's Report containing the same information plus a

range of additional results - see the section on the Advisor's

Report for further details. This is used by the advisor to prepare

for the guidance interview and as a source of information during

the interview.

the Student's Final Report, which is produced after the guidance interview (if appropriate) and contains the advisor's notes. This report is sent to the student, the student's parents/guardians and the school once the advisor's notes have been added.

1.4.2 Student's Report

This part of the Guide describes the sections in the Student's Report

and the underlying logic that drives the content.

Note 1: Throughout the narrative report 'tests' are referred to as

'assessments'. However in this explanatory section they are referred to

as tests (or questionnaires).

Note 2: A complete sample report is provided at the end of this Guide for reference purposes. However, as reports may be updated from time to time, make sure you are looking at the latest version.

Introduction

After a title page which includes the student's name, name of school,

report date and IF reference number, there is a short piece of

introductory text and a list of contents.


In addition if a student has self-reported learning difficulties (dyslexia,

dyspraxia), visual impairments (colour blindness, poor sight), health

issues (epilepsy), physical disabilities, or possible language problems

(English as a second language) a note will be included on the

introduction page to the effect that these might have influenced their

performance on the tests.

The report is then composed of seven sections (A-G).

Section-A: The Big Picture

This section contains charts of the results for the six tests and

questionnaires completed by the student. It's designed to give students

a quick visual overview of their results.

Personality style

The first chart illustrates the results from the Type Dynamics Indicator

(TDI). For each of the four dimensions there are two bars. For example,

the initial dimension is concerned with Extraversion and Introversion,

and so the first bar provides the result for Extraversion and the second

for Introversion.

In most Type indicators the result would only indicate a person's

preference in terms of Extraversion or Introversion. However this can

obscure the fact that, although one is always greater, everyone has a

mixture of both Extraverted and Introverted preferences, and using two

bars reinforces this important point.

The results are presented on a standard ten point (STEN) scale and

the measurement error in each result is indicated using a short

horizontal line at the end of the bar. Measurement error gives an

indication of the accuracy of the results. For example, if the result for a particular personality scale is a STEN of 6, and the line starts at 5 and ends at 7, the student's true result is likely to lie somewhere between 5 and 7.

In all cases the personality results are produced using a comparison

with the general UK population.
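The guide does not say how the measurement error shown on the charts is calculated. One common approach in classical test theory is the standard error of measurement, SEM = SD × √(1 − r), where r is the scale's reliability; on a STEN scale SD = 2. The Python sketch below assumes that approach, a band of plus or minus one SEM, and a made-up reliability of 0.75 chosen only because it reproduces the 5-to-7 example; `error_band` is a hypothetical name.

```python
import math

STEN_SD = 2.0  # standard deviation of the STEN scale


def error_band(sten: float, reliability: float, sems: float = 1.0) -> tuple[float, float]:
    """Return a STEN plus or minus `sems` standard errors, clipped to 1-10."""
    if not 0.0 <= reliability <= 1.0:
        raise ValueError("reliability must be between 0 and 1")
    sem = STEN_SD * math.sqrt(1.0 - reliability)  # classical-test-theory SEM
    return max(1.0, sten - sems * sem), min(10.0, sten + sems * sem)


# Hypothetical reliability, chosen so the band matches the STEN 6 example.
lower, upper = error_band(6, reliability=0.75)
print(f"{lower:.1f} to {upper:.1f}")  # 5.0 to 7.0
```

The less reliable a scale, the wider its band, which is why the lines on the charts should be read as a range rather than treating the bar's end point as exact.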

General aptitudes

The second chart shows the results for the four aptitude tests. However

in the case of the Memory and Attention Test, there are three separate

results (Memory; Accuracy; Decision Making) and so the chart contains

a total of six bars.

As with the TDI, each of the results is presented on a STEN scale, with

in each case a short horizontal line at the end of each bar showing the

measurement error.


It is also important to realise that all the aptitude results are

benchmarked (normed) against students considering A-Level, Scottish

Higher, IB or similar qualifications.

There will also be an indication on the chart if one or more of the test results are:

missing (not attempted by the student), indicated by the text

'Missing result'.

estimated (by the school or advisor, for example because of a

physical problem), indicated by the text 'Estimate'.

based on extended timings (for example because of dyslexia),

indicated by the letters 'ET'.

Career interests

The third chart shows the results from the Career Interests Inventory in

terms of the six Holland career themes.

In the same way as the other two charts the results are presented on a

STEN scale, with a short horizontal line at the end of each bar showing

the measurement error.

In all cases the results are produced using a comparison with the

general population.

Section-B: Overview

This section contains narrative relating to the student's personality,

aptitude and interest results. It is written, as are the other sections, at a

level that is appropriate for the average 14-15 year old reader.

In each part there is an introduction, for example describing the

aptitude tests, and additional text that relates directly to the student's

results.

The Personality Style part contains one of 16 general personality descriptions, depending on the student's reported psychological Type.

The General Aptitudes part has two main components. The first

describes the results of the verbal, numerical and abstract tests in

terms of whether the result for each is 'low' (STEN 1-3), 'average'

(STEN 4-7) or 'high' (STEN 8-10). It also contains suggestions on what

the results might mean, in terms of the aptitudes assessed.

As there are three tests and three levels of reporting the text is based

on 27 unique permutations of results.


The second component deals with the Memory and Attention Test in

the same way. Again as there are three results and three levels of

reporting the text is based on a further 27 unique permutations of

results.
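The count of 27 texts follows from three results, each falling into one of three bands (3 × 3 × 3 = 27). The Python sketch below shows how a set of STENs could be reduced to a lookup key for one pre-written text; the same idea applies to the three Memory and Attention Test results. The key format and function names are hypothetical, since the guide states only that one text exists per permutation.

```python
from itertools import product

BANDS = ("low", "average", "high")


def band(sten: int) -> str:
    """Band a STEN as in this section: 1-3 low, 4-7 average, 8-10 high."""
    if not 1 <= sten <= 10:
        raise ValueError("a STEN must be between 1 and 10")
    if sten <= 3:
        return "low"
    return "average" if sten <= 7 else "high"


def narrative_key(verbal: int, numerical: int, abstract: int) -> tuple[str, str, str]:
    """Hypothetical key for looking up one of the 27 pre-written texts."""
    return band(verbal), band(numerical), band(abstract)


all_keys = list(product(BANDS, repeat=3))  # every combination of three bands
print(len(all_keys))            # 27
print(narrative_key(6, 9, 2))   # ('average', 'high', 'low')
```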

The Career Interests section describes the two career areas (themes)

that appear to be of the most interest, and the area that is of the least

interest.

In those cases where the results for two or more areas are tied on the basis of the normative results, the career areas are separated, and the top two identified, using the ipsative results and/or raw scores.
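The tie-breaking step can be sketched as a multi-key sort. The guide does not give the exact order of precedence between ipsative and raw scores, so this Python sketch assumes the normative STEN is compared first, then the ipsative score, then the raw score; all scores shown are invented, and `ThemeResult` and `rank_themes` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class ThemeResult:
    theme: str
    sten: int        # normative result (compared with the norm group)
    ipsative: float  # hypothetical within-person (ipsative) score
    raw: int         # hypothetical raw score


def rank_themes(results: list[ThemeResult]) -> list[ThemeResult]:
    """Order themes by STEN, breaking ties on ipsative score, then raw score."""
    return sorted(results, key=lambda r: (r.sten, r.ipsative, r.raw), reverse=True)


results = [
    ThemeResult("Realistic", 5, 0.10, 30),
    ThemeResult("Investigative", 8, 0.40, 41),
    ThemeResult("Artistic", 8, 0.55, 39),
    ThemeResult("Social", 6, 0.20, 35),
    ThemeResult("Enterprising", 3, -0.30, 22),
    ThemeResult("Conventional", 4, -0.10, 27),
]

ranked = rank_themes(results)
top_two = [r.theme for r in ranked[:2]]
least = ranked[-1].theme
print(top_two, least)  # ['Artistic', 'Investigative'] Enterprising
```

Here Artistic and Investigative are tied on STEN 8, and the higher ipsative score places Artistic first.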

Note: The career interest narrative also comments that the career areas may not appear to go together, and that this may be because the student has a broad range of interests. It also notes that interests and personality can appear mismatched, as it is possible to be interested in something that does not immediately seem to complement a person's personality, e.g. to have interests concerned with organising data and information but a personality Type that suggests a preference for working in a broad-brush rather than a detail-conscious way. These two things are not necessarily incompatible, but they would probably need exploring during the feedback process.

Section-C: You and work

This section contains more detail on the student's personality and is

concerned with how it relates to the world of work. It is specifically

written to reflect the competency areas that are recognised as

important by employers.

Although it reports on six competencies (Working with people; Persuading people; Planning style; Making decisions; Getting results; Being creative), it is based on what researchers agree to be the eight main competencies, the so-called 'Great Eight'.2

However, in this report competencies are concerned with the way in which a student might go about 'working with people', for example, and not with how effective or successful they might be. Thus competencies based on personality indicate style of approach and are not about 'capability'.

2 e.g. Bartram, D. (2005). The Great Eight Competencies: A Criterion-Centric Approach to Validation. Journal of Applied Psychology, 90(6), 1185-1203.


At the beginning of the section there is one of 16 Type workplace definitions, based on the student's reported Type. The Type is identified by analysing the results from the TDI, with the position on each of the four dimensions (E-I; S-N; T-F; J-P) being used to define the overall Type, and thus the appropriate description. This is followed by definitions of the six competencies described above, each with three bullet points that relate directly to the relationship between the reported Type and the competency.
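The step from TDI results to a reported Type can be sketched in Python as below. It assumes that each letter is simply the pole with the higher STEN of its pair, consistent with the two-bar presentation described for Section A; how the system resolves an exact tie is not stated in this guide, so the tie rule in the code is a placeholder assumption, and the student data and `reported_type` name are hypothetical.

```python
PAIRS = (("E", "I"), ("S", "N"), ("T", "F"), ("J", "P"))


def reported_type(stens: dict[str, int]) -> str:
    """Build a four-letter Type from the eight TDI pole results.

    `stens` maps each pole (E, I, S, N, T, F, J, P) to its STEN; each letter is
    the pole with the higher result in its pair.
    """
    letters = []
    for first, second in PAIRS:
        # Hypothetical tie rule: an exact tie goes to the first pole listed.
        letters.append(first if stens[first] >= stens[second] else second)
    return "".join(letters)


# Invented TDI results for one student.
student = {"E": 4, "I": 7, "S": 6, "N": 5, "T": 3, "F": 8, "J": 7, "P": 4}
print(reported_type(student))  # ISFJ
```

Four two-way choices give 2 × 2 × 2 × 2 = 16 possible Types, which is why there are 16 workplace definitions.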

As there are six competencies and 16 Types there are 96 possible sets

of three bullet points - a pool of 288 bullet points in total. The

descriptors were written for each of the competencies by experts in

Psychological Type, and by reference to Type research, and reflect

how someone with a particular Type would be predicted to act or

respond.

Finally, at the end of the section there is a part that brings together the personality and interest results. This is based on research on the relationship between Type and Holland career interest themes, in particular on which Types are most likely to be aligned (in agreement) or unaligned (not in agreement) with which interest themes.3

The text is based on the top two scoring interest themes and is in one

of three formats. If the top two scoring interest themes are aligned, two

sets of three bullet points appear in the text.

When one is aligned and one is unaligned, one set of three bullet points

appears. This is then followed by two bullet points which combine the

interest theme with how this might be expressed in terms of Type. For

example:

"If your personality style is combined with your top two interests, they agree

that you:

prefer to decide for yourself what to do.

get pleasure from becoming an expert in something.

enjoy questioning how the world works.

And you:

have an interest in helping others, but with practical things rather than

feelings.

are interested in giving advice to other people, but like to have a plan

rather than letting things take their course."

3 e.g. Merriam, J.N., Thompson, R.C., Donnay, D.A.C., Morris, M.L. & Schaubhut, N.A. (2006). Correlating the Newly Revised Strong Interest Inventory with the MBTI®. CPP Inc.


And finally, if neither of the top two scoring interest themes is aligned, there are two sets of two bullet points illustrating how the areas might be expressed in terms of Type.

Care has been taken to ensure that, as far as possible, all the points are positive in nature. Unaligned comments, those which include a 'but' element, have only two bullet points so that, for most students, the numerical balance of bullet points will favour the more positive 'aligned' statements.
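The three text formats can be summarised as a small lookup. In this Python sketch `bullet_groups` is a hypothetical name, and listing the aligned set first is an assumption based on the example quoted in this section.

```python
def bullet_groups(first_aligned: bool, second_aligned: bool) -> list[int]:
    """Return the bullet-point group sizes shown for the top two interest themes.

    An aligned theme contributes a set of three bullet points and an unaligned
    theme a set of two 'but' bullet points; aligned sets are listed first.
    """
    sizes = [3 if aligned else 2 for aligned in (first_aligned, second_aligned)]
    return sorted(sizes, reverse=True)


for flags in [(True, True), (True, False), (False, False)]:
    groups = bullet_groups(*flags)
    print(flags, groups, "total:", sum(groups))
```

In the mixed case, three of the five bullet points are 'aligned' statements, which is the numerical balance the design aims for.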

As there are 16 Types and six interest themes, with either two or three bullet points for each combination, there are a total of 242 comments on Type combined with interest, from which the actual text shown in the report is selected.

Note: At the end of the section there may be an additional comment about the Type results. This appears if one or more of the pairs (E-I; S-N; T-F; J-P) produced results that were very similar to each other, e.g. only a single STEN between the results for E and I. When this occurs the result is called a corridor score. The practical implication is that the first letter of the Type might be an E or an I, leading to a different overall Type.
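The guide does not specify the exact corridor threshold or how the Advisor's Report chooses the 'next best' fit. This Python sketch assumes that a corridor is a gap of no more than one STEN between the two poles of a pair, and that the next best fit is found by reversing the letter on the closest pair. `CORRIDOR_WIDTH`, `type_with_next_best` and the student data are all hypothetical.

```python
from typing import Optional

PAIRS = (("E", "I"), ("S", "N"), ("T", "F"), ("J", "P"))
CORRIDOR_WIDTH = 1  # hypothetical: a gap of at most one STEN counts as a corridor


def type_with_next_best(stens: dict[str, int]) -> tuple[str, list[str], Optional[str]]:
    """Return the reported Type, any corridor pairs and a next-best-fit Type.

    `stens` maps each pole (E, I, S, N, T, F, J, P) to its STEN.
    """
    letters, gaps = [], []
    for first, second in PAIRS:
        # Hypothetical tie rule: an exact tie goes to the first pole listed.
        letters.append(first if stens[first] >= stens[second] else second)
        gaps.append(abs(stens[first] - stens[second]))
    reported = "".join(letters)

    corridors = [f"{a}-{b}" for (a, b), gap in zip(PAIRS, gaps) if gap <= CORRIDOR_WIDTH]
    if not corridors:
        return reported, corridors, None

    # Assumption: the next best fit reverses the letter on the closest pair
    # (the first such pair if several are equally close).
    index = gaps.index(min(gaps))
    first, second = PAIRS[index]
    alternative = letters.copy()
    alternative[index] = second if letters[index] == first else first
    return reported, corridors, "".join(alternative)


# Invented TDI results with a one-STEN gap between E and I.
student = {"E": 6, "I": 5, "S": 3, "N": 8, "T": 7, "F": 4, "J": 5, "P": 7}
print(type_with_next_best(student))  # ('ENTP', ['E-I'], 'INTP')
```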

When this happens, the report suggests that the Type results should be considered with extra care, and that it would be useful to talk them through with an advisor, who will be able to discuss the other Types that would fit the personality profile. This is a level of complexity that may be beyond most guidance interviews; however, the Advisor's Report does indicate the 'next best' fit for a student with one or more corridor scores. It is suggested that each advisor has a copy of 'Understanding Personality Type', which allows them to understand the possible differences between the 'best fit' and 'next best fit' Types. This booklet is published by and available from Team Focus Limited.

Section-D: You and learning

This section is concerned with learning approaches (learning style). After a general preamble it identifies the learning approach that the student is most likely to adopt or prefer. This is one of four approaches based on an analysis of the first two scales in the TDI (E-I; S-N). See Part 2 (Section 2.2) for a brief description of each of the four approaches (Activating, Clarifying, Innovating and Exploring) and of how these relate back to the 16 Type descriptions.


The description of the preferred approach is reinforced with a diagram

that highlights the student's approach alongside the other three

possible approaches.

The diagram is followed by two bullet points that identify actions from the less preferred learning approaches that the student might like to consider.

Note: The way in which someone prefers to learn can influence the

way in which they apply their interests. So this part of the report

suggests that the student looks back at their interest results and thinks

about those that engage them the most, as this may be because they

are a better fit with their learning approach. For example, someone may

have an Activating learning approach, and this practical and hands-on

style might match interests in Realistic job activities.

Section-E: You and careers

This section provides a list of the 15 jobs that are the best matches with

the student's personality, aptitudes and interests. For each job, the 'job

family' to which it belongs is also indicated.

Three lists, of 10 related jobs each, are also provided. These

respectively show the best matches (a) if career interests are given

greater weight than aptitudes or personality, (b) if personality is given

greater weight than aptitudes or interests, and (c) if aptitudes are given

greater weight than interests or personality. In each case the job is

linked to its job family.
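Section 1.5 of this guide describes how the system actually generates its job suggestions. The Python sketch below only illustrates the general idea of giving one component greater weight when ranking jobs; the jobs, families, match scores and weights (the favoured component counting three times as much) are all invented, and `JobFit` and `top_jobs` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class JobFit:
    job: str
    family: str
    interests: float    # hypothetical 0-1 match with career interests
    personality: float  # hypothetical 0-1 match with personality
    aptitudes: float    # hypothetical 0-1 match with aptitudes


def top_jobs(jobs: list[JobFit], weights: dict[str, float], n: int) -> list[JobFit]:
    """Return the n jobs with the highest weighted sum of the three matches."""

    def weighted(job: JobFit) -> float:
        return (weights["interests"] * job.interests
                + weights["personality"] * job.personality
                + weights["aptitudes"] * job.aptitudes)

    return sorted(jobs, key=weighted, reverse=True)[:n]


# Invented jobs and match scores, for illustration only.
jobs = [
    JobFit("Job A", "Family 1", interests=0.9, personality=0.4, aptitudes=0.5),
    JobFit("Job B", "Family 2", interests=0.5, personality=0.9, aptitudes=0.4),
    JobFit("Job C", "Family 1", interests=0.4, personality=0.5, aptitudes=0.9),
    JobFit("Job D", "Family 3", interests=0.7, personality=0.7, aptitudes=0.7),
]

# Hypothetical weightings: the favoured component counts three times as much.
WEIGHTINGS = {
    "balanced": {"interests": 1, "personality": 1, "aptitudes": 1},
    "interests first": {"interests": 3, "personality": 1, "aptitudes": 1},
    "personality first": {"interests": 1, "personality": 3, "aptitudes": 1},
    "aptitudes first": {"interests": 1, "personality": 1, "aptitudes": 3},
}

for label, weights in WEIGHTINGS.items():
    print(label, [(j.job, j.family) for j in top_jobs(jobs, weights, 2)])
```

With these invented numbers the all-rounder, Job D, tops the balanced list, while each weighted list promotes the job that is strongest on the favoured component.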

The jobs are selected from a database that contains 774 jobs. However

under most circumstances the lists described above are generated

from a sub-set of 437 jobs that have been selected as having the most

relevance for students using the Futurewise Profile system.

Note: See Section 1.5 of this guide for a description of how the job

suggestions are generated.

Section-F: You and subject choice

This section contains three tables. The first shows the academic

subjects that are required (or useful) for each of the 15 primary job

suggestions.

The second lists the Russell Group 'facilitating subjects' that the student is currently studying, those that are being considered for higher-level study, and the student's interest in each. Facilitating subjects are highly regarded by Russell Group universities and keep a wider range of HE options open.


The third table lists other academic subjects, which the student is

currently studying or thinking of studying, that might be essential, or

useful, for the 15 job suggestions.

The second and third tables use information on the subjects being studied or considered, as gathered from the student during registration (see the User Guide for details). They therefore draw on the school subjects that are available at a particular school or, on occasion, on a default list of subjects if a school does not enter a list of available subjects.

Section-G: Advisor's comments

The final section of the report incorporates the Advisor's comments, as appropriate. These are based on the feedback or review session(s) with the student and are entered into the Futurewise Profile system via the Advisor's interface (see the User Guide).

The end page is a standard checklist of further sources of information.

1.4.3 Advisor's Report

The Advisor's Report contains the same narrative and notes that are presented in the Student's Report, written in the same voice.

The Advisor's Report contains the same charts (with error bars) and

tables as the Student's Report but also incorporates additional

information. This information is designed to give the Advisor further

options with regard to the personality, aptitude and interest results.

Specifically:

Personality style. This comprises a chart and details of each of the scales, with an additional table indicating the clarity of preference. For students who have one or more corridor scores, it also indicates the next best fit with the data. This means that the Advisor can use the 'alternative' Type description presented in the Advisor's Report if this seems appropriate, for example if the student feels that their 'reported' Type does not sound accurate.

A quick reference to all 16 Types is provided at the end of the Advisor's Report, with the reported Type and the next best fit Type highlighted. Further information on each Type is provided in Section 2.1 of this guide.

General aptitudes. This comprises a chart of the VRT, NRT, ART

and MAT results. The table beneath provides considerable

additional information on each test in terms of:


Number of questions in the test

Number of questions attempted

Number of questions correct

Comments on speed & accuracy

Result presented as a STEN

Result presented as a percentile

Comparison (IRT) score - allows comparison with other norms.

As with the Student's Report, notes flag missing tests, estimated results

or extended times.

Career interests. This comprises a chart of the student's career

interests. An additional table is provided which shows the

normative STEN scores and the ipsative scores.

For guidance on how to incorporate this additional information in a

review or feedback session see Section 1.6 of this guide.
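The Advisor's Report gives each aptitude result both as a STEN and as a percentile. This guide does not say how those values are derived, and in practice they come from the norm tables. The conventional relationship between the two scales can still be sketched if scores are assumed to be normally distributed. On the STEN scale the mean is 5.5 and the standard deviation is 2, and each band is half a standard deviation wide. The function names `z_to_sten` and `z_to_percentile` are hypothetical, and values read from real norm tables may differ.

```python
import math

def z_to_sten(z: float) -> int:
    """Convert a z-score to a STEN (mean 5.5, SD 2, bands 0.5 SD wide).

    STEN k covers z in [(k - 6) / 2, (k - 5) / 2); the ends are clamped to 1 and 10.
    """
    return max(1, min(10, math.floor(2 * z + 6)))

def z_to_percentile(z: float) -> float:
    """Percentage of a normally distributed population scoring below z."""
    return 50 * (1 + math.erf(z / math.sqrt(2)))

for z in (-2.5, -0.25, 0.0, 1.0, 2.5):
    print(z, z_to_sten(z), round(z_to_percentile(z), 1))
```

This also shows why a one-point STEN difference near the middle of the scale corresponds to a much larger percentile gap than the same difference at the extremes.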

1.5 Generating job suggestions

As the list of job suggestions is often seen by students and their parents as the ultimate 'test' of a careers guidance system, this section explains in detail how the list of recommended jobs is generated, for those who wish to understand precisely how it works. What follows is necessarily fairly technical, and users of the Futurewise Profile system are not required to understand these principles in detail.

1.5.1 Introduction

The occupational mapping process works by calculating an overall

match score for each of the jobs in the jobs database. Jobs are then

ranked in terms of their match scores, and those with strong match

scores form the basis of suggestions to the student.
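The overall flow of calculating a match score for every job and then ranking the jobs can be sketched as follows. The three component scores are simply summed here as a placeholder. The scaling and weighting described in Section 1.5.5 are left out. The job names and scores are invented, and `overall_match` and `rank_jobs` are hypothetical names.

```python
from typing import Dict, List, Tuple

def overall_match(components: Dict[str, float]) -> float:
    """Combine the aptitude, interests and personality match scores.

    Placeholder: a plain sum, ignoring the scaling and weighting steps.
    """
    return sum(components.values())

def rank_jobs(job_components: Dict[str, Dict[str, float]]) -> List[Tuple[str, float]]:
    """Return (job, overall match score) pairs, strongest match first."""
    scored = [(job, overall_match(c)) for job, c in job_components.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)

# Illustrative component match scores for three jobs.
jobs = {
    "Accountant": {"aptitude": 8.0, "interests": 4.0, "personality": 5.0},
    "Teacher": {"aptitude": 7.0, "interests": 9.0, "personality": 8.0},
    "Engineer": {"aptitude": 9.0, "interests": 6.0, "personality": 6.0},
}
print(rank_jobs(jobs))
```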

The overall match score is made up of three separate components:

aptitudes, interests and personality. Each of these component match

scores expresses the match between the student's assessment profile

and the requirement scores (also expressed in terms of aptitudes,

interests and personality) of the job in question. The way in which the

component match scores are calculated is different for each

component.

The requirement scores for each job are based on a thorough analysis of the UK-based CASCAiD database using, where appropriate, additional data from the world's most comprehensive jobs database, the US Department of Labor's O*NET system.


Every job has also been reviewed for use in the Futurewise Profile system by occupational psychologists and other career experts. In particular, each job has been analysed, checked and rated in terms of the levels of performance required across the four aptitudes, Holland's six occupational themes and the eight Personality Type roles.

The main jobs database contains 774 jobs. However, the job suggestions are based on a subset of 437 of these jobs. These are the jobs that were considered suitable for inclusion in the matching system, and they exclude unskilled and many semi-skilled jobs.

1.5.2 Aptitude matching

For aptitude, the match score is built up from match scores for each of the four contributing aptitude areas: verbal reasoning, numerical reasoning, abstract reasoning and the mean of the three Memory and Attention Test scores. The match score for each area is a measure of how close the student's score on the relevant test is to the 'requirement score' for that area for the job in question.
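A minimal sketch of assembling the four aptitude areas and scoring each one against a job's requirement scores. The closeness rule assumed here is 10 minus the absolute STEN difference, which is one reading of the worked example in the next paragraph. The guide does not state how the four area scores are then combined, so the sketch stops at the per-area scores. The function names and data are hypothetical.

```python
from statistics import mean
from typing import Dict, Sequence

def aptitude_areas(vrt: float, nrt: float, art: float,
                   mat: Sequence[float]) -> Dict[str, float]:
    """Return the four aptitude area STENs used for matching.

    The Memory and Attention area is the mean of the three MAT scores.
    """
    if len(mat) != 3:
        raise ValueError("expected three Memory and Attention Test scores")
    return {"verbal": vrt, "numerical": nrt,
            "abstract": art, "memory_attention": mean(mat)}

def area_match(student: float, requirement: float) -> float:
    # Assumed linear closeness rule: 10 for an exact match,
    # one point less for each STEN of difference.
    return 10 - abs(student - requirement)

def aptitude_area_matches(areas: Dict[str, float],
                          requirements: Dict[str, float]) -> Dict[str, float]:
    """Match score for each of the four areas, for one job."""
    return {name: area_match(areas[name], requirements[name]) for name in areas}

student = aptitude_areas(vrt=6, nrt=7, art=5, mat=[4, 6, 5])
job = {"verbal": 6, "numerical": 7, "abstract": 7, "memory_attention": 5}
print(aptitude_area_matches(student, job))
```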

The requirement score is an indication of the degree of aptitude,

expressed on a 1-10 scale, which is considered ideal for the job. Thus,

if a student obtains a STEN score of 7 on numerical reasoning and the

requirement score for numerical reasoning for the job is 7, then this is a

perfect match and the student is allocated a match score of 10 for this

component. If the student obtains a STEN score of 5, and the

requirement score is 7, then the student would be allocated a match

score of 8 for this component, and so on.

The highest match score of 10 is obtained when the student's score is

identical to the requirement score, whatever the requirement score

happens to be.
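The two worked examples can be read as a linear rule. This is an interpretation, because the guide only says "and so on". It also assumes that a score above the requirement is treated in the same way as a score below it. On that reading, the match score for aptitude area $a$ is

```latex
m_a = 10 - \lvert s_a - r_a \rvert
```

Here $s_a$ is the student's STEN on area $a$ and $r_a$ is the job's requirement score. For example, $s_a = 7,\ r_a = 7$ gives $m_a = 10$, and $s_a = 5,\ r_a = 7$ gives $m_a = 10 - 2 = 8$.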

In addition, a correction, which is essentially a points 'penalty', is applied if the student's STEN score is 3 points below the requirement score. This takes account of situations in which a student's level of performance is clearly below that required for a job.
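A sketch of how the penalty might sit on top of the closeness score. Two things here are assumptions. First, "3 points below" is read as "3 or more points below". Second, the size of the penalty is not given in this guide, so `PENALTY_POINTS` is a made-up value. The closeness rule is the same assumed linear rule as above, and the function name is hypothetical.

```python
PENALTY_THRESHOLD = 3   # from the guide; read here as "3 or more points below"
PENALTY_POINTS = 4.0    # hypothetical: the guide does not give the size

def aptitude_area_match(student: float, requirement: float) -> float:
    """Closeness score with an extra penalty for clearly insufficient aptitude."""
    score = 10 - abs(student - requirement)   # assumed linear closeness rule
    if requirement - student >= PENALTY_THRESHOLD:
        score -= PENALTY_POINTS               # only scores below the requirement are penalised
    return score

print(aptitude_area_match(5, 7))  # two points short, no penalty
print(aptitude_area_match(4, 7))  # three points short, penalty applied
print(aptitude_area_match(9, 6))  # above the requirement, no penalty
```

Because the penalty is one-sided, a student who exceeds a job's requirement loses only the ordinary closeness points, while a student who falls well short is pushed further down the ranking.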

1.5.3 Interest matching

For interest, the match score is found in a similar way, by computing the individual match scores for each of the six interest scales (Realistic, Investigative, Artistic, Social, Enterprising, Conventional). The individual match scores are calculated in just the same way as for the aptitudes, using requirement scores that indicate the ideal amount of interest in the area in question for the given job.


A correction is also applied if a student's interest score on any of the

six scales is more than 6 points different from the requirement score.
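The interest matching and its correction can be sketched in the same way. The threshold of "more than 6 points different" comes from the guide. The size of the correction (`CORRECTION_POINTS`) is a hypothetical value, and so is the closeness rule, which is the linear rule assumed for aptitudes. Unlike the aptitude penalty, this correction applies whichever direction the difference lies in. The function names and data are hypothetical.

```python
from typing import Dict

INTEREST_SCALES = ("Realistic", "Investigative", "Artistic",
                   "Social", "Enterprising", "Conventional")
CORRECTION_THRESHOLD = 6   # from the guide: "more than 6 points different"
CORRECTION_POINTS = 3.0    # hypothetical: the guide does not give the size

def interest_scale_match(student: float, requirement: float) -> float:
    """Closeness score for one interest scale, with the large-gap correction."""
    gap = abs(student - requirement)
    score = 10 - gap                    # assumed linear closeness rule
    if gap > CORRECTION_THRESHOLD:
        score -= CORRECTION_POINTS
    return score

def interest_matches(student: Dict[str, float],
                     job: Dict[str, float]) -> Dict[str, float]:
    """Match score for each of the six Holland scales, for one job."""
    return {s: interest_scale_match(student[s], job[s]) for s in INTEREST_SCALES}

student = {"Realistic": 2, "Investigative": 8, "Artistic": 9,
           "Social": 5, "Enterprising": 4, "Conventional": 3}
job = {"Realistic": 9, "Investigative": 8, "Artistic": 6,
       "Social": 5, "Enterprising": 4, "Conventional": 3}
print(interest_matches(student, job))
```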

In addition, the ultimate match with the six interest scales is influenced by any preferences the student has expressed in the third part of the Career Interests Inventory. If a student has a preference for using a specific skill (e.g. their musical ability) and/or has a marked preference for or against some specific feature of work (e.g. dealing with numbers all day), this is used to promote or demote relevant jobs in the final list from which the recommended jobs are selected.
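A sketch of promoting and demoting jobs according to the preferences expressed in the third part of the inventory. It assumes each job is tagged with the skills or work features it involves. The fixed adjustment sizes `PROMOTE` and `DEMOTE` are hypothetical, as are the tags, the job names and the function name. The guide does not describe how large the adjustments are.

```python
from typing import Dict, Set

PROMOTE = 1.0   # hypothetical boost per liked feature
DEMOTE = 1.0    # hypothetical reduction per disliked feature

def adjust_for_preferences(scores: Dict[str, float],
                           job_features: Dict[str, Set[str]],
                           liked: Set[str],
                           disliked: Set[str]) -> Dict[str, float]:
    """Raise or lower each job's score according to the student's stated preferences."""
    adjusted = {}
    for job, score in scores.items():
        features = job_features.get(job, set())
        score += PROMOTE * len(features & liked)
        score -= DEMOTE * len(features & disliked)
        adjusted[job] = score
    return adjusted

scores = {"Music therapist": 7.0, "Payroll clerk": 8.0, "Sound engineer": 7.5}
features = {"Music therapist": {"musical ability"},
            "Payroll clerk": {"numbers all day"},
            "Sound engineer": {"musical ability"}}
adjusted = adjust_for_preferences(scores, features,
                                  liked={"musical ability"},
                                  disliked={"numbers all day"})
print(sorted(adjusted, key=adjusted.get, reverse=True))
```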

1.5.4 Personality matching

For personality matching, a different method is used. Whereas the aptitude and interest areas both use a range of scores for the matching process, personality uses the top two 'themes' (out of a possible eight) suggested by the student's Personality Type. This simplification of Type preferences helps to match core elements of the person with core elements of the job. It also fits with Type theory, which suggests that people have a dominant and an auxiliary theme that work together to manage the world effectively.

To achieve the matching, every job in the CASCAiD database has been allocated a score between 1 and 5 for each of the eight possible themes (e.g. theme one scored 5, themes two and three scored 4, etc.). This allows each job to be 'profiled' and a matching score to be calculated according to the person's top two themes. This score is then weighted to indicate the fit between the student's Type and the requirements of the job.
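A minimal sketch of scoring a job's eight-theme profile against a student's top two themes. It assumes the dominant and auxiliary themes have already been derived from the student's Type. The weights of 2 for the dominant theme and 1 for the auxiliary theme are invented. The guide says only that the score is weighted. The theme labels `theme_1` to `theme_8` are placeholders, and the function name is hypothetical.

```python
from typing import Dict

DOMINANT_WEIGHT = 2    # hypothetical weighting
AUXILIARY_WEIGHT = 1   # hypothetical weighting

def personality_match(job_profile: Dict[str, int],
                      dominant: str, auxiliary: str) -> int:
    """Score a job's 1-5 theme profile against the student's top two themes."""
    if len(job_profile) != 8:
        raise ValueError("expected scores for all eight themes")
    if any(not 1 <= v <= 5 for v in job_profile.values()):
        raise ValueError("theme scores must lie between 1 and 5")
    return (DOMINANT_WEIGHT * job_profile[dominant]
            + AUXILIARY_WEIGHT * job_profile[auxiliary])

# Illustrative profile: theme 1 scored 5, themes 2 and 3 scored 4, and so on.
profile = {"theme_1": 5, "theme_2": 4, "theme_3": 4, "theme_4": 3,
           "theme_5": 2, "theme_6": 2, "theme_7": 1, "theme_8": 1}
print(personality_match(profile, "theme_1", "theme_3"))
print(personality_match(profile, "theme_7", "theme_2"))
```

Weighting the dominant theme more heavily follows the Type-theory idea that the dominant theme leads. The actual ratio used by the system is not stated.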

1.5.5 Scaling and adjustments

The component match scores are multiplied by constants to equalise their ranges across the different contributory areas. In addition, further constants are applied to equalise, as far as possible, the lower and upper ranges of the match scores from the different areas; these constants were determined by observing the natural distributions of the match scores.
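One way to put each area on the same footing is a linear (min-max) rescaling between observed lower and upper bounds. This is a swapped-in technique chosen for illustration, and the guide's actual constants are not published here. The bounds in `OBSERVED_RANGES` are invented, as is the 0 to 10 target range. Values outside the observed bounds are clamped.

```python
from typing import Dict, Tuple

# Hypothetical observed (lower, upper) bounds of each area's raw match scores.
OBSERVED_RANGES: Dict[str, Tuple[float, float]] = {
    "aptitude": (12.0, 40.0),
    "interests": (20.0, 60.0),
    "personality": (3.0, 15.0),
}

def equalise(area: str, raw: float, target_max: float = 10.0) -> float:
    """Linearly map a raw area match score onto 0..target_max."""
    low, high = OBSERVED_RANGES[area]
    scaled = (raw - low) / (high - low) * target_max
    return min(max(scaled, 0.0), target_max)

print(equalise("aptitude", 26.0))     # halfway through the observed range
print(equalise("personality", 15.0))  # top of the observed range
print(equalise("interests", 10.0))    # below the observed range, clamped
```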

The adjustments described have the effect of equalising the scales for the match scores from the different areas, so that each begins on an equal footing when contributing to the final match score. After these adjustments, a second set of weightings is applied so that the three areas can contribute differentially to the matching process. In the first instance, the order of the weights (highest first) is: aptitude, interests, personality.
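A sketch of the second weighting step applied to equalised area scores. The stated order is read as highest first. The integer weights 3, 2 and 1 are hypothetical values that respect this order, and the actual weights are not given. The job names and scores are illustrative.

```python
from typing import Dict, List, Tuple

# Hypothetical weights: aptitude > interests > personality.
DEFAULT_WEIGHTS: Dict[str, int] = {"aptitude": 3, "interests": 2, "personality": 1}

def final_match(equalised: Dict[str, float],
                weights: Dict[str, int] = DEFAULT_WEIGHTS) -> float:
    """Weighted sum of the equalised area match scores for one job."""
    return sum(weights[area] * equalised[area] for area in weights)

def rank(jobs: Dict[str, Dict[str, float]]) -> List[Tuple[str, float]]:
    """All jobs in descending order of final match score."""
    scored = ((job, final_match(s)) for job, s in jobs.items())
    return sorted(scored, key=lambda pair: pair[1], reverse=True)

jobs = {
    "Laboratory technician": {"aptitude": 8.0, "interests": 5.0, "personality": 6.0},
    "Social worker": {"aptitude": 5.0, "interests": 8.0, "personality": 8.0},
}
print(rank(jobs))
```

In this toy case the second job has the better interest and personality fit. It still ranks lower because aptitude carries the most weight.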

The end result of the matching process is that all 437 jobs in the

database are put in rank order for each student.

1.5.6 Selecting the main 15 careers suggestions

The criteria for selecting the main 15 career suggestions are based not

only on the overall ranking of jobs but also on the job family to which

each job belongs.