Dr Kitchener's presentation - British Psychological Society


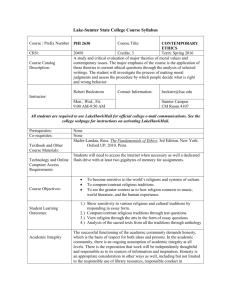

Learning Ethics: Emotion, Cognition and Socialization

Karen Strohm Kitchener, Ph.D., Professor Emeritus, University of Denver

I started writing in the field of ethics in psychology about 30 years ago. Since then the field has exploded, and much has been written on the question of whether moral action depends on reasoning. It is moral action about which we must be concerned. It doesn’t matter what moral platitudes students utter when they take their exams. What matters is how they act with clients, research participants, students, their colleagues and even their neighbors, because I would hope that the moral beliefs they have developed professionally influence how they act beyond the boundaries of their position (whether or not the BPS Board of Trustees or the Health Professions Council is looking over their shoulder).

Unfortunately, how people act is not always dependent on how they reason. I am going to argue that how they act is also a result of neurological events that lead to emotional responses and of the environment in which they find themselves. The evidence is strong that neurological processes, many of which are preconscious, elucidate a great deal about the reliability and predictability of our moral actions (Gazzaniga, 2010, Gazzaniga, 2011, Suhler & Churchland, 2011). Suhler and Churchland (2011), for example, argue that a unified theory of morality must take the findings of neurobiology into account and provide literature to support their claim. Additionally, data from cognitive psychology (Kahneman & Tversky, 1973) describe ways in which decision makers deviate from the most rational choices because they rely on heuristics, or simplified decision-making strategies, leading to predictable errors. Both point to the role that automatic emotional responses play in ethical behavior. 
Furthermore, social psychologists have illustrated ways in which social environments have the power to make some ordinary people exhibit morally questionable behavior (Milgram, 1974, Zimbardo, 2007). Herbert Simon coined the term bounded rationality to describe a decision-making model in which human rationality is limited, bounded by the situation and by human computational powers (Simon, 1955, 1990). I think we are talking about bounded rationality when we are talking about ethical reasoning.

Let me give you some examples. A psychologist, Dr. No, had been seeing a woman about self-esteem issues and depression for over a year. I will call her Mary for clarity. Mary had come a long way. Her depression had lifted. She was becoming more active in the community and had started a new job where her skills were appreciated and she felt valued. Dr. No planned on starting the termination process. He was aware that he was attracted to her, but he also knew that it was important to maintain his professional boundaries. Mary had an early evening appointment. The psychologist always made sure that his secretary was going to be in the office, knowing that her presence would help him keep his boundaries in check. One evening when he had an appointment with Mary, his secretary informed him she had to leave early. Mary was there, so he went ahead and started working with her, beginning to tell her that therapy was coming to an end. As he did so, she burst into tears and told him she was in love with him and couldn’t bear the thought of not seeing him again. He gently told her he was flattered by her feelings and explained how it was not only counterproductive for her, but also unethical for him to pursue the relationship outside of the office. She seemed to understand and left the office to go home, but her car wouldn’t start. It was raining hard, so she returned to the office to wait for a tow truck. She was soaking wet, so Dr. No grabbed a towel and started to help her dry off. 
As he did so she turned and threw herself into his arms. They kissed and ended up having sexual relations on the couch in his office. I know this seems overly dramatic; however, it is based on a similar case.

Dr. No knew what was ethical. Initially he even acted correctly, but his own biological drives and the social environment intervened to affect his actions. Dr. No’s sexual attraction to his client was neither unusual nor unethical per se. In fact, in a study of U.S. psychologists, Pope and his colleagues (1986) found that the vast majority of therapists (87%) had some level of sexual attraction to at least one, if not more, of their clients. How can we help students and trained professionals to, first, understand what is moral and, then, develop social networks and self-monitoring techniques that allow them to act on it?

My second example comes from Gigerenzer (2010). He reports that 69% of Danish and 81% of Swedish citizens indicated that they would be willing to donate their organs when they died. Compare that to the number from each country who actually did donate their organs. In fact, only 4% of the Danes but 86% of the Swedes are donors (Fig. 1). Consider data from the United Kingdom, the United States, and Germany and contrast them to countries like Belgium, France and Austria, where almost 100% of the citizens are potential organ donors (Fig. 2). What are we to conclude? Should we conclude that those in the second group are more moral than those in the first? Gigerenzer points out that those in the second group are given the option of “opting out” as organ donors, while for the most part those in the first group, the United States, Germany and the United Kingdom, have to agree to “opt in” to be an organ donor. He concludes that moral behavior = f(mind, environment). (PP) I would want to clarify that to argue that moral behavior = f(reason, emotions, environment). 
In this paper, I am using the term “socialization” broadly defined rather than environment, since as educators we can help socialize students to be aware of environmental influences, but we cannot control all of the environments they will enter. All three must be addressed if students are to leave their educational environments and behave morally. The same is true if psychologists who are currently researchers, professors and practitioners are to be re-educated to be moral psychologists.

Let me step back a minute and return to the second component in my equation regarding moral behavior – emotion. Although a comprehensive review is not possible here, I want to begin with the data on the roles that our neurological wiring and emotion have in psychologists’ ethical actions. My familiarity with the literature on the neurological basis of morality is limited, but I have to acknowledge the role that our neurological processes play in moral judgments and actions. The use of functional magnetic resonance imaging (fMRI) has been a boon for the study of the neural substrates of morality. For example, there are a few interesting studies in psychology suggesting that emotional responses to personal moral problems precede moral judgments and are associated with activity in the areas of the brain often related to emotion and social cognition (Greene et al., 2001; Greene et al., 2004; Greene & Haidt, 2002; Moll et al., 2002a, 2002b). Studies by Greene and his colleagues and Moll and his colleagues suggest that automatic, emotion-based moral assessments play an important role in guiding fast and effective responses to everyday moral problems. 
While these studies do not do justice to the growing body of research in this area, they do give a sense of the work that is being done and support other work in empirical moral psychology that suggests that many moral decisions result from affective processes that are non-conscious and automatic (Cushman et al., 2006, Greene & Haidt, 2002, Hauser et al., 2007).

Similarly, fMRI studies in neuro-economics have implications for the study of morality (Suhler & Churchland, 2011). Although they are actually studies of game theory, as Suhler and Churchland have pointed out, the games allow participants to engage in moral behavior like generosity, reciprocating or breaching trust, punishing others for breaking rules and so on. Again, the studies show the importance of parts of the brain that are related to emotional responses and support the role of emotion in moral responses. They also show the importance of the brain’s functions that reinforce and motivate people to behave in cooperative or generous ways toward others rather than being selfish and taking all of the gains.

Gazzaniga (2010, 2011) points to evidence that some moral responses have evolutionary, neurobiological roots. Further, he argues that many neurological responses are cross-cultural and developmental. If a response is developmental, it changes over time. Emotional responses are not the same at 40 as they were at 4. More important to the topic under discussion today is that Gazzaniga also admits that there are many moral responses that are influenced by the culture of the person and what they have learned.

I want to briefly address the third component of my equation on moral behavior - the role the environment plays in moral action. As already noted, the classic studies by Zimbardo (2007) on prison guards and Milgram (1974) on obedience indicate that some ordinary people act in immoral ways given different social environments. (Note that I say “some” because of recent work by Haslam and Reicher, 2012.) 
Mumford and his colleagues have been studying moral misconduct in academics (Antes, & Mumford, 2110, Mumford et al., 2009a, Mumford et al., 2009b). For example, they studied what they called socialization into the biological, social, and health sciences, where socialization was measured by the number of years graduate students had been working in their field (Mumford et al., 2009a). Generally they found that with socialization into the social and biological sciences ethical decision making improved or stayed the same. By contrast, ethical decision making declined with socialization in the health sciences.

In a related study, Mumford and his colleagues (2009b) examined whether exposure to unethical career events in research was related to ethical decision making and climate among graduate students. They found that beginning and advanced graduate students drawn from the same three areas were exposed to at least a low number of unethical actions in research by others, for example, advisers, peers, or principal investigators. Those who were further along in their program were exposed to more. These exposures were related to ethical decision making and students’ perceptions of the departmental climate. In other words, their moral decisions were affected by the environment, and with more exposure to unethical events their moral decision making declined.

On the other hand, as far as I could tell they did not control for whether or not the students had taken an ethics class. Further, the number of years in graduate school is a very limited measure of socialization into the profession. Certainly, the literature on acculturation as applied to professional development offers a much more complex understanding of how socialization into the profession occurs (Anderson & Handelsman, 2010, Handelsman, Gottlieb, & Knapp, 2005). Mumford and his colleagues’ research does raise the issue of whether faculty members are modeling ethical reflection and behavior. 
If they are not, then what students are taught in the classroom may be undermined by what they see their mentors and other faculty doing. This is why I titled my address “learning ethics” rather than “teaching ethics”. What students learn is not necessarily what we intend to teach them because the environment may undermine it. As Gigerenzer (2010) argues: “A situational understanding of behavior does not justify behavior, but it can help us understand it and avoid what is known as the fundamental attribution error: to explain behavior by internal causes alone” (p. 540). This doesn’t mean that emotional and cognitive processes do not count, but that they interact with the social environment. Assuming we are concerned with students’ and professionals’ moral behavior, we need to consider how to help them avoid behaving badly even when they have good intentions.

With that as background, I want to turn to the reason component of the three aspects of moral behavior. I will begin by reviewing with you a model of what I initially called ethical reasoning (Fig. 3). I postulated that ethical justification had two levels (Kitchener, 1984). The first was the intuitive level, which is the seat of our emotional reactions to the situation, our values, the level of our professional identity development, and the facts of the situation itself. The second was the critical evaluative level, which allows us to reflect on our intuitions, reform them when they are biased and make decisions when intuitions give us no guidance. The critical evaluative level of the model was recently elaborated by Professor Richard Kitchener and me (2012, Fig. 4), both by fleshing out the ethical theories that might be used to decide between principles and the role of meta-ethics and by indicating that moral theory justifies moral principles, which justify moral rules, and so on. 
The moral principles identified by the model differ somewhat from those identified by the BPS code. Most are self-explanatory. They include nonmaleficence (do no harm), beneficence (produce good), respect for autonomy, justice and fidelity (be truthful, keep promises). The principles, among others, were initially identified by Ross (1930), who argued they were prima facie duties. Beauchamp and Childress (1979) applied them, with the exception of fidelity, to medicine. I then applied them to psychology (1984), arguing that the American Psychological Association’s (APA) ethics code (1982) at the most fundamental level was based on these five principles. That foundation has been recognized in the current APA code (2010).

I think the same argument could be made for the BPS code. Although I will not make a thorough argument for that point here, I do want to provide a few examples. Clearly the principle of Respect parallels what I call “Respect for Autonomy”. Nonmaleficence (do no harm) is implicit in many of the standards and explicit in others. For example, standard 3.1i begins with the statement “Avoid harming clients” and 3.3i includes the statement that when designing research psychologists should consider the design from the perspective of participants in order to eliminate “potential risks to psychological well-being, physical health, personal values, or dignity.” I think I could go through the document and make similar arguments for most of the statements. Consequently, presenting these principles when teaching students often helps them make sense of ethical standards and gives them a reference for remembering them.

Ross acknowledged that duties arising from moral principles sometimes conflict. When that occurs we must weigh the importance of the duties and then form a judgment about what to do. R. Kitchener and I (Kitchener, R. & Kitchener, K. 
2012) argue that one should consider ethical theories, such as the Kantian maxim identified in the BPS code (act in such a way as you would want to be generalized into universal law), and last use meta-ethics to understand definitional discrepancies. Ideally one should consider each level when making a decision; however, unless one is very facile with the model, the practicalities of everyday decision making lend a sense of idealism to it. On the other hand, when reflecting on one’s moral decisions, it becomes very insightful and can lead to making better moral decisions in the future. This is why there is an arrow that connects the critical evaluative and intuitive levels of moral reasoning (Fig. 3). Ideally, considering these principles and ethical theory will eventually influence one’s intuitive responses to a problem.

As Rogerson et al. (2011) noted, there have been many attempts to refine and revise the critical evaluative level of moral reasoning; however, most focused on steps of practical ethical decision making, rather than the reasoning on which it was based. By contrast, they point out, the intuitive level has been insufficiently addressed. Anderson and I (Kitchener & Anderson, 2010) have attempted to remedy this to some extent in the second edition of Foundations of Ethical Practice, Research and Teaching in Psychology (Kitchener, 2000). What follows is based in part on that discussion (Kitchener & Anderson, 2010).

I think it has become obvious that individuals have internal ethical beliefs and emotional responses to problems. They bring these to any discussion or class on ethics, whether in psychology or other areas. The ethical beliefs, or “ethics of origin” as some (Anderson & Handelsman, 2010, Handelsman, Gottlieb & Knapp, 2005) have called them, result from early learning from parents, teachers, and society about what individuals ought and ought not to do. 
I suspect they are the foundation for the neurological nets that lead to an initial automatic, emotional response to ethical problems. We argued that these early learning experiences form the basis of individuals’ ethical virtues. However, Rogerson et al. (2011) and Gottlieb (2012) have pointed to less idealized aspects of the ethics of origin, many of which we are unaware of. They take the form of self-serving biases (I am good at what I do, therefore, I cannot be wrong) or deep-seated fears or prejudices arising from childhood experiences.

Recently the argument has been made (Haidt, 2001, Rogerson et al., 2011) that intuition and emotion, rather than reason, are the key to understanding how people grasp moral truths. Furthermore, when the intuitive aspects of moral reasoning are ignored, that reasoning becomes vulnerable to “subjectivity, bias, and rationalization” (Rogerson et al., 2011, p. 615). Data from neuroscience, psychology, neuro-economics, and evolutionary neurobiology all support this assertion and suggest that automatic emotional responses are critical motivators of moral behavior. But just as reason is not sufficient to lead to moral action, neither is emotion or intuition. As Goldstein (2010) summarized, “Reason without moral emotions is empty, moral emotions without reason are blind” (p. 11). As the BPS code asserts, “Thinking is not optional” (BPS, 2009, p. 5).

As already noted, in the most recent articulation of the model (Kitchener, R. & Kitchener, K., 2011), Richard Kitchener and I fleshed out the role moral theory and meta-ethics play in ethical justification. However, even with the addition of these aspects, people are still left with the fact that they have to reason to a conclusion, a conclusion that has to consider intuitional response, the facts of the situation, relevant expert opinion, values, ethical rules, ethical principles, ethical theory and so forth. 
They have to have the capacity to step back from their emotional responses and reflect on all of the issues involved. Gazzaniga has argued (2011) that emotional responses change over time. Others have argued that as individuals become older and more educated, they typically develop the capacity to reason about their moral beliefs. As that capacity matures, it becomes more complex (Fischer & Rose, 2003, Rest, 1983).

When Ross (1930) first set forth several moral principles as the foundation of ethics, he also recognized that people had to decide which principle was most important in a particular case (Audi, 1993). He suggested that they did so using reflection. The use of reflection was in his view dependent on mental maturity. In other words, it was developmental. Ross’s point about the importance of mental maturity, which he assumed was present in adults, was echoed by Audi (1993), who argued that reflection above all else allows us to decide which principles are knowable through what he saw as “reliable intuition” (1993, p. 8). This kind of reflection, he thought, could only be achieved by “thoughtful well-educated people” (p. 2). In other words, it was tied to education. Audi labeled Ross’s point of view as moral epistemology. The question remains: how does mental maturity develop, so that individuals use mature moral epistemology to make reflective decisions?

In the remainder of this paper, I want to present a model of how such reasoning develops and a methodology that can be used to study its development. Finally, I want to talk about the implications of the ideas presented here for teaching and learning ethics.

Evidence has been accumulating for several years that epistemological assumptions develop over time from childhood through the mid-twenties, especially among those who continue their education (Baxter-Magolda, 1992, Hofer, 2004; King & Kitchener, 1998, 2004, Kuhn, 1992). 
Kitchener and King (1981, King & Kitchener, 1998, 2004) have tied the development of epistemological assumptions to the ability to consciously reflect on data, expert opinion, evidence and one’s own experience to draw a considered judgment. In fact, drawing on Dewey’s work (1933) on reflective thinking, we (King & Kitchener, 1998; Kitchener & King, 1981) called our model Reflective Judgment (RJ). It describes the relationship between epistemological assumptions and how people are able to reflect and then justify decisions when faced with what Churchman (1981) called ill-structured problems. These are problems that don’t have a clear-cut, right or wrong answer, or for which the answer is unknowable at the time. Consequently, there may be reasonable disagreement about their solution, although it can be argued that some solutions are better than others. I have called this epistemic cognition (1983). When people are faced with ill-structured problems, they must reflect on “the limits of knowledge, the certainty of knowledge and the criteria for knowing” (Kitchener, 1983, p. 230).

King and I (Kitchener & King, 1981, King & Kitchener, 1994) found there were seven distinct epistemic belief levels that develop over time from early childhood to potentially the mid-twenties or later, depending primarily on age and education. These levels and their emergence are supported by Fischer and Rose’s (1994) work on growth spurts in brain activity, synaptic density and myelin formation. For example, they used data on EEG power and data on RJ (Kitchener, Lynch, Fischer, & Wood, 1993) to show there are developmental spurts at approximately age 15 and 20 for both EEG power and RJ.

Descriptively, people’s thinking develops through three major phases, which were labeled by King and Kitchener (1998) as Pre-Reflective, Quasi-Reflective, and Reflective Thinking (Table 1). The Pre-Reflective period includes three levels (PP). 
Initially, the epistemological assumptions are authority based or are so concrete that individuals do not acknowledge that there are differences in points of view. Justification when faced with ill-structured problems is based on an authority or is accepted without consideration. As this view fades in Level 3, individuals discover that “authorities” disagree with each other; as a result they claim there are no justifiable answers and one answer is as good as another. Generally this was the modal view of approximately 17-18 year olds (King, Kitchener & Wood, 1994).

In the Quasi-Reflective period (PP), levels 4 and 5, these views are changed to a subjectivism or relativism. Here, the view of knowledge is subjective or contextual, since participants argue it is filtered through a person’s perception, so only interpretations of evidence or issues can be known. Consequently, beliefs are justified by the rules of inquiry for a particular context and by context-specific interpretations. Conclusions are difficult to draw because there are so many possible interpretations and the individual cannot perceive any way to choose between them. Level 4 was approximately the view of 21-22 year olds who had completed four years of university study (King, Kitchener, & Wood, 1994).

The Reflective period includes levels 6 and 7 (PP), with the last one encompassing true reflective judgments. In this stage (7), knowledge is the outcome of the process of reasonable inquiry in which the adequacy of solutions is evaluated in terms of what is most reasonable according to current evidence. It becomes reevaluated when relevant new evidence, new tools of inquiry or new perspectives are available (King & Kitchener, 1998, p. 15). Beliefs are 
Beliefs are 14 justified at this stage probabilistically on a variety of considerations including the weight of the evidence, “the explanatory value of alternative judgments and the risk of erroneous conclusions, the consequences of alternative judgments, and the interrelationships of these factors.” The consequences of a judgment are defended as” representing the most complete plausible or compelling understanding of the issues based on the evidence at hand” (King & Kitchener, 1998, p. 16). These views were only seen among advanced doctoral students. We and closely associated colleagues using the Reflective Judgment Interview (RJI) have cross sectional data on 1700 people ranging from early secondary students (age 14) to nonstudent adults in their 60’s. (See King, Kitchener, and Wood (1994) or King and Kitchener (2004) for specifics.) The interview asks participants to think out loud about four ill structured problems in the intellectual domain. Cross sectional studies using the RJI show consistently higher scores are related to age and education. More convincingly, there are 7 longitudinal studies ranging from 1 to 10 years (Brabeck & Wood, 1990; King & Kitchener, 1994; Sakalys, 1984; Schmidt, 1985; Welfel & Davison, 1986). As King and I reported (1994) “the mean scores increased significantly for all groups tested at 1-to 4-year intervals” (p. 156) with two exceptions. These two showed no significant change. In other words, the data support the claim that there is a slow steady progress in the development of epistemological assumptions of students when tested on problems in the intellectual domain. The RJI was adapted for use with German college students (Kitchener & Wood, 1987). We tested students, mean ages 20, 25, and 29.5. The RJ scores of the oldest group (doctoral students) corresponded to those of doctoral students in the U.S. The scores of the other two groups were also comparable, though somewhat higher than U. S. students. 
The data showed the same sequentiality as those from students in the U.S., suggesting the generalizability of the model at least to some western European participants.

The kinds of questions that the interview used were, as noted, ill-structured, which is typical of the kinds of problems that adults face every day. For example: Will a proposed urban growth policy protect farmland without sacrificing jobs? Does a job in a small computer science company better fulfill my needs and career plans than one in a larger, better-known company? Should Britain’s newspapers be regulated by an independent body of non-journalists, or should they continue without state regulation? And so on. The parallels between these questions and moral dilemmas are striking (R. Kitchener & K. Kitchener, 2012). In fact the one on Britain’s newspapers deals with free speech issues; therefore, it raises issues of autonomy.

The question that needs to be answered is: Does the relationship between epistemological assumptions and justification in the moral domain parallel that in the intellectual domain, and is it a developmental process? As already noted, considered moral judgments aren’t relative to a particular situation; rather, they are based on an evaluation of the evidence, expert opinion, and, in the case of ethics, intuition, moral rules and principles, and the particulars of the situation. These judgments are reflective. Many times they can’t be judged to be right or wrong, but neither are they relative. Rather, they are the best alternative using the criteria just noted. If this is a correct characterization, then the highest level of the Reflective Judgment model captures it. It is the mental maturity which Ross (1930) and Audi (1993) were describing. Assuming reflective reasoning in the moral domain follows the data on the RJI, it develops over time with age and education. 
As in the intellectual domain, those reasoning at the highest level make decisions that are probabilistic, neither based on authority nor relative to a particular context. In other words, they use the kind of reflective reasoning Ross (1930) and Audi (1993) assumed was necessary to make moral decisions.

Let me note that this view isn’t Kohlberg (1984) revisited. Although we argue that there are stages or levels of development in the epistemological assumptions in the moral domain and that the early levels are authority based, Kohlberg and his colleagues were investigating the epistemic level of moral reasoning, whereas the Reflective Judgment model works at the meta-epistemic level. Kohlberg and colleagues were trying to investigate the beliefs people held about moral problems. The RJ model investigates how a person comes to hold or justifies those beliefs, whether they claim their beliefs to be right or wrong, and most importantly how they can know their beliefs are better or worse than another point of view. In other terms, Kohlberg was investigating the content of moral decisions. I am suggesting that the RJ model be used to investigate the process of arriving at moral decisions and the epistemological assumptions people use in doing so.

Further, I want to emphasize that the epistemological assumptions that people hold are related to the judgment process they use in arriving at ethical decisions. Even if it is the case that decisions are made at a preconscious level before we are even aware of them (Gazzaniga & Heatherton, 2006, Gazzaniga, 2010), they do change with age and education, so there must be some feedback mechanism that affects the neural networks in our brains. In fact, in my 1984 iteration of a model of ethical reasoning there was an arrow that spanned the critical evaluative level and the intuitive level of moral thinking (Fig. 3, PP5). 
That arrow represents the feedback mechanism that leads to the development of our intuitive, preconscious grasp of moral issues. The Reflective Judgment Model explicates the pattern of the development of reflection and the epistemological assumptions that underlie it. Knowing those patterns allows us to make educational interventions to promote it.

Before I move on to talk about the educational implications, I want to briefly describe the Reflective Judgment Interview (RJI), which was used to assess the developmental levels. I believe that with a few changes it should be applicable to measuring changes in reasoning about moral problems. If we are going to be concerned with what students are learning about ethics, we should also be concerned with how to assess it.

Because we (Kitchener & King, 1981, King & Kitchener, 1994) were interested in the ability to assess different developmental levels, we wrote several ill-structured problems that were understandable by a wide variety of people at different age and educational levels. As our research agenda changed over the years, other problems, some more specific to different areas like business and psychology, were added. For example:

Some researchers contend that alcoholism is due, at least in part, to genetic factors. They often refer to a number of family and twin studies to support this contention. Other researchers, however, do not think that alcoholism is inherited. They claim that alcoholism is psychologically determined. They also claim that several members of the same family often suffer from alcoholism because they share common family experiences, socio-economic status, or employment.

We used ill-structured problems since, as Dewey (1933) first observed, it is only when people are faced with real problems that they engage in real reflection. We developed a series of questions that elicited the epistemological rationale for the response as well as the content. 
Our assumption was that one could arrive at the same conclusion, e.g., alcoholism is genetically determined, for very different reasons using different epistemological assumptions. For example, after asking participants their opinion about the issue we asked questions like: What do you think about this issue? On what do you base your point of view? Can you ever know for sure that your position is correct? And so on.

Because people can hold the same point of view for very different reasons with different epistemological assumptions, we thought it was important, at least initially, to use interviews. Interviews are better when asking individuals for their justification for judgments “because they enable the tester to seek more justification whenever anything seems unclear” (Ennis & Norris, 1990, p. 27). (See King & Kitchener, 1994, for a full rationale.) Based on the interviews, we developed a paper and pencil measure, the Reasoning about Current Issues Test (RCI) (King & Kitchener, 2004). Problems like the one on alcoholism are being used by Professor Jesse Owen (private communication, 2013) to develop a paper and pencil measure based on the format of the RCI that will measure cognitive complexity among counseling and clinical psychology graduate students.

I think moral issues could be written in such a way that the RJI could be adapted to the moral domain. For example:

A counseling center receives complaints from women that they have been sexually assaulted after they have been slipped a date rape drug. They all fearfully report the same name of a star athlete who assaulted them and plead with the therapist not to report it. On one hand, some of the psychologists argue that they have a primary obligation to maintain confidentiality. Others remind them that the name of the fraternity could be disclosed with the consent of the women and still protect their identity (based on a case from Kitchener & Kitchener, 2012).
Or take the following case, based on one that Mumford and his associates (2009) use to measure ethical climate:

Two assistants in a psychology lab are working with a principal investigator. Assistant A has written a paper on her own ideas on a topic related to his work. She allows Assistant B to read it. Later she finds a grant proposal authored by Assistant B and the principal investigator which clearly uses many of her ideas. She tells the researcher and shows him the paper which she shared with Assistant B. On one hand, the principal investigator could accuse Assistant B of plagiarism and consider firing him and work with Assistant A. On the other hand, he could reprimand Assistant B and include both assistants as co-authors of the grant depending on their contributions.

Dilemmas like these could be used to investigate whether there is moral development in our psychology programs, and the RJI and its rating rules could be adapted to allow such an investigation.

King and I (1994) provide observations and suggestions for how faculty can promote reflective thinking in the classroom. One idea that has grown out of this work is the importance of thinking developmentally about students. For example, based on cross-sectional data (King, Kitchener, & Wood, 1994), traditional-age students (21-23 years old) beginning an MA may exhibit thinking ranging from levels 4 to 6. Level 5, a kind of relativism, is often the view with which they begin their work. They will find assignments that require them to draw a reflective conclusion challenging and both cognitively and psychologically unsettling. They may revert to level 4 reasoning, for example, “everybody has a right to their own opinion,” when asked to make a judgment about which of several hypotheses is most valid.
It is also important to remember, however, as King and Siddiqui (2011) have argued, that university students exhibit a great deal of variability “even when they attend selective and culturally distinctive institutions. They are a sum of his or her prior experiences in families, schools, communities…their relationship to the world and historic events…” (p. 125). I would add their cultural background. In other words, you need to step inside their world view and begin there rather than assuming they can think like you do.

Using ill-structured problems will also help stimulate their development. This is true not just in the ethics class, but throughout the curriculum. Too often educators use tests and ask questions that are well structured and ask for right or wrong answers. Sometimes we need to ask those kinds of questions to evaluate whether students have a knowledge base, but they don’t require reflection linked to how they know what they know.

Students also need conceptual support to work at what Fischer (1980) called their optimal level, which he and Rose (1994) suggest is the highest level at which they are neurologically capable of performing. This includes providing multiple examples in which higher level reasoning skills are required (Kitchener, Lynch, Fischer & Wood, 1993) and scaffolding new skills on ones they have already acquired (King & Siddiqui, 2011). Fischer (1980) also recognized that people most often work at what he called their “functional level”. This is the level at which they feel most comfortable. It is their world view about the nature of knowledge and knowing, but it is our job to move them beyond where they are comfortable, so they reason more complexly. Consequently, when trying to promote development, faculty need to provide both supports and challenges (King & Kitchener, 1994). These supports and challenges need to be both emotional and cognitive.
The implication of this model for faculty who wish to offer highly challenging activities is that they must also lay a supportive foundation so that students will risk using higher level reasoning skills that feel unfamiliar or that introduce doubt and perplexity (King & Kitchener, 1994). Laying a supportive foundation includes developing a trusting atmosphere in the classroom where students feel like they are all in the same boat and that no one is going to be overly critical if they make a mistake. Examples are available in our book (King & Kitchener, 1994) as well as in Owen and Lindley (2010), who apply it to the training of counseling and clinical psychologists.

Data from Antes et al.’s (2009) meta-analysis of ethics education interventions provide some support for these instructional methods as well as additional insights. Some methods of ethics instruction yielded larger effect sizes than others. Instruction that focused on a cognitive problem-solving approach had the largest effect sizes; instruction that focused on social-interactional elements of ethical problems was the next most effective. This would fit with the importance of focusing on ill-structured problems and providing the social support to explore the limits of students’ thinking. They also found that older participants benefited the most from instruction. They interpreted this as suggesting that some knowledge of the field was helpful before adding complex ethical decisions. This could also be attributed to developmental differences, or to both.

The Antes et al. meta-analysis demonstrated that in-class case analyses were more effective than other approaches like lecture-based instruction. This finding supports the use of ill-structured cases and the opportunities for reflection. They also found that covering work that involved students’ ethical concerns and faulty reasoning strategies led to more effective instruction.
I would often start my doctoral course with the question “What ethical concerns have you encountered in your work?” Similarly, my colleague Professor Sharon Anderson (2013) began her ethics course by asking challenging questions like, “How do you know the thing you choose to do is the thing you ought to do?” She heard responses such as “I know it’s what I need to do because it feels right”, “I decide the right thing to do based on the policies or rules I am expected to follow”, “I try to do the greatest good for the people I serve”, or “If I could tell my mother about my decision, or if it ended up on the front page of a newspaper, I wouldn’t be embarrassed”. Other students seemed stuck and responded with answers like “Who is to say what is right or what I ought to do?” Some of these responses came from students’ immediate level of moral reasoning. Others showed more cognitive reflection with an implicit understanding of some moral rules and principles (Kitchener & Kitchener, 2012). All could have been rated using the RJ model (Kitchener & King, 1981, King & Kitchener, 1994), especially since Anderson followed up with questions like “And how do you know your decision feels right?” or “What makes your decision right?”

Although the Reflective Judgment Interview has not been used to measure changes in ethical reasoning, the RJI has been effective and reliable in measuring change in epistemological assumptions in the intellectual domain. There is a methodology and scoring process which could be adapted to ill-structured moral problems, and there are suggestions about how to promote development in the classroom. If the RJ model is adapted to teach and assess moral epistemology, we do need to be careful, however, about assuming that the same pattern of development will be found in the moral area as in the intellectual one.
There seems to be an assumption in higher education that thinking reflectively about intellectual problems is a positive attribute and one that is modeled with some consistency by faculty. Based on our consultations with faculty, I believe that assumption is probably true. However, it may not be true in the moral domain if not all faculty are modeling moral behavior. If students see faculty bending rules or acting unethically, we may find, like Mumford et al. (2009), that our best efforts in the classroom are undermined by the modeling of unethical behavior by mentors, teachers, or supervisors. This underlines the importance of modeling moral behavior in research, teaching, and supervision.

This leads to the last issue: the environmental component of ethical action. It is the most difficult one with which to deal. There is no way to educate students about all of the social situations they will encounter in their practical training, internship or at work. However, there can be honest dialogue, without repercussions, between faculty and students describing real-world experiences they both have encountered, both those they have handled well and those they have handled poorly. Video vignettes that require students to articulate responses to actors playing seductive clients or colleagues they see acting unethically are also useful training devices. They require students to think through how their responses will affect clients or staff. Faculty members, especially those supervising students during their practical training, can alert students to the fact that they may be the only one on the site who is sensitive to ethical concerns and can advise them on how to handle such concerns diplomatically.

How then do we put this all together? There are several options. The acculturation literature as it is applied to students’ ethical concept of themselves is one place to start (Anderson & Handelsman, 2010, Handelsman, Gottlieb & Knapp, 2005).
It considers students’ “ethics of origin” and how that influences their acculturation into the culture of psychology. Anderson (2012) suggests that it has the potential to deal with the emotional, cognitive and social aspects of the developing psychologist. As I have already mentioned, I believe the Reflective Judgment model can provide insight into where students are in their epistemic development and how they are then able to consider and solve complex ethical problems. It needs to be remembered that often there is an emotional component to cognitive changes, which is why a supportive climate in the classroom is important. Last, students need frank discussions of the ethical environments in which they find themselves. It is difficult and takes courage to be the only one who speaks up about the ethics of a situation. I hope that I found ways to give students that courage and that you will too.

Table 1: Summary of Reflective Judgment Levels

Pre-Reflective Thinking (Stages 1, 2 and 3)

Stage 1: Knowledge is absolute, concrete; obtained with certainty by direct observation. Justification not needed; alternatives not perceived or ignored.

Stage 2: Knowledge is absolute or certain but not immediately available. Justification by authorities; issues have right and wrong sides.

Stage 3: Knowledge is absolutely certain or temporarily uncertain; only personal beliefs can be known. Justification: when certainty exists, beliefs are justified by authorities. When answers do not exist, beliefs are personal opinions.

Quasi-Reflective Thinking (Stages 4 and 5)

Stage 4: Knowledge is uncertain and idiosyncratic to the individual because of situational variables, e.g. incorrect reporting of data; always involves ambiguity. Justification involves giving reasons and using evidence; arguments and evidence are idiosyncratic.

Stage 5: Knowledge is contextual and subjective; filtered through perceptions; only interpretations of evidence or issues are known. Justification occurs within a particular context by means of the rules of inquiry for the context; conclusions delayed (relativism).

Reflective Thinking (Stages 6 and 7)

Stage 6: Knowledge constructed into individual conclusions based on information from a variety of sources. Interpretations are based on evaluations of evidence and the evaluated opinions of reputable experts. Justification occurs by comparing evidence and opinion from different perspectives and solutions evaluated by criteria such as evidence, utility, or pragmatic need for action.

Stage 7: Knowledge is outcome of reasonable inquiry; solutions are constructed; adequacy evaluated by what is most reasonable or probable; reevaluated when appropriate. Justification: probabilistic; based on interpretive considerations: weight of the evidence, explanatory value of the interpretations, risk of erroneous conclusions, and interrelationships. Conclusions represent most complete, plausible, or compelling understanding.

Based on King and Kitchener (1994)

Figure 1. Based on Gigerenzer (2010).

Figure 2. Based on Gigerenzer (2010).

Level II: Critical Evaluative Level
Meta-ethics
↕
Ethical Theory
↕
Ethical Principles
↕
Ethical Rules
--------------------
Level I: Immediate Level
Particular Cases; Situational Information; Ordinary Moral Sense

Figure 3. A model of ethical reasoning. Reprinted from “Ethical Foundations of Psychology,” by R. F. Kitchener and K. S. Kitchener, APA Handbook of Ethics in Psychology: Vol. 1. Moral Foundations and Common Themes, S. J. Knapp (Editor-in-Chief). Copyright 2012 by the American Psychological Association. Adapted from “Intuition, Critical Evaluation and Ethical Principles: The Foundations for Ethical Decisions in Counseling,” by K. S.
Kitchener, 1984 by Division of Counseling Psychology of the American Psychological Association.

Ethical Theory: Utilitarianism, Deontology, Virtue Ethics, etc.
Ethical Principles: Non-maleficence, Beneficence, Justice, Autonomy, Fidelity
Moral Standards (Code): Confidentiality, Informed consent, Deception, Dual Role Relationships, etc.
Particular Acts

Figure 5. Ideal model of ethical reasoning. Reprinted from “Ethical Foundations of Psychology,” by R. F. Kitchener and K. S. Kitchener, APA Handbook of Ethics in Psychology: Vol. 1. Moral Foundations and Common Themes, S. J. Knapp (Editor-in-Chief). Copyright 2012 by the American Psychological Association.

References

Anderson, S. K. (2012). Ethical acculturation as socialization: Examples from the classroom. Paper presented at the annual meeting of the American Psychological Association, San Diego.
Anderson, S. K., & Handelsman, M. M. (2010). Ethics for psychotherapists and counselors: A proactive approach. Malden, MA: Wiley-Blackwell.
American Psychological Association (1981). Ethical principles of psychologists. American Psychologist, 36, 633-638.
American Psychological Association (2010). Ethical principles of psychologists and code of conduct (2002, Amended June 1, 2010). Retrieved from www.apa.org/ethics/code/index.aspx
Antes, A. L., Murphy, S. T., Waples, E. P., Mumford, M. D., Brown, R. P., Connelly, S., & Davenport, L. D. (2009). A meta-analysis of ethics instruction effectiveness in the sciences. Ethics and Behavior, 19, 379-402. doi: 10.1080/10508420903035380
Baxter-Magolda, M. B. (1992). Knowing and reasoning in college students: Gender related patterns in students’ intellectual development. San Francisco: Jossey-Bass.
Brabeck, M. & Wood, P. (1990). Cross-sectional and longitudinal evidence for differences between well-structured and ill-structured problem solving abilities. In M. L. Commons, C. Armon, L. Kohlberg, F. A. Richards, T. A. Grotzer, & J. D. Sinnott (Eds.),
Adult development 2: Models and methods in the study of adolescent and adult thought. New York: Praeger.
British Psychological Society (2009). Code of ethics and conduct. London: Ethics Committee of the British Psychological Society.
Audi, R. (1993). Ethical reflections. The Monist, 76, 295-315.
Beauchamp, T. L. & Childress, J. F. (1979). Principles of biomedical ethics. Oxford: Oxford University Press.
Churchman, C. W. (1981). The design of inquiring systems: Basic concepts of systems and organizations. New York: Basic Books.
Cushman, F., Young, L., & Hauser, M. D. (2006). The role of conscious reasoning and intuition in moral judgment: Testing three principles of harm. Psychological Science, 17, 1082-1089.
Dewey, J. (1933). How we think: A restatement of the relation of reflective thinking to the educative process. Lexington, MA: Heath.
Ennis, R. H. & Norris, S. P. (1990). Critical thinking assessment: Status, issues, needs. In S. Legg & J. Algina (Eds.), Cognitive assessment of language and math outcomes. Norwood, NJ: Ablex.
Fischer, K. W. (1980). A theory of cognitive development: The control and construction of hierarchies of skills. Psychological Review, 87, 477-531.
Fischer, K. W. & Rose, S. (1994). Dynamic development of coordination of components in brain and behavior. In C. Dawson & K. W. Fischer (Eds.), Human behavior and the developing brain (pp. 3-67). New York: Guilford Press.
Gazzaniga, M. (2010). Not really. In Does moral action depend on reasoning? (pp. 4-7). John Templeton Foundation. Online at www.templeton.org/reason.
Gazzaniga, M. S. (2011). Who’s in charge?
Free will and the science of the brain. New York: Harper Collins.
Gigerenzer, G. (2010). Moral satisficing: Rethinking moral behavior as bounded rationality. Topics in Cognitive Science, 2, 528-554. doi: 10.1111/j.1756-8765.2010.01094.x
Goldstein, R. N. (2010). Yes, and no, happily. In Does moral action depend on reasoning? (pp. 8-11). John Templeton Foundation. Online at www.templeton.org/reason.
Greene, J. and Haidt, J. (2002). How (and when) does moral judgment work? Trends in Cognitive Sciences, 6, 517-523.
Greene, J. D., Sommerville, R. B., Nystrom, L. E., Darley, J. M., and Cohen, J. D. (2001). An fMRI investigation of emotional engagement in moral judgment. Science, 293, 2105-2108.
Greene, J. D., Nystrom, L. E., Engell, A. D., Darley, J. M. and Cohen, J. D. (2004). The neural basis of cognitive conflict and control in moral judgment. Neuron, 44, 389-400.
Haidt, J. (2001). The emotional dog and its rational tail: A social intuitionist approach to moral judgment. Psychological Review, 108, 814-834. doi: 10.1037/0033-295X.108.4.814
Handelsman, M. M., Gottlieb, M. C., & Knapp, S. (2005). Training ethical psychologists: An acculturation model. Professional Psychology: Research and Practice, 36(1), 59-65. doi: 10.1037/0735-7028.36.1.59
Haslam, S. A. & Reicher, S. D. (2012). Contesting the “nature” of conformity: What Milgram and Zimbardo’s studies really showed. PLoS Biology, 13, e1001486. doi: 10.1371/journal.pbio.100426.
Hofer, B. K. (2004). Epistemological understanding as a metacognitive process: Thinking aloud during online searching. Educational Psychologist, 39, 43-55.
Hauser, M., Cushman, F., Young, L., Jin, R. K., & Mikhail, J. (2007). A dissociation between moral judgments and justifications. Mind and Language, 22, 1-21.
King, P. M. & Kitchener, K. S. (1994). Developing reflective judgment: Understanding and promoting growth and critical thinking in adolescents and adults. San Francisco: Jossey-Bass.
King, P. M. & Kitchener, K. S. (2004).
Reflective judgment: Theory and research on the development of epistemic assumptions through adulthood. Educational Psychologist, 39, 5-18.
King, P. M., Kitchener, K. S. & Wood, P. K. (1994). Research on the reflective judgment model. In P. M. King & K. S. Kitchener, Developing reflective judgment: Understanding and promoting growth and critical thinking in adolescents and adults (pp. 124-188). San Francisco: Jossey-Bass.
King, P. M. & Siddiqui, R. (2011). Self-authorship and metacognition. In C. Hoare (Ed.), The Oxford handbook of reciprocal adult development and learning (pp. 113-131). Oxford: Oxford University Press. doi: 155.dc222010032996
Kitchener, K. S. (1983). Cognition, metacognition and epistemic cognition: A three level model of cognitive processing. Human Development, 26(4), 222-232.
Kitchener, K. S. (1984). Intuition, critical evaluation, and ethical principles: The foundation for ethical decisions in counseling psychology. The Counseling Psychologist, 12, 43-55. doi: 10.1177/0011000084123005
Kitchener, K. S. (2000). Foundations of ethical practice, research, and teaching in psychology. Mahwah, NJ: Lawrence Erlbaum.
Kitchener, K. S. & Anderson, S. K. (2010). Foundations of ethical practice, research and teaching in psychology and counseling (2nd ed.). New York: Routledge.
Kitchener, K. S. & King, P. M. (1981). Reflective judgment: Concepts of justification and their relationship to age and education. Journal of Applied Developmental Psychology, 2, 89-116.
Kitchener, R. F. & Kitchener, K. S. (2012). Ethical foundations of psychology. In S. Knapp (Ed.), APA handbook of ethics in psychology, Vol. 1 (pp. 3-42). Washington, DC: American Psychological Association. doi: 10.1037/13271-000
Kitchener, K. S., Lynch, C. L., Fischer, K. W., & Wood, P. K. (1993). Developmental range of reflective judgment: The effect of contextual support and practice on developmental stage. Developmental Psychology, 29, 893-906.
Kitchener, K. S., & Wood, P. K. (1987).
Development of concepts of justification in German university students. International Journal of Behavioral Development, 10, 171-185.
Kohlberg, L. (1984). The psychology of moral development: The nature and validation of moral stages. San Francisco: Harper & Row.
Kuhn, D. (1992). Thinking as argument. Harvard Educational Review, 42, 155-178. doi: 0017.8055/920500-0155
Milgram, S. (1974). Obedience to authority: An experimental view. New York: Harper & Row.
Moll, J., de Oliveira-Souza, R., Bramati, I. E. & Grafman, J. (2002a). Functional networks in emotional moral and nonmoral social judgments. NeuroImage, 16, 696-703.
Moll, J., de Oliveira-Souza, R., Eslinger, P. J., et al. (2002b). The neural correlates of moral sensitivity: A functional magnetic resonance imaging investigation of basic and moral emotions. Journal of Neuroscience, 22, 2730-2736.
Mumford, M. D., Connelly, S., Murphy, S. T., Davenport, L. D., Antes, A. L., Brown, R. P., Hill, J. H., & Waples, E. P. (2009a). Field and experience influences on ethical decision making in the sciences. Ethics & Behavior, 19, 263-289. doi: 10.1080/105084209030335257
Mumford, M. D., Waples, E. P., Antes, A. L., Murphy, S. T., Connelly, S., Brown, R. P., et al. (2009b). Exposure to unethical career events: Effects on decision making, climate, and socialization. Ethics & Behavior, 19, 351-378. doi: 10.1080/10508-420903034356
Owen, J. & Lindley, L. D. (2010). Therapists’ cognitive complexity: Review of theoretical models and development of an integrated approach for training. Training and Education in Professional Psychology, 4, 128-137. doi: 10.1037/a0017697
Rest, J. (1983). Morality. In J. Flavell & E. Markham (Eds.), Manual of child psychology: Vol. 4: Cognitive development (pp. 520-629). New York: Wiley.
Rest, J. (1986). Moral development: Advances in research and theory. New York: Praeger.
Rogerson, M. D., Gottlieb, M. C., Handelsman, M. M., Knapp, S., & Youngren, J. (2011).
Nonrational processes in ethical decision making. American Psychologist, 66, 614-623. doi: 10.1037/a0025215
Ross, W. D. (1930). The right and the good. Oxford, England: Clarendon Press.
Sakalys, J. A. (1984). Effects of a research methods course on nursing students’ research attitudes and cognitive development. Nursing Research, 33, 290-295.
Schmidt, J. A. (1985). Older and wiser? A longitudinal study of the impact of college on intellectual development. Journal of College Student Personnel, 26, 388-394.
Simon, H. A. (1955). A behavioral model of rational choice. Quarterly Journal of Economics, 69, 99-118.
Simon, H. A. (1990). Invariants of human behavior. Annual Review of Psychology, 41, 1-19.
Suhler, C. & Churchland, P. (2011). The neurobiological basis of morality. In J. Illes & B. J. Sahakian (Eds.), Oxford handbook of neuroethics. pp.
Welfel, E. R. & Davison, M. L. (1986). The development of reflective judgment in the college years: A four year longitudinal study. Journal of College Student Personnel, 27, 209-216.
Zimbardo, P. (2007). The Lucifer effect: Understanding how good people turn to evil. New York: Random House.