Image stitching based on scale invariant feature transform


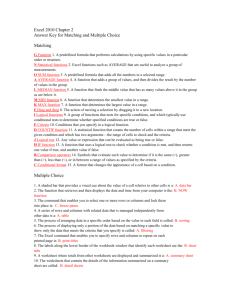

A. Ravindrakumar¹, Assoc. Prof., Dept. of CSE, K.E.C, Kuppam
K. Jagannath², Assoc. Prof., Dept. of CSE, K.E.C, Kuppam
S. Balaji³, Asst. Prof., Dept. of CSE, K.E.C, Kuppam

¹ A. Ravindrakumar, Associate Professor, Department of CSE, Kuppam Engineering College, JNTUA, Kuppam, Chittoor district, Andhra Pradesh, India (e-mail: avula.ravindra1981@gmail.com).
² K. Jagannath, Associate Professor, Department of CSE, Kuppam Engineering College, JNTUA, Kuppam, Chittoor district, Andhra Pradesh, India (e-mail: appu.gk@gmail.com).
³ S. Balaji, Assistant Professor, Department of CSE, Kuppam Engineering College, JNTUA, Kuppam, Chittoor district, Andhra Pradesh, India (e-mail: balajichetty6@gmail.com).

Abstract: This paper discusses an image mosaic algorithm based on SIFT (scale invariant feature transform) with an improved matching algorithm. Along with the basics of the process, such as image mosaicing, feature matching and image fusion, a few important terms such as RANSAC (Random Sample Consensus) and the linear weighted fusion algorithm are also discussed. The proposed matching algorithm is then used to match the keypoints. First, rough match pairs are obtained by the nearest neighbor algorithm. Second, the match rate information of the neighboring keypoints and the global information are calculated to update the match precision, so that each keypoint's matching rate spreads to its neighborhood, which eliminates a large number of mismatches and achieves an exact match. The results are compared with those of the former method to show the improvement made by the proposed method. Finally, the study is summarized for the image mosaic of two different images.

Keywords: SIFT; Image Mosaic; Feature matching; Image fusion

INTRODUCTION

Image mosaic is a technology that combines two or more images into a larger image. There are many image matching methods with promising matching results. Image mosaic techniques can mainly be divided into two categories: those based on image mutual information and those based on image features.

Former image mosaic algorithms usually require a high overlap ratio between the two images because a high mismatch rate exists. Mismatches among image pairs can be reduced by improving the feature correspondences between the image pairs and using these correspondences to register the image pairs.

A feature is defined as an "interesting" part of an image, and features are used as a starting point for many computer vision algorithms. The desirable property for a feature detector is repeatability: whether or not the same feature will be detected in two or more different images of the same scene. When feature detection is computationally expensive and there are time constraints, a higher level algorithm may be used to guide the feature detection stage, so that only certain parts of the image are searched for features. Many computer vision algorithms use feature detection as the initial step, and as a result a very large number of feature detectors have been developed. At an overview level, the features can be divided into the following groups: edges, corners/interest points, blobs/regions of interest points, and ridges. Feature points are displayed in Fig. 1.

Fig. 1: Image with feature points indicated

SIFT is a high level image processing algorithm which can be used to detect distinct features in an image. In recent years, the SIFT feature matching algorithm has become a hot and difficult research topic in the field of feature matching all over the world. In 1999, David Lowe from the University of British Columbia established a novel method for extracting point characteristics, the SIFT algorithm. It is scale and rotation invariant and robust to noise, changes in illumination and changes in 3D camera viewpoint, so image mosaicing based on SIFT and its variants has recently become a focus. Generally, an image mosaic algorithm based on SIFT contains the following steps:

1: Extract the SIFT features from the overlapped images.
2: Feature matching and image transformation.
3: Image fusion.

The remaining part of this paper is organized as follows. Section 2 presents reliable feature extraction using the SIFT algorithm. In Section 3, feature matching methods are introduced and the image transformation matrix is calculated through RANSAC. In Section 4, image fusion is implemented by the fade-in, fade-out method. Experimental results and related analysis are shown in Section 5, and finally a summary is given in Section 6, followed by the references.

2.1 Establishment of scale space and extreme points finding

The SIFT multi-scale feature relies on the Gaussian function to transform the image, at different scales, into a single multi-scale image space from which stable feature points can be extracted. It has been shown by Koenderink (1) and Lindeberg (2) that, under a variety of reasonable assumptions, the only possible scale-space kernel is the Gaussian function. The scale space of an image is defined as:

$$L(x, y, \sigma) = G(x, y, \sigma) * I(x, y) \qquad (2)$$

where $I(x, y)$ is the input image, $\sigma$ is the scale space factor, and $G(x, y, \sigma)$ is the 2D Gaussian convolution kernel:

$$G(x, y, \sigma) = \frac{1}{2\pi\sigma^2} e^{-(x^2 + y^2)/2\sigma^2} \qquad (3)$$

The smaller the value of $\sigma$, the smaller the scale and the less the image detail is smoothed. In order to detect stable keypoints in the scale space effectively, the difference of Gaussian scale space (DoG scale space) was put forward, which is generated by convolving the difference-of-Gaussian kernel with the original image.

Fig. 2: Local maximum detection in DoG scale space

2.2 Keypoint Localization

In this stage, a candidate keypoint detected in the previous stage is refined to subpixel level and eliminated if found to be unstable. Keypoint localization removes noise-sensitive points and edge points, which enhances the stability of the matching and improves noise immunity. Reference [3] proposed removing the extreme points of low contrast by expanding the scale-space function $D(x, y, \sigma)$ as a Taylor series at the sampling point.
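The Gaussian scale space of Eqs. (2) and (3) and the DoG scale space built from it can be sketched in Python with NumPy. This is only a minimal illustration under stated assumptions, not the authors' implementation: the function names, the base scale `sigma0 = 1.6`, the scale step `k = sqrt(2)`, the 3-sigma kernel truncation, the reflect padding and the `min_abs` contrast cut-off are all choices made for this example and are not given in the paper. The extremum test compares each DoG sample with its 26 neighbors in the 3 x 3 x 3 cube around it, which is the standard SIFT test.

```python
import numpy as np

def gaussian_kernel_1d(sigma):
    """Sampled, normalised 1D Gaussian (the 2D kernel of Eq. (3) is separable)."""
    radius = int(np.ceil(3 * sigma))          # assumption: truncate at 3 sigma
    x = np.arange(-radius, radius + 1, dtype=float)
    kernel = np.exp(-(x ** 2) / (2.0 * sigma ** 2))
    return kernel / kernel.sum()

def gaussian_blur(image, sigma):
    """L(x, y, sigma) = G(x, y, sigma) * I(x, y), done as two 1D convolutions."""
    kernel = gaussian_kernel_1d(sigma)
    pad = len(kernel) // 2
    padded = np.pad(np.asarray(image, dtype=float), pad, mode="reflect")
    # Convolve along rows, then along columns; "valid" removes the padding again.
    rows = np.apply_along_axis(
        lambda r: np.convolve(r, kernel, mode="valid"), 1, padded)
    return np.apply_along_axis(
        lambda c: np.convolve(c, kernel, mode="valid"), 0, rows)

def dog_stack(image, sigma0=1.6, k=2 ** 0.5, levels=5):
    """Stack of DoG layers L(k*sigma) - L(sigma) for consecutive scales."""
    blurred = [gaussian_blur(image, sigma0 * k ** i) for i in range(levels)]
    return np.stack([blurred[i + 1] - blurred[i] for i in range(levels - 1)])

def local_extrema(dog, min_abs=0.0):
    """Return (scale, row, col) of samples that are strictly larger or strictly
    smaller than all 26 neighbours in the surrounding 3 x 3 x 3 cube."""
    found = []
    n_scales, height, width = dog.shape
    for s in range(1, n_scales - 1):
        for y in range(1, height - 1):
            for x in range(1, width - 1):
                value = dog[s, y, x]
                if abs(value) < min_abs:      # hypothetical low-contrast cut-off
                    continue
                cube = dog[s - 1:s + 2, y - 1:y + 2, x - 1:x + 2].ravel()
                others = np.delete(cube, 13)  # index 13 is the centre sample
                if value > others.max() or value < others.min():
                    found.append((s, y, x))
    return found

if __name__ == "__main__":
    # Synthetic test image: a single Gaussian blob centred at (20, 20).
    yy, xx = np.mgrid[0:41, 0:41]
    blob = np.exp(-((yy - 20) ** 2 + (xx - 20) ** 2) / (2 * 2.7 ** 2))
    print(local_extrema(dog_stack(blob), min_abs=0.01))
```

The triple loop is written for readability rather than speed; a practical implementation would vectorize the neighborhood comparison and work octave by octave on downsampled images.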
The ratio of the trace to the determinant of the Hessian matrix is used to reduce the edge effect of the DoG function.

The DoG function $D$ referred to above is defined as:

$$D(x, y, \sigma) = (G(x, y, k\sigma) - G(x, y, \sigma)) * I(x, y) = L(x, y, k\sigma) - L(x, y, \sigma) \qquad (1)$$

After the scale space has been generated, the extreme points are found by comparing each sampling point with all of its 26 neighboring points: 8 points on the current scale and 9*2 points on the lower and upper adjacent scales. In this way, as shown in Fig. 2, it is determined whether the point is a local extreme point.

2.3 Orientation Assignment

In this stage, direction information is extracted for the keypoints whose position and scale have already been identified. Orientation assignment describes the direction of each feature point based on the local characteristics of the image, which makes the feature descriptors invariant to image rotation. An orientation histogram is formed from the gradient orientations of the pixels neighboring the keypoint. According to the histogram, an orientation can be assigned to the keypoint.

$$m(x, y) = \sqrt{(L(x+1, y) - L(x-1, y))^2 + (L(x, y+1) - L(x, y-1))^2} \qquad (4)$$

$$\phi(x, y) = \tan^{-1}\left(\frac{L(x, y+1) - L(x, y-1)}{L(x+1, y) - L(x-1, y)}\right) \qquad (5)$$

Equations (4) and (5) give the modulus and direction of the gradient at pixel $(x, y)$, where the scale of $L$ is the respective scale of each keypoint. In the actual calculation, we sample in the neighborhood window centered at the keypoint and collect the neighborhood gradient directions in a histogram. The gradient histogram has 36 bins covering the 0 to 360 degree range of orientations, each bin spanning 10 degrees. The peak of the histogram indicates the dominant direction of the keypoint's neighborhood gradient, and it is taken as the dominant direction of the keypoint. Figure 3 gives an example.
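As an illustration of Eqs. (4) and (5) and the 36-bin histogram, the following NumPy sketch computes the gradient modulus and direction by central differences and accumulates them around one keypoint. It is a simplified example under assumptions that the text does not state: each vote is weighted by its gradient modulus only, the window is a fixed square of half-width `radius` (a hypothetical parameter), the keypoint is assumed to lie away from the image border, and the dominant direction is reported as the center of the peak bin. All function names are made up for this example.

```python
import numpy as np

def gradient_magnitude_orientation(L):
    """Eqs. (4) and (5) on the interior pixels of the smoothed image L.

    L is indexed as L[row, col] = L[y, x]; the outputs are one pixel smaller
    on every side because central differences need both neighbours.
    """
    L = np.asarray(L, dtype=float)
    dx = L[1:-1, 2:] - L[1:-1, :-2]    # L(x+1, y) - L(x-1, y)
    dy = L[2:, 1:-1] - L[:-2, 1:-1]    # L(x, y+1) - L(x, y-1)
    magnitude = np.sqrt(dx ** 2 + dy ** 2)
    # arctan2 resolves the quadrant; map the angle to the range [0, 360).
    orientation = np.degrees(np.arctan2(dy, dx)) % 360.0
    return magnitude, orientation

def orientation_histogram(L, row, col, radius=4, bins=36):
    """Histogram of gradient directions in a square window around (row, col)."""
    magnitude, orientation = gradient_magnitude_orientation(L)
    r, c = row - 1, col - 1            # gradient arrays are offset by one pixel
    r0, r1 = max(r - radius, 0), min(r + radius + 1, magnitude.shape[0])
    c0, c1 = max(c - radius, 0), min(c + radius + 1, magnitude.shape[1])
    mags = magnitude[r0:r1, c0:c1].ravel()
    angs = orientation[r0:r1, c0:c1].ravel()
    index = (angs // (360.0 / bins)).astype(int) % bins
    # Assumption: each pixel votes with its gradient modulus.
    return np.bincount(index, weights=mags, minlength=bins)

def dominant_orientation(hist):
    """Centre (in degrees) of the histogram bin with the largest value."""
    return (int(np.argmax(hist)) + 0.5) * 360.0 / len(hist)

if __name__ == "__main__":
    # Intensity ramp rising along the diagonal: gradients all point the same way.
    yy, xx = np.mgrid[0:20, 0:20]
    ramp = (xx + yy).astype(float)
    print(dominant_orientation(orientation_histogram(ramp, 10, 10)))
```

Secondary directions, which the paper mentions as a way to strengthen matching, could be read from the same histogram by looking for further peaks close in height to the largest one.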
Fig. 3: Determining the main gradient direction from the gradient direction histogram

A keypoint may be assigned multiple directions (one dominant direction and one or more secondary directions), which can enhance the robustness of matching. At this point the detection of the image keypoints is complete, and each keypoint carries three pieces of information: position, corresponding scale and direction.

2.4 Keypoint Descriptor

The descriptor of a feature point is defined over a local area, so that it remains invariant to brightness changes and to changes in the angle of view. First, the coordinate axes are rotated to the direction of the keypoint to ensure rotation invariance.

Fig. 4: Feature vector generated from the neighborhood gradient information of a keypoint

Secondly, take into account an 8*8 window around each keypoint. The central black dot in the left part of Fig. 4 is the current keypoint position. Each cell represents a neighborhood pixel at the scale of the keypoint, the direction of the arrow in each cell represents the pixel gradient direction, the arrow length represents the gradient modulus, and the blue circle represents the scope of the Gaussian weighting. The nearer a pixel is to the keypoint, the greater its contribution to the direction information. Thirdly, in each 4*4 sub-window, a gradient direction histogram with 8 orientation bins is formed, which is defined as a seed point, as shown in the right part of Fig. 4. A keypoint in this figure is composed of 2*2, altogether 4, seed points, each with 8 direction vectors. This combination of neighborhood orientation information enhances the noise resistance of the algorithm. In the actual computation, to strengthen the matching robustness, Lowe [4] suggested that the descriptor for each keypoint use altogether 16 seed points, so that each keypoint generates 128 values (16 seed points with 8 directions each) that ultimately form the 128-dimensional SIFT feature vector, which eliminates the effect of scale, rotation and other geometric distortion factors. The feature vector is then normalized in length to further remove the effect of illumination changes.

3. SIFT MATCHING ALGORITHMS

3.1 General SIFT Matching Algorithm

The correspondence between the feature points P in the reference image and the feature points P' in the input image is evaluated. Matching of feature points can be done by applying the nearest neighbor algorithm to the set of feature vectors obtained from the input image. The nearest neighbor is defined as the feature point with the minimum Euclidean distance in the invariant descriptor vector space. However, the descriptor has a high dimension, and matching the feature points by comparing the feature vectors one by one has a high complexity of O(n*n) time. This can be done in O(n log n) time by using a k-d tree [5] to find all matching pairs.

3.2 Improved SIFT Matching Algorithm

This article proposes a new feature matching method [7], which takes into account the information of the neighboring keypoints and the global mean change in direction and position, and thereby better eliminates mismatches and increases matching accuracy. Suppose that image I1 is the reference image while image I2 is the image to be matched. The concrete steps are as follows:

1) For each keypoint i in image I1, use a k-d tree built for I2 to find the two nearest candidate points, a and b, in image I2.
2) Calculate E(i,a)/E(i,b) as the rough matching rate MR(i) of point i and point a. Whether this ratio exceeds a constant threshold value T determines whether point i and point a roughly match.
3) Compare the scale difference of the two points with the global mean value, and likewise the rotation difference. If either difference is so large that it exceeds the prescribed threshold value, the match is classed as a mismatch.
4) Update the matching rate of each point. When updating the matching rate for keypoint i in image I1, add the weighted sum of the matching rates of all feature points within the r-radius neighborhood in image I1 to the old matching rate of keypoint i to obtain the new matching rate:
$$MR(i) = MR(i) + \sum_j w(j)\, m(j)$$

where $w(j)$ is the weight of point j; the nearer point j is to keypoint i, the greater the weight. Similarly, in image I2, update the matching rate for keypoint a, which is the matched point of point i. The new matching rate is given by the following formula:

$$MR(a) = MR(a) + \sum_j w(j)\, m(j)$$

5) Whether the ratio MR(i)/MR(a) is within a certain threshold determines whether point i and point a are an exact match pair.
6) Change the neighborhood radius r and iterate the matching rate update until the condition established in advance is satisfied. In the experiment, we limit the number of iterations.
7) Take these match pairs as the RANSAC input and calculate the transformation matrix from image I1 to image I2.

The neighborhood radius r is essential to eliminating mismatches. On one hand, the smaller the value of r, the less global information is involved and the greater the possibility of a mismatch. On the other hand, the larger the value of r, the smaller the possibility of a mismatch, but correct keypoints around a mismatched point may also be removed by mistake because of its disturbance. Therefore a large r is suitable for texture-rich images and a smaller r for images with simple texture. In this article, the value of r is adapted according to the number of current matching keypoint pairs.

Besides removing mismatches, the proposed method is faster than the previous methods; the matching results of the proposed method and the image mosaic results are shown in Fig. 6 and Table 1. The experimental results show a significant improvement.

Fig. 5a: Image source 1

4. IMAGE FUSION

After image registration, image fusion is needed. In order to eliminate the splicing trace, this article uses the fade-in, fade-out idea: the linear weighted fusion algorithm is adopted to achieve a gradual transition from one image to the other in the overlapping image region.
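The fade-in, fade-out idea can be sketched in Python with NumPy as a per-column linear blend of the two overlapping strips. This is a minimal sketch and not the authors' MATLAB implementation: the function name `linear_blend` is made up for the example, the two strips are assumed to be already registered and of equal size, and the weight of the left image is assumed to fall linearly from 1 at the left edge of the overlap to 0 at the right edge. The same weights are applied to every color channel.

```python
import numpy as np

def linear_blend(left, right):
    """Blend two registered overlap strips of identical shape.

    left, right: arrays of shape (H, W) or (H, W, C). The weight of the left
    strip falls linearly from 1 to 0 across the W columns, and the right strip
    receives the complementary weight, so the result fades from one image
    into the other.
    """
    left = np.asarray(left, dtype=float)
    right = np.asarray(right, dtype=float)
    if left.shape != right.shape:
        raise ValueError("overlap strips must have the same shape")
    width = left.shape[1]
    weight = np.linspace(1.0, 0.0, width)              # one weight per column
    # Reshape so the weights broadcast over rows and (if present) channels.
    weight = weight.reshape((1, width) + (1,) * (left.ndim - 2))
    return weight * left + (1.0 - weight) * right

if __name__ == "__main__":
    # Two flat colour strips, 2 rows x 5 columns x 3 channels.
    left = np.full((2, 5, 3), 100.0)
    right = np.full((2, 5, 3), 200.0)
    print(linear_blend(left, right)[0, :, 0])
```

In a full mosaic the blended strip would replace the overlap region, with the non-overlapping parts of the left and right images copied unchanged on either side.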
Since the mosaic images in this article are colour images, the fusion cannot be as simple as grayscale fusion; each of the three colour components requires a separate linear transition, given by the equation below:

$$P(x, y) = w\, p_L(x, y) + (1 - w)\, p_R(x, y)$$

where $P(x, y)$ is the fused overlap section of the images, $p_L(x, y)$ and $p_R(x, y)$ are the overlapping parts of the left and right images respectively, and $w$ is the weighting factor. Suppose $w$ goes from 1 to 0 in steps of 1/t; the composite image then transits from the left image to the right one.

Fig. 5b: Image source 2

5. Experimental Results

The algorithm has been implemented in MATLAB 2011a, and the experiments were carried out on a laptop with an i3 processor and 4 GB of RAM. In order to verify the effectiveness of the matching algorithm, the experimental results of matching two images are given. The figures show the two images to be matched and the matching points detected by the SIFT and proposed methods. There are a couple of errors in the matching result of method 1 shown in Figures 5a and 5b. The improved SIFT matching algorithm eliminates almost all of the mismatches.

Fig. 6: Resultant mosaic image of two source images

Table 1: Mosaic result data

| Feature Matching | Matching Rate | Time Used |
| --- | --- | --- |
| General SIFT Matching | 94.37% | 3872 ms |
| Improved SIFT Matching | 98.54% | 4421 ms |

CONCLUSION: Since the traditional feature matching process almost ignores global information, we have proposed a mosaic algorithm based on SIFT. SIFT descriptors of keypoints have good properties: they are scale and rotation invariant and robust to noise, changes in illumination and changes in 3D camera viewpoint. In addition, the proposed algorithm enhances the matching accuracy by combining the nearest neighbor algorithm, neighborhood matching rate diffusion and the RANSAC algorithm. Experimental results show that the improved method is robust and performs effectively. The method can be applied to other SIFT variants.

REFERENCES
1. Jinpeng Wang, "Image Mosaicing Algorithm Based on Salient Region and MVSC," International Conference on Multimedia and Signal Processing, 2011.
2. Lindeberg T., "Scale-space theory: a basic tool for analysing structures at different scales," Journal of Applied Statistics, 1994, 21(2): 224-270.
3. Brown M. and Lowe D. G., "Invariant features from interest point groups," Proceedings of the British Machine Vision Conference, UK, British Machine Vision Association, 2002, pp. 656-665.
4. D. G. Lowe, "Distinctive image features from scale-invariant keypoints," International Journal of Computer Vision, 2004, 60(2): 91-110.
5. Zhen Hua and Yewei Li, "Image Stitch Algorithm Based on SIFT and MVSC," Seventh International Conference on Fuzzy Systems and Knowledge Discovery (FSKD 2010), 2010.
6. Brown M. and Lowe D. G., "Recognising panoramas," Proceedings of the 9th International Conference on Computer Vision (ICCV03), Nice, October 2003.
7. Krzysztof Slot and Hyongsuk Kim, "Key points derivation for object class detection with SIFT algorithm," Proceedings of the International Conference on Artificial Intelligence and Soft Computing, 2006: 850-859.

A. Ravindrakumar is working as Associate Professor at Kuppam Engineering College, Kuppam, Chittoor Dist. He received his B.Tech degree in Computer Science and Engineering (CSE) from Sri Krishnadevaraya College of Engineering, Ananthapuram, Andhra Pradesh, India, and his M.Tech degree in Computer Science Engineering from Dr. M.G.R University, Chennai, Tamil Nadu, India. E-mail: avula.ravindra1981@gmail.com

K. Jagannath is working as Associate Professor at Kuppam Engineering College, Kuppam, Chittoor Dist. He received his B.Tech degree in Information Technology (IT) from Kuppam Engineering College, Kuppam, Chittoor District, Andhra Pradesh, India, and his M.Tech degree in Computer Science Engineering from Dr. M.G.R University, Chennai, Tamil Nadu, India. E-mail: appu.gk@gmail.com

S. Balaji is working as Assistant Professor at Kuppam Engineering College, Kuppam, Chittoor Dist. He received his B.Tech degree in Information Technology (IT) from Kuppam Engineering College, Kuppam, Chittoor District, Andhra Pradesh, India, and his M.Tech degree in Computer Science Engineering from Dr. M.G.R University, Chennai, Tamil Nadu, India. E-mail: balajichetty6@gmail.com