

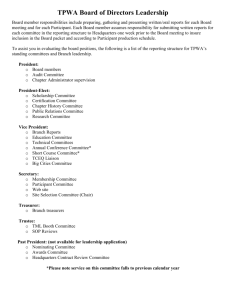

A case study of Lost Belief (2015) and the effectiveness of interactive documentaries

Emily Burcham

Abstract

This report looks into how effective interactive documentaries are on audiences. I compare two leading interactive documentaries, Fort McMoney and Hollow, to see how they gauged their audience impact. I also conduct user testing on my own interactive documentary via a sit-along observation, to see how effective Lost Belief is on a group from its target audience.

Communicating Research B1032563

Contents

Introduction
Literature Review
  o Fort McMoney
  o Hollow
Research methods
  o Sit-Along Observation
  o Screen Capture
  o Observation Form
  o Questionnaire
Discussion of findings
  o Screen Capture
  o Observation form
  o Questionnaire
Conclusion
Bibliography
Appendices

Introduction

The filmmaking industry is undergoing a revolution, with technology at the heart of it. The advent of new filmmaking methods is increasing possibilities and making audiences more involved in films: “technology has always changed the way we see and talk about the world” (Kopp, 2014). Interactive filmmaking is a growing industry, but I believe there isn’t enough research on how effective interactive films can actually be. “There is a totality of experience, which is of a different order to a linear documentary” (Perlmutter, 2014). Many filmmakers are apprehensive about adopting new technology, believing that it will kill traditional filmmaking. This view is most significant in the documentary filmmaking industry. Interactive documentaries, or i-docs, implement documentary films within an interactive user interface. There is a lot of debate around whether interactivity in filmmaking engages or disengages an audience. With this paper my aim is to add some clarity to that debate by looking at my own interactive documentary.
I want to see how my users interact with it in terms of its design and subject matter, looking into what kind of effect it has on people and finding out how similar i-docs achieve this. Lost Belief is based around the Pagan calendar, and one of its main aims is to change people’s perceptions of the religion. Gauging how well it impacts on people is therefore very important. I will do this by user testing Lost Belief on a group of people from my target audience, and then reflect on the results to see whether it has the intended impact. This will hopefully answer the question: how effective is my i-doc on its audience, and do its complexities affect the user’s immersion in the story?

I plan to look into how industry professionals judge the impact of their interactive documentaries: what techniques they have used, what outcomes they have derived, and whether they feel they benefited from being interactive or could have achieved the same impact with a conventional documentary. I will then conduct my own user testing based on this research, in the form of a sit-along observation and questionnaire. This information should aid my practice in making Lost Belief, giving me an insight into how well it functions and its effect on my audience.

Literature Review

An interactive documentary is “any project that starts with the intention to document the ‘real’ and that uses digital interactive technology to realise this intention” (Aston and Gaudenzi, 2012). The impact that i-docs have, and how people judge that impact, has always been disputed. Tom Perlmutter (2014) claims that “there’s a totality experience, which is of different order from linear docs”, saying that the i-doc “is becoming the artwork of our age” (Perlmutter, 2014). He alludes to the idea that interactive films are the next big thing to change the media world, as TV did to cinema and cinema did to theatre.
However, some filmmakers still have reservations about adopting digital media in films. Irene McGinn (2010) believes that “the types of narrative required for a satisfying game, versus a satisfying film, are too different for a complete and successful convergence to occur”. To many, interaction takes the engagement of the story away from the audience, as they are able to venture to other sections whenever they want, possibly missing parts of the story. With traditional linear documentaries the audience don’t get an option; they follow the story the filmmaker wants them to follow. A lot of filmmakers don’t want their audiences to have control over the story; they want them to view what the filmmaker wants them to view. There is also an issue from a commercial perspective: interactive films are limited in the way they are distributed, with the main platform being online. Financing an interactive project is therefore restricted, as many believe that interactive films won’t have the same scale of audience as a traditional documentary.

However, there are filmmakers like Ingrid Kopp (2014) who have moved into interactive documentary from a filmmaking background. She explains that as a filmmaker “we never really needed to think about what the audience was doing because we kind of knew what they were doing” (Kopp, 2014). With interactive design, however, the user’s engagement with the project is vital to its impact. “The user is actively affecting the reality of the interactive documentary while browsing it, but she is also affected by it” (Gaudenzi, 2009). Only the effect the i-doc has on the user can determine how effective the i-doc is. But how do we measure the extent to which people are affected?
Leading creative technologist Clint Beharry claims that “impact isn’t this number, in a way, it’s something you really have to dig into and study” (Power to the Pixel, 2014). His organisation, the Harmony Institute, uses many systematic methods to extract audience data, trying to understand how to structure a project effectively to appeal to certain audiences. However, from looking into existing i-docs to see how they determine their effectiveness, I found that the majority choose much simpler methods, such as user testing and social media.

Fort McMoney

A great example of an i-doc that has had enormous impact on its subject matter is Fort McMoney (Dufresne, 2015), an interactive documentary referred to as a docu-game (see appendix 1). It is set in Fort McMurray, home to one of the world’s largest oil reserves, and uses game mechanics to engage the user in debates around the social, economic and ethical issues raised within the community. “We wanted to put the whole community in the hands of the audience” (Power to the Pixel, 2015). This structure meant that they needed to measure the impact the i-doc had, as audience engagement was crucial to the success of the project: if people didn’t engage with it, there wouldn’t have been a project. The team designed Fort McMoney to have many social areas that stimulated their audience and influenced debates in different ways. This led to some staggering audience numbers: “2,000,000 page views, 615,000 visits, 412,000 players and 6500 comments” (Power to the Pixel, 2015). However, as David Dufresne said, “numbers alone aren’t enough to gauge the success of an interactive documentary” (Dufresne and Flynn, 2015). They used many diverse techniques to gain impact, such as utilising the press: “creators provided innovative content and newspapers drive audiences to the project” (Dufresne and Flynn, 2015). They initially partnered with news agencies to spread the word, which helped generate a debate around the i-doc.
During the production they set up some ‘game masters’ to encourage the audience: “those people were there to stimulate the debate” (Power to the Pixel, 2015). But the main method I was interested in, and have taken influence from, is how they used user testing to shape the impact of the i-doc. Dufresne states: “For me, user testing is asking not only ‘do you understand the story,’ but also ‘do you understand the experience?’” (Dufresne and Flynn, 2015). This interactive documentary “has the potential to create and sustain engaged communities or interests around issues and shared concerns” (Nash, 2015), which is something that I want to achieve with Lost Belief. I’ve taken guidance from the way they measured audience engagement via user testing.

Hollow

An interactive documentary that is structured along the same lines as Lost Belief is Hollow (see appendix 2). It tells the story of McDowell, a small county in America that is being deserted by its young people as they head off to find work in bigger cities, leading to the decline of the county’s economy. “There is so many things that we wanted to happen with Hollow, all these stories that we wanted to see change” (McMillion, 2014). The film lets the residents of McDowell County upload their personal stories and opinions, which are then put into the i-doc within a parallax scrolling structure, similar to how Lost Belief is structured. One of the main ways they managed to make an impact with the film was by associating it with news agencies and other relevant media: “no-ones just gonna stumble upon your project you have to put it out there and you have to put it in as many places as possible” (McMillion, 2014).
But even Elaine McMillion, the director/producer, admits that “In the case of Hollow, major infrastructural issues and health issues and educational issues can’t be changed over the course of one year” (Morowitz, 2015). They knew from the outset that this i-doc alone wasn’t going to change things overnight; change was going to come from spreading the message. “I don’t know if we ever really thought that all of our philosophy behind this would actually pay off, but we are seeing it through these peoples emails and tweets to us” (Astle et al., 2013). A lot of their judgement of their impact was based on the social media responses they received. This i-doc has really had an impact on the small community, and that is mainly seen not from user testing, statistics or gauging the opinions of a global audience, but from simply seeing the change in the community: “I measure more on the individual level and the impact that I’ve seen and heard I’ve heard from community members after the project” (Morowitz, 2015).

From this research I have found that the best way to build and measure an i-doc’s impact is to spread the word about it, utilise social media and user test it on its audience.

Research Methods

In order to gauge the effectiveness of Lost Belief, I user tested it on people who fit within my target audience. User testing is “evaluating a product or service by testing it with representative users” (Usability.gov, 2015). It is a crucial part of the production process in any interactive project; without good functionality, the project’s effectiveness on the audience is compromised. “When usability testing is a part of design and development, the knowledge we get about our users experience supports all aspects of design and development” (Barnum, 2011). Following the example of Fort McMoney and Hollow, I wanted to find out whether my audience understands the concept of Lost Belief and whether that concept was easy to understand.

Sit-Along Observation

I conducted my user testing via a sit-along observation so that I could gauge the emotional response my users had to the project; one of the benefits of this method is that you’re able to see their physical response. “Although it is possible to collect far more elaborate data, observing users is a quick way to obtain an objective view of a product” (Gomoll and Nicol, 1990). I also wanted to document how well they explored the project, looking at which navigation path they took, how much attention they gave each section and whether they enjoyed it. I did this by following the three main observational protocols: test monitoring, where I observed and recorded the participant’s behaviour; direct recording, where I recorded what the participant was doing; and thinking aloud, where I recorded what the participant was saying during the observation. “In the interest of making usable designs we observe, we don’t ask” (Nielsen and Pernice, 2011). I conducted myself in an unobtrusive manner so that participants could explore my i-doc on their own: “with unobtrusive observation you learn whether people can use your design in an easy and efficient way, and whether it is not the case” (D'Hertefelt, 1999). The way I conducted myself during the observation was vital, as I wanted my participants to feel as relaxed as possible in order to get the best results. “Getting this interaction right is essential to creating a positive experience for the participant and reliable results from the test” (Barnum, 2011).

Originally I set out to have around 10 participants; however, given the amount of data I would be gathering, I decided to cut this down to save time and focus more on each participant. I eventually had four participants, whom I found by sending out an email (see appendix 3) to people who fitted within my target audience of around 20-50 year olds with a sense of how to use technology.
This gave me a sense of how Lost Belief will be received by its general audience. However, there are some issues with user testing via observation, the main one being that you gain so much data that it can be hard to analyse. I feel I combated this issue by not having many participants and by setting the data out in a table.

Screen Capture

My plan was to record the user’s screen as they went through Lost Belief, to get data on which buttons they clicked, which navigation path they took and how much time they spent on certain sections. I would have liked uniform videos; however, because the software wasn’t available in the university, the sessions were all filmed in different locations, so they varied in quality. For some participants I had to film with a video camera instead of using screen capture software. This wasn’t ideal, as it was harder to keep them out of the footage and focus on the screen, but I made this clear to my users and they all seemed happy with this method. For a number of reasons, such as lack of memory or battery on the equipment, I wasn’t able to film all of the observation. Some participants used their own computers, so I managed to film the screen via their screen capture software. This wasn’t ideal either, and if I were to do this again I would be more organised: instead of letting my participants choose a time and place, I would allocate them a time and find a space suited to the observation.

Apart from this setback, I feel that the observations went well. They led to a number of changes throughout the testing, which meant that each participant tested a different version of Lost Belief and each observation was different. I feel this was better than testing the same version every time, as I could gauge their reactions to the changes as well as to the initial project.
I also plan to reference these videos in the development of Lost Belief, as this research has helped the production of my project. In all, this footage has been invaluable to me.

Observation Form

Whilst the participants tested Lost Belief, I filled out an observation form in order to record their physical reactions to the i-doc that weren’t being recorded on the screen. I noted data such as what questions they asked, whether they got stuck and whether there was anything they wanted to comment on. Once I had all this data I collated it into a table to clarify the results. I could then see what needed changing in my project for it to have better functionality and a better impact on its audience.

On the form I logged each participant’s age and what mood they were in when testing Lost Belief. I felt that their age was significant, as I wanted to see how people from different generations reacted to it. I only managed to get one participant over the age of 30, as some people had to drop out due to ill health. This still gave me a different perspective on the project, which is especially helpful as my target audience is mainly people in their late 20s and 30s. I would have liked more people within this bracket; however, the participants were mainly people I knew and so were in their early 20s. Their mood was also important to my study, as I wanted to see the feeling they got from Lost Belief, which could only be done through a sit-along observation. It gave me a good idea of just how much effect the i-doc had on their general temperament.

Questionnaire

Whilst the observation told me their responses and the screen capture told me how well they navigated through the project, I also wanted to know their opinion of it. So once each participant had gone through the i-doc, I asked them to fill out a questionnaire to gauge their comprehension of Lost Belief.
The questionnaire was mainly based on Likert scales (Likert, 1932), where the user is offered a set of answers on a scale of 1-5. “Participants don’t always like to state a negative opinion out-loud, but they are often willing to express one when faced with a ranking system” (Unger and Chandler, 2009). I used this method as it gave me a better perspective on what my participants thought about the project, whilst not asking them for overly complex answers. I kept it rather minimal, as I didn’t want to overwhelm my participants with questions when they had already spent time user testing the project. When constructing the questions I followed Bell’s advice to “go back to your hypothesis or to the objectives and decide which questions you need to ask to achieve those objectives” (Bell, 2010). The questions I put in the questionnaire were therefore based around the objectives I set out in my proposal. Following the Likert scale, I offered my participants a set of 1-5 options ranging from ‘nothing’ to ‘a lot’, to gauge their reactions in a way that wouldn’t intimidate them. I also gave them the option to express their opinions via a standard text box, in case they had more to say than could be expressed via the Likert scale.

Discussion of findings

I conducted my user testing and got some great data from all the methods I used: “combining two research methods provides a richer picture of the user than one method can provide on its own” (Unger and Chandler, 2009). Four participants took part, all of whom signed consent forms (see appendix 4); a few others dropped out due to ill health or time constraints. I made sure that they knew what was happening and how the user testing would affect my project. I also made sure they were comfortable taking part before proceeding.
They also knew that I would film them as they went through the i-doc whilst I filled out an observation form, and that once they had finished I would ask them to fill out a questionnaire based on their experience. With all my data collected, I began to determine what it all meant.

Screen Capture

Looking through all my footage (see appendix 5), I could see exactly how my users reacted to the project. Their responses to Lost Belief were generally quite positive, although the participants did point out quite a few flaws. I could see from the footage which areas they clicked without me guiding them. They often didn’t understand which way to go, or chose a section and didn’t know how to continue the story. Participant 1 decided to scroll down instead of using the navigation system. Participant 2 mainly clicked on the centre images, which took them to the videos, but couldn’t work out where the next pages were. Participant 3 got really stuck trying to navigate through the pages, going from Home to Yule to Spring but not to any other pages. Participant 4 had better luck navigating through all the pages but scrolled the majority of the time.

Apart from the fourth participant, they all chose Yule first, which is good as this is where I want them to start. “People think ‘topdown’, so to enhance interactivity, you should make navigation obvious, convenient and easy to use” (Le Peuple and Scane, 2003). In the western world we traditionally read an interface from left to right and from top to bottom, which is why I designed Lost Belief so that Yule would be first. I think this theory is somewhat supported by this user testing. Overall, though, I found that my navigation wasn’t really working and that I needed to make some serious changes.

Observation Form

With the footage not being of the best quality, I got the majority of my data from the observation form (see appendix 6). I also noted down which navigational path each participant took.
This was to see whether the participants could easily understand each part and whether they missed sections, which would interfere with their engagement. Participant 1 didn’t use my navigation much, which led to them missing parts depending on where they clicked. Because they scrolled, they also didn’t really focus much on the project. Participant 2 was a lot more engaged with the project but mainly liked the video elements: they clicked on them first but didn’t realise there was more than just videos. This meant that they missed a lot of the other sections, so I had to guide them to those parts. I did, however, get a very positive response from participant 2. After this I changed my navigation so that the buttons were a lot more prominent, and then tested the third participant. Even with these changes, they still didn’t understand the project. They found themselves getting stuck between the different sections and wanted to stop the testing halfway through, so they missed a large chunk of the i-doc. After this I made an introduction so the navigation was a bit clearer. Participant 4 found it a lot easier to move through but still had some slight trouble. They also clicked on the centre images first and scrolled instead of using the buttons, but managed to find and watch each section.

There were a few issues besides the navigation, however. For participant 1 the pages took a while to load, which meant that they got bored of waiting and scrolled past parts that hadn’t loaded in time. This meant that they didn’t engage with those parts, which are essential to understanding the pagan belief. So I added a page loader after this user test, in order to eliminate this issue for my second participant. Participant 2 struggled to read the text in some parts and didn’t realise they could scroll to other parts, because they went to the video sections first.
They also wanted more information on where the videos were filmed and didn’t realise the videos had ended, as they did so abruptly. After this I made the buttons clearer for my third participant; however, they were still confused by some of the images on the scrolling pages and didn’t like the way they appeared on the page. Participant 4 had less trouble, as I had added an introduction explaining the i-doc more; they found some text hard to read but in general enjoyed the piece.

There were also some good points that came from my user testing. Participant 1 enjoyed the illustrations and how they were animated. Participant 2 really enjoyed the films and was really engaged with the project in general; they said that they found the piece very informative. Participant 3 found my music quite calming and liked the incorporation of a Twitter feed. Participant 4 also enjoyed the videos and enjoyed reading about the rituals. So I feel every participant had a good reaction, but a lot of issues were found and some participants’ engagement varied due to these issues.

Questionnaire

I got a lot of information from the observation form, but I got even more from the questionnaires (see appendix 7). A main aim of Lost Belief is to educate its audience on the pagan belief, so one of the main questions I wanted to ask was how much my audience had learnt from the i-doc. Out of the four participants, two said they had learnt a lot, one said they had learnt a little and one said that they didn’t know. This is a very positive result to me. From this I can judge that Lost Belief has at least a little impact on my audience and their knowledge of paganism.

Following on from this, I wanted to know whether their perceptions of paganism had changed. Many still believe that paganism is a wicked culture of sacrifice and witchcraft, yet its true belief is simply a reverence for nature and all living things. Another main aim of Lost Belief is to dispel that misconception.
From the questionnaire I found that I had mixed results. One said their perceptions had changed a lot, one said a little, one said not sure and one said not a lot. From this I can’t really determine a result. I wish I had user tested more people, as this might have given me a more conclusive result, especially as this is a rather important question that I want an answer to. However, I also asked them to explain why their perception did or didn’t change. Two people said that they had an idea about paganism but had learnt more about it, one person hadn’t realised just how popular it is and one person thought it was cool. From this I gathered that Lost Belief did have some impact on their existing idea of the pagan religion and that they did find it fairly engaging.

The plan with Lost Belief is to create a social aspect within the community: a place for them to go and share their events and stories. I want the i-doc to be engaging to my audience, so that people accept the pagan belief and possibly partake in pagan activities. Asking ‘would you consider going to a pagan event?’ was therefore important to my user test. Three of my participants said that they would go to a pagan event, with only one saying that they wouldn’t. This is a great result, as it suggests that my i-doc is making people more aware of pagan events and that people are becoming more open-minded towards them. I would really love to see more people embracing this culture, and from these results it seems that the i-doc is helping to bring that about.

Another factor is how much they enjoyed Lost Belief. This is important to any project, but I also wanted to know which section they enjoyed the most, as this tells me which part of the i-doc my audience is going to engage with the most. It seems that everyone enjoyed my calming music. Some enjoyed the videos, some the animation, and some just enjoyed the whole thing.
This is a great result for me: knowing that people enjoy my piece suggests that it will be engaging for audiences, and makes all the effort worth it. I also asked them to leave any comments, in case they didn’t find it enjoyable or wanted to share any extra thoughts that weren’t covered by the questions. Some just added to what they thought was good about the project, with one stating that they would recommend it to others and found it a good educational tool. Others commented on what I could improve. This feedback was very beneficial, as it gave me the impression that people are not only engaging with it themselves but are also willing to actively help me improve it and spread the word about it.

Conclusion

From the user testing I have gathered that my navigation needs improving, but that overall the message is getting through to my audience. Although there were a few issues, the majority seemed engaged with the project, which means that my i-doc is having some effect on people. The sit-along observation and the questionnaire have been invaluable to my project, and I have learnt a great deal about just how effective Lost Belief is on its audience. If I were to do it again, however, I would organise my user testing more professionally. I would make sure all participants were tested in the same room, under the same conditions and with the same software.

This user testing has not only been a benefit to this module but has also helped my master’s degree. From the feedback I have gained, I have added an introduction to better explain my piece, and I have restructured my main navigation, simplifying it so that my users won’t miss certain sections and pieces of information. Without this user testing my project would be a very complex piece that I don’t believe would have engaged my audience half as much as I want.
But from people’s reactions I think I have put Lost Belief in a very positive position, and the new structure derived from the testing is going to make my project very effective on its audience.

Bibliography

Astle, R., Rizov, V., Poppy, T., Hemphill, J., Osenlund, R., Sanders, J., Harris, B. and Harris, B. (2013). Elaine McMillion and Jeff Soyk on Hollow | Filmmaker Magazine. [online] Filmmaker Magazine. Available at: http://filmmakermagazine.com/72963-elaine-mcmillion-and-jeff-soyk-on-hollow/ [Accessed 4 May 2015].

Aston, J. and Gaudenzi, S. (2012). Interactive documentary: setting the field. Studies in Documentary Film, [online] 6(2), pp.125-139. Available at: http://dx.doi.org.lcproxy.shu.ac.uk/10.1386/sdf.6.2.125_1 [Accessed 4 May 2015].

Barnum, C. (2011). Usability testing essentials: ready, set ... test!. Amsterdam; London: Morgan Kaufmann, pp.10, 12, 13, chapter 7.

Bell, J. (2010). Doing your research project. Open University Press, p.153.

Burcham, E. (2015). Lost Belief. [online] Lostbelief.co.uk. Available at: http://www.lostbelief.co.uk/ [Accessed 5 May 2015].

D'Hertefelt, S. (1999). Knowledge Base - Observation methods and tips for usability testing. [online] Users.skynet.be. Available at: http://users.skynet.be/fa250900/knowledge/article19991212shd.htm [Accessed 4 May 2015].

Dufresne, D. (2015). Fort McMoney. [online] Fortmcmoney.com. Available at: http://www.fortmcmoney.com/#/fortmcmoney [Accessed 4 May 2015].

Dufresne, D. and Flynn, S. (2015). Fort McMoney: Concept to Launch | MIT – Docubase. [online] Docubase.mit.edu. Available at: http://docubase.mit.edu/lab/case-studies/fort-mcmoney-conceptto-launch/ [Accessed 4 May 2015].

Gaudenzi, S. (2009). Chapter 4 – the Live documentary. 1st ed. [ebook] p.5. Available at: http://www.interactivedocumentary.net/wp-content/2009/02/Ch-4_Live-Doc_web-draft.pdf [Accessed 4 May 2015].

Gomoll, K. and Nicol, A. (1990). Performing Usability Studies. [online] Courses.cs.washington.edu.
Available at: http://courses.cs.washington.edu/courses/cse440/08au/readings_files/gomoll.html [Accessed 4 May 2015].

Kopp, I. (2014). Interactive Storytelling Adventures. [video] Available at: https://www.youtube.com/watch?v=SgyrmP3DtcQ [Accessed 4 May 2015].

Le Peuple, J. and Scane, R. (2003). User interface design. Exeter: Crucial, p.52.

Likert, R. (1932). A Technique for the Measurement of Attitudes. Archives of Psychology, 140, pp.1–55.

McGinn, I. (2010). The effects of new media on how film is produced and consumed. [Blog] Meta Pancakes. Available at: http://metapancakes.com/?p=94 [Accessed 4 May 2015].

McMillion, E. (2014). Hollow: Our Lessons Learned (Part 2). [video] Available at: https://www.youtube.com/watch?v=c-O8A7KwA1g [Accessed 4 May 2015].

McMillion, E. (2015). Hollow - an Interactive Documentary. [online] Hollow Interactive. Available at: http://hollowdocumentary.com/ [Accessed 4 May 2015].

Morowitz, M. (2015). Elaine Sheldon and Jeff Soyk discuss media impact and Hollow | The Ripple Effect. [online] Harmony-institute.org. Available at: http://harmonyinstitute.org/therippleeffect/2015/01/12/elaine-sheldon-and-jeff-soyk-discuss-media-impact-andhollow/ [Accessed 4 May 2015].

Nash, K. (2015). Clicking on the real: telling stories and engaging audiences through interactive documentaries. [Blog] The Impact Blog. Available at: http://blogs.lse.ac.uk/impactofsocialsciences/2014/04/15/clicking-on-the-real-participationinteraction-documentary/ [Accessed 4 May 2015].

Nielsen, J. and Pernice, K. (2011). Eyetracking web usability. Berkeley, California: New Riders, p.416.

Perlmutter, T. (2014). 2014 Sunny Lab Synthesis - The future of Interactive Documentary. [video] Available at: https://www.youtube.com/watch?v=kGWdSu8696Y [Accessed 4 May 2015].

Power to the Pixel, (2015). WE WERE IN FORT MCMURRAY LONG BEFORE LEONARDO DICAPRIO Raphaëlle Huysmans, TOXA. [video] Available at: https://vimeo.com/114006655 [Accessed 4 May 2015].

Power to the Pixel, (2014).
Optimising Stories for Future Impact by Clint Beharry, Senior Creative Technologist, Harmony Institute. [video] Available at: https://vimeo.com/78721531 [Accessed 4 May 2015].

Unger, R. and Chandler, C. (2009). A project guide to UX design: for user experience designers in the field or in the making. Berkeley, CA: New Riders, chapter 1, p.102.

Usability.gov, (2015). Usability Testing. [online] Available at: http://www.usability.gov/how-to-andtools/methods/usability-testing.html [Accessed 4 May 2015].

Appendices

1) Fort McMoney - http://www.fortmcmoney.com/#/fortmcmoney
2) Hollow - http://hollowdocumentary.com/
3) Email
4) Consent forms
5) Screen Captures
   01. https://www.youtube.com/watch?v=HfoPl2fqvPU
       https://www.youtube.com/watch?v=m1byq_VDVCs
   02. https://www.youtube.com/watch?v=uALRaFG5j5I
   03. https://www.youtube.com/watch?v=Je4GfJ0I5h8
   04. https://www.youtube.com/watch?v=d_sK2qH_qdQ
6) Observation Form
7) Questionnaires