Data Compression

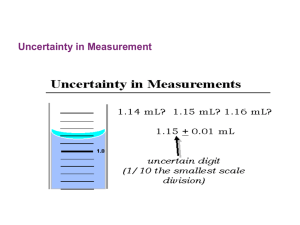

What I'm going to do in these talks is talk about data and how we, as computer scientists, deal with data, and this lecture will be about data compression. [Slide 1] But the overall issue is: how do we store data, how do we transmit data over computer networks, and how do we do this both reliably and efficiently? Reliably means we're worried about errors that might occur in storage, and we're worried about errors that might occur during the transmission of that information. And in the third lecture, we're worried about how we do that reliably in the sense that it's secure, so how we can protect our data. Of the three lectures, this one will be about how we compress data to get it down to, hopefully, the smallest number of, let's say, bits for either storage or transmission. One of the first distinctions we want to work with is that data is sort of the raw form, the bits and bytes, but what we're really interested in is information. We want to make a distinction between data and information because the key to compression will be how we can reduce the amount of raw data to a smaller amount that just contains the information that we need. And what I won't talk about, but I should at least mention, is that there's another level, there's really a fourth part, where we say, well, what about working with large distributed databases? How do you deal with things that are stored in multiple places, like when you back up your information in the cloud, or when you have a very large database and multiple people are working on it? [Slide 2] The first part is this idea about what information is, and that information is not the same as raw data. A standard example is the weather, and the idea here is that we get the most information when something happens that we can't predict and don't expect. So here's a good day: I'm sitting here in my office in New Mexico, it is a beautiful day outside, and I look out and say, "Oh gee, that's a nice day."
But it's almost always a nice day here. If I tell you it's a nice day in New Mexico, you're really not getting very much information out of that. But if I say "Oh, it's snowing today," then you get a fair amount of information out of that, or if I say something that's even less likely, like "There's a tornado outside," then you get a tremendous amount of information out of that. How can we use that basic notion that information is somehow coupled to our expectation, or the probability that something is going to happen? Let's look at a very, very simple example [Slide 3] where we have just 2 things we're trying to, say, transmit. Say I have a very simple transmitter and all it does is every once in a while send out a 0 or a 1. The 0 says "Oh, it's sunny outside," or if it's at night, at least that it's clear, and the 1 says it's cloudy or it's not sunny. You'd expect over time to see a long sequence that would have mostly zeros in it, and occasionally there would be a one occurring, which would say "Okay, nice day, nice day, nice day, nice day." And then "Oh, not a nice day, nice day, nice day" and so on. We say, well, if I'm going to send that information, I'm sort of wasting a lot of the bandwidth, or if I'm going to store it, I'm wasting a lot of storage by just storing a lot of zeros and an occasional one. Notice that this example is almost identical to what happens when you try to fax a standard page of text: the page is mostly white and occasionally there's black that corresponds to the text. If I were to digitize that page at a very high resolution, which is what you do in your fax machine (typically a fax machine might digitize this at 300 or 600 bits per inch), and zero stood for white and one for black, then I would have a long, long sequence that was mostly white with only a little black, and I would be mostly sending or saving zeros.
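
The link between probability and information can be made concrete with the standard measure from information theory, which the lecture does not spell out: an event with probability p carries -log2(p) bits. The sketch below is only illustrative. The function names and every probability in it (90% nice days, and so on) are assumptions, not measurements and not numbers from the slides.

```python
import math


def surprisal_bits(p):
    """Information, in bits, carried by an event of probability p."""
    return -math.log2(p)


def entropy_bits(probs):
    """Average information per symbol for a source with these probabilities."""
    return sum(p * surprisal_bits(p) for p in probs if p > 0)


# Assumed, purely illustrative probabilities for the weather reports.
weather = {"nice day": 0.90, "snow": 0.09, "tornado": 0.01}
for report, p in weather.items():
    print(f"{report}: {surprisal_bits(p):.2f} bits")

# The 0/1 transmitter, assuming 90% sunny (0) and 10% not sunny (1).
print(f"average per reading: {entropy_bits([0.9, 0.1]):.2f} bits")
```

Under these assumed numbers, the rare reports carry many more bits than "nice day", and the sunny/cloudy transmitter averages well under one bit of information per reading. That is the sense in which sending one raw bit per reading wastes bandwidth.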
One very simple way to go about it, just to give you some feeling for this, is to go back and look at the sequence up here and [Slide 4] say "What if I rewrite it?" This technique is called run-length coding, where what we say is: we know there are mostly zeros, so why don't I just tell you how many zeros there are, and then there's a one, and then how many more zeros there are. I could rewrite it, at least in English, as 13 zeros followed by a one, 13 more zeros followed by another one, and then 6 more zeros. I could encode that in a very simple way by just saying 13 13 6, because I know that each run ends when there's a one. And if there were 2 ones in a row, say 13 zeros followed by 2 ones, I could just do 13 0 13 6 and so on. If I then take these decimal representations and encode them in binary, I have a very, very compact representation. For example, if I use base 16 (remember we talked about representing numbers in different bases), that means you'd get 4 bits for each of the run lengths. Then I'd need 4 bits for the first 13, 4 bits for the second 13 and 4 bits for the 6, so I'd be sending 12 bits instead of the 34 bits in the original sequence of zeros and ones. In fact that's the basis of how fax transmission works: what you use is a little more sophisticated, but it's still the idea of run-length coding, and the information is in where the ones are; there's essentially no information in those zeros. In fact the first example of that that was widely used [Slide 5] was Morse code. Morse code was dots and dashes, where what they really meant was short and long, when somebody was sitting there at the telegraph, pushing down on the telegraph key. Because the lines were very, very slow in that day, and you also had people who had very limited speed at which to transmit, what they did was try to encode the ...
well, first the alphabet into sequences of dashes and dots, or equivalently zeros and ones, where the most probable letters were encoded with the shortest messages. You see that the E there is encoded as just a dot. And let's look at something else that's fairly probable: the T, which is probably the second most probable letter in the alphabet, was a single dash, so you can think of one as being a zero and the other as being a one. And the least likely things that you would see, for example an X, were encoded with 4 bits, or dash, dot, dot, dash. What you see in that picture is what's called the International Morse Code. [Slide 6] Now how that's used in practice is, as we've said, fax machines. There is something called Huffman coding which solves that problem mathematically correctly. Well, what that says is ... let's generalize this a little bit and say we had a bunch of symbols. For example [Slide 5] in Morse code, here we have a whole bunch of symbols that we want to transmit, and we would start off by first trying to figure out what the probability of each one was, and then figure out what's the best code for that set of symbols with the probabilities that I measured [Slide 6]. Huffman solved that problem quite a while ago, and there's a very nice algorithm for coding that, and that's the basis of the zip file. Most of you use zip files to transmit things, and a zip file is something that uses a dynamic Huffman code. But the real compression occurs in things like images, and we're going to be talking about that in a minute or so. But with TIFF images, which some of you may have seen, one form that TIFF images are routinely stored in uses Huffman coding.
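
The two lossless codes described so far, run-length coding and Huffman coding, can each be sketched in a few lines of Python. This is a minimal illustration, not how fax machines or zip files actually implement them. The function names are hypothetical, the 4-bit packing assumes every run is at most 15 zeros long, and the letter weights are made-up numbers rather than measured English frequencies.

```python
import heapq
from itertools import count


def zero_runs(bits):
    """Run-length view of a bit string: the length of each run of zeros,
    where every run except the last is terminated by a single '1'."""
    return [len(run) for run in bits.split("1")]


def pack_runs(runs, width=4):
    """Write each run length as a fixed-width binary number."""
    if any(n >= 2 ** width for n in runs):
        raise ValueError("run too long for the chosen width")
    return "".join(format(n, f"0{width}b") for n in runs)


def huffman_code(weights):
    """Build a prefix code that gives heavier symbols shorter codewords."""
    tiebreak = count()  # keeps heap entries comparable when weights are equal
    heap = [(w, next(tiebreak), {sym: ""}) for sym, w in weights.items()]
    heapq.heapify(heap)
    while len(heap) > 1:
        w0, _, left = heapq.heappop(heap)   # the two least likely groups
        w1, _, right = heapq.heappop(heap)
        merged = {s: "0" + c for s, c in left.items()}
        merged.update({s: "1" + c for s, c in right.items()})
        heapq.heappush(heap, (w0 + w1, next(tiebreak), merged))
    return heap[0][2]


# The lecture's sequence: 13 zeros, a one, 13 zeros, a one, 6 zeros.
sequence = "0" * 13 + "1" + "0" * 13 + "1" + "0" * 6
runs = zero_runs(sequence)
packed = pack_runs(runs)
print(len(sequence), runs, len(packed))  # 34 [13, 13, 6] 12

# Two ones in a row show up as a run of length zero.
print(zero_runs("0" * 13 + "11" + "0" * 13 + "1" + "0" * 6))  # [13, 0, 13, 6]

# Made-up weights: common letters should end up with short codewords.
code = huffman_code({"E": 12, "T": 9, "A": 8, "Q": 1, "X": 1})
for letter in sorted(code, key=lambda s: len(code[s])):
    print(letter, code[letter])
```

Decoding the run-length form is the reverse step: `"1".join("0" * n for n in runs)` rebuilds the original bit string. In the Huffman part, no codeword is a prefix of another, so a stream of codewords can be split back into letters without separators, which Morse code needs pauses to do.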
But if you look just at Huffman coding and all you’re going to do is the standard alphanumeric characters, or the ASCII characters, you’ll get a little compression but not really the kind of dramatic compression you’d like. What really happens is this: start looking at English words and sort of playing a game where you try to guess the word given the first couple of letters, or do as people like me, the sight readers, do, where we just figure out what the word is most of the time by looking at the first few letters. You notice that if you have a T, well, seeing an H next is a fairly high-probability event, but a T followed by a Q has essentially no probability of happening in English, and so you say “Well, why don’t I encode pairs?” And then you could argue I want to encode triplets and get a better code, and in fact that’s where the real compression would come if you were trying to compress text. But let’s talk about images, because images are larger: a typical color image might be 3 or 4 megabytes of raw data, and I’d really want to compress those kinds of data. I’m going to talk about single images first, but notice, and we’ll get to this at the end, that most of the compression, and that’s what you see for example in television now that it has gone digital, actually happens because things don’t change much frame to frame. But let’s at least start by talking about single images. [Slide 7] The idea is that there’s a lot of redundancy. Well, redundancy is the same as this problem of saying it’s a nice day, it’s a nice day, it’s a nice day.
It’s pretty much the same, and we see that in images, as we said, when we go frame to frame, or if you look at the background: if you take a picture of a person, you may put the person against a white or simple background, and nothing much changes in the background, but you’re interested in the stuff where it’s changing, where the person is. And the second thing is whether you do a perfect reconstruction or not. If I take data and I use this idea that there’s redundancy to get a better code, and the code takes out that redundancy, then I get a perfect reconstruction, and I might want to do that if I’m, say, encoding a book, where I might want to get all the words exactly right. But if you’re dealing with an image, you can deal with small errors in the reconstruction because your eye is not really going to pick them up, and if you allow for some loss of data, you can have a much, much more efficient algorithm. If you look at the ones [Slide 8] that you will probably see if you use the web, there are a couple that are fairly standard. A GIF image, what it does is reduce the number of colors, saying “Look, if the colors don’t change very much [inaudible 00:12:18] going to pick them up, so if I can use just a reduced number of colors and then recolor the image that you give me, and use a table to say ‘Well, at this pixel, this is the pointer, the index into the table for that color,’ I’ll get an image that works in most applications.” Certainly not all, but in most applications, especially where you’re not trying to do, say, a photograph and you’re just trying to get some colored information out there, GIF images can give you a tremendous amount of reduction. JPEG is the one that is fairly standard for doing single images, and what that one does is divide the image up into small blocks, like 16x16 blocks, and then try to compress each block by using actually 2 schemes.
One is to try and just find the most important parts of that image and allow a little bit of loss, but second, it actually uses a run-length code to encode that reduced image, and as you’ll see in the next example, you can get a lot of compression with JPEG. And MPEG is really based on JPEG, but it adds frame-to-frame compression, and as I’ve said, in a general sequence of images, when you’re watching television or a movie, you don’t see much change going from frame to frame. Now one way you might notice JPEG and MPEG is if you’re watching, say, a movie from a DVD, or if you’re streaming a movie, and there’s some sort of a small error: you’ll see a little block up here on the image where there’s been an error and a mistake has been made, but that doesn’t occur very often. Or sometimes you can notice it on MPEG images where you have a sequence in a movie that’s at night and you’re watching it from a DVD: if you have a very gray background, you’ll see little blocks of gray rather than seeing it as being smooth. But again it’s an acceptable image for your eye. And to see what happens once we allow a little compression, look at this example [Slide 9] here. On the left is a TIFF image, and there are a couple of forms of TIFF. This is a TIFF that was Huffman coded and has no compression at all, so we can say that’s our starting image, and I think it was something like 512 by 512. And B there is a JPEG image compressed by a factor of 18, and the one on the right is a JPEG image compressed by a factor of 37, and I think you’d be pretty hard pressed to see visually the differences between even the image on the right that was compressed by a factor of 37 and the original image on the left. And that is true for most images that we deal with. [Slide 10] Is there a limit?
Well, the limit generally is … memory used to be the limit when memory was expensive and computers didn’t have much memory. That’s less of an issue than it was before because you have things that are now measured in gigabytes and terabytes and you don’t worry too much about single images. The real issue is sending things over the internet, because of the rate at which you can send things over the internet reliably, which is what we’re going to talk about in the next segment ...