docx - Tsinghua Math Camp 2015

advertisement

Probability & Statistics

Professor Wei Zhu

July 24th

(I)

The sum of two normal random variables may not be

normal.

Counter example: 𝑍~𝑁(0,1), and the value of random variable W depends on a

𝑍, 𝑖𝑓 ℎ𝑒𝑎𝑑

coin (fair coin) flip: 𝑊 = {

, please

−𝑍, 𝑖𝑓 𝑡𝑎𝑖𝑙

(a) Find the distribution of W.

(b) Is the joint distribution of Z and W a bivariate normal?

(c) Is W+Z normal?

Answer:

(a)

𝐹𝑊 (𝑤) = 𝑃(𝑊 ≤ 𝑤)

= 𝑃(𝑊 ≤ 𝑤|𝐻)𝑃(𝐻) + 𝑃(𝑊 ≤ 𝑤|𝑇)𝑃(𝑇)

= 𝑃(𝑍 ≤ 𝑤)𝑃(𝐻) + 𝑃(−𝑍 ≤ 𝑤)𝑃(𝑇)

1

1

= 𝐹𝑍 (𝑤) × + 𝑃(𝑍 ≥ −𝑤) ×

2

2

1

1

2

2

= 𝐹𝑍 (𝑤) × + 𝑃(𝑍 ≤ 𝑤) ×

So 𝑊~𝑁(0,1).

1

1

= 𝐹𝑍 (𝑤) × + 𝐹𝑍 (𝑤) ×

2

2

= 𝐹𝑍 (𝑤)

(b)

𝑃(𝑊 + 𝑍 = 0) = 𝑃(𝑊 + 𝑍 = 0|𝐻)𝑃(𝐻) + 𝑃(𝑊 + 𝑍 = 0|𝑇)𝑃(𝑇)

1

1 1

= 1× +0× =

2

2 2

Therefore, 𝑊 + 𝑍 is not a normal random variable.

(c)

The joint distribution of Z and W is not bivariate normal. This can be shown by

deriving the joint mgf of Z and W and compare it with the joint mgf of bivariate

normal (which we already knew, from the previous lecture).

𝑀(𝑡1 , 𝑡2 ) = 𝐸(𝑒 𝑡1 𝑍+𝑡2 𝑊 )

= 𝐸(𝐸(𝑒 𝑡1 𝑍+𝑡2 𝑊 |𝑜𝑢𝑡𝑐𝑜𝑚𝑒 𝑜𝑓 𝑐𝑜𝑖𝑛 𝑓𝑙𝑖𝑝))

= 𝐸(𝑒 𝑡1 𝑍+𝑡2 𝑊 |𝐻)𝑃(𝐻) + 𝐸(𝑒 𝑡1 𝑍+𝑡2 𝑊 |𝑇)𝑃(𝑇)

1

= 𝐸(𝑒 (𝑡1 +𝑡2 )𝑍 ) ×

1

1

+ 𝐸(𝑒 (𝑡1 −𝑡2 )𝑍 ) ×

2

2

(𝑡1 −𝑡2 )2

1 (𝑡1 +𝑡2 )2

2

= (𝑒

+𝑒 2 )

2

This is not the joint mgf of bivariate normal. Therefore the joint distribution of

𝑊 𝑎𝑛𝑑 𝑍 is not bivariate normal.

(II)

Other Common Univariate Distributions

Dear students, besides the Normal, Bernoulli and Binomial distributions, the

following distributions are also very important in our studies.

1. Discrete Distributions

1.1. Geometric distribution

This is a discrete waiting time distribution. Suppose a sequence of independent

Bernoulli trials is performed and let X be the number of failures preceding the

first success. Then 𝑋~𝐺𝑒𝑜𝑚(𝑝), with the pdf

𝑓(𝑥) = 𝑃(𝑋 = 𝑥) = 𝑝(1 − 𝑝)𝑥 , 𝑥 = 0,1,2, …

1.2. Negative Binomial distribution

Suppose a sequence of independent Bernoulli trials is conducted. If X is the

number of failures preceding the nth success, then X has a negative binomial

distribution.

Probability density function:

𝑓(𝑥) = 𝑃(𝑋 = 𝑥) =

(𝑛 + 𝑥 − 1)

⏟ 𝑛−1

𝑥

𝑝𝑛 (1 − 𝑝) ,

ways of allocating

failures and successes

𝑛(1 − 𝑝)

,

𝑝

𝑛(1 − 𝑝)

𝑉𝑎𝑟(𝑋) =

,

𝑝2

𝑛

𝑝

(𝑡)

𝑀𝑥

=[

] .

1 − 𝑒 𝑡 (1 − 𝑝)

𝐸(𝑋) =

1. If n = 1, we obtain the geometric distribution.

2. Also seen to arise as sum of n independent geometric variables.

2

1.3. Poisson distribution

Parameter: rate 𝜆 > 0

MGF: 𝑀𝑥 (𝑡) = 𝑒 𝜆(𝑒

𝑡 −1)

Probability density function:

𝒇(𝒙) = 𝑷(𝑿 = 𝒙) =

𝒆−𝝀 𝝀𝒙

, 𝒙 = 𝟎, 𝟏, 𝟐, …

𝒙!

1. The Poisson distribution arises as the distribution for the number of “point

events” observed from a Poisson process.

Examples:

Figure 1: Poisson Example

2. The Poisson distribution also arises as the limiting form of the binomial

distribution:

𝑛 → ∞,

𝑛𝑝 → 𝜆

𝑝→0

The derivation of the Poisson distribution (via the binomial) is underpinned by a

Poisson process i.e., a point process on [0, ∞); see Figure 1.

AXIOMS for a Poisson process of rate λ > 0 are:

(A) The number of occurrences in disjoint intervals are independent.

(B) Probability of 1 or more occurrences in any sub-interval [𝑡, 𝑡 + h) is 𝜆h +

𝑜(h) (h → 0) (approx prob. is equal to length of interval 𝑥𝜆).

(C) Probability of more than one occurrence in [𝑡, 𝑡 + h) is 𝑜(h) (h → 0) (i.e.

prob is small, negligible).

Note: 𝑜(h), pronounced (small order h) is standard notation for any function

𝑟(ℎ) with the property:

𝑟(ℎ)

=0

ℎ→0 ℎ

𝑙𝑖𝑚

3

1.4. Hypergeometric distribution

Consider an urn containing M black and N white balls. Suppose n balls are

sampled randomly without replacement and let X be the number of black balls

chosen. Then X has a hypergeometric distribution.

Parameters:

𝑀, 𝑁 > 0, 0 < 𝑛 ≤ 𝑀 + 𝑁

Possible values: 𝑚𝑎𝑥 (0, 𝑛 − 𝑁) ≤ 𝑥 ≤ 𝑚𝑖𝑛 (𝑛, 𝑀)

Probability density function:

𝑴) ( 𝑵 )

𝒇(𝒙) = 𝑷(𝑿 = 𝒙) = 𝒙 𝒏 − 𝒙 ,

(𝑴 + 𝑵)

𝒏

𝑀

𝑀 + 𝑁 − 𝑛 𝑛𝑀𝑁

𝐸(𝑋) = 𝑛

,

𝑉𝑎𝑟(𝑋) =

.

𝑀+𝑁

𝑀 + 𝑁 − 1 (𝑀 + 𝑁)2

(

The mgf exists, but there is no useful expression available.

1. The hypergeometric distribution is simply

# samples with x black balls

# possible samples

𝑀

𝑁

( )(

)

= 𝑥 𝑛−𝑥 ,

𝑀+𝑁

(

)

𝑛

2. To see how the limits arise, observe we must have x ≤ n (i.e., no more than

sample size of black balls in the sample.) Also, x ≤ M, i.e., x ≤ min (𝑛, 𝑀).

Similarly, we must have x ≥ 0 (i.e., cannot have < 0 black balls in sample), and

𝑛 − 𝑥 ≤ 𝑁 (i.e., cannot have more white balls than number in urn).

i.e. 𝑥 ≥ 𝑛 − 𝑁

i.e. 𝑥 ≥ max (0, 𝑛 − 𝑁).

𝑀

3. If we sample with replacement, we would get 𝑋 ∼ 𝐵 (𝑛, 𝑝 = 𝑀+𝑁). It is

interesting to compare moments:

4

finite population correction

hypergeometric

𝐸(𝑋) = 𝑛𝑝

binomial

𝐸(𝑥) = 𝑛𝑝

↑

𝑀+𝑁−n

𝑉𝑎𝑟(𝑋) =

[𝑛𝑝(1 − 𝑝)]

𝑀+𝑁−1

𝑉𝑎𝑟(𝑋) = 𝑛𝑝(1 − 𝑝)

↓

When sample all

balls in urn 𝑉𝑎𝑟(𝑋)~0

2. Continuous Distributions

2.1 Uniform Distribution

CDF, for a < 𝑥 < 𝑏:

𝑥

𝑥

𝐹(𝑥) = ∫ 𝑓(𝑥)𝑑𝑥 = ∫

−∞

𝑎

1

𝑥−𝑎

𝑑𝑥 =

,

𝑏−𝑎

𝑏−𝑎

that is,

0

𝑥−𝑎

𝐹(𝑥) = {

𝑏−𝑎

1

𝑀𝑋 (𝑡) =

𝑥≤𝑎

𝑎<𝑥<𝑏

𝑏≤𝑥

𝑒 𝑡𝑏 − 𝑒 𝑡𝑎

𝑡(𝑏 − 𝑎)

A special case is the 𝑼(𝟎, 𝟏) distribution:

𝒇(𝒙) = {

𝟏, 𝟎 < 𝑥 < 1

𝟎, 𝐨𝐭𝐡𝐞𝐫𝐰𝐢𝐬𝐞,

𝐹(𝑥) = 𝑥 for 0 < 𝑥 < 1

1

𝐸(𝑋) = ,

2

𝑉𝑎𝑟(𝑋) =

1

,

12

𝑀(𝑡) =

𝑒𝑡 − 1

.

𝑡

2.2 Exponential Distribution

PDF: 𝒇(𝒙) = 𝝀𝒆−𝝀𝒙 , 𝒙 ≥ 𝟎

CDF: 𝑭(𝒙) = 𝟏 − 𝒆−𝝀𝒙 ,

𝑀𝑋 (𝑡) =

𝜆

, 𝜆 > 0, 𝑥 ≥ 0.

𝜆−𝑡

This is the distribution for the waiting time until the first occurrence in a Poisson

process with rate parameter 𝜆 > 0.

5

Exercise: In a Poisson process with rate 𝜆, the inter-arrival time (time between

two adjacent events) follows i.i.d. exponential(𝜆).

1. If 𝑋~𝐸𝑥𝑝(𝜆) then,

𝑷(𝑿 ≥ 𝒕 + 𝒙|𝑿 ≥ 𝒕) = 𝑷(𝑿 ≥ 𝒙)

(memoryless property)

2. It can be obtained as limiting form of geometric distribution.

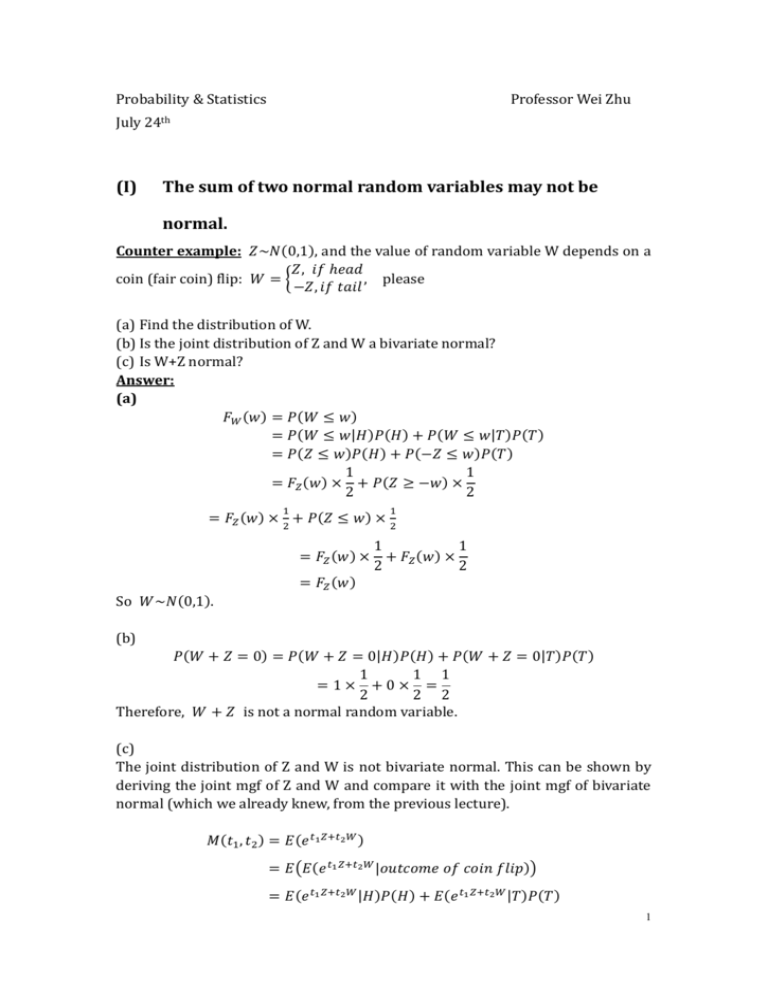

2.3 Gamma distribution

𝒇(𝒙) =

𝝀𝜶

𝜞(𝜶)

𝒙𝜶−𝟏 𝒆−𝝀𝒙 , 𝜶 > 0, 𝜆 > 0, 𝑥 ≥ 0

With

∞

gamma function: 𝛤(𝛼) = ∫0 𝑡 𝛼−1 𝑒 −𝑡 𝑑𝑡

𝜆

𝛼

mgf : 𝑀𝑋 (𝑡) = (𝜆−𝑡) , 𝑡 < 𝜆.

Suppose Y1 , . . . , Y𝐾 are independent Exp(λ) random variables and let

X = Y1 + ⋯ + Y𝐾 .

Then 𝑋 ∼ Gamma(𝐾, 𝜆), for 𝐾 integer. In general, 𝑋 ∼ Gamma(α, λ), α > 0.

1. α is the shape parameter,

𝜆 is the scale parameter

𝑌

Note: if 𝑌 ∼ Gamma (𝛼, 1) and 𝑋 = 𝜆, then 𝑋 ∼ Gamma(α, λ), That is, λ is

scale parameter.

Figure 2: Gamma Distribution

𝑝 1

2. Gamma ( 2 , 2) distribution is also called χ2𝑝 (chi-square with 𝑝 df)

distribution

6

if 𝑝 is integer;

χ22 = exponential distribution (for 2 df).

3. Gamma (𝐾, 𝜆) distribution can be interpreted as the waiting time until the

𝐾 𝑡ℎ occurrence in a Poisson process.

2.4 Beta density function

Suppose Y1 ∼ Gamma (𝛼, 𝜆), 𝑌2 ∼ Gamma (β, λ) independently, then,

𝑌1

X=

~𝐵(𝛼, 𝛽), 0 ≤ 𝑥 ≤ 1.

𝑌1 + 𝑌2

Remark: see soon for derivation!

2.5 Standard Cauchy distribution

Possible values: 𝑥 ∈ 𝑅

1

1

1

1

2

𝜋

PDF: 𝑓(𝑥) = 𝜋 (1+𝑥2 ) ; (location parameter 𝜃 = 0)

CDF: 𝐹(𝑥) = + arctan𝑥

𝐸(𝑋), 𝑉𝑎𝑟(𝑋), 𝑀𝑋 (𝑡) do not exist.

The Cauchy is a bell-shaped distribution symmetric about zero for which no

moments are defined.

If

𝑍1 ∼ 𝑁(0, 1)

𝑍1

~Cauthy distribution.

𝑍2

and

𝑍2 ∼ 𝑁(0, 1)

(III) Let’s Have Some Fun!

independently,

then

𝑋=

The Post Office

A post office has two clerks, Jack and Jill. It is known that their service times

are two independent exponential random variables with the same parameter

λ, and it is known that Jack spends on the average 10 minutes with each

customer. Suppose Jack and Jill are each serving one customer at the moment,

(a) What is the distribution of the waiting time for the next customer

in line?

7

(b) What is the probability that the next customer in line will be the

last customer (among the two customers being served and

him/herself) to leave the post office?

Solution:

(a) Let X1 and X2 denote the service time for Jack and Jill, respectively. Then the

waiting time for the next customer in line is:

Y=min (X1,X2), where Xi ~ iid exp(λ), i=1,2.

Furthermore, E(X1)=1/λ=10, λ=1/10. From the definition of exponential R.V.,

f X i ( xi ) e xi , xi>0, i=1,2 and P(Xi>x)= f xi (u )du = e xi , xi>0, i=1,2.

x

FY(y)=P(Yy)=1-P(Y>y)=1-P(X1>y,X2>y)=1-P(X1>y)·P(X2>y)=1- (e-λy)2=1- e-2λy

Therefore fY(y)=

d

FY(y)=(2λ) e-(2λ) y, y>0. Thus Y ~ exp (2λ), where λ=1/10.

dy

Note: Because of the memoryless property of the exponential distribution, it

does not matter how long the current customers has been served by Jack or

Jill.

(b) One of the two customers being served right now will leave first. Now

between the customer who is still being served and the next customer in line,

their service time would follow the same exponential distribution because of

the memoryless property of the exponential random variable. Therefore, each

would have the same chance to finish first or last. That is, the next customer

in line will be the last customer to leave with probability 0.5.

Exercise.

1.

Let X be a random variable following the Bernoulli distribution with success

probability p. Please

(1) Write down the probability density function of X,

(2) Derive its moment generating function,

(3) Derive the moment generating function of a B(n,p) (Binomial with

parameters n and p) random variable.

(4) Derive that the summation of n i.i.d Bernoulli (p) random variables would

follow B(n,p).

2.

Let X~N(μ1 , σ12 ), and Y~N(μ2 , σ22 ). Furthermore, X and Y are independent

to each other. Please derive the distribution of

(1) X+Y

(2) X-Y

(3) 2X-3Y+5

8

Solutions:

Q1.

(1) f(x) = px(1-p)1-x, x= 0 or 1.

(2) 𝑀𝑋 (t) = E(𝑒 𝑡𝑋 ) = 𝑒 0∙𝑡 𝑃(𝑋 = 0) + 𝑒 1∙𝑡 𝑃(𝑋 = 1) = 1 − 𝑝 + 𝑝𝑒 𝑡

(3) Assume Y ~ B(n,p)

𝑛

𝑛

𝑘=0

𝑘=0

𝑛

𝑀𝑌 (t) = E(𝑒 𝑡𝑌 ) = ∑ 𝑒 𝑡𝑘 𝑃(Y = 𝑘) = ∑ 𝑒 𝑡𝑘 ( ) 𝑝𝑘 (1 − 𝑝)𝑛−𝑘

𝑘

𝑛

𝑛

= ∑ ( ) (𝑝𝑒 𝑡 )𝑘 (1 − 𝑝)𝑛−𝑘 = (1 − 𝑝 + 𝑝𝑒 𝑡 )𝑛

𝑘

𝑘=0

(4). Assume 𝑋1 , 𝑋2 , … , 𝑋𝑛 ~𝐵𝑒𝑟𝑛𝑜𝑢𝑙𝑙𝑖(𝑝) i. i. d

Let Y = ∑𝑛𝑖=1 𝑋𝑖

𝑛

𝑀𝑌 (t) = E(𝑒 𝑡𝑌 ) = E(𝑒 𝑡 ∑𝑖=1 𝑋𝑖 )

𝑛

= ∏ E(𝑒 𝑡𝑋𝑖 ) = [E(𝑒 𝑡𝑋1 )]𝑛 (since 𝑋𝑖 𝑎𝑟𝑒 i. i. d. )

𝑖=1

= (1 − 𝑝 + 𝑝𝑒 𝑡 )𝑛 (from conclusion of part (1))

Since the probability distribution can be identified by mgf, by comparing the results

of part (3) and (4), we conclude that the sum of n i.i.d Bernoulli(p) random variables

would follow B(n, p).

Q2

If Z ~ N (μ, σ2)

∞

𝑀𝑍 (t) = E(𝑒

𝑡𝑍 )

=∫ 𝑒

−∞

𝑡𝑧

1

√2𝜋𝜎

𝑒

−

(z−μ)2

2𝜎2 𝑑𝑧

∞

= ∫

1

−∞ √2𝜋𝜎

∞

[𝑧−(μ+𝜎

1

1

−

2𝜎2

= exp (𝜇𝑡 + 𝜎 2 𝑡 2 ) ∫

𝑒

2

−∞ √2𝜋𝜎

𝑒

−

1

[𝑧 2 −(2μ+2𝜎2 𝑡)+𝜇 2 ]

2𝜎2

𝑑𝑧

2 𝑡)]2

𝑑𝑧

1

1

= exp (𝜇𝑡 + 𝜎 2 𝑡 2 ) ∙ 1 = exp (𝜇𝑡 + 𝜎 2 𝑡 2 )

2

2

where 𝑓(x) =

1

√2𝜋𝜎

2

𝑒

−

[𝑧−(μ+𝜎2 𝑡)]

2𝜎2

𝑖𝑠 𝑡ℎ𝑒 𝑝𝑑𝑓 𝑜𝑓 𝑁(μ + 𝜎 2 𝑡, 𝜎 2 )

Then, we have

1

𝑀𝑋 (t) = exp (𝜇1 𝑡 + 𝜎12 𝑡 2 )

2

1

𝑀𝑌 (t) = exp (𝜇2 𝑡 + 𝜎22 𝑡 2 )

2

Since X and Y are independent, by theorem, we have

(1) 𝑀𝑋+Y (t) = 𝑀𝑋 (t)𝑀Y (t)

9

1

= exp [(𝜇1 + 𝜇2 )t + (𝜎12 + 𝜎22 )𝑡 2 ]

2

⇒ 𝑋 + Y ~ N(𝜇1 + 𝜇2 , 𝜎12 + 𝜎22 )

(2) 𝑀𝑋−Y (t) = 𝑀𝑋 (t)𝑀Y (−t)

1

= exp [(𝜇1 − 𝜇2 )t + (𝜎12 + 𝜎22 )𝑡 2 ]

2

2

2

⇒ 𝑋 − Y ~ N(𝜇1 − 𝜇2 , 𝜎1 + 𝜎2 )

(3) 𝑀2𝑋−3Y+5 (t) = 𝑀𝑋 (2t)𝑀Y (−3t) ∙ 𝑒 5𝑡

1

= exp [(2𝜇1 − 3𝜇2 + 5)t + (4𝜎12 + 9𝜎22 )𝑡 2 ]

2

⇒ 2𝑋 − 3Y + 5 ~ N(2𝜇1 − 3𝜇2 + 5, 4𝜎12 + 9𝜎22 )

10