Appendix

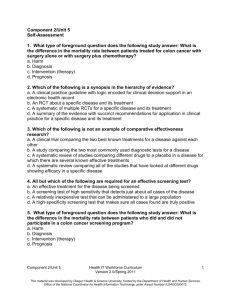

Datasets

All four synopses were formed using a seeding PubMed search (Appendix Figure 1) that returned publications up to their inception date; thereafter a maintenance search strategy was used. The seeding search is more specific, whereas the maintenance search is more sensitive. This is for practical reasons: using the maintenance search strategy during database inception would retrieve an unmanageably large number of articles to be screened. When continuously monitoring the literature, however, it is feasible to use a very sensitive strategy. The maintenance search strategy has remained constant for all four databases.

Detailed Methods

We view the citation screening problem as a specific instance of the text classification problem, the aim of which is to induce a model capable of automatically categorizing text documents into one of k categories. Spam filters for e-mail are common examples of text classifiers: they automatically designate incoming e-mails as spam or not.

To use a text classification model, one must first encode documents into a vector space so that they are intelligible to the model. We make use of the standard Bag-of-Words (BoW) encoding scheme, in which each document is mapped to a vector whose ith entry is 1 if word i is present in the document and 0 otherwise. We map the titles, abstracts and MeSH terms of a given document into separate BoW representations (the last might be called a Bag-of-MeSH-terms representation). These mappings are referred to as feature spaces.

For our base classifier, we use the Support Vector Machine (SVM) [1]. SVMs have been shown empirically to be particularly adept at text classification [2]. Briefly, SVMs work by finding a hyperplane (i.e., a high-dimensional generalization of a line) that separates instances (documents) from the respective classes with the maximum possible margin. The intuition behind the SVM approach is illustrated in Appendix Figure 2, which shows a simplified, fictitious 2-dimensional classification problem comprising two classes: the plusses (+) and the minuses (-). There are infinitely many lines that separate the classes; one of them is shown on the left-hand side of the figure. The SVM selects the separating line in a principled way: it chooses the line that maximizes the distance between itself and the nearest members of the respective classes; this distance is referred to as the margin. In light of this strategy, the separating line the SVM would select in our example is shown on the right-hand side of the figure. In practice, this line is found by optimizing an objective function that expresses this max-margin principle.

There are a few properties that make the citation classification problem unique from a data mining/machine learning perspective. First, there is severe class imbalance, i.e., there are far fewer relevant than irrelevant citations.
This can pose problems for machine learning algorithms. Second, false negatives (relevant citations misclassified as irrelevant) are costlier than false positives (irrelevant citations misclassified as relevant), and we therefore want to emphasize sensitivity (recall) over specificity. Accordingly, we have tuned our model to this end.

In particular, we first build an ensemble of three classifiers, one for each of the three aforementioned feature spaces. Each of these classifiers is trained with a modified SVM objective function that emphasizes sensitivity by penalizing the model less heavily for misclassifying negative (irrelevant) examples during training. When a prediction for a given document is made, we follow a simple disjunction rule to aggregate predictions: if any of the three classifiers predicts relevant, then we predict that the document is relevant; only when there is unanimous agreement that the document is irrelevant do we exclude it.

To further increase sensitivity, we build a committee of these ensembles to reduce the variance caused by the sampling scheme we use. More specifically, we undersample the majority class of irrelevant instances before training the classifiers, i.e., we discard citations designated as irrelevant by the reviewer at random until the number of irrelevant documents in the training set is equal to the number of relevant documents. This simple strategy for dealing with class imbalance has been shown empirically to work well, particularly in terms of improving the induced classifiers’ sensitivity [4-7]. Because this introduces randomness (the particular majority instances that are discarded are selected at random), we build a committee of these classifiers and take a majority vote to make a final prediction. This ensemble strategy is known as bagging [7,8] and is an extension of bootstrapping to the case of predictive models [8]. Bagging reduces the variance of predictors.
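
The scheme described above (one classifier per feature space, a disjunction rule within each ensemble, random undersampling of irrelevant citations, and a majority vote over a committee) can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' implementation: all function names, the toy documents, the penalty `neg_cost=0.5`, the regularization constant `lam`, and the Pegasos-style subgradient training loop without a bias term are hypothetical choices made for this sketch, since the text does not specify the solver or the penalty values.

```python
import random

FIELDS = ("title", "abstract", "mesh")  # the three feature spaces

def bow(text):
    """Binary bag-of-words: the set of distinct lowercased tokens."""
    return frozenset(text.lower().split())

def train_svm(X, y, neg_cost=0.5, lam=0.1, epochs=20):
    """Linear SVM via Pegasos-style subgradient steps on a weighted hinge loss.

    X: list of token sets; y: list of +1 / -1 labels. Margin violations on
    negative examples are scaled by neg_cost < 1 (hypothetical value), so
    misclassifying irrelevant documents is penalized less. No bias term.
    """
    w, t = {}, 0
    for _ in range(epochs):
        for tokens, label in zip(X, y):
            t += 1
            margin = label * sum(w.get(tok, 0.0) for tok in tokens)
            # L2 shrinkage: (1 - eta * lam) with step size eta = 1 / (lam * t)
            w = {tok: v * (1.0 - 1.0 / t) for tok, v in w.items()}
            if margin < 1.0:
                cost = 1.0 if label > 0 else neg_cost
                for tok in tokens:
                    w[tok] = w.get(tok, 0.0) + cost * label / (lam * t)
    return w

def undersample(docs, labels, rng):
    """Keep every relevant doc plus an equally sized random subset of irrelevant ones."""
    pos = [d for d, rel in zip(docs, labels) if rel]
    neg = [d for d, rel in zip(docs, labels) if not rel]
    return pos + rng.sample(neg, len(pos)), [True] * len(pos) + [False] * len(pos)

def train_member(docs, labels):
    """One ensemble: a separate SVM for each feature space."""
    y = [1 if rel else -1 for rel in labels]
    return {f: train_svm([bow(d[f]) for d in docs], y) for f in FIELDS}

def member_predicts_relevant(member, doc):
    """Disjunction rule: relevant if any of the three classifiers says so."""
    return any(
        sum(member[f].get(tok, 0.0) for tok in bow(doc[f])) > 0.0
        for f in FIELDS
    )

def train_committee(docs, labels, size=11, seed=0):
    """Train `size` ensembles, each on its own balanced undersample (odd size avoids ties)."""
    rng = random.Random(seed)
    return [train_member(*undersample(docs, labels, rng)) for _ in range(size)]

def committee_predicts_relevant(committee, doc):
    """Majority vote over the committee members."""
    votes = sum(member_predicts_relevant(m, doc) for m in committee)
    return votes > len(committee) / 2

# Toy, made-up citations: two relevant, four irrelevant (class imbalance).
docs = [
    {"title": "randomized trial aspirin", "abstract": "patients received aspirin", "mesh": "humans aspirin"},
    {"title": "statin trial outcomes", "abstract": "patients received statin", "mesh": "humans statins"},
    {"title": "mouse knockout model", "abstract": "mice lacking gene", "mesh": "animals mice"},
    {"title": "zebrafish embryo imaging", "abstract": "embryos imaged daily", "mesh": "animals zebrafish"},
    {"title": "yeast genetics screen", "abstract": "strains grown overnight", "mesh": "fungi yeasts"},
    {"title": "rat maze behavior", "abstract": "rats explored maze", "mesh": "animals rats"},
]
labels = [True, True, False, False, False, False]
committee = train_committee(docs, labels)

new_relevant = {"title": "trial of warfarin", "abstract": "patients took warfarin", "mesh": "humans warfarin"}
new_irrelevant = {"title": "mouse imaging atlas", "abstract": "mice imaged weekly", "mesh": "animals mice"}
mixed = {"title": "mouse model", "abstract": "mice", "mesh": "humans"}

print(committee_predicts_relevant(committee, new_relevant))    # True
print(committee_predicts_relevant(committee, new_irrelevant))  # False
print(committee_predicts_relevant(committee, mixed))           # True
```

The `mixed` document shows how the disjunction rule favors sensitivity: its title and abstract look irrelevant, but the MeSH classifier alone is enough to retain it for manual screening.
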
We have found that bagging classifiers induced over balanced bootstrap samples works well in the case of class imbalance, consistent with previous evidence that this strategy is effective [4,5], and have proposed an explanation based on a probabilistic argument [10]. Specifically, for our task we induce an ensemble of 11 base classifiers over corresponding balanced (i.e., undersampled) bootstrap samples from the original training data. The final classification decision is taken as a majority vote over this committee. We chose an odd number (n=11) to avoid ties. The exact number is arbitrary, but has worked well in previous work [4,5]. Each base classifier is itself an aggregate prediction (a Boolean OR; i.e., each base classifier predicts that a document is relevant if any of its members does) over three separate classifiers induced, respectively, over different feature-space representations (titles, abstracts, MeSH terms) of the documents included in independently drawn balanced bootstrap samples. For a schematic depiction of this, see the Figure in the main manuscript.

Relation to previous work

There has been a good deal of research in the machine learning and medical informatics communities investigating techniques for semi-automating citation screening [4-7, 11-18]. These works have largely been ‘proof-of-concept’ endeavors that have explored the feasibility of automatic classification for the citation screening task (with promising results). By contrast, the present work looks to apply our existing classification methodology prospectively, to demonstrate its utility in reducing the burden on reviewers updating existing systematic reviews.

Most similar to our work here, Cohen et al. conducted a prospective evaluation of a classification system for supporting the systematic review process [14]. Rather than semi-automating the screening process, the authors advocated using data mining for work prioritization.
More specifically, they induced a model to rank the retrieved set of potentially relevant citations in order of their likelihood of being included in the review. In this way, reviewers would screen the citations that are most likely to be included first, thereby discovering the relevant literature earlier in the review process than they would have had they screened the citations in a random order. Note that in this scenario, reviewers still ultimately screen all of the retrieved citations. This differs from our aim here, as we prospectively evaluate a system that automatically excludes irrelevant literature, i.e., reduces the number of abstracts the reviewers must screen for a systematic review.

References

1. Vapnik VN. The nature of statistical learning theory. Springer Verlag; 2000.
2. Joachims T. Text categorization with support vector machines: Learning with many relevant features. ECML. Springer; 1998:137-142.
3. Van Hulse J, Khoshgoftaar TM, Napolitano A. Experimental perspectives on learning from imbalanced data. ACM; 2007:935-942.
4. Small KM, Wallace BC, Brodley CE, Trikalinos TA. The constrained weight space SVM: Learning with labeled features. International Conference on Machine Learning (ICML). 2011.
5. Wallace BC, Small KM, Brodley CE, Trikalinos TA. Active learning for biomedical citation screening. Knowledge Discovery and Data Mining (KDD). 2010.
6. Wallace BC, Small KM, Brodley CE, Trikalinos TA. Modeling annotation time to reduce workload in comparative effectiveness reviews. Proc ACM International Health Informatics Symposium (IHI). 2010.
7. Wallace BC, Small KM, Brodley CE, Trikalinos TA. Who should label what? Instance allocation in multiple expert active learning. Proc SIAM International Conference on Data Mining. 2011.
8. Breiman L. Bagging predictors. Machine Learning. 1996:123-140.
9. Kang P, Cho S. EUS SVMs: ensemble of under-sampled SVMs for data imbalance problems. Neural Information Processing (NIPS). 2006:837-846.
10. Wallace BC, Small K, Brodley CE, Trikalinos TA. Class Imbalance, Redux. In Proc. of the International Conference on Data Mining (ICDM). 2011.
11. Bekhuis T, Demner-Fushman D. Towards automating the initial screening phase of a systematic review. Stud Health Technol Inform. 2010;160(Pt 1):146-50.
12. Cohen AM, Hersh WR, Peterson K, Yen PY. Reducing workload in systematic review preparation using automated citation classification. J Am Med Inform Assoc. 2006;13:206-219.
13. Cohen AM, Ambert K, McDonagh M. Cross-topic learning for work prioritization in systematic review creation and update. J Am Med Inform Assoc. 2009; Erratum in: J Am Med Inform Assoc. 2009;16(6):898.
14. Cohen AM, Ambert K, McDonagh M. A Prospective Evaluation of an Automated Classification System to Support Evidence-based Medicine and Systematic Review. AMIA Annu Symp Proc. 2010.
15. Frunza O, Inkpen D, Matwin S, Klement W, O'Blenis P. Exploiting the systematic review protocol for classification of medical abstracts. Artif Intell Med. 2011;51(1):17-25.
16. Matwin S, Kouznetsov A, Inkpen D, Frunza O, O'Blenis P. A new algorithm for reducing the workload of experts in performing systematic reviews. J Am Med Inform Assoc. 2010;17(4):446-53.
17. Polavarapu N, Navathe SB, Ramnarayanan R, ul Haque A, Sahay S, Liu Y. Investigation into biomedical literature classification using support vector machines. Proc IEEE Comput Syst Bioinform Conf. 2005:366-74.
18. Yu W, Clyne M, Dolan SM, Yesupriya A, Wulf A, Liu T, Khoury MJ, Gwinn M. GAPscreener: an automatic tool for screening human genetic association literature in PubMed using the support vector machine technique. BMC Bioinformatics. 2008;9:205.