DDI Toolkit - Kelly High School

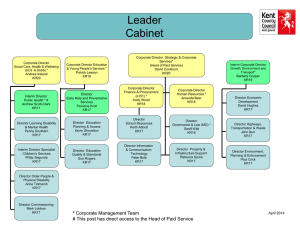

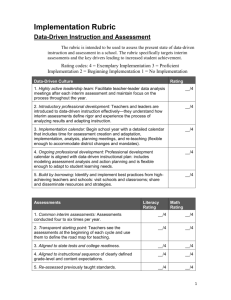

Adapted for the Area 25 Assessment Project

Data-Driven Instruction: A Tool Kit
High School Interim Assessment Project in Chicago Public Schools 2008-2009

High School Interim Assessment Project in Chicago Public Schools 2009-10

Table of Contents

Title (Page*)
Tool 1 – Assessing your use of the DDI Implementation Rubric: 5
Tool 2 – Guiding Questions for Completing the DDI Rubric: 6, DW 16-17, 18, 21
Tool 3 – Creating a DDI Planning Calendar: 10, DW 17
Tool 4 – Developing an Entry Plan: 11
Tool 5 – Predicting Task: 13
Tool 6 – Using a “Test-in-Hand” Analysis: 1, DW 20, 85
Tool 7 – Conducting a “Results Meeting”: 20, DW 25
Tool 8 – Developing the Re-teaching Lesson Plan: 22, DW 19, 25
Tool 9 – Creating a Student Prescription Plan: 24
Tool 10 – Bottom Line Results Workshop: 25, DW 25, 90
Tool 11 – Conducting a Walkthrough: 26
Tool 12 – Engaging in Student Reflection: 27

* The first page number is from the tool kit.
Any numbers marked DW are page numbers from the book “Data Wise,” edited by Kathryn Parker Boudett, Elizabeth A. City, and Richard J. Murnane, Harvard Education Press, Cambridge, Massachusetts (2008).

Introduction
Data-Driven Instruction (DDI): A High School Model
HS Interim Assessment Project in Chicago Public Schools ’08-’09

Data-Driven Instruction (DDI) is part of a coherent school improvement strategy. It is based on patterns of practice and action in urban public schools in the United States. It is also supported by the Urban Excellence Framework, a report authored by New Leaders for New Schools that describes key insights about school practices, principal actions, tools and processes, and the leadership needed to support schools in making dramatic gains.

The purpose of this Tool Kit is to provide DDI focus high schools with prototype tools that can be used to support their use of interim assessments as part of a comprehensive strategy for improvement.

The Components of Data-Driven Instruction

Data-Driven Culture: Leadership team, Vision, Calendar, Professional development, Transparency
Assessments (Literacy and Math): Common interim assessments, Defined standards, Aligned assessments, Standards re-assessed, Wrong answers/written responses
Analysis: Immediate assessment results; item-level analysis, standards-level analysis, and student analysis, as well as bottom-line results; Teacher-owned analysis; Test-in-hand analysis; Deep analysis
Action: New lessons; Explicit lesson plans/units with ongoing assessment; Reviewed lesson plans and implementation; Accountability; Engaged students

A Summary of the Data-Driven Instruction Process

1. A Leadership Team needs to be in place to begin the Data-Driven Instruction (DDI) process.
2. Ask each person on your leadership team to individually assess your school’s current level of implementation of DDI using the Data-Driven Instruction Implementation Rubric – see Tool 1.
3. Use the Guiding Questions for Completing the DDI Implementation Rubric with the leadership team to generate a consensus rating of your school’s current data-driven instruction process. In rating yourself, you may want to use the question “What evidence would we need to see if we were proficient in this indicator of data-driven instruction?” – see Tool 2.
4. Create a DDI Planning Calendar that can be shared with all staff prior to the beginning of school – see Tool 3.
5. Develop an Entry Plan prior to the beginning of the year, which includes leadership training, vision, professional development, assessment, and action – see Tool 4.
6. Review assessments and items created by the Interim Assessment Project in Chicago Public Schools to finalize common assessments that are related to standards and aligned with difficulty.
7. Follow the Planning Calendar and Entry Plan as the school year begins.
8. Ask teachers in the assessed areas to complete the Prediction Task – see Tool 5.
9. When teachers receive the data report for their classes, they should analyze their data using the Test-in-Hand Analysis – see Tool 6.
10. Schedule a Results Meeting for teacher teams to analyze data and reach consensus on the best actions – see Tool 7.
11. Work with staff to create Re-teaching Lesson Plans for immediate action in their classes and place them on the Planning Calendar – see Tool 8.
12. Create ways to engage students in improving their own learning. An individual Prescription Sheet can be used with support staff and students – see Tool 9.
13. Finish the school year with a Bottom-Line Results analysis and suggestions for next year’s Entry Plan – see Tool 10.
14. Use the Conducting a Walk-through Tool to help structure a classroom observation using a Re-teaching Lesson Plan – see Tool 11.
15. Engage students in an analysis of their own results using the Student Reflection sheet – see Tool 12.
16. Celebrate the great work of your staff – no tools needed!

Data-Driven Culture – Tool 1
Data-Driven Instruction (DDI)
Assessing your use of the DDI Implementation Rubric

The purpose of this tool is to provide a rubric that can be used to assess your school’s current state of data-driven instruction. The rubric specifically targets interim assessments and the key drivers leading to increased student achievement.

Instructions: Each member of the school’s leadership team should rate the indicators of data-driven instruction at their school. After the individual ratings are complete, use Tool 2 to create a consensus around the school’s implementation of DDI.

Rating scale: 4 = Exemplary Implementation, 3 = Proficient Implementation, 2 = Beginning Implementation, 1 = No Implementation

Data-Driven Culture
1. Leadership Team trained and highly active in all stages of the process ____ /4
2. Vision established by school leadership and repeated relentlessly throughout the year ____ /4
3. School year began with a detailed Calendar that includes time for: assessment creation/adaptation, implementation, analysis, team meetings, and re-teaching (flexible enough to accommodate district changes/mandates) ____ /4
4. Professional development aligned with data-driven instructional plan ____ /4
5. Transparency achieved by teachers seeing the assessments at the beginning of each cycle ____ /4

Assessments (Literacy and Math)
1. Common interim assessments administered 4-5 times per year: Lit. ____ /4, Math ____ /4
2. Defined standards aligned to the state test and college-ready expectations: Lit. ____ /4, Math ____ /4
3. Aligned assessments to instructional sequence of clearly-defined grade level/content expectations: Lit. ____ /4, Math ____ /4
4. Previous standards re-assessed: Lit. ____ /4, Math ____ /4
5. Wrong answers/written responses illuminate student misunderstandings: Lit. ____ /4, Math ____ /4

Analysis
1. Immediate turnaround of assessment results (ideally 48 hours, 1 week maximum) ____ /4
2. User-friendly, succinct data reports include: item-level analysis, standards-level analysis, and student analysis, as well as bottom-line results ____ /4
3. Teacher-owned analysis ____ /4
4. Test-in-hand analysis between teacher(s) and instructional leader ____ /4
5. Deep analysis which moves beyond “what” students got wrong and answers “why” they got it wrong ____ /4

Action
1. New lessons collaboratively planned based on data analysis ____ /4
2. Teacher lesson plans/units explicitly included dates, times, standards, specific strategies, and ongoing assessment ____ /4
3. Instructional leaders reviewed lesson plans and implementation for evidence of re-teaching and re-assessing ____ /4
4. Accountability created by observing changes in teaching and in-class assignments that are aligned with the Planning Calendar and teacher lesson plans ____ /4
5. Engaged students know the end goal, how they did, and what actions they are taking to improve ____ /4

TOTAL: ____ /100

Created by New Leaders for New Schools and Paul Bambrick-Santoyo, June 11, 2007

Data-Driven Culture – Tool 2
Data-Driven Instruction (DDI)
Guiding Questions for Completing the DDI Rubric

The purpose of these guiding questions is to provide your school with a tool to use when completing the DDI Implementation Rubric. Accuracy is essential for identifying school needs and prioritizing them. Additionally, by aligning resources with the key drivers on the rubric and assessing the school over time, school staff will be able to determine how successful this process has been.

Instructions: This document provides guiding questions for a school to use when completing the DDI Implementation Rubric (Tool 1).
It is not necessary to ask all questions, but following the general outline of questions will help to ensure consistency. It is important for the rubric to be completed in collaboration with the Principal and DDI Leadership Team.

Culture

1. DDI Leadership Team trained and highly active in all stages of the process
   Do you have a leadership team in place?
   What is the makeup of the team, and how much support do they need in content and data/assessment?
   Does the team use the same words? In other words, do they know the vision and the rationale for why the school is using interim assessments to drive instruction?
   Did the team help design/adapt the assessments?
   If the assessments have been provided, has the team seen them? Have they taken the assessments?

2. Vision established by school leadership and repeated relentlessly throughout the year
   Does the staff know that being data driven is highly valued at the school leadership level?
   If someone were to stop five teachers in the hall and ask what the leadership at their school values most, what would they say?
   Does the staff know why the leadership believes this is a strong lever to drive achievement gains?
   Does the staff know how they will be involved with the work during the year?
   Does the staff know how the professional development for the year aligns to the data-driven instruction initiative?

3. School year began with a detailed Calendar that includes time for: assessment creation/adaptation, implementation, analysis, team meetings, and re-teaching (flexible enough to accommodate district changes/mandates)
   Did a calendar exist last year in your school?
   Was the calendar shared with all teachers?
   Do you have a calendar for the coming year?

4. Professional Development aligned with data-driven instructional plan
   Is there a launch of the data-driven model, including messaging?
   Is there a PD plan for training the leadership team on how to do the work?
   Is there training for teachers in analysis and in planning based on data analysis?
   Does the PD plan for the year match root causes of school needs?
   Does the PD plan accommodate the findings of the interim assessment data analysis?

5. Transparency achieved by teachers seeing the assessments at the beginning of each cycle
   Simple yes or no?
   If tests are secure, do you have steps to achieve the same thing as transparency?

Assessments (Literacy and Math)

1. Common interim assessments administered 4–5 times per year
   Simple yes or no?
   Note: “common” refers to the same grade level/content area administering the same interim assessments.

2. Defined standards aligned to the state test and college-ready expectations
   Do your interim assessments mirror the state test in content, rigor, and format (including length)?
   If the students are successful with the interim assessments, will they be on track to be successful in college-level work?

3. Aligned assessments to instructional sequence of clearly-defined grade level/content expectations
   Are the standards easily understood and applied by teachers? If not, are documents and trainings in place that will ensure standards are understood?
   Are the assessments aligned with the instructional sequence?
   Will this sequence equal success on the standards by the end of the school year?

4. Previous standards re-assessed
   Simple yes or no?
   How is the reassessment of standards determined? Is it random?
   Are the most important standards reassessed, or are the reassessment questions determined by the items missed on an earlier interim?

5. Wrong answers/written responses illuminate student misunderstandings
   On multiple-choice items, do the wrong answers reflect the most common student misunderstandings?
   On written answers, does the rubric enable you to score the student responses and determine instructional steps?

Analysis

1. Immediate turnaround of assessment results (ideally 48 hours, 1 week maximum)
   Simple yes or no?
   If there is extensive writing involved in student responses, how long would it take you to score them?
   Are the written answers being graded collaboratively to ensure consistency?

2. User-friendly, succinct data reports include: item-level analysis, standards-level analysis, and student analysis, as well as bottom-line results
   Do the reports provide information at the most discrete level of the standard?
   Do the reports provide information from three perspectives: comparison to standard, self, and others?
   Do the reports include a distracter (wrong answer) analysis?
   If there is an abundance of data reports, are they being prioritized for importance so as not to overwhelm the teachers?

3. Teacher-owned analysis
   Who does the analysis of the data?
   Who draws the conclusions from the data? Are teachers led to discover the conclusions?
   Does the analysis result in the identification of key standards for re-teaching?
   Are teachers skilled in analyzing data, and if not, is their capacity being enhanced?

4. Test-in-hand analysis between teacher(s) and instructional leader
   When the analysis is done, is the test in front of people?
   Are the instructional leaders directly involved with the analysis?

5. Deep analysis which moves beyond “what” students got wrong and answers “why” they got it wrong
   When analyzing data, does the analysis stop at what students got wrong, or does it push further to try to determine the misunderstanding?
   Are there distracter analysis documents available (documents that indicate the possible misunderstanding if the answer was chosen)? Did teachers create these?

Action

1. New lessons collaboratively planned based on data analysis
   Is there a structure in the school for collaborative planning (is there time built in)?
   Do content/grade level teams plan together?
   Is a leader involved with the planning?
   Are the plans directly tied to the analysis of the interim assessments?

2. Teacher lesson plans/units explicitly include dates, times, standards, specific strategies, and ongoing assessment
   When planning based on the data, are the teachers explicit?
   Is there a consistent format that is used by the teachers for planning that includes these aspects?

3. Instructional leaders reviewed lesson plans and implementation for evidence of re-teaching and re-assessing
   Are the instructional leaders directly involved in the planning process?
   Do the instructional leaders understand the difference between re-teaching and re-assessing instruction?

4. Accountability created by observing changes in teaching and in-class assignments that are aligned with the DDI Planning Calendar and teacher lesson plans
   Does the principal follow up with observations in classrooms after the planning?
   Do the classroom observations demonstrate a closing of the cycle that started with the interim assessment, continued through analysis and planning, and returned to teaching?
   Are the re-teaching efforts and instructional strategies different, or just more of the same?

5. Engaged students know the end goal, how they are doing, and what actions they are taking to improve
   Is the school involving the students in the tracking of their academic growth?
   Are the students engaged in the learning directly tied to the interim assessments?
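The rubric that these guiding questions support (Tool 1) comes down to simple arithmetic: 25 ratings on a 1 to 4 scale (five each for Culture, Analysis, and Action, plus five Literacy and five Math ratings for Assessments), for a maximum of 100. A leadership team that collects its individual ratings electronically could tally them along the lines of the minimal Python sketch below. The function names and the dictionary layout are hypothetical, and the list of indicators where members disagree is only an illustrative aid for the consensus discussion, not something the tool kit prescribes.

```python
# Number of indicators per rubric section; Assessments is rated twice (Lit. and Math).
SECTIONS = {
    "culture": 5,
    "assessments_literacy": 5,
    "assessments_math": 5,
    "analysis": 5,
    "action": 5,
}


def rubric_total(ratings):
    """Sum one person's ratings (section name -> list of 1-4 scores); maximum is 100."""
    total = 0
    for section, count in SECTIONS.items():
        scores = ratings[section]
        if len(scores) != count or any(s not in (1, 2, 3, 4) for s in scores):
            raise ValueError(f"{section}: expected {count} ratings between 1 and 4")
        total += sum(scores)
    return total


def rating_gaps(team_ratings, section):
    """List (indicator number, lowest, highest) where team members rated differently."""
    per_indicator = zip(*(member[section] for member in team_ratings))
    return [
        (number, min(scores), max(scores))
        for number, scores in enumerate(per_indicator, start=1)
        if min(scores) != max(scores)
    ]


if __name__ == "__main__":
    member_a = {section: [3] * 5 for section in SECTIONS}
    member_b = dict(member_a, culture=[3, 2, 4, 3, 3])
    print(rubric_total(member_a))
    print(rating_gaps([member_a, member_b], "culture"))
```

Indicators returned by `rating_gaps` are natural places to ask the Tool 2 question about what evidence the team would need to see; the consensus rating itself still comes from discussion, not from averaging.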
Data and Culture – Tool 3
Data-Driven Instruction (DDI)
Creating a DDI Planning Calendar

School Name: ______________________________ School Year: ________________
Person responsible for creating and updating the Plan: ______________________________
Names of the DDI Leadership Team members: ______________________________

Date | Event*

Checklist: Planning Calendar
*TOP 10 Keys for Success in Creating a Great Planning Calendar
1. Dates to train school leadership team
2. If creating, modifying, or adding assessments, time to do this work and who will do it
3. Date to introduce staff to the actual assessments
4. Dates for needed PD related to data-driven instruction, including the launch
5. Date to implement prediction task for interim assessment
6. Dates and times for scoring assessments and compiling data reports
7. Dates and times for analysis of data
8. Dates and times for instructional planning following analysis
9. Time for re-teaching and intervention efforts
10. Time built in for leaders to follow up in classrooms

Prepared by New Leaders for New Schools, 2008

Example of an Interim Assessment Cycle Used for One Assessment
(Calendar with columns Monday through Friday; entries are listed in calendar order for each week.)
Week 1: Final versions of tests ready; Copy assessment; Copy assessment; Copy assessment; Answer sheets ready; Prediction task; Distribute assessments to teachers
Week 2: Prediction task; Distribute assessments to teachers; Assessment administration; Assessment administration; Data entry; Prepare data reports; Data entry; Prepare data reports
Week 3: Principal reviews data reports and identifies areas of strength and weakness; Prepare for data review meetings; Data review meetings; Plan for re-teaching; Test-in-Hand Analysis; Results Meeting Protocol

Prepared by New Leaders for New Schools, 2008

Data-Driven Culture – Tool 4
Data-Driven Instruction (DDI)
Developing an Entry Plan

The purpose of an Entry Plan is to help you and your staff members identify concrete actions that will take place during the implementation of your school’s Data-Driven Instruction initiative.

Instructions: Please use your Leadership Team’s consensus assessment of the DDI Implementation Rubric and your DDI Planning Calendar as the starting place for your school’s Entry Plan, which fully fleshes out the key actions starting in August and continuing throughout the school year.

School Name: ______________________________ School Year: _________________
Names of DDI Leadership Team Members: ______________________________

Event Plan
School:
Objective:
Strategy:
Assessment:
Task | When

Checklist: An Entry Plan
*TOP 10 Keys for Success
1. Build a shared vision.
2. Review standards and benchmarks (expectations for student skills) for learning with faculty.
3. Build a common vocabulary among staff. This should be used with students, so that they develop the same vocabulary.
4. Align expectations both vertically. What is missing coverage?

Prepared by New Leaders for New Schools, April 2008

Assessment – Tool 5
Data-Driven Instruction (DDI)
Predicting Task (Revised 7/9/09)

Teacher Name: ______________________________ School Year: _______________
Content Area of Assessment: ______________________________
Assessment Name/#: ______________________________
Course Name/Number and Period: ______________________________

The purpose of the predicting task is to provide individual teachers or teams of like teachers with a format for predicting the performance of the students in their classes before the assessment is given, and then for comparing this prediction to the actual student achievement. It will also help a teacher identify those areas that need additional emphasis through re-teaching and re-assessing.

Materials:
Teacher Key (includes items and information about the assessment)
Tool 5

Directions:
1. Read each test item on the Teacher Key.
2. In the top left, enter the number of students in your class (or group).
3. In Column A, enter the item number.
4. In Column B, enter the number of students in your class/classes who you think will get the answer correct.
5. In Column C, enter the percentage of students who will answer each item correctly.
6. In Column D, enter an “X” if this item will not be covered in the class curriculum before the test is administered.
7. In Column E, enter an “X” if this item is not taught at the level of rigor in the assessment.
8. In Column F, enter an “X” if this standard is not taught in this course.
9. In Column G, enter an “X” if you predict the item to be one of the hardest on the test.

Table 5: Test-in-Hand Analysis – Prediction
Predicting Student Performance
Number of Students in Class: ________
Columns:
A: Item #
B: Number of students who will answer correctly
C: Percent of students who will answer correctly
D: Item not in the curriculum before test
E: Item not taught at the level of rigor assessed
F: Standard was not taught in this course
G: Predicted to be one of the hardest items on the test
Rows: one blank row per item, numbered 1 through 25.

Analysis – Tool 6
Data-Driven Instruction (DDI)
Using a Test-in-Hand Analysis

Teacher Name: ______________________________ School Year: __________
Content Area of Assessment: ______________________________
Assessment Name/#: ______________________________
Course Name/Number: ______________________________

The purpose of the Test-in-Hand Analysis is to provide individual teachers or groups of like teachers with a format to review data and begin the process of choosing areas that need additional re-teaching and re-assessing.

Materials:
Teacher Answer Key
Tool 6 and Tool 8
Condensed Item Analysis Report (Teacher)
Condensed Item Analysis Report (All School or All Grade Level)
Test Statistics Report (Teacher)
Completed Table 5 – Predicting Task Data

Part 1 Directions: Complete Table 6: Using a Test-in-Hand Analysis
1. In Column A, enter the number of the item.
2. In Column B, enter the number of students who answered correctly.
3. In Column C, enter the actual percent correct from the “Condensed Item Analysis Report.”
4. In Column D, enter the difference between the predicted and actual percent scores.
5. In Column E, enter an “X” if the predicted percent correct is higher than the actual percent correct or if more than 50% of the students answered incorrectly.
6. Optional: In Column F, enter the number of students in the school who answered correctly.
7. Optional: In Column G, enter the percent of students in the school who answered correctly.
8. In Column H, enter an “X” if the percentage of students in the school answering the item correctly is greater than that of the class or if more than 50% of the students missed the item.
9. In Column I, enter an “X” if this is one of the three hardest items on the test.
10. Examine the “Test Statistics Report” to determine if there are any standards that are problematic for students. Use this data for step 11.
11. In Column J, enter an “X” by those items that have the greatest number of “X”s or are most important to re-teach. Use the data that has been collected in Tables 5 and 6 to determine high-priority items for re-teaching.

Part 2 Directions:
1. Examine the “Test Statistics Report” to determine any problems with mastery of the standards.
2. Use the Condensed Item Analysis Report to examine the answer choices students selected on items that have been identified for re-teaching.
3. Use “Mining the Meaning of Incorrect Answer Choices,” which follows Table 6, to help understand why students are having difficulty answering specific questions.
4. Take the insight that you have gained from this analysis and begin Tool 8.
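The Part 1 directions are mostly arithmetic on the prediction and the item-analysis counts, so they can be sketched in a few lines of Python for teachers who keep their data in a spreadsheet export. This is only an illustration under stated assumptions: `ItemRow`, `percent`, and `analyze` are hypothetical names, the “three hardest items” are taken to be the three with the lowest actual percent correct in the class, the second condition in Column H is read as more than 50% of students school-wide missing the item, and Column J is filled purely by counting “X”s, whereas the directions also leave room for teacher judgment and the Test Statistics Report.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ItemRow:
    item: int                             # Column A: item number
    class_correct: int                    # Column B: students in class answering correctly
    predicted_pct: float                  # Teacher's prediction (Table 5, Column C)
    school_correct: Optional[int] = None  # Column F (optional)


def percent(count, total):
    return 100.0 * count / total


def analyze(rows, class_size, school_size=None):
    """Return one dict per item holding Table 6 columns A through J."""
    actual = {r.item: percent(r.class_correct, class_size) for r in rows}
    # Column I (assumption): the three items with the lowest actual percent correct.
    hardest = set(sorted(actual, key=actual.get)[:3])
    table = []
    for r in rows:
        c = actual[r.item]
        out = {
            "A": r.item,
            "B": r.class_correct,
            "C": c,
            "D": r.predicted_pct - c,            # predicted minus actual
            "E": r.predicted_pct > c or c < 50.0,
            "I": r.item in hardest,
        }
        if r.school_correct is not None and school_size:
            g = percent(r.school_correct, school_size)
            # Column H (assumption): "missed the item" is measured school-wide.
            out.update({"F": r.school_correct, "G": g, "H": g > c or g < 50.0})
        out["x_count"] = sum(bool(out.get(k)) for k in ("E", "H", "I"))
        table.append(out)
    # Column J: items carrying the greatest number of X marks.
    top = max(t["x_count"] for t in table)
    for t in table:
        t["J"] = top > 0 and t["x_count"] == top
    return table


if __name__ == "__main__":
    rows = [
        ItemRow(1, 18, 80.0, 150),
        ItemRow(2, 9, 60.0, 120),
        ItemRow(3, 15, 50.0, 100),
        ItemRow(4, 20, 90.0, 190),
        ItemRow(5, 12, 70.0, 130),
    ]
    for row in analyze(rows, class_size=20, school_size=200):
        print(row)
```

A positive value in Column D means the teacher predicted a higher percent correct than the class achieved. Items flagged in Column J are candidates to carry into Part 2 and Tool 8.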
High School Interim Assessment Project in Chicago Public Schools 2009-10 17 Table 6: Using Test-In-Hand Analysis Data Analyzing Test Data Number of students in class who took the test Number of students in school who took the test A B C D E F G H I Number of students who answered correctly Percent of students who answered correctly Predicted % correct higher than actual % correct Difference between predicted and actual correct Number of student in school who answered correctly Percent of students in school who answered correctly Difference between classroom and school percent of students who answered correctly Lowest percent items on test Consider this item is important for reteaching High School Interim Assessment Project in Chicago Public Schools 2009-10 18 Mining the Meaning of Incorrect Answer Choices Use these characteristics to “unpack” the reasons that students may be unsuccessful on specific test items. 1. “Pure Guess” Qualities: This is an item where the percent choosing each response is approximately equally distributed. Actions: Look for items at the end of a section or that have difficult vocabulary. Work with students on strategies to complete all items. 2 “Ultimate Misconception” Qualities: One incorrect answer choice is chosen by a large percent of the students. Actions: Evaluate the answer choice that is drawing even the best students to this choice. 3. “The Split” Qualities: The majority of students choose two different answer choices. Actions: Usually the second answer choice has a misconception that students with limited understanding choose. Identify the misconception. 4. “The Big Zero” Qualities: An answer choice is not a viable misconception because no one chose it. Actions: Rewriting the answer choices with a misconception can usually solve this problem. 5. “The Missing Bubble” Qualities: This is a typical problem when students do not have enough time to finish a test or loose interest in responding to the items. 
Actions: Teach the test prep strategy of skipping harder items and moving through the test until the end of the time allotted. 6. “Vocabulary Enigma” Qualities: Often students miss items because they do not understand the terms that are using in the item stem. Actions: Examine the vocabulary in the ACT and WorkKeys tests so that students have an understanding of the basic vocabulary used. High School Interim Assessment Project in Chicago Public Schools 2009-10 19 7. “Misconception Mystery” Qualities: When an item has been written with no identifiable misconceptions in the answer choices, then answers may tell you little about what students do know or do not know. Actions: Use the item as an open-ended item, bell-ringer, or extra credit question to help you determine why students are confused. High School Interim Assessment Project in Chicago Public Schools 2009-10 20 7 Analysis—Tool 7 Data-Driven Instruction (DDI) Conducting a Results Meeting (Revised 8/29/09) HS Interim Assessment Project in Chicago Public Schools ’08–’09 The purpose of the Results Meeting is for teacher teams to work as a group to analyze the interim assessment data from common courses, grade levels, or across the school. This meeting is designed for 55 minutes, but can be readjusted for more/less time. The meeting recorder should use chart paper or a chalk/white board to create a list of the consensus best actions. Materials : Tool 7 and Tool 8 Condensed Item Analysis Report for Common Courses, Grade Levels, or School Instructions: The following provides a description of the Results Meeting. Use the “Results Meeting Agenda” with teachers. Participants will also need a copy of Tool 8 “Developing a Re-teaching Plan.” 1. Identify Roles of Participants (2 minutes) Timer Facilitator Recorder 2. Identify objective to focus on (2 minutes or given) 3. Discuss what teaching strategies have worked so far – e.g., been successful in helping students achieve on the interim assessment (5 minutes) 4. 
What are the chief challenges that teachers faced in helping students achieve on the interim assessment? (5 minutes) 5. Brainstorm proposed solutions for challenges that teachers face using the Brainstorm Protocol. (10 minutes) 6. Have each participant consider the feasibility of each idea and then ask each person to share his or her reflections by using the Reflection Protocol. (5 minutes) 7. Gain consensus in the group around the best actions by using the Consensus Protocol (15 minutes) 8. Participants begin Re-teaching Lesson Plans in order to identify tasks needed to be done by whom and when. (10 minutes) 9. Place dates in the DDI Planning Calendar (Facilitator) High School Interim Assessment Project in Chicago Public Schools 2009-10 21 RESULTS MEETING AGENDA Total Time 55 Minutes Identify Roles: Timer, Facilitator, Recorder Identify Objectives to focus on (2 minutes or provided) (2 minutes) College Readiness/Work-Key skills or standard _________________ Target participants (e.g. subgroups, courses): Grade level(s): Discuss Successes to identify what has worked so far (5 minutes) Discuss Chief Challenges that have blocked student success in the past (5 minutes) Brainstorm Proposals (e.g. ideas or solutions) for overcoming the challenges (10 minutes) Reflect on the feasibility of proposals (5 minutes) Consensus around the best actions to take (15 minutes) Use Tool 8 “Developing the Re-teaching Plan” to identify tasks needed to be done by whom and when. Place dates on DDI Planning Calendar. (10 minutes) Discuss Protocol Brainstorm Protocol Respond to the issue under discussion. Go around the room with each person in the group responding to the issue. Record ideas generated during the discussion on chart paper to remain visible throughout the meeting. Go in order around the room and give each person 30 seconds to share something. Recorder places information on the chart paper/board or computer for all to see. 
If a person does not have something to share, say “Pass”. No judgments should be made; if you like what someone else said, when it’s your turn simply say, “I would like to add to that by…” Even if 4-5 people “Pass,” keep going for the full time (30 seconds per person). Reflect Protocol Consensus Protocol Each person involved in the process participates in silent/individual reflection on the issue at hand. Identify areas that everyone agrees with. Each person should have the opportunity to share his or her reflections for 30 to 60 seconds. Be concrete in the ideas that will be shared with others as a consensus. If a person doesn’t have a reflection to share, say “Pass” and come back later. High School Interim Assessment Project in Chicago Public Schools 2009-10 22 8 Action—Tool 8 Data-Driven Instruction (DDI) Developing the Re-teaching Lesson Plan (Revised 8/29/08) HS Interim Assessment Project in Chicago Public Schools ’08–’09 The purpose of the Re-teaching Lesson Plan is to provide schools with a tool to share the “reteaching” and “re-assessing” strategies that are based on an analysis of interim assessment data. Instructions: Use this lesson plan template as a guide to the types of components that should be considered in re-teaching and re-assessing. Overview Objective(s) focused on: College Readiness/Work-Key skill or standard: Target participants (e.g. subgroups, courses, all): Grade level(s): Date(s) for Re-teaching: Date(s) for Re-assessing: Re-teaching Strategies What are the tasks needed to be done to be ready to re-teach? (Do now’s and assignments?) Who will complete the tasks? When will each task need to be done? Brief description of the lesson, including teacher guide (e.g., what questions are students asked or how to structure the activity). High School Interim Assessment Project in Chicago Public Schools 2009-10 23 Re-assessing strategies What are the tasks needed to be done to be ready to re-assess? 
(“Do Nows” and assignments)
Who will complete the tasks?
When will each task need to be done?
Brief description of re-assessing strategies, including student guides with formative assessments such as homework or other work for students to show mastery.

New Leaders for New Schools, August 2008

Action - Tool 9
Data-Driven Instruction (DDI)
Creating a Student Prescription Sheet
HS Interim Assessment Project in Chicago Public Schools ’08–’09

The purpose of the Prescription Sheet is to provide independent review and reinforcement of skills for individual students, according to each student’s level of need.

Instructions: This sheet can be used by an individual teacher or by a staff member involved with student support services (e.g., tutoring, special education, ELL, SES, before/after school programs, learning centers). It can also be used as a communication tool for students who may need supplemental support.

For students who are at risk, use the individual student item analysis to choose clusters of items/standards for review and reinforcement over a short period of time. Determine the programs/services/strategies to be used for re-teaching. For each standard/item cluster, plan tasks to accommodate the independent needs of students. When completing the sheet, ask yourself, “What can each student do along the continuum of skills?” Although there will be some blank spaces in the form, the goal is to have more spaces filled than empty.
Student Name:
School Year:
Grade:
Course Name/#:
Standard/Item Cluster:
Strategy columns: Program and/or Service | Computer-Based Activities | Hands-on Activities | Textbook/Workbook | Other Strategies
Assessment Strategy:
Comments:

New Leaders for New Schools, August 2008

Analysis and Action - Tool 10
Data-Driven Instruction (DDI)
Bottom Line Results Workshop (Revised 8/29/08)
HS Interim Assessment Project in Chicago Public Schools ’08–’09

The purpose of the Bottom Line Results Workshop is to provide an opportunity for the DDI Leadership Team to reflect and act on what happened throughout the year. Bottom-line results are aggregate numbers. Just as each teacher works with students to support student achievement, the school system needs to support teachers, support staff, and students based on the data. The DDI Leadership Team works as a group to analyze the interim assessment data and EPAS data from a predetermined period. The meeting is designed to last 2 hours. The recorder should use chart paper or a chalk/white board to create a list of the consensus best actions.

Instructions: The following steps outline the “Bottom Line Results” Workshop; the meeting agenda appears at the end of this tool. The principal will facilitate the meeting, and one or more members of the DDI Leadership Team will present the data.

1. Identify Roles of Participants (2 minutes)
    Timer
    Facilitator
    Recorder(s): Summarizing Results, Changes needed in Entry Plan and/or DDI Planning Calendar, Summary to share with staff
2. Present a snapshot of data related to EPAS and interim assessment results by grade, content level, standards, and individual students over time. (30 minutes)
3. Identify areas to focus on in content areas, grade levels, and/or course-alike groups. (10 minutes)
4. Discuss what teaching strategies have been successful in helping students achieve on these assessments at grade levels or in content areas.
(reflection time 3 minutes, discussion 17 minutes).
5. What are the chief challenges that teachers faced in helping students achieve on the assessments? (15 minutes)
6. Brainstorm proposals for addressing the challenges that teachers have faced, using the Brainstorm Protocol. (20 minutes)
7. Have each participant consider the feasibility of each proposal, and then ask each person to share his or her reflections using the Reflection Protocol. (10 minutes)
8. Gain consensus in the group around the changes that need to be made in the DDI Planning Calendar. (10 minutes)
9. Place the consensus actions in the DDI Planning Calendar. (Facilitator)

BOTTOM LINE RESULTS MEETING AGENDA
Total Time: 2 Hours

Identify Roles: Timer, Facilitator, Recorder(s) (Summarizing Results, Changes needed in DDI Planning Calendar, Summary to share with all staff) (2 minutes)
Present Data: Include Multiple Assessments if Available (30 minutes)
Identify Areas to focus on (10 minutes or provided)
    Area (e.g., algebra, writing): _______________________________________________
    College Readiness/Work-Key skills or standards: _______________________________
    Target participants (e.g., subgroups, courses):
    Grade level(s):
Discuss Successes to identify what has worked so far. (15 minutes)
Discuss Chief Challenges that have blocked student success in the past. (20 minutes)
Brainstorm Proposals (e.g., ideas or solutions) for overcoming the challenges. (20 minutes)
Reflect on the feasibility of proposals. (5 minutes)
Consensus around the best actions to take. (15 minutes)
Place dates on DDI Planning Calendar. (Facilitator)

Bottom Line Results Meeting Agenda, Tool 7 - Continued

Discuss Protocol
Respond to the issue under discussion. Go around the room and have each person in the group respond to the issue.
Record ideas generated during the discussion on chart paper to remain visible throughout the meeting.

Brainstorm Protocol
Go in order around the room and give each person 30 seconds to share something. Recorder places information on the chart paper/board or computer for all to see. If a person does not have something to share, say “Pass.” No judgments should be made. If you like what someone else said, when it’s your turn simply say, “I would like to add to that by…” Even if 4-5 people “Pass,” keep going for the full time (30 seconds per person).

Reflect Protocol
Each person involved in the process participates in silent/individual reflection on the issue at hand. Each person should have the opportunity to share his or her reflections for 30 to 60 seconds. If a person doesn’t have a reflection to share, say “Pass” and come back to him or her later.

Consensus Protocol
Identify areas that everyone agrees with. Be concrete in the ideas that will be shared with others as consensus.

New Leaders for New Schools, August 2008

Analysis and Action - Tool 11
Data-Driven Instruction (DDI)
Conducting a Walk-through (Revised 8/29/08)
HS Interim Assessment Project in Chicago Public Schools ’08–’09

The purpose of the Conducting a Walk-through tool is to give individuals who are monitoring the implementation of re-teaching and re-assessing plans a tool to use when visiting classes. The tool should be used in coordination with the Re-teaching Lesson Plan, where dates for re-teaching and re-assessing are provided. Implementation of these classroom plans is essential for the success of the DDI efforts in your school.

Instructions: In the first column, rate the level of implementation in a classroom of the re-teaching, re-assessing, and/or other strategy being used. Note any comments in the third column.
Teacher’s Name:
Observation Date: ___________
Observation of (circle one or more): Re-Teaching / Re-Assessing / Other:
Course Name/Number: _________________________________________________________

Rating scale:
0 = Poor evidence of implementation
1 = Fair evidence of implementation
2 = Good evidence of implementation
3 = Excellent evidence of implementation
NA = Not applicable

Rating | Observation of: | Comments
0 1 2 3 NA | Focus on the state standard and/or college readiness/work-key skills selected |
0 1 2 3 NA | Key actionable changes in class |
0 1 2 3 NA | Evidence of change from previous observations |
0 1 2 3 NA | Students engaged as a result of the strategy |
0 1 2 3 NA | Students learning as a result of the strategy |

Things that I am impressed by:
Things I have noticed:

Based on Paul Bambrick-Santoyo’s Observation Tracker, an Excel program that has been made available to schools participating in the High School Interim Assessment Project.

Analysis and Action - Tool 12
Data-Driven Instruction (DDI)
Engaging in Student Reflection (New 8/29/08)
HS Interim Assessment Project in Chicago Public Schools ’08–’09

The purpose of the Engaging in Student Reflection tool is to provide a format for students to analyze their performance by examining their assessment results, reflecting on why they missed items, and making plans for the future.

Directions:
1. Read each test item on the Interim Assessment. Begin with Worksheet 12.1.
2. In Column B, in two or three words, write down the skill that was being tested.
3. In Column C, place a check to show whether each item was answered right or wrong.
4. In Column D, for each item that was answered wrong, place a check to show why: was it a “Careless mistake” or “Didn’t know the content”?
5. When Columns B to D are complete, add up the totals for right, wrong, “Careless mistake,” and “Didn’t know the content,” and transfer them to Worksheet 12.2.
6. Plot the data from Worksheet 12.2 using a bar graph.
7.
Complete the Reflection Questions.

Student Reflection Tool
HS Interim Assessment Project in Chicago Public Schools ’08–’09

To prepare for the next interim assessment, we want to help you find out a little more about yourself, your test-taking style, and also how you can SHINE on assessments.

Student Name: ______________________________ Date: _______________________
Assessment Name/#: ______________________________________________________
Course Name/Number and Period: ___________________________________________

Worksheet 12.1
A. Item # | B. What skill was being tested? | C. Did you get the item right or wrong? (Right / Wrong) | D. If wrong, why did you get the item wrong? Be honest. (Careless Mistake / Didn’t know the content)
[One blank row for each item, 1 through 40.]

Worksheet 12.2
Please add up the checks in Columns C and D from Worksheet 12.1 and enter the totals below.
Total # answers right:
Total # answers wrong:
Total # of items where you made a careless mistake:
Total # of items where you did not know the content:

On the graph below, plot the data from Worksheet 12.2 in a bar graph.
[Blank bar graph: “Your Right and Wrong Answers.” Vertical axis: number of items, 1 to 40. Bars: # Right, # Wrong, # Careless, # Did not know.]

Reflections:
1. Think about all the tests that you have taken this year. Which test did you do the best on? Why do you think that you did well on this test?
2. Think about all the tests that you have taken this year. Which test are you not proud of? Why not?
3. Are you happy with the number of careless errors you made? If yes, why? If no, why not?
4. Are you happy with the number of “Didn’t know the content” responses you made? If yes, why?
If no, what should you have done before the test?
5. What do you plan to do in the future about areas in which you did not do well?
6. Name three skills you need extra help on before moving on.
    a. __________________________________________________
    b. __________________________________________________
    c. __________________________________________________
7. Based on the information on the graph, you will be able to learn more about your learning and test-taking style.

If you have more careless errors than “don’t know,” you are a RUSHING ROGER.
In class you…
- are one of the first students to finish the independent practice.
- want to say your answer before you write it.
- often don’t show your work.
- get assessments back and are frustrated.
During class you should…
- SLOW DOWN!
- ask the teacher to check your work or check with a partner.
- push yourself toward perfection; don’t just tell yourself “I get it.”
- take time to slow down and explain your thinking to your classmates.
During assessments you should…
- SLOW DOWN. You know you tend to rush; make yourself slow down.
- REALLY double check your work (since you know you tend to make careless errors).
- use inverse operations when you have extra time.

If you have more “don’t know” than careless errors, you are a BACKSEAT BETTY.
In class you…
- are not always sure that you understand how to do independent work.
- are sometimes surprised by your quiz scores.
During class you should…
- keep track of your mistakes and look to see if you keep making the same ones over and over.
- ask questions about the previous night’s homework if you’re not sure it’s perfect.
- do all of the problems with the class at the start of class.
- use every opportunity to check in with classmates and teachers to see if you’re doing problems correctly.
During assessments you should…
- do the problems you’re SURE about first.
- take your time on the others and use everything you know.
- ask questions right after the assessment while things are still fresh in your mind.

Are you a Rushing Roger? Then answer question #1.
1) In your classwork and homework, if you notice you keep making similar careless errors, what should you do?

Are you a Backseat Betty? Then answer question #2.
2) When you get a low score on a quiz, what should you do?

Appendix A. Test-in-Hand Analysis

Number of students in class: 28
Number of students in class who took test: 28
Number of students in school who took test: 300

The spreadsheet has one column for each item (Item # 1 through 15) and the rows listed below. In the blank template, the calculated cells display 0% or X for every item until data are entered.

Predicting Student Performance
- Number of students who will answer correctly
- Percent of students who will answer correctly
- Item not in the curriculum before test
- Item not taught at the level of rigor assessed
- Standard was not taught in this course
- Predicted to be one of the hardest items on the test

Analyzing Test Data
- Number of students who answered correctly
- Percent of students who answered correctly
- Predicted % correct higher than actual % correct
- Difference between predicted and actual correct
- Number of students in school who answered correctly
- Percent of students in school who answered correctly
- Difference between classroom and school percent of students who answered correctly
- Lowest percent items on test
- Consider whether this item is important for re-teaching

Please contact mkulieke@nlns for an electronic copy of the calculating spreadsheet.
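
For schools that do not have the calculating spreadsheet at hand, the arithmetic behind the Appendix A rows can be sketched in a few lines of Python. This is only an illustration, not the project's spreadsheet: the function and field names are hypothetical, and the rule for flagging the "lowest percent" items (the n_lowest items with the smallest classroom percent correct) is an assumption, since the appendix does not state a cutoff.

```python
from dataclasses import dataclass


@dataclass
class ItemAnalysis:
    item: int
    predicted_pct: float           # percent of students predicted to answer correctly
    actual_pct: float              # percent of students in class who answered correctly
    school_pct: float              # percent of students in school who answered correctly
    overpredicted: bool            # predicted % correct higher than actual % correct
    predicted_minus_actual: float  # difference between predicted and actual percent
    class_minus_school: float      # difference between classroom and school percent
    lowest: bool                   # among the lowest percent items (assumed rule)


def percent(count, total):
    """Return count as a percent of total (0.0 when total is 0)."""
    return 100.0 * count / total if total else 0.0


def analyze_items(predicted, actual, school, class_size, school_size, n_lowest=3):
    """Each list holds, per item, a count of students answering correctly."""
    if not (len(predicted) == len(actual) == len(school)):
        raise ValueError("all three lists need one entry per item")
    actual_pcts = [percent(a, class_size) for a in actual]
    # Assumed rule: flag the n_lowest items with the smallest classroom percent.
    by_pct = sorted(range(len(actual_pcts)), key=lambda i: actual_pcts[i])
    lowest = set(by_pct[:n_lowest])
    rows = []
    for i, (p, s) in enumerate(zip(predicted, school)):
        p_pct = percent(p, class_size)
        a_pct = actual_pcts[i]
        s_pct = percent(s, school_size)
        rows.append(ItemAnalysis(i + 1, p_pct, a_pct, s_pct, p_pct > a_pct,
                                 p_pct - a_pct, a_pct - s_pct, i in lowest))
    return rows


# Made-up counts for three items, using the class and school sizes from Appendix A.
rows = analyze_items(predicted=[21, 14, 7], actual=[14, 21, 7],
                     school=[150, 225, 60], class_size=28, school_size=300,
                     n_lowest=1)
for r in rows:
    print(r.item, r.predicted_pct, r.actual_pct, r.overpredicted, r.lowest)
```

Items flagged as overpredicted or lowest are natural candidates for the "Consider whether this item is important for re-teaching" row, but that decision remains a judgment for the teacher and is deliberately left out of the sketch.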