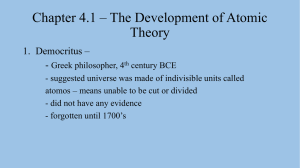

[PC] Tutorial 2: Phasing by isomorphous replacement

Mike Lawrence, September 2007

You will already have completed Tutorial 1. Its aim was to teach you how to process oscillation data using the HKL suite and, as an example, to process the native data set for pneumococcal surface antigen A (PsaA). The aim of this tutorial is to teach you

(i) how to process a heavy atom derivative data set
(ii) how to compare this data set with the native data set to see whether or not the heavy atom is bound in a useful fashion
(iii) how to inspect heavy atom Patterson maps
(iv) how to compute heavy atom phases and improve them by solvent flattening
(v) how to inspect and interpret heavy atom maps

To do this tutorial you will need access to a computer holding the platinum nitrate derivative data set for PsaA, the processed native data set for PsaA from Tutorial 1, and a variety of software and its manuals. You should also have completed as much as possible of the isomorphous phasing pages of the Birkbeck course. The theory of heavy atom phasing and the practical details of heavy atom derivatization will be dealt with in the lectures.

Once the heavy atom data sets have been collected, there is an increasing variety of pathways to heavy atom maps, and the degree of automation depends on the software package used. This tutorial follows one pathway. It uses HKL to process the data and CCP4i (the CCP4 gui) to scale and merge the heavy atom data with the native data set and to compute difference Pattersons. SOLVE then determines the heavy atom positions, and RESOLVE performs the solvent flattening. Other useful pathways involve the programs RSPS for manual determination of the heavy atom positions, MLPHARE for heavy atom phasing, SHARP for heavy atom phasing and solvent flattening, and DM for solvent flattening. Undoubtedly more are emerging.

Part 1.
Processing the derivative data set

Background

The K2Pt(NO3)4 data set consists of 202 one-degree oscillation images collected on a Rigaku R-axis IV image plate detector. The detector is mounted on a laboratory rotating anode X-ray source. The data set is stored in the directory $PSAA_DATA/ptnit. The file names are of the form psa40###.osc, where ### is a three-digit number ranging from 001 to 202. The derivative was obtained by soaking the crystal overnight in 12 mM platinum nitrate and then mounting it in the conventional way for cryo-cooled crystals. Heavy atom data are processed with HKL in the same way as native data, except that the Friedel pairs must be kept separate to allow for anomalous scattering by the heavy atom.

Exercise

Process the derivative data set using HKL. Processing of derivative data within HKL is identical to that of native data. The only difference is that one extra command is needed in the scalepack command file, namely

    anomalous on

This instructs scalepack to output the two members of each anomalous (Friedel) pair separately. You may also use the command

    scale anomalous

which keeps the Friedel pairs separate during both scaling and the computation of statistics. This is worthwhile if the data are of good quality and have adequate redundancy.

Questions

1.1 What are the merging R-factor, the completeness, the maximum resolution, the mean redundancy and the mean signal-to-noise ratio for this data set?
1.2 How do these statistics compare with those of the native?
1.3 Is the space group of this data set the same as that of the native?
1.4 How does the post-refined cell compare with that of the native data set?
1.5 Examine the output reflection file from scalepack. Why does the number of columns vary down the file? What values are contained in these columns?

Part 2.
Merging the heavy atom and native data sets

Background

The CCP4 suite of programs handles multiple heavy atom and native data sets very conveniently through the MTZ file format (*.mtz files), which stores the data in labelled columns. Each column within the file carries a particular data item for each reflection and can be referred to by a user-defined name. For a CCP4 program to use a column, that column must be assigned to one of the program's own column names. Likewise, a program can create new columns of data for each reflection, and the user can assign a name to each output column. This is particularly simple within the CCP4 gui; within CCP4 script files it is done with the LABIN and LABOUT assignment commands.

Two CCP4 programs are useful here: scalepack2mtz and cad. The former converts a scalepack output file to MTZ format, which should be done for both the derivative and the native data sets. The latter merges the two MTZ files into a single MTZ file. The truncate procedure is also invoked during the import. It converts intensities to amplitudes and handles negative intensities systematically. Multiple derivatives can be merged progressively into a single file, with their column names kept distinct. cad also does some other useful things. It checks that the files are sorted in the same order and that they use the same convention for the asymmetric unit of the Laue group. A free R-factor column is added, and dummy reflection records are created for missing reflections.

Useful checks on the import include

(a) a check of the Wilson plot, to see that it is protein-like
(b) a check for systematic variation in reflection amplitude with respect to index parity
(c) a check for excessive anisotropy
(d) a check for crystal twinning

cad itself produces little informative output, but a quick scan of its log file for errors does no harm!
Exercise

Use the CCP4 gui to import the native and ptnit data sets into CCP4 MTZ format using scalepack2mtz, and then merge them into a single file using cad.

Questions

2.1 Perform each of the above checks on the import of both the native and the ptnit derivative data, and discuss the relevant numbers (merohedral twinning is not possible in P212121, so ignore that check for now).

Part 3. Scaling the heavy atom data set to the native data set

Background

Heavy atom phasing depends on obtaining accurate values for two kinds of amplitude difference: (i) between the native and derivative reflections and (ii) between the Friedel mates of the derivative, if anomalous scattering is present. This in turn depends on having the native and derivative data sets on the same scale. There are two approaches: either place both data sets on the same absolute scale, or place them on a common relative scale. The latter is usually better, as it is not always clear what the best absolute (Wilson) scale is.

Relative scaling works in much the same way as scaling individual images to each other in scalepack, i.e. by computing an overall scale factor and a “temperature factor”. The main refinement is to allow anisotropic scaling. Here the temperature factor is described by a set of components that give its variation with direction in reciprocal space. This can be elaborated further into “local scaling”, but the latter is not widely used.

Effective scaling ensures that the mean native and derivative amplitudes are “as similar as possible” across the resolution range being considered. Often it is not possible to get good scaling across the entire resolution range, so it is useful to omit the low resolution data (say, data at lower resolution than 20 Å or 15 Å). Care must also be taken to exclude outliers that may distort the scaling. A few rounds of scaling may be needed to get the best possible result.
Derivative scaling in CCP4 is done with the program scaleit. It does all of the above and produces many statistics that can be used to assess whether useful isomorphous derivatization has occurred. Useful checks of the scaleit log file include the following:

(a) Check the anisotropic scaling matrix to see whether the degree of anisotropy differs significantly between the native and the derivative.
(b) Are there reflections with exceptionally large isomorphous or anomalous differences? If so, should they be excluded from scaling by repeating the run, or deleted from the data set altogether? Beware: this procedure could delete entirely valid data!
(c) An assessment of the differences in terms of a normal error model. The numbers to check here are the Gradient (should be >> 1) and the intercept (should be ≈ 0). Check the original paper if in doubt (Smith and Howell, J. Appl. Cryst. (1992) 25, 81-86).
(d) The matrix of differences versus resolution. The differences should look normal in distribution, without being significantly skewed.
(e) The most useful table is probably the final one. It gives the scale factor appropriate to each resolution shell (these should all be ≈ 1), Riso, RF_I and the weighted R-factor, as well as the size of the anomalous signal compared with the isomorphous signal. These values should all be consistent with those expected for an isomorphous derivative (see lectures).

Exercise

Use scaleit within the CCP4 gui to scale the ptnit data set to the native data set.

Questions

3.1 Examine the scaleit log file, perform each of the above checks, and comment on whether or not they suggest that the derivative will be useful.

Part 4. Plotting Harker sections for the ptnit derivative and determining the heavy atom coordinates

Background

A Patterson function computed with coefficients |ΔFiso|² will show peaks at positions corresponding to the inter-atomic heavy atom vectors.
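For P212121, the space group of these PsaA crystals, each heavy atom at (x, y, z) gives three self-vector peaks to its symmetry mates. These lie on the sections w = ½, v = ½ and u = ½ of the difference Patterson, at (½ - 2x, 2y, ½), (2x, ½, ½ - 2z) and (½, ½ - 2y, 2z) respectively. The positions hold up to the Patterson's own symmetry (u, v, w) ~ (±u, ±v, ±w) and to lattice translations. The hypothetical helpers below predict these peaks for a trial site. Working in reverse, they enumerate the trial sites consistent with one observed peak on each section. They are only a sketch for checking hand calculations for Question 4.2. They ignore cross-vectors between different sites and do no peak picking.

```python
import itertools


def fold(t):
    """Reduce a Patterson coordinate to [0, 0.5], using P(u) = P(u + 1) = P(-u)."""
    t = t % 1.0
    return round(min(t, 1.0 - t), 6)


def harker_vectors(x, y, z):
    """Predicted Harker peaks for one site in P212121, keyed by section.

    Symmetry mates of (x, y, z): (1/2-x, -y, 1/2+z), (-x, 1/2+y, 1/2-z), (1/2+x, 1/2-y, -z).
    """
    return {
        "w=1/2": (fold(2 * x - 0.5), fold(2 * y), 0.5),
        "v=1/2": (fold(2 * x), 0.5, fold(2 * z - 0.5)),
        "u=1/2": (0.5, fold(2 * y - 0.5), fold(2 * z)),
    }


def candidates(p, offset):
    """All t in [0, 1) with 2t - offset = +p or -p (mod 1)."""
    return {round(((sign * p + offset) / 2 + k / 2) % 1.0, 6)
            for sign in (1, -1) for k in (0, 1)}


def sites_from_harker(peaks, tol=0.01):
    """Trial sites consistent with one observed peak on each Harker section.

    peaks: dict with the same keys as harker_vectors(), values (u, v, w).
    """
    xs = candidates(peaks["w=1/2"][0], 0.5)  # u = 2x - 1/2 on w = 1/2
    ys = candidates(peaks["w=1/2"][1], 0.0)  # v = 2y on w = 1/2
    zs = candidates(peaks["v=1/2"][2], 0.5)  # w = 2z - 1/2 on v = 1/2
    observed = {key: tuple(fold(c) for c in peak) for key, peak in peaks.items()}
    sites = []
    for x, y, z in itertools.product(sorted(xs), sorted(ys), sorted(zs)):
        predicted = harker_vectors(x, y, z)
        # Keep the trial site only if it reproduces every observed Harker peak.
        if all(abs(a - b) < tol
               for key in observed for a, b in zip(predicted[key], observed[key])):
            sites.append((x, y, z))
    return sites


if __name__ == "__main__":
    peaks = harker_vectors(0.1, 0.2, 0.3)  # hypothetical site
    print(peaks)
    print(len(sites_from_harker(peaks)), "trial sites")
```

For a single site in a general position, all 64 candidates returned describe the same site. They differ only by the choice among the four symmetry-equivalent positions, the eight permitted origins of P212121 and the hand, none of which the Patterson self-vectors can distinguish.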
Space group symmetry often causes the vectors relating a heavy atom to its symmetry mates to lie on particular planes within the Patterson (the so-called Harker planes). The positions of these vector peaks can be analysed to give the heavy atom coordinates. The traditional way of doing this is to print out and inspect the Harker planes.

Exercise

Calculate a ptnit difference Patterson map using the CCP4i gui. Plot its Harker sections using pltdev (as far as I am aware, this program has to be run from the command line rather than from the gui).

Questions

4.1 Comment on the Harker section plots. How many platinum atoms do you think are bound to the protein?
4.2 Compute the fractional coordinates of the heavy atom(s) directly from the Patterson.

Part 5. Using SOLVE for heavy atom phasing

Background

SOLVE is an extremely powerful heavy atom search and refinement program. It is capable of scaling multiple derivatives, determining their heavy atom locations and calculating a set of phases. (Whilst this is an attractive "black box", analysing the derivative/native scaling and visually assessing the Harker planes remain invaluable before investing effort in SOLVE. Manual assessment of derivatives is also important when deciding whether or not to continue data collection.)

SOLVE searches for the heavy atom coordinates progressively and automatically. It adds as many sites as it can and uses trees to keep track of all the combinations tried. It assesses the quality of the resulting phases with a variety of measures, namely

1) a score based on the overall heights of all the heavy atom vectors in the Patterson
2) a score based on the overall heights of the peaks in difference Fouriers
3) the figure of merit
4) the partitioning between protein and solvent in the heavy atom phased map

SOLVE can also determine the hand of the heavy atom constellation, and of the space group, by analysing the final map.

We will start by using SOLVE for the ptnit derivative only.
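The figure of merit in score 3) has a compact standard definition. Let P(φ) be the probability distribution for the phase of a reflection. The "best" phase is the direction of the centroid Σ P(φ) e^{iφ} / Σ P(φ), and the figure of merit m is the length of that centroid. This length equals the expected cosine of the error in the best phase. The Python sketch below evaluates this for a phase distribution sampled at discrete points. The function name is hypothetical, and SOLVE's own phase probability calculation is not reproduced here.

```python
import cmath
import math


def best_phase_and_fom(phases, probs):
    """Return (best phase in radians, figure of merit) for a sampled distribution.

    phases: trial phases in radians; probs: matching, not necessarily normalised, P(phi).
    """
    total = sum(probs)
    # Centroid of the distribution on the unit circle.
    centroid = sum(p * cmath.exp(1j * phi) for phi, p in zip(phases, probs)) / total
    return cmath.phase(centroid), abs(centroid)


if __name__ == "__main__":
    # Two equally likely phases 120 degrees apart (a bimodal case).
    phi_best, m = best_phase_and_fom([0.0, 2 * math.pi / 3], [1.0, 1.0])
    print(math.degrees(phi_best), m)
```

A sharply single-valued distribution gives m = 1, and a uniform one gives m ≈ 0. Two equal peaks a phase angle Δ apart give m = |cos(Δ/2)|. This is the kind of ambiguity typical of a single isomorphous derivative without anomalous data, so peaks 120° apart give m = 0.5 with the best phase midway between them.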
Exercise

Set up a SOLVE run for the native/ptnit data. For simplicity, start with the already merged data in the scaleit output file. Use the SOLVE man pages to work out how to set this up.

Questions

5.1 List the heavy atom sites obtained by SOLVE. Are these the same as those you determined by hand?
5.2 List the temperature factor and occupancy associated with each heavy atom. What do these suggest?
5.3 Examine the Cullis R-factor, the figure of merit and the phasing power of the solution. What do these suggest?
5.4 Examine the four scores produced by SOLVE for this solution. What do they suggest?

Part 6. Solvent flattening

Background

The phases determined by MIR or SIR are often not very good (MAD, on the other hand, can yield spectacularly good maps). They seldom lead to a map that is completely traceable from one terminus to the other. In some instances they may yield phases only to low resolution (worse than, say, 6 Å). However, a variety of density modification (DM) techniques can be used to improve the situation. MIR maps inevitably lack certain properties expected of a protein map. These properties include flat solvent, non-crystallographic symmetry (if present), an ideal histogram for the electron density and protein-like structure. DM seeks to improve the phases in two steps, repeated iteratively. First it imposes these conditions on the map. Then it combines the phases of the modified map with the observed amplitudes.

Solvent flattening starts by determining an envelope for the protein within the MIR map. It then flattens all density outside that envelope (i.e. sets it to a uniform level). Protein density is left alone, or sometimes made non-negative. The modified map is Fourier transformed to give new phases, which are then combined with the original Fobs values to generate a new map. The procedure is iterated, with a new envelope being computed every few cycles. We will carry out solvent flattening with RESOLVE.
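The cycle just described can be sketched on a one-dimensional toy "crystal" in pure Python. Each pass flattens the solvent, back-transforms, and keeps the new phases with the observed amplitudes, with the envelope recomputed every few cycles. This is a deliberately simplified illustration, not RESOLVE's method. The envelope is a box-smoothed map thresholded at the assumed solvent fraction. The new phases simply replace the old ones, with no weighting and no combination with the experimental phase probabilities. All names (`dft`, `solvent_flatten`) and parameters are hypothetical. The plain O(N²) Fourier transforms make it suitable only for small grids.

```python
import cmath
import math
import random


def dft(values, sign=-1):
    """Unscaled O(N^2) discrete Fourier transform: sign=-1 forward, sign=+1 inverse."""
    n = len(values)
    return [sum(v * cmath.exp(sign * 2j * math.pi * h * j / n) for j, v in enumerate(values))
            for h in range(n)]


def solvent_flatten(f_obs, phases, solvent_fraction, n_cycles=10, envelope_every=3, half_width=2):
    """Toy 1-D solvent flattening; returns (final phases, solvent mask)."""
    if not 0.0 < solvent_fraction < 1.0:
        raise ValueError("solvent_fraction must lie strictly between 0 and 1")
    n = len(f_obs)
    phi = list(phases)
    solvent = [False] * n
    for cycle in range(n_cycles):
        # Map from the observed amplitudes and the current phases (real part kept).
        coeffs = [f * cmath.exp(1j * p) for f, p in zip(f_obs, phi)]
        rho = [c.real / n for c in dft(coeffs, sign=+1)]
        if cycle % envelope_every == 0:
            # Envelope: box-smooth the map with periodic wrap-around and label the
            # lowest-density fraction of grid points as solvent.
            smooth = [sum(rho[(j + k) % n] for k in range(-half_width, half_width + 1))
                      for j in range(n)]
            n_solvent = max(1, int(solvent_fraction * n))
            cutoff = sorted(smooth)[n_solvent - 1]
            solvent = [s <= cutoff for s in smooth]
        # Flatten: set every solvent point to the mean solvent density.
        solvent_mean = sum(r for r, s in zip(rho, solvent) if s) / sum(solvent)
        rho_mod = [solvent_mean if s else r for r, s in zip(rho, solvent)]
        # New phases from the modified map; amplitudes are reset to f_obs next cycle.
        phi = [cmath.phase(c) for c in dft(rho_mod, sign=-1)]
    return phi, solvent


if __name__ == "__main__":
    rng = random.Random(2)
    n = 64
    # Hypothetical toy crystal: "protein" in the first half of the cell, flat solvent elsewhere.
    true_rho = [rng.uniform(0.5, 1.5) if j < n // 2 else 0.0 for j in range(n)]
    true_f = dft(true_rho)
    f_obs = [abs(c) for c in true_f]
    start = [cmath.phase(c) + rng.gauss(0.0, 0.8) for c in true_f]

    def mean_phase_error(phi):
        # Mean absolute phase difference from the true phases, in degrees.
        return math.degrees(sum(abs(cmath.phase(cmath.exp(1j * (a - cmath.phase(c)))))
                                for a, c in zip(phi, true_f)) / n)

    final, mask = solvent_flatten(f_obs, start, solvent_fraction=0.5)
    print(f"mean phase error: start {mean_phase_error(start):.0f}, "
          f"end {mean_phase_error(final):.0f} degrees")
```

Because the toy knows the true phases, it is a convenient way to experiment with the solvent fraction, the envelope smoothing or the noise in the starting phases. Results from a one-dimensional toy of this kind should not be taken as representative of real data.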
RESOLVE is a new and very powerful program. It is capable not only of solvent flattening but also of attempting to build the structure straight into the map. RESOLVE uses maximum likelihood. To run RESOLVE, do the following:

i) Create a file called resolve.in containing the following two lines, where ### is the fractional solvent content appropriate to PsaA:

    solvent_content ###
    no_build

ii) Then type at the command prompt (you can place this in your .bashrc file if you like)

    export SYMOP=/usr/local/ccp4/lib/data/symop.lib

iii) Then run RESOLVE from the same directory in which you ran SOLVE, as follows:

    resolve < resolve.in > resolve.log

Exercise

Start by computing the solvent content of the PsaA crystal. This can be done within the CCP4 gui (assume, say, 300 residues for PsaA). Then use RESOLVE to carry out solvent flattening of the SOLVE electron density map.

Questions

6.1 What percentage do you get for the solvent content? How does this compare with that of the average protein crystal?
6.2 Which (amplitude, phase) combination from the SOLVE output is used as input to RESOLVE, and why? (Look in the log file.) Discuss.
6.3 What would happen during this procedure if the SOLVE phases were junk?

Part 7. Compute and examine an electron density map

Background

The solvent-flattened phases from RESOLVE are about as good as one can get from a single heavy atom derivative with a significant anomalous signal. These phases can now be used to compute an electron density map, which may be either an (Fcalc, φcalc) map or an (mFobs, φcalc) map. Each of these has certain advantages. The former effectively assumes that the Fcalc values are more accurate than the weighted Fobs values. The latter assumes that the error resides in the phases and down-weights the Fobs values accordingly. At this stage we do not know where in the unit cell the protein lies, so it is probably best to compute a large map volume (say, a whole unit cell).
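The two kinds of map differ only in their Fourier coefficients. An (mFobs, φcalc) map multiplies each observed amplitude by its figure of merit m. An (Fcalc, φcalc) map uses the calculated amplitudes. Both use the same phases. The one-dimensional Python sketch below makes this concrete. `fourier_map` is a hypothetical helper, and the coefficients are indexed as in a discrete Fourier transform. Real map programs, such as CCP4's FFT, work in three dimensions and use the space-group symmetry.

```python
import cmath
import math


def fourier_map(amplitudes, phases, weights=None):
    """1-D Fourier synthesis on n grid points from n coefficients indexed h = 0..n-1.

    rho(j) = (1/n) * sum_h w_h * |F_h| * exp(i * phi_h) * exp(+2*pi*i*h*j/n),
    the inverse of F_h = sum_j rho(j) * exp(-2*pi*i*h*j/n).
    weights default to 1, i.e. an unweighted (F, phi) map.
    """
    n = len(amplitudes)
    if weights is None:
        weights = [1.0] * n
    coeffs = [w * f * cmath.exp(1j * p) for w, f, p in zip(weights, amplitudes, phases)]
    return [sum(c * cmath.exp(2j * math.pi * h * j / n) for h, c in enumerate(coeffs)).real / n
            for j in range(n)]


if __name__ == "__main__":
    # Hypothetical numbers: a toy density, its calculated amplitudes and phases,
    # "observed" amplitudes and figures of merit (m_h = m_(n-h) keeps the map real).
    rho = [0.0, 1.0, 3.0, 1.0, 0.0, 0.0, 0.5, 0.0]
    f_calc = [sum(r * cmath.exp(-2j * math.pi * h * j / 8) for j, r in enumerate(rho))
              for h in range(8)]
    phi_calc = [cmath.phase(c) for c in f_calc]
    f_obs = [1.1 * abs(c) for c in f_calc]
    m = [0.9, 0.8, 0.7, 0.6, 0.5, 0.6, 0.7, 0.8]
    print([round(v, 3) for v in fourier_map([abs(c) for c in f_calc], phi_calc)])
    print([round(v, 3) for v in fourier_map(f_obs, phi_calc, m)])
```

With m = 1 and exact phases the synthesis returns the original density. Reflections with poorly determined phases (small m) contribute correspondingly less to the (mFobs, φcalc) map.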
Map calculation is readily performed using the CCP4 gui, which can also write the maps directly in O format.

Exercise

Compute an (mFobs, φcalc) electron density map covering the entire unit cell, in both CCP4 format and O format, using the CCP4 gui. Load the O-format file into O and display it using the fm_ commands.

Questions

7.1 What is your overall impression of the SOLVE map? Does it differentiate protein from solvent, and why? What is your overall impression of the RESOLVE map? Does the protein density look like protein, and why? Can you see any features?
7.2 Use the map peak search routine within the CCP4 gui to find the highest point in this map. How high is it? What are its coordinates? Inspect it in the electron density map and comment on what you see. What is the likely source of this peak? (Read up about PsaA in the Protein Data Bank.)
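The peak search in Question 7.2 amounts to locating the largest grid value and converting its indices to fractional coordinates. Below is a minimal Python sketch. It assumes the map has already been read into a nested list `rho[i][j][k]` covering one unit cell on an nx × ny × nz grid; reading CCP4 map files is not shown. `highest_peak` and the cell dimensions in the example are hypothetical. Heights are given in rms units above the map mean, and the peak is not interpolated between grid points. Orthogonal coordinates are computed for an orthogonal cell only, which is adequate for P212121.

```python
import math
import random


def highest_peak(rho, cell=None):
    """Locate the highest grid point of a 3-D map stored as rho[i][j][k].

    Returns (fractional xyz, height in rms units above the mean, orthogonal xyz
    in Angstrom or None). The orthogonal conversion assumes an orthogonal cell
    given as (a, b, c).
    """
    nx, ny, nz = len(rho), len(rho[0]), len(rho[0][0])
    values = [v for plane in rho for row in plane for v in row]
    mean = sum(values) / len(values)
    rms = math.sqrt(sum((v - mean) ** 2 for v in values) / len(values))
    # Highest value on the grid (no interpolation between grid points).
    height, (i, j, k) = max((rho[i][j][k], (i, j, k))
                            for i in range(nx) for j in range(ny) for k in range(nz))
    frac = (i / nx, j / ny, k / nz)
    ortho = None if cell is None else tuple(f * a for f, a in zip(frac, cell))
    return frac, (height - mean) / rms, ortho


if __name__ == "__main__":
    # Hypothetical 40 x 40 x 20 map: weak noise plus one strong peak.
    rng = random.Random(3)
    grid = [[[rng.gauss(0.0, 0.1) for _ in range(20)] for _ in range(40)] for _ in range(40)]
    grid[10][20][5] = 5.0
    print(highest_peak(grid, cell=(40.0, 80.0, 20.0)))
```

A real peak search also lists the next-highest peaks and reports symmetry-equivalent positions, so the single maximum returned here is only a starting point for inspecting the map.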