Interpreting Residual Plots


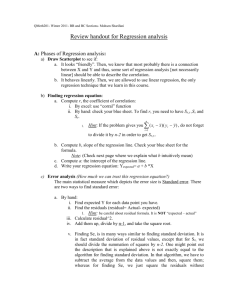

YALE School of Management
MGT511: HYPOTHESIS TESTING AND REGRESSION
K. Sudhir

Lecture 7
Multiple Regression: Residual Analysis and Outliers

Residual Analysis

Recall: Use of Transformations with Simple Regression (Lecture 4)

In Lecture 4, in the context of simple regression (regression with one x variable), we suggested using scatter plots of y against x to check for nonlinearity and non-constant variance problems in the data. Such plots are informative only if there is only one relevant predictor variable. If y is affected by many predictors, it becomes impossible to discover such problems in scatter plots of y against x. We showed how the presence of nonlinearity and non-constant variance leads to the violation of two of the assumptions about the error term (and hence the residuals) in regression.[1]

(1) Nonlinearity in the relationship between the x and y variables. If the nonlinearity is ignored, the assumption $E(\varepsilon_i) = 0$ that we used in regression is violated. This assumption states that the expected value of the error term is zero for every possible value of x. More generally, if we use the wrong functional form, this assumption will be violated.

(2) Non-constant variance. The second assumption in regression is $\mathrm{Var}(\varepsilon_i) = \sigma^2$: the variance of the error term is the same for every possible value of x.

We suggested the following solutions to the nonlinearity and non-constant variance problems.

Nonlinearity Problem: We recommended two solutions to the nonlinearity problem.

Solution 1: Add an $x^2$ term in addition to the x term. This is especially appropriate if the marginal effect of interest changes not only in magnitude but also in sign.

$y = \beta_0 + \beta_1 x + \beta_2 x^2 + \varepsilon$

Solution 2: Under certain conditions (refer to Lecture 6 for more details), we suggested taking the natural logarithm of the x variable.

$y = \beta_0 + \beta_1 \ln(x) + \varepsilon$, where $\ln(x)$ is the natural logarithm of x.
Non-constant Variance Problem: We suggested using the logarithm of the y variable to solve the non-constant variance problem.

$\ln(y) = \beta_0 + \beta_1 x + \varepsilon$

[1] An additional assumption required in regression (for statistical inference about slopes, intercepts, and predictions) is that the values of the error term (and hence the residuals) are normally distributed. Strictly speaking, a violation of this assumption, like a violation of the other assumptions, usually implies that the model is misspecified (e.g., a missing variable or the wrong functional form).

Diagnosing the Need for Transformations with Multiple Regression

In multiple regression, it is difficult and often impossible to detect the types of problems noted above by looking at scatter plots of y against x, because the relationship between y and any particular x variable is typically confounded by the effects of all the other x variables on y. For multiple regression, we can examine possible violations of assumptions by using residual plots. The advantages of residual plots are:
1. We look for systematic patterns in the unexplained variation in y against a horizontal line.
2. All the (linear, and perhaps some nonlinear) effects of the x variables have been accounted for, so the residuals allow us to examine the violations that remain.

In this lecture we focus on the use of residual plots to detect nonlinearity and non-constant variance problems.

Residuals and Residual Plots with Excel

Before we can diagnose the need for transformations using residual plots, we need to know how to obtain residuals and residual plots using Excel. Obtaining them is relatively simple.

Obtaining Residuals and Residual Plots

For example, suppose we want to run a multiple regression of y against $x_1$ and $x_1^2$ using the data shown in the Excel spreadsheet. We use the standard regression dialog box and enter the y and x variables as always.
In addition, check the “Residuals”, “Standardized Residuals”, and “Residual Plots” checkboxes. Note that there is also a checkbox for “Line Fit Plots”, which we use in the next section when we discuss the problem of outliers.[2]

Interpreting Residual Output

On running the regression with the above boxes checked, we obtain the usual three-part output: (1) the R-square, standard error of the regression, etc., (2) the ANOVA table, and (3) the regression coefficients with their p-values, confidence intervals, etc. In addition, we obtain a table of residuals under the label “Residual Output”, as seen below.

RESIDUAL OUTPUT

Observation | Predicted Y | Residuals | Standard Residuals
1 | 13 | 2 | 1
2 | 13 | -2 | -1
3 | 18 | -2 | -1
4 | 18 | 2 | 1
5 | 25 | -7.105E-15 | -3.55271E-15

The above regression has 5 observations, so there are 5 residuals. The columns in the table are:
(1) The first column shows the observation number.
(2) The second column, labeled “Predicted Y” ($\hat{y}$), shows the predicted values of y from the regression. These predicted values are obtained for the sample data and are often referred to as fitted values. They are given by $\hat{y} = \hat\beta_0 + \hat\beta_1 x_1 + \hat\beta_2 x_1^2$.
(3) The third column, labeled “Residuals”, shows the difference between the actual and predicted (fitted) values, $\hat\varepsilon = y - \hat{y}$.
(4) The fourth column, labeled “Standard Residuals”, shows the standardized residual, obtained by subtracting the average of the residuals (which is zero by construction, as long as the model includes an intercept) and dividing by the standard deviation of the residuals:

$\text{Standardized Residual} = \dfrac{\hat\varepsilon - \text{Expected}(\hat\varepsilon)}{\text{Std Dev}(\hat\varepsilon)}$

For the purposes of identifying nonlinearity and non-constant variance, either residuals or standardized residuals can be used. However, standardized residuals are somewhat easier to interpret. For example, if the residuals follow a normal distribution, we expect about 95% of the standardized residuals to lie between -1.96 and +1.96.
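As a check on the definition above, the sketch below recomputes the “Standard Residuals” column from the “Residuals” column of the Residual Output table. It assumes, as the table's numbers suggest, that the standard deviation uses the n - 1 divisor (Python's `statistics.stdev`); the function name `standardize` is ours, not Excel's.

```python
from statistics import mean, stdev

# "Predicted Y" and "Residuals" columns from the RESIDUAL OUTPUT table
predicted = [13, 13, 18, 18, 25]
residuals = [2, -2, -2, 2, -7.105e-15]


def standardize(res):
    """Subtract the average residual, then divide by the sample standard deviation."""
    m = mean(res)   # essentially zero when the model includes an intercept
    s = stdev(res)  # sample standard deviation (n - 1 in the denominator)
    return [(r - m) / s for r in res]


std_res = standardize(residuals)
for obs, (yhat, r, z) in enumerate(zip(predicted, residuals, std_res), start=1):
    print(f"obs {obs}: predicted={yhat}, residual={r:.3g}, standardized={z:.3f}")

# Under normality, roughly 95% of standardized residuals fall within +/-1.96
outside = [obs for obs, z in enumerate(std_res, start=1) if abs(z) > 1.96]
print("observations outside +/-1.96:", outside)
```

The fifth residual is a floating-point zero, so its standardized value is also essentially zero; none of the five observations falls outside the ±1.96 band.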
Interpreting Residual Plots

As requested, Excel will provide plots with residuals on the y axis against each of the included x variables. Thus, for example, if we had three variables, $x_1$, $x_2$, and $x_3$, in the multiple regression, we would obtain three residual plots. If we did a regression of y against x and $x^2$, we would get two residual plots.

Residual plots can be used to diagnose nonlinearity. What we need to check is whether the residuals “systematically” have an average that is different from zero for any range of x values. For example, we could compute the average residual separately for small, medium, and large values of a predictor variable. The more these averages differ from zero, the more reason we have to reconsider the model specification. There will always be some random deviations from the average, so we should be concerned about nonlinearity only if we see clear and systematic deviations of these averages from zero. We use some examples to discuss when there are systematic deviations.

We now look at a step-by-step approach to diagnose and correct nonlinearity problems. Before illustrating a problematic residual plot, we first show examples of “good” residual plots where nonlinearity is not a problem. Below we show residual plots from a regression with two variables, $x_1$ and $x_2$; a look at the plots suggests there are no systematic deviations from the assumption of zero average residuals at all values of $x_1$ and $x_2$. This does not mean that the residuals are exactly zero around every value of $x_1$ and $x_2$.

[Figure: X1 Residual Plot and X2 Residual Plot (Residuals vs. X1 and vs. X2)]

[2] We do not consider “Normal Probability Plots” in this lecture.

Diagnosis and Correction of Nonlinearity Problems

Nonlinearity problems can seriously bias the estimates of a regression that fails to account for them. Hence we should accommodate nonlinearities in the regression model.
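The informal check described under Interpreting Residual Plots, averaging the residuals separately over small, medium, and large values of a predictor, can be sketched in plain Python. The data are hypothetical (a curved relationship, $y = x^2$, fitted with a straight line), and the equal-count bins are our own simplification of “small, medium, and large”.

```python
def simple_ols(x, y):
    """Least-squares intercept and slope for y = b0 + b1 * x."""
    n = len(x)
    x_bar, y_bar = sum(x) / n, sum(y) / n
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    b1 = sxy / sxx
    return y_bar - b1 * x_bar, b1


def binned_mean_residuals(x, residuals, n_bins=3):
    """Average residual within equal-count bins of x, from small to large x."""
    pairs = sorted(zip(x, residuals))
    size = len(pairs) // n_bins
    means = []
    for k in range(n_bins):
        # The last bin also takes any leftover observations
        end = len(pairs) if k == n_bins - 1 else (k + 1) * size
        chunk = pairs[k * size:end]
        means.append(sum(r for _, r in chunk) / len(chunk))
    return means


# Hypothetical data: the true relationship is curved, but we fit a straight line
x = list(range(1, 10))
y = [xi ** 2 for xi in x]
b0, b1 = simple_ols(x, y)
residuals = [yi - (b0 + b1 * xi) for xi, yi in zip(x, y)]

print([round(m, 3) for m in binned_mean_residuals(x, residuals)])  # [3.0, -6.0, 3.0]
```

All three averages are far from zero and follow a positive, negative, positive pattern, the signature of an omitted curvature term. With a correctly specified model, each average would be close to zero apart from random variation.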
Here is a step-by-step approach to detect and correct for nonlinearity problems.

Step 1: Use of Theory or A Priori Knowledge of Nonlinearity

Before doing a regression, think about whether there are any theoretical reasons to expect some nonlinearity between the y and x variables. For example, we suggested earlier that the experience curve (the relationship between the marginal cost of production and cumulative production) is a well-known nonlinear relationship. To accommodate that nonlinearity, we take the logarithm of both the y and the x variables. Another example concerns the relationship between Sales and Advertising. While we would expect Sales to increase with advertising, we also expect the marginal effect of advertising to decline at higher levels of advertising. This suggests that we should use ln(Advertising) rather than Advertising directly in the regression (ln = natural logarithm).

Step 2: If we do not have a priori expectations of nonlinearity, we estimate a linear regression model and then diagnose possible nonlinearity using residual plots. For example, if the true relationship is curved as in the picture on the left, and we fit a straight regression line, the regression will systematically overpredict the y values for very small and very large values of x, while it systematically underpredicts the y values for medium values of x. This is highlighted in the residual plot on the right-hand side. In this case we would apply an appropriate transformation to the x variable (add an $x^2$ term or use ln(x)) and run the regression again.

[Figure: left, data and a straight regression line (y vs. x); right, residuals vs. x, with negative residuals at small and large x and positive residuals at medium x]

Step 3: Look at the residual plots against each of the x variables from the new regression, and make appropriate transformations of any of the variables until “good” residual plots emerge.
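Steps 2 and 3 can be sketched with a small least-squares routine that solves the normal equations $(X'X)\hat\beta = X'y$. The data are hypothetical and mimic the Step 2 picture (a concave relationship, $y = 20x - x^2$, with no noise): the straight-line fit leaves negative residuals at small and large x and positive ones in the middle, and adding an $x^2$ term removes the pattern. The helper `ols` is illustrative only and is not meant for large or ill-conditioned problems.

```python
def ols(columns, y):
    """Least squares with an intercept, solving the normal equations (X'X) b = X'y.

    `columns` is a list of predictor columns. Returns (coefficients, residuals),
    with the intercept first. Intended only for small teaching examples.
    """
    n = len(y)
    X = [[1.0] + [float(col[i]) for col in columns] for i in range(n)]
    p = len(X[0])
    # Augmented matrix [X'X | X'y]
    A = [[sum(X[r][i] * X[r][j] for r in range(n)) for j in range(p)]
         + [sum(X[r][i] * y[r] for r in range(n))] for i in range(p)]
    # Gaussian elimination with partial pivoting
    for c in range(p):
        piv = max(range(c, p), key=lambda r: abs(A[r][c]))
        A[c], A[piv] = A[piv], A[c]
        for r in range(c + 1, p):
            f = A[r][c] / A[c][c]
            for k in range(c, p + 1):
                A[r][k] -= f * A[c][k]
    # Back substitution
    b = [0.0] * p
    for i in reversed(range(p)):
        b[i] = (A[i][p] - sum(A[i][k] * b[k] for k in range(i + 1, p))) / A[i][i]
    fitted = [sum(bj * xj for bj, xj in zip(b, row)) for row in X]
    return b, [yi - fi for yi, fi in zip(y, fitted)]


# Hypothetical concave relationship, as in the Step 2 picture
x = list(range(1, 10))
y = [20 * xi - xi ** 2 for xi in x]

# Step 2: straight-line fit; residuals go negative, positive, negative as x grows
_, res_linear = ols([x], y)
print([round(r, 2) for r in res_linear])

# Step 3: add an x^2 term and check the residuals again
coef, res_quad = ols([x, [xi ** 2 for xi in x]], y)
print("coefficients:", [round(c, 4) for c in coef])
print("largest |residual|:", max(abs(r) for r in res_quad))
```

Because these data contain no noise, the quadratic model fits exactly and its residuals are zero up to rounding; with real data, the goal in Step 3 is residuals with no systematic pattern, not residuals of zero.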
Example: Consider a firm that seeks to estimate the potential profits from the new product development projects it invests in. It decides to use two explanatory variables: (1) the R&D investment used and (2) managers’ a priori estimate of project risk before the beginning of the project. It uses data from a number of past projects to estimate a regression model. The data are as follows (Profit and R&D are in tens of thousands of dollars, and Risk is on a 1-10 scale).

PROFIT | R&D | RISK
396 | 133 | 9
130 | 82 | 8
508 | 146 | 10
172 | 90 | 8
256 | 114 | 7
31 | 54 | 8
102 | 76 | 7
102 | 72 | 8
536 | 152 | 10
102 | 75 | 8
214 | 109 | 6
200 | 92 | 9
158 | 92 | 7
31 | 61 | 7
116 | 78 | 8
120 | 90 | 6
270 | 106 | 9
270 | 112 | 8

Step 1: Though managers felt that there was some potential nonlinearity between R&D investments and profits, they did not have a good a priori notion of what this relationship might be. They decided to let the data tell them about any potential nonlinearity rather than assume a specific type of nonlinear relationship a priori.

Step 2: They estimated a linear regression with Profits as the y variable and R&D and Risk as the x variables, and used residual plots to diagnose nonlinearity problems. The residual plots are shown below.

[Figure: R&D Residual Plot (Residuals vs. R&D) and RISK Residual Plot (Residuals vs. RISK)]

Looking at the residual plot against R&D, there appears to be evidence of nonlinearity in the partial relationship between Profits and R&D. For small values of R&D (50-75), the residuals are systematically positive. For moderate values of R&D (75-125), the residuals are systematically negative. For larger values (close to 150), they are positive again. This nonlinearity in R&D suggests that we could include an R&D² term in the regression. The residual plot against RISK shows no clear pattern. So we run a regression of Profits against R&D, R&D², and Risk.
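The two regressions in Steps 2 and 3 can be sketched on the project data with the same kind of normal-equations helper. This assumes the table above was reconstructed correctly row by row from the extracted text; because of that, and because the plots are not reproduced here, the printed averages should be compared with the residual plots rather than treated as the lecture's reported results. The R&D ranges (below 75, 75 to 125, above 125) follow the description in Step 2, and `mean_residual_by_rd` is a hypothetical helper.

```python
def ols(columns, y):
    """Least squares with an intercept via the normal equations (X'X) b = X'y.

    Returns (coefficients, residuals), intercept first. Teaching-sized problems only.
    """
    n = len(y)
    X = [[1.0] + [float(col[i]) for col in columns] for i in range(n)]
    p = len(X[0])
    A = [[sum(X[r][i] * X[r][j] for r in range(n)) for j in range(p)]
         + [sum(X[r][i] * y[r] for r in range(n))] for i in range(p)]
    for c in range(p):  # Gaussian elimination with partial pivoting
        piv = max(range(c, p), key=lambda r: abs(A[r][c]))
        A[c], A[piv] = A[piv], A[c]
        for r in range(c + 1, p):
            f = A[r][c] / A[c][c]
            for k in range(c, p + 1):
                A[r][k] -= f * A[c][k]
    b = [0.0] * p
    for i in reversed(range(p)):  # back substitution
        b[i] = (A[i][p] - sum(A[i][k] * b[k] for k in range(i + 1, p))) / A[i][i]
    fitted = [sum(bj * xj for bj, xj in zip(b, row)) for row in X]
    return b, [yi - fi for yi, fi in zip(y, fitted)]


# Project data as reconstructed in the table above (Profit, R&D in $10,000s; Risk 1-10)
profit = [396, 130, 508, 172, 256, 31, 102, 102, 536, 102, 214, 200, 158, 31, 116, 120, 270, 270]
rd = [133, 82, 146, 90, 114, 54, 76, 72, 152, 75, 109, 92, 92, 61, 78, 90, 106, 112]
risk = [9, 8, 10, 8, 7, 8, 7, 8, 10, 8, 6, 9, 7, 7, 8, 6, 9, 8]

# Squared term entered as (R&D - mean)^2: same fitted values and residuals as
# R&D^2 (the columns span the same space), but better conditioned for this solver
rd_bar = sum(rd) / len(rd)
rd_sq = [(d - rd_bar) ** 2 for d in rd]


def mean_residual_by_rd(res):
    """Average residual for small (< 75), moderate (75-125) and large (> 125) R&D."""
    groups = {"small": [], "moderate": [], "large": []}
    for d, r in zip(rd, res):
        key = "small" if d < 75 else ("moderate" if d <= 125 else "large")
        groups[key].append(r)
    return {k: round(sum(v) / len(v), 2) for k, v in groups.items()}


_, res_step2 = ols([rd, risk], profit)         # Step 2: linear in R&D and Risk
_, res_step3 = ols([rd, rd_sq, risk], profit)  # Step 3: add the squared R&D term
print("Step 2:", mean_residual_by_rd(res_step2))
print("Step 3:", mean_residual_by_rd(res_step3))
```

Centering R&D before squaring changes only the meaning of the linear R&D coefficient, not the residuals, which is all this residual check uses.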
Step 3: The residual plots from the new regression are given below:

[Figure: RISK, R&D, and R&D^2 Residual Plots (Residuals vs. RISK, RD, and RD^2)]

With the new residual plots, there does not appear to be any systematic nonlinearity remaining. Hence we stop looking for further transformations and conclude that the residuals are well-behaved. Of course, we would make sure that the new results are interpretable (i.e., substantively meaningful).

Diagnosis and Correction of Non-constant Variance Problems

Unlike nonlinearity, non-constant variance problems do not bias the slope coefficients or the predicted values. Instead, we obtain incorrect prediction and confidence intervals. To understand why, look at the following picture, where we plot y against x and the residuals against x.

[Figure: y vs. x and Residuals vs. x, with the spread of the residuals increasing in x]

Here we see a clear violation of the constant variance assumption: the residuals are much smaller for small values of x than for large values of x. Intuitively, then, we can be far more confident about our predictions for small values of x than about our predictions for large values of x. However, under the constant variance assumption, the computations treat the uncertainty in the residuals (the residual variance) as the same for all values of x. Hence, with a constant variance assumption in this example, we are “under-confident” about our predictions for small values of x and “over-confident” about our predictions for large values of x. As discussed earlier, we can solve this problem by using ln(y) as the dependent variable in the regression. This enables us to get variances that are constant over the entire range of the x variable.
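A minimal sketch of why ln(y) helps when the error is multiplicative, so that its spread grows with the level of y. The data are hypothetical and noise-free apart from a fixed ±0.1 pattern on the log scale (roughly ±10% on the original scale). Spread is summarized by a root-mean-square (`rms`, our own helper) for the smaller and larger halves of x: on the original scale the deviations from the true curve grow with x, whereas after taking ln(y) the residual spread is the same in both halves.

```python
import math


def simple_ols(x, y):
    """Least-squares intercept and slope for y = b0 + b1 * x."""
    n = len(x)
    x_bar, y_bar = sum(x) / n, sum(y) / n
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    b1 = sxy / sxx
    return y_bar - b1 * x_bar, b1


def rms(values):
    """Root mean square, used here as a simple measure of residual spread."""
    return math.sqrt(sum(v * v for v in values) / len(values))


# Hypothetical data with a multiplicative error: ln(y) = 1 + 0.5 x + 0.1 * sign
x = list(range(1, 9))
signs = [1, -1, -1, 1, 1, -1, -1, 1]  # balanced, and uncorrelated with x
y = [math.exp(1 + 0.5 * xi + 0.1 * s) for xi, s in zip(x, signs)]

# On the original scale, the deviations from the true curve grow with x
dev = [yi - math.exp(1 + 0.5 * xi) for xi, yi in zip(x, y)]
print("raw-scale spread, small x vs large x:", round(rms(dev[:4]), 3), round(rms(dev[4:]), 3))

# After taking ln(y), the residual spread is the same at small and large x
log_y = [math.log(v) for v in y]
b0, b1 = simple_ols(x, log_y)
res = [ly - (b0 + b1 * xi) for xi, ly in zip(x, log_y)]
print("ln(y) fit:", round(b0, 4), round(b1, 4))
print("log-scale spread, small x vs large x:", round(rms(res[:4]), 4), round(rms(res[4:]), 4))
```

Here the raw-scale spread in the upper half is exactly $e^2$ times that in the lower half, because each upper-half point sits four units of x (a factor of $e^{0.5 \cdot 4}$ in level) above its lower-half counterpart with the same sign pattern.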
As we move to multiple regression with several x variables, it is more useful to plot the residuals not just against each of the x variables, as we did above, but also against the predicted (fitted) values of the y variable. If the solution to the non-constant variance problem is simply to transform the y variable, it is reasonable to check whether the residuals have constant variance at all predicted values of y. However, it is also possible that a single x variable is responsible for the non-constant variance problem. In that case, it is useful to identify (via residual plots against each x variable) which x variable can be transformed to achieve the desired constant residual variance.

Obtaining the plot of residuals against predicted y

Excel does not directly provide a plot of residuals against predicted y values ($\hat{y}$). However, the Excel “Residual Output” we showed earlier can be used to obtain the plot. Recall that the second column of the “Residual Output” has the predicted y values, the third column has the residuals, and the fourth column has the standardized residuals. We can therefore easily obtain a scatter plot of residuals (or standardized residuals) against the predicted y values.

Revisiting the earlier example of Profits versus R&D and Risk

We plot the standardized residuals (residuals would have been fine as well) against predicted profits and find no systematic evidence of non-constant variance. So we conclude there is no need to transform the Profit variable in this regression.

[Figure: Std Residuals vs. Predicted Profits]

Another Approach to Correct for Non-constant Variance: Normalizing the y Variable

So far, we have suggested that non-constant variance can be corrected by taking the natural logarithm of the y variable. In certain situations, an appropriate transformation is to normalize the y variable. We illustrate the idea of normalizing the variable through an example.
Suppose we had data on sales of a product in different cities, with different variables measuring the marketing mix: (1) price, (2) feature, indicating whether the product was featured in the Sunday newspaper insert, and (3) display, indicating whether the retailer prominently displayed the product at the end of the aisle. Since cities with different populations will have different levels of sales, we could consider the following regression equation:

$\text{Sales} = \beta_0 + \beta_1\,\text{Price} + \beta_2\,\text{Feature} + \beta_3\,\text{Display} + \beta_4\,\text{Population} + \varepsilon \qquad (1)$

However, this regression equation would be plagued by a non-constant variance problem, because the same price cut should lead to a much greater change in sales in a larger city (say, New York or Chicago) than in a smaller city (say, New Haven or Des Moines). Similarly, features or displays should increase sales much more in a larger city than in a smaller city. Hence we would see much greater variability in the residuals for larger cities than for smaller cities.

One way to tackle this problem is to take the natural logarithm of sales as the dependent variable. However, this would usually not be sufficient. A better solution becomes clear if we consider the reason for the non-constant variance problem. Our intuition suggests that the impact of price, feature, or display would be roughly the same on an individual no matter which town this person lives in. A more appropriate regression equation is obtained by taking the dependent variable to be Sales/Population and estimating the following regression equation:

$\dfrac{\text{Sales}}{\text{Population}} = \beta_0 + \beta_1\,\text{Price} + \beta_2\,\text{Feature} + \beta_3\,\text{Display} + \varepsilon \qquad (2)$

Thus the non-constant variance problem can be overcome in situations where it is due to the effects of scale: by dividing by the relevant scaling variable (in this case, population), we remove the scale effect. The graphs below show how using the normalized variable corrects the non-constant variance problem.
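A minimal sketch of the scale argument behind equation (2), with hypothetical numbers and only Price as a predictor (Feature and Display are omitted to keep it short). Every individual gets the same-sized disturbance of ±0.5 units of per-capita sales, so the per-capita regression has equal-sized residuals in every city, while the same disturbance measured in total sales is ten times larger in the ten-times-larger cities. This illustrates the scale effect; it does not fit equation (1) itself.

```python
def simple_ols(x, y):
    """Least-squares intercept and slope for y = b0 + b1 * x."""
    n = len(x)
    x_bar, y_bar = sum(x) / n, sum(y) / n
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    b1 = sxy / sxx
    return y_bar - b1 * x_bar, b1


# Hypothetical cities: two small (population 1) and two large (population 10)
population = [1, 1, 10, 10]
price = [2, 3, 2, 3]
per_person_error = [0.5, -0.5, -0.5, 0.5]  # same size for every individual
# Per-capita sales follow 10 - 2 * Price plus the per-person error
sales = [pop * (10 - 2 * p + e) for pop, p, e in zip(population, price, per_person_error)]

# Equation (2)-style regression with only Price: Sales/Population on Price
per_capita = [s / pop for s, pop in zip(sales, population)]
b0, b1 = simple_ols(price, per_capita)
res = [yc - (b0 + b1 * p) for p, yc in zip(price, per_capita)]

# The same per-capita residuals, expressed in units of total sales
total_units = [pop * r for pop, r in zip(population, res)]

print(b0, b1)       # 10.0 -2.0
print(res)          # [0.5, -0.5, -0.5, 0.5]: equal spread in every city
print(total_units)  # [0.5, -0.5, -5.0, 5.0]: ten times larger in the large cities
```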
The graph on the left shows the residuals from regression equation (1), while the graph on the right shows the residuals from regression equation (2). The non-constant variance problem that is evident in the graph on the left is largely absent in the graph on the right.

[Figure: left, Std Residuals vs. Predicted Sales from equation (1); right, Std Residuals vs. Predicted Per-Capita Sales from equation (2)]

A Solution using Interaction Effects: Why is this not as appealing?

Could we not solve this problem using interaction variables, by estimating the following regression equation?

$\text{Sales} = \beta_0\,\text{Population} + \beta_1\,\text{Price}\times\text{Population} + \beta_2\,\text{Feature}\times\text{Population} + \beta_3\,\text{Display}\times\text{Population} + \text{Population}\times\varepsilon \qquad (3)$

Although equations (2) and (3) are mathematically identical (equation (3) is equation (2) multiplied through by Population), equation (3) is not as appealing from the point of view of estimation. The reason is that by multiplying all the variables by Population, we introduce a high level of correlation among the X variables used in the regression. This creates potential “multicollinearity” problems. Hence the normalization approach is preferred over the use of these interaction variables.

What if some of the X variables do not have effects on a per-capita basis?

Suppose we need to include an X variable, such as advertising, whose level is proportional to population. While other X variables such as Price, Feature, and Display affect Sales per capita (Sales/Population), Advertising is related to total sales. So if we define the dependent variable to be Sales/Population, how can we include Advertising in the regression? The answer is to include Advertising on a per-capita basis as well. Such a regression would have the form:

$\dfrac{\text{Sales}}{\text{Population}} = \beta_0 + \beta_1\,\text{Price} + \beta_2\,\text{Feature} + \beta_3\,\text{Display} + \beta_4\,\dfrac{\text{Advertising}}{\text{Population}} + \varepsilon$

When to use Normalization: Common sense helps most of the time!
The approach of normalizing the dependent variable to solve the non-constant variance problem is commonly used when effects should be measured on a per-capita basis. Recall the homework problem in which we studied the effects of OSHA on accidents in companies. There we would expect OSHA to reduce accidents more in companies with more employees and less in companies with fewer employees. The appropriate dependent variable in that case would not be total accidents in the company, but Accidents/Employee. In most cases, common sense tells us when such normalization should be used. For example, when we pool data from a large number of countries to understand the impact of an environmental policy on GNP, we would use per-capita GNP rather than total GNP in order to account for the vastly different populations of different countries.

Outliers

Outliers are data points (either x or y values) that are far away from the rest of the data. We classify them into two types: Y-outliers and X-outliers.

Y-Outliers

A Y-outlier is a point whose y value lies far away from the prediction line, as seen when the data are plotted against one of the x variables. For example, see the graph below, called a Line Fit Plot in Excel, which can be obtained by checking the “Line Fit Plots” checkbox.

[Figure: X Line Fit Plot (Y and Predicted Y vs. X), with one Y-outlier]

Here the blue dots represent the data (Y) and the pink dots represent the fitted values of Y. As can be seen from the graph, there is one outlier (Y = 10, X = 24). This one point shifts the prediction line (connecting the pink points) considerably from where it would otherwise have been (passing through the other blue points). Thus a Y-outlier can seriously bias the results of the regression.

What to do when we have a Y outlier?

(1) Drop the outliers. Y outliers may occur due to errors in coding the data. For example, it is common to have a decimal point in the wrong place.
By graphing the data, we are able to recognize such errors. We can then either correct the error or drop the data point if we cannot get access to the true data. Another possibility is that the Y outlier is due to a non-representative observation. For example, if we want to understand the effects of price changes on airline ticket sales, we may wish to drop the period around September 11, 2001, because this period is quite unrepresentative of the normal behavior of airline passengers.

(2) Y outliers may require management action. Suppose we are trying to understand the relationship between salesperson performance and territory characteristics. If we find that one salesperson is an outlier in terms of superior performance, perhaps management should congratulate that person, reward them with a bonus, etc. If the person is an outlier with far-below-average performance, it may be appropriate to send the person a warning or even dismiss them. Clearly some action by management is required in these situations. However, for the purpose of understanding representative behavior, it may be useful to drop these outlier observations and then run the regression. On dropping the Y outlier, we obtain the unbiased regression line seen in the Line Fit Plot below.

[Figure: X Line Fit Plot (Y and Predicted Y vs. X) after dropping the Y-outlier]

X outliers

X outliers are data points whose values of X are far away from those of the other observations. The effect of X outliers is not to bias the estimates, but to make the confidence intervals appear far tighter than they should be and to inflate the R-square value. To understand the intuition for why X outliers artificially narrow the confidence intervals, recall our earlier discussion of which of the two cases below gives us more confidence in the regression line.
In the figure below, the regression on the left is better, because the x variable varies more there than in the regression on the right. This enables us to be more confident about the slope of the regression line on the left than about the slope of the line on the right.

[Figure: two scatter plots of y vs. x with fitted regression lines; the x values are more spread out in the left panel than in the right panel]

Also recall the formula for the standard error of the slope in a simple regression:

$s_{\hat\beta_1} = \dfrac{s}{\sqrt{S_{xx}}}, \qquad S_{xx} = \sum_i (x_i - \bar{x})^2,$

where s is the standard error of the regression. This shows that the standard error of the slope falls when there is greater spread in the x values (as measured by $S_{xx}$). An X outlier makes the $S_{xx}$ term much larger and makes the confidence intervals appear narrower.

Illustrating the Effects of X outliers:

Consider the following data and the line fit plot:

Y_1 | 13 | 11 | 15 | 13 | 16 | 11 | 9
X1 | 1 | 1 | 2 | 2 | 3 | 0 | 0

[Figure: X1 Line Fit Plot (Y_1 and Predicted Y_1 vs. X1)]

As can be seen, there are no X outliers in these data. The regression results are given below:

The regression equation is
Y_1 = 10.0 + 2.00 X1

Predictor | Coef | SE Coef | T | P
Constant | 10.0000 | 0.6622 | 15.10 | 0.000
X1 | 2.0000 | 0.4019 | 4.98 | 0.004

S = 1.095   R-Sq = 83.2%   R-Sq(adj) = 79.8%

As can be seen, the standard error of the slope coefficient is 0.4, the t-statistic is 4.98, and the R-square is 83.2%. Now consider the same data with one outlier included:

Y_1 | 13 | 11 | 15 | 13 | 16 | 11 | 9 | 210
X1 | 1 | 1 | 2 | 2 | 3 | 0 | 0 | 100

The regression equation is
Y_1 = 10.0 + 2.00 X1

Predictor | Coef | SE Coef | T | P
Constant | 10.0000 | 0.3831 | 26.10 | 0.000
X1 | 2.00000 | 0.01082 | 184.76 | 0.000

S = 1.000   R-Sq = 100.0%   R-Sq(adj) = 100.0%

Comparing these results with the previous regression, we notice that the standard error of the slope coefficient is now 0.01, the t-statistic is 184.76, and the R-square is 100%. Intuitively, we understand that one data point should not have so much influence on the standard errors and the R-square.
This happens because the additional data point has an X value that is far away from the other values of X, causing $S_{xx}$ to increase dramatically. In this problem, $S_{xx}$ = 7.4 without the outlier, but with the outlier it increases to 8533. However, as stated earlier, the X outlier does not bias the estimates: we obtain the same estimates with and without it.

[Figure: Regression Plot, Y_1 = 10 + 2 X1, S = 1, R-Sq = 100.0%, R-Sq(adj) = 100.0% (Y_1 vs. X1)]

What to do when we have an X outlier?

As with the Y outlier, it makes sense to drop the X outlier and run the regression without it.

Y and X outliers

When we have both Y and X outliers, the effect combines both outlier effects. The Y outlier causes bias, while the X outlier causes the estimates to have narrower confidence intervals as well as a higher R-square.

Y_1 | 13 | 11 | 15 | 13 | 16 | 11 | 9 | 210
X1 | 1 | 1 | 2 | 2 | 3 | 0 | 0 | 50

In the previous example, the estimated regression was Y_1 = 10 + 2 X1. Consider the last data point: according to that regression, when X1 = 50 we would expect Y_1 = 110. Here, however, Y_1 = 210. Thus we have both an X outlier (X1 = 50 is far away from the other X values) and a Y outlier (Y_1 = 210 is far away from 110, the value predicted without the outlier).

The regression equation is
Y_1 = 7.41 + 4.05 X1

Predictor | Coef | SE Coef | T | P
Constant | 7.4147 | 0.9678 | 7.66 | 0.000
X1 | 4.04547 | 0.05454 | 74.17 | 0.000

R-square = 99.9%

As expected, the estimates are now biased (the intercept differs from 10 and the slope differs from 2). The standard errors are quite small and the t-statistics are large. The R-square is close to 100%. Thus, with both Y and X outliers, we get biased estimates, confidence intervals that are too narrow, and an R-square that is too high.
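The three regressions above can be reproduced with a short simple-regression routine, which also makes the role of $S_{xx}$ explicit. This is a sketch in plain Python rather than the statistics package the output came from; it uses $s/\sqrt{S_{xx}}$ for the standard error of the slope, and the function name `fit` and the case labels are our own.

```python
import math


def fit(x, y):
    """Simple regression y = b0 + b1 x: estimates, SE of the slope, R-square, Sxx."""
    n = len(x)
    x_bar, y_bar = sum(x) / n, sum(y) / n
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    sse = sum((yi - intercept - slope * xi) ** 2 for xi, yi in zip(x, y))
    sst = sum((yi - y_bar) ** 2 for yi in y)
    s = math.sqrt(sse / (n - 2))  # standard error of the regression
    return {"intercept": intercept, "slope": slope, "se_slope": s / math.sqrt(sxx),
            "r2": 1 - sse / sst, "sxx": sxx}


y1 = [13, 11, 15, 13, 16, 11, 9]
x1 = [1, 1, 2, 2, 3, 0, 0]

cases = {
    "no outlier": (x1, y1),
    "X outlier at (100, 210)": (x1 + [100], y1 + [210]),
    "X and Y outlier at (50, 210)": (x1 + [50], y1 + [210]),
}
results = {name: fit(x, y) for name, (x, y) in cases.items()}
for name, r in results.items():
    print(f"{name}: b0={r['intercept']:.4f}, b1={r['slope']:.5f}, "
          f"SE(b1)={r['se_slope']:.5f}, R-sq={100 * r['r2']:.1f}%, Sxx={r['sxx']:.1f}")
```

Adding the point (100, 210), which lies exactly on the original line, leaves the estimates unchanged but multiplies $S_{xx}$ by more than a thousand, which is what shrinks the standard error of the slope. Moving that point to X1 = 50 while keeping Y_1 = 210 changes the estimates themselves.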