9 Basics of Thermal Physics


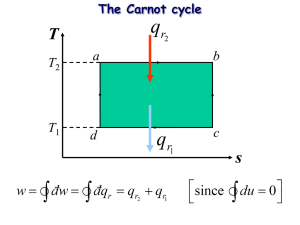

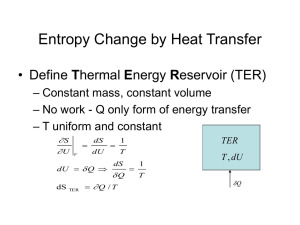

Thermal physics is reconstructed here from information theory using two physical ingredients: the $k$ in entropy is now Boltzmann's constant, and the average energy of a system has a corresponding Lagrange multiplier, $\beta$, equal to $1/kT$. In this chapter we will develop the fundamentals of thermodynamics from statistical mechanics.

Properties of Z

Various calculations can be performed relatively easily once the partition function $Z$ is known. Remember that the discrete partition function is defined by the expression

$$Z = \sum_i e^{-\beta E_i}.$$

Two very useful relations are

$$\langle E \rangle = -\frac{\partial \ln Z}{\partial \beta} \tag{1}$$

and

$$\sigma^2 = \frac{\partial^2 \ln Z}{\partial \beta^2}, \tag{2}$$

where $\sigma$ is the standard deviation of energy.

1. Use the definition of $Z$ to derive Eqs. (1) and (2).
2. Given that an electron in a magnetic field $B$ has only the energies $E = \pm\mu B$, find the partition function and use it to calculate the average energy. ans:

Thermodynamic Entropy

We want to demonstrate (again) that, with sufficient qualifications, information entropy is mathematically equivalent to thermodynamic entropy. That is, for reversible systems,

$$dS = \frac{dQ}{T}.$$

Consider the differential of the average energy condition $\langle E \rangle = \sum_j p_j E_j$, such that

$$d\langle E \rangle = \sum_j E_j\, dp_j + \sum_j p_j\, dE_j. \tag{3}$$

This can be compared with the first law of thermodynamics,

$$dE = dQ + dW, \tag{4}$$

where $dQ$ and $dW$ represent heat going into the system and work done on the system. We identify $dQ$ as the energy change due to the system occupying different available energy levels (the $dp_j$ term). Similarly, we identify $dW$ with a mechanical shift in the energy levels (the $dE_j$ term). A system that undergoes a reversible process is able to return to its original configuration with no level changes. In such cases, $d\langle E \rangle = dQ$.

Since the partition function is central to calculations in statistical mechanics, it is useful to express entropy (as well as energy) in terms of $Z$:

$$S = -k \sum_i p_i \ln p_i = k\left(\beta \langle E \rangle + \ln Z\right). \tag{5}$$

Taking the differential of $S$ for a reversible process gives the desired equivalence,

$$dS = k\left(\beta\, d\langle E \rangle + \langle E \rangle\, d\beta + d\ln Z\right) = k\beta\, d\langle E \rangle = \frac{dQ}{T},$$

where we used Eq. (1) to effect the simplification: with the energy levels fixed, $d\ln Z = -\langle E \rangle\, d\beta$.

3. Derive $S = k\left(\beta \langle E \rangle + \ln Z\right)$ using $S = -k \sum_i p_i \ln p_i$ and the canonical distribution.

Classical thermodynamics defines entropy with the relation

$$dS = \frac{dQ_{\text{rev}}}{T}.$$

It is worth repeating that, in our dynamic interpretation of temperature, entropy is a measure of the average number of state changes accessible to the system. Note that this applies only to reversible increments of heat. Heat added irreversibly cannot be fully utilized for state transitions. Consequently, for the same change of state, $dQ_{\text{rev}}/T$ for an orderly reversible process must be greater than $dQ/T$ for an irreversible process. An important conclusion is

$$dS = \frac{dQ_{\text{rev}}}{T} \ge \frac{dQ}{T}, \tag{6}$$

where the equality applies only for reversible processes (and closed systems). Reversibility is a convenient fiction because entropy is a state variable*: a real, irreversible process can be replaced by a reversible process that brings the system to the same end point.

* A state variable is one that does not depend on its history. For example, the gravitational potential energy of an aircraft sitting on an airfield does not depend on how high it once flew.

Outline of Thermodynamics I-Basics

Equations (4) and (6) correspond to the first and second laws of thermodynamics:

$$dE = dQ + dW \tag{4}$$

$$dS \ge \frac{dQ}{T} \tag{6}$$

Combining these for reversible processes we have

$$dE = T\, dS + dW. \tag{7}$$

This is the fundamental expression of thermodynamics for reversible systems (and we almost always "replace" real systems with reversible equivalents). Often, work is done on the surroundings by pressure $P$ altering volume $V$ so that $dW = -P\, dV$. We will see other forms of work in subsequent chapters.

We usually know some relation between thermodynamic variables. Some examples include state equations like the ideal gas relation, energy relations like $E \propto V T^4$, or entropy relations.
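As a numerical aside, the partition-function relations in Eqs. (1), (2), and (5) can be checked directly for a small discrete system. The following is a minimal sketch, not part of the original notes: the three energy levels, the value of $\beta$, the finite-difference step, and the choice of units with $k = 1$ are all illustrative assumptions.

```python
import math

def ln_Z(beta, energies):
    """Log of the discrete partition function Z = sum_i exp(-beta * E_i)."""
    return math.log(sum(math.exp(-beta * E) for E in energies))

def canonical_probs(beta, energies):
    """Canonical probabilities p_i = exp(-beta * E_i) / Z."""
    weights = [math.exp(-beta * E) for E in energies]
    Z = sum(weights)
    return [w / Z for w in weights]

# Hypothetical energy levels and inverse temperature (units with k = 1).
energies = [0.0, 1.0, 2.5]
beta = 0.7
d_beta = 1e-3  # finite-difference step in beta (assumed value)

# Direct averages over the canonical distribution.
p = canonical_probs(beta, energies)
mean_E = sum(pi * E for pi, E in zip(p, energies))
var_E = sum(pi * (E - mean_E) ** 2 for pi, E in zip(p, energies))

# Eq. (1): <E> = -d(ln Z)/d(beta), estimated by a central difference.
mean_E_from_Z = -(ln_Z(beta + d_beta, energies)
                  - ln_Z(beta - d_beta, energies)) / (2 * d_beta)

# Eq. (2): sigma^2 = d^2(ln Z)/d(beta)^2, estimated by a second difference.
var_E_from_Z = (ln_Z(beta + d_beta, energies) - 2 * ln_Z(beta, energies)
                + ln_Z(beta - d_beta, energies)) / d_beta ** 2

# Eq. (5): S = -k sum_i p_i ln p_i = k (beta <E> + ln Z), with k = 1.
S_info = -sum(pi * math.log(pi) for pi in p)
S_from_Z = beta * mean_E + ln_Z(beta, energies)

print(mean_E, mean_E_from_Z)  # each pair should agree closely
print(var_E, var_E_from_Z)
print(S_info, S_from_Z)
```

Each printed pair compares a quantity computed from the probabilities with the same quantity obtained from $\ln Z$; small differences in the first two pairs reflect only the finite-difference approximation.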
Given one such relation, the problem is frequently to derive other relations between variables. Since we know in principle how to find entropy, we illustrate the procedure for relating variables to entropy. Write a physical expression for $dS$ based on Eq. (7) and compare this with a mathematical identity:

$$\text{Physics:}\quad dS = \frac{dE}{T} + \frac{P}{T}\, dV$$

$$\text{Math:}\quad dS = \left(\frac{\partial S}{\partial E}\right)_V dE + \left(\frac{\partial S}{\partial V}\right)_E dV$$

The comparison gives

$$\left(\frac{\partial S}{\partial E}\right)_V = \frac{1}{T} \quad\text{and}\quad \left(\frac{\partial S}{\partial V}\right)_E = \frac{P}{T}. \tag{8}$$

This illustrates a pattern whereby various alterations of Eq. (7), the physics, are compared to the corresponding mathematical identity. This enables thermodynamicists to wring varied relations from some known quantity. In the illustration, we assumed $S$ was known.

4. Later we will find that $S$ for an ideal gas has the form $S = kN \ln V + f(T, E)$. Use this to find an expression for $P$ in terms of $V$ and $T$. [ans. $PV = nRT$]
5. Starting with the differential $dU = T\, dS - P\, dV$ (physics), write the corresponding mathematical identity and show that $T = \left(\dfrac{\partial U}{\partial S}\right)_V$ and $P = -\left(\dfrac{\partial U}{\partial V}\right)_S$.

Outline of Thermodynamics II-Potentials

Some analyses or problems are best expressed in terms of a particular set of independent variables. For instance, a process that is both adiabatic ($dQ = 0$ or $S$ = constant) and isochoric ($V$ = constant) is conveniently expressed by a function of $S$ and $V$, $E = E(S, V)$. Similarly, an isothermal and isochoric process is easily described by a function of $T$ and $V$, $F = F(T, V)$. A number of "thermodynamic potentials" like these are available. The following are the most familiar thermodynamic potentials:

| Potential | Name | Relation | Eq. |
|---|---|---|---|
| $E(S,V)$ | System Energy | $dE = T\, dS - P\, dV$ | (9) |
| $S(E,V)$ | System Entropy | $dS = \dfrac{dE}{T} + \dfrac{P}{T}\, dV$ | (10) |
| $F(T,V)$ | Helmholtz Free Energy | $F = E - TS$ | (11) |
| $G(T,P)$ | Gibbs Free Energy | $G = F + PV$ | (12) |

6. Demonstrate that Helmholtz Free Energy, $F = E - TS$, is a function of independent variables $T$ and $V$. (That is, show that $dF$ is an expression that varies with $dT$ and $dV$.)

Helmholtz Free Energy $F$ is very useful for converting statistical mechanical information into thermodynamic expressions.
In particular, we can show that $F$ is simply related to the partition function by the now familiar expression

$$F = -kT \ln Z. \tag{13}$$

Once $F$ is known, $P$ and $S$ can be found from the relations

$$P = -\left(\frac{\partial F}{\partial V}\right)_T \quad\text{and}\quad S = -\left(\frac{\partial F}{\partial T}\right)_V. \tag{14}$$

7. Derive Eqs. (14).

One of the most usual routines for applying statistical mechanics is (i) calculate the partition function, (ii) find $F$ from Eq. (13), and (iii) generate state equations from Eqs. (14).

8. In one dimension an increment of work is the product of an average force $f$ and a distance $dL$. The combined first and second law for reversible processes is then

   $$dE = T\, dS - f\, dL,$$

   where, in this case, $f$ is the force exerted by the system on the environment (the one-dimensional equivalent of pressure).

   (a) Show that for this system,

   $$f = -\left(\frac{\partial F}{\partial L}\right)_T \quad\text{and}\quad S = -\left(\frac{\partial F}{\partial T}\right)_L.$$

   (b) Show that the partition function for a (fictitious) one-dimensional ideal gas of $N$ particles in a "box" of length $L$ is

   $$Z = \left[\left(\frac{2\pi m}{\beta}\right)^{1/2} \frac{L}{h}\right]^N.$$

   (c) Derive the state equation for this one-dimensional gas, $f L = NkT$.
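The three-step routine described above (partition function, then $F$ from Eq. (13), then state equations from derivatives of $F$) can be sketched numerically for the one-dimensional gas of Problem 8. This is an illustrative check under stated assumptions, not a substitute for the derivations the problem asks for: it takes the form of $Z$ quoted in part (b) as given, uses arbitrary units with $k = h = m = 1$, and picks hypothetical values for $N$, $T$, and $L$. The derivatives are approximated by central differences.

```python
import math

# Hypothetical parameters in arbitrary units with k = h = m = 1.
k, h_planck, m = 1.0, 1.0, 1.0
N = 100

def ln_Z(T, L):
    """Step (i): ln Z, with Z = [sqrt(2*pi*m/beta) * L / h]^N as in 8(b)."""
    beta = 1.0 / (k * T)
    return N * math.log(math.sqrt(2.0 * math.pi * m / beta) * L / h_planck)

def helmholtz(T, L):
    """Step (ii): F = -k T ln Z, Eq. (13)."""
    return -k * T * ln_Z(T, L)

def force(T, L, dL=1e-5):
    """Step (iii): f = -(dF/dL) at fixed T, by central difference."""
    return -(helmholtz(T, L + dL) - helmholtz(T, L - dL)) / (2.0 * dL)

def entropy(T, L, dT=1e-5):
    """Step (iii): S = -(dF/dT) at fixed L, by central difference."""
    return -(helmholtz(T + dT, L) - helmholtz(T - dT, L)) / (2.0 * dT)

T, L = 1.5, 2.0  # assumed temperature and box length
f = force(T, L)

print(f * L, N * k * T)  # the two values should agree closely
print(entropy(T, L))
```

The first printed pair compares $fL$ from the numerical derivative with $NkT$, the state equation of part (c). Changing `T`, `L`, or `N` should leave the agreement intact, since $\ln Z$ depends on $L$ only through the term $N \ln L$.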