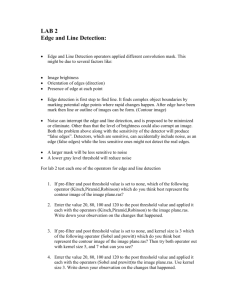

ECS 271 Midterm Examination


Your name____________________________________________


Spring 2003

15 May 2003

You have 80 minutes.

This examination has 80 points.

(Answers to a subset of questions)

Instructions:

This examination is open text book and open class notes.

Write your answers in the space provided. If you need extra space, use the back side of the paper.

Be clear. Show all necessary work. Write legibly.

START HERE

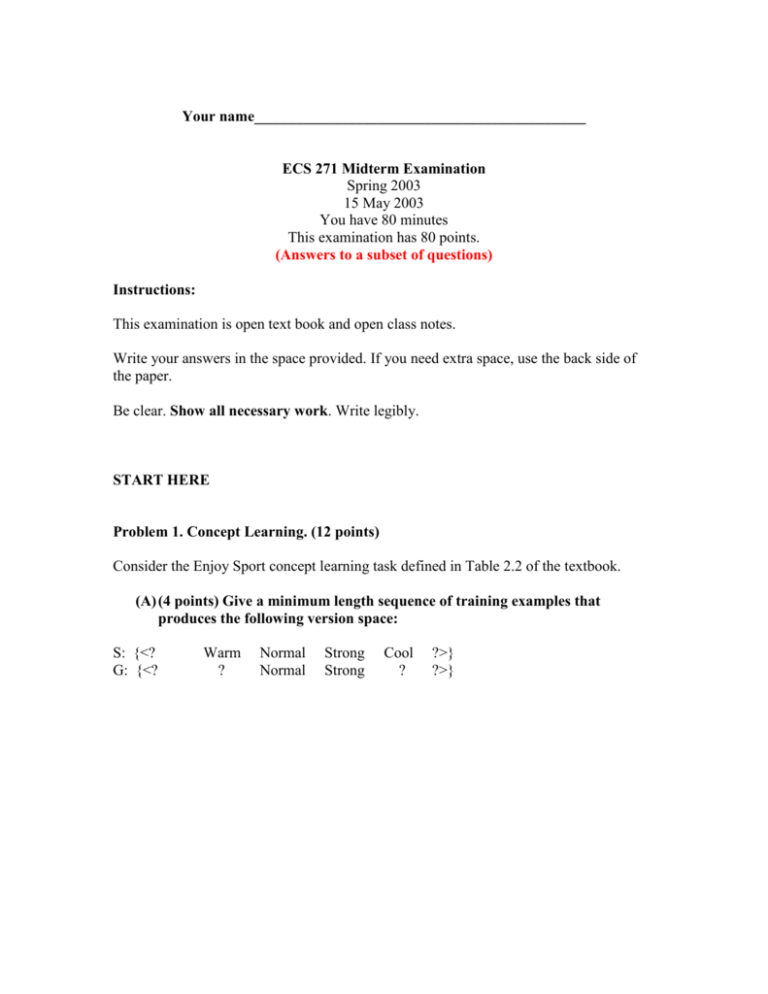

Problem 1. Concept Learning. (12 points)

Consider the Enjoy Sport concept learning task defined in Table 2.2 of the textbook.

(A) (4 points) Give a minimum-length sequence of training examples that produces the following version space:

S: {<?, Warm, Normal, Strong, Cool, ?>}

G: {<?, ?, Normal, Strong, ?, ?>}

(B) (4 points) How many additional examples are required to make the version space converge to the following target concept? Explain.

{<?, ?, Normal, Strong, ?, ?>}

(C) (4 points) Explain why there is never more than one specific model (say, in the Allergy example discussed in class or the Play Tennis example discussed in the text).

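Suggested Answer: It is not possible to produce multiple specific models because of the way the generalization rule works. Recall that the generalization rule merges the existing model with a positive example by inserting a "don't care" symbol into the existing model in positions where the two differ. This merge is deterministic: a given model and example yield exactly one result, and the final model (keep each value on which all positive examples agree, "don't care" everywhere else) does not depend on the order in which the examples arrive. Accordingly, there is just one specific model.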
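The generalization rule can be sketched in Python as follows, assuming conjunctive hypotheses stored as tuples in which "?" is the "don't care" symbol. The three positive examples are hypothetical and use the EnjoySport attribute order (Sky, AirTemp, Humidity, Wind, Water, Forecast); trying every presentation order shows that the rule always lands on the same single specific model.

```python
from itertools import permutations

def generalize(h, example):
    """Minimal generalization of hypothesis h so that it covers a positive example.

    h is None before any positive example has been seen; afterwards it is a
    tuple whose entries are attribute values or "?" ("don't care").
    """
    if h is None:
        return tuple(example)
    # keep a value where model and example agree, otherwise insert "?"
    return tuple(hv if hv == ev else "?" for hv, ev in zip(h, example))

def specific_model(positives):
    h = None
    for example in positives:
        h = generalize(h, example)
    return h

# Hypothetical positive examples (Sky, AirTemp, Humidity, Wind, Water, Forecast)
positives = [
    ("Sunny", "Warm", "Normal", "Strong", "Warm", "Same"),
    ("Sunny", "Warm", "High", "Strong", "Warm", "Same"),
    ("Sunny", "Warm", "High", "Strong", "Cool", "Change"),
]

# every presentation order produces the same, single specific model
models = {specific_model(order) for order in permutations(positives)}
print(models)  # {('Sunny', 'Warm', '?', 'Strong', '?', '?')}
```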

Problem 2. Neural Nets (12 points)

(A) (4 points) Consider a neural net with step-function threshold units. (i) Suppose that you multiply all weights and thresholds by a constant. Will the behavior of the network change? (ii) Suppose that you add a constant to all weights and thresholds. Will the behavior of the network change? Explain using the language and notation of mathematics.

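Suggested Solution: (i) The neurons in such a network fire if and only if the weighted sum of the inputs is above a threshold:

$$\sum_k w_k i_k > T,$$

where $T$ is the threshold.

This inequality is not disturbed when you multiply both sides by the same positive constant $c$: $\sum_k (c\,w_k)\, i_k > cT$ holds exactly when $\sum_k w_k i_k > T$, so the behavior of the network does not change. (Multiplying by a negative constant would reverse the inequality.)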

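Suggested Solution: (ii) The neurons in such a network fire if and only if the weighted sum of the inputs is above a threshold:

$$\sum_k w_k i_k > T,$$

where $T$ is the threshold.

This inequality IS disturbed when you add a constant $c$ to every weight and to the threshold: the new condition $\sum_k (w_k + c)\, i_k > T + c$ is equivalent to $\sum_k w_k i_k + c\left(\sum_k i_k - 1\right) > T$, and the extra term depends on the inputs, so the behavior of the network can change.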
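A quick numerical check of both claims, as a Python sketch: it evaluates a single step-threshold unit with hypothetical weights and threshold on every binary input vector, first with everything multiplied by a positive constant and then with the same constant added. If every unit computes the same function, so does any network built from them.

```python
from itertools import product

def fires(weights, threshold, inputs):
    """Step-threshold unit: outputs 1 iff sum_k w_k * i_k > T."""
    return 1 if sum(w * i for w, i in zip(weights, inputs)) > threshold else 0

def behavior(weights, threshold):
    # outputs of the unit on every binary input vector
    return [fires(weights, threshold, x) for x in product([0, 1], repeat=len(weights))]

w, T = [0.5, -1.0, 2.0], 1.0   # hypothetical weights and threshold
c = 3.0                        # a positive constant

original = behavior(w, T)
scaled = behavior([c * wk for wk in w], c * T)      # multiply weights and threshold
shifted = behavior([wk + c for wk in w], T + c)     # add to weights and threshold

print(original == scaled)   # True
print(original == shifted)  # False
```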

(B) (4 points) Does backpropagation descend the error surface that corresponds to the training set error or to the true error of the network? Explain in one or two sentences.

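Suggested Solution: Training error. You cannot compute the true error, because it depends on the unknown distribution from which instances are drawn. What you can calculate is the error on the training set, and that is the error whose gradient backpropagation propagates backward and descends.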

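A minimal Python sketch of what "descending the training error" means, assuming a single sigmoid unit (the one-unit case of backpropagation) and a hypothetical four-example training set. The error being minimized, $E(w) = \tfrac12\sum_d (t_d - o_d)^2$, sums only over the training examples $d$; nothing in the update refers to unseen instances.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def training_error(w, data):
    # E(w) = 1/2 * sum over the TRAINING examples of (t - o)^2
    return 0.5 * sum((t - sigmoid(w[0] + w[1] * x)) ** 2 for x, t in data)

def gradient_step(w, data, lr):
    """One batch gradient-descent step on the training error E(w)."""
    g0 = g1 = 0.0
    for x, t in data:
        o = sigmoid(w[0] + w[1] * x)
        delta = (t - o) * o * (1 - o)   # equals -dE/dnet for this example
        g0 += delta
        g1 += delta * x
    return [w[0] + lr * g0, w[1] + lr * g1]

data = [(0.0, 0), (1.0, 0), (2.0, 1), (3.0, 1)]   # hypothetical training set
w = [0.0, 0.0]
errors = [training_error(w, data)]
for _ in range(500):
    w = gradient_step(w, data, lr=0.5)
    errors.append(training_error(w, data))

print(errors[0], errors[-1])   # the training error goes down
```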
(C) (4 points) Sigmoid threshold units can be used to approximate perceptrons arbitrarily closely. Describe sigmoid-unit weights that very closely approximate the following 3-input perceptron:

$o(x_1, x_2, x_3) = 1$ if …, and $o(x_1, x_2, x_3) = 0$ otherwise.
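The perceptron's firing condition is not preserved above, so this Python sketch uses a hypothetical 3-input perceptron with bias weight $w_0 = -0.5$ and $w_1 = w_2 = w_3 = 1$ (it fires when at least one binary input is 1). It illustrates the general idea: keep the perceptron's weights, multiply all of them by a large constant $k$, and the sigmoid of the scaled net input approaches the step function at every input where the net input is not exactly 0.

```python
import math
from itertools import product

def net_input(w, x):
    # w[0] is the bias weight (minus the threshold); w[1:] multiply the inputs
    return w[0] + sum(wi * xi for wi, xi in zip(w[1:], x))

def perceptron(w, x):
    return 1 if net_input(w, x) > 0 else 0

def sigmoid_unit(w, x, k):
    # sigmoid of the perceptron's net input, with every weight multiplied by k
    z = k * net_input(w, x)
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)            # numerically stable branch for large negative z
    return e / (1.0 + e)

def max_gap(w, k):
    """Largest |sigmoid - perceptron| over all binary input vectors."""
    return max(abs(sigmoid_unit(w, x, k) - perceptron(w, x))
               for x in product([0, 1], repeat=len(w) - 1))

w = [-0.5, 1.0, 1.0, 1.0]      # hypothetical perceptron: fires if any input is 1
for k in (1, 10, 100):
    print(k, max_gap(w, k))    # the gap shrinks toward 0 as k grows
```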

Problem 3. Decision Trees (8 points)

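(a) (4 points) Assume we train a decision tree to predict Z from A, B, and C, using the following data.

| Z | A | B | C |
|---|---|---|---|
| 0 | 0 | 0 | 0 |
| 0 | 0 | 0 | 1 |
| 0 | 0 | 0 | 1 |
| 0 | 0 | 1 | 0 |
| 0 | 0 | 1 | 1 |
| 1 | 0 | 1 | 1 |
| 0 | 1 | 0 | 0 |
| 1 | 1 | 0 | 1 |
| 1 | 1 | 1 | 0 |
| 1 | 1 | 1 | 0 |
| 0 | 1 | 1 | 1 |
| 1 | 1 | 1 | 1 |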

What would be the training set error for this data set? Express your answer as the number of records out of 12 that would be misclassified.

Suggested Solution: Four pairs of records have duplicate input values (A, B, C), but only two of these pairs, (0, 1, 1) and (1, 1, 1), have contradictory output values. One record of each contradictory pair will always be misclassified, so the training set error is 2 out of 12.
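A short Python check of this count, assuming the tree is grown without pruning until no further split is possible, so that each leaf holds records with identical (A, B, C) values and predicts their majority label. The records are the twelve rows of the data set above, written as (Z, A, B, C).

```python
from collections import Counter, defaultdict

# (Z, A, B, C) records from the data set above
records = [
    (0, 0, 0, 0), (0, 0, 0, 1), (0, 0, 0, 1), (0, 0, 1, 0),
    (0, 0, 1, 1), (1, 0, 1, 1), (0, 1, 0, 0), (1, 1, 0, 1),
    (1, 1, 1, 0), (1, 1, 1, 0), (0, 1, 1, 1), (1, 1, 1, 1),
]

def min_training_errors(records):
    """Errors of a fully grown tree: each distinct input predicts its majority label."""
    labels_by_input = defaultdict(Counter)
    for z, a, b, c in records:
        labels_by_input[(a, b, c)][z] += 1
    # every record that disagrees with its input's majority label is misclassified
    return sum(sum(cnt.values()) - max(cnt.values()) for cnt in labels_by_input.values())

print(min_training_errors(records), "out of", len(records))  # 2 out of 12
```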

(b) (4 points) Consider a decision tree built from an arbitrary set of data. If the output is discrete-valued and can take on k different possible values, what is the maximum training set error (expressed as a fraction) that any data set could possibly have?

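Suggested Solution: The answer is (k-1)/k. Consider a data set in which every record has identical inputs but the outputs are evenly distributed among the k classes. The tree can predict only one class for that input, so for every k records it gets one classification correct and (k-1) wrong. No data set can do worse, because the majority class in any leaf accounts for at least 1/k of the records in that leaf.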
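The worst case can be checked with exact fractions in a few lines of Python, assuming (as in the solution) that all records share one input value and that each class label appears equally often (`copies` times per class, a hypothetical parameter).

```python
from collections import Counter
from fractions import Fraction

def worst_case_error(k, copies=1):
    """Training error of any tree on records that share one input value
    but whose labels are spread evenly over k classes."""
    labels = [cls for cls in range(k) for _ in range(copies)]
    # the single leaf for that input can predict only one class
    correct = max(Counter(labels).values())
    return Fraction(len(labels) - correct, len(labels))

for k in (2, 3, 4, 10):
    print(k, worst_case_error(k))   # equals (k - 1) / k
```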

Problem 4. Genetic Algorithms (8 points)

(a) (4 points) Two parents are given below. Show suitable positions for breaks for the crossover operator.

Parent 1: ( * ( X0 ( + x4 x8 ) ) x5 ( SQRT 5 ) )

Parent 2: ( SQRT ( X0 ( + x4 x8 ) ) )

Suggested solution: First draw the equivalent tree. All the non-leaf nodes represent mathematical operators operating on one, two, or more operands. When you cut and paste branches, make sure that no operation is violated, i.e., every operator still receives the operands it needs.

Then I expect you to tell me ALL the places where the branches can be cut.
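A small Python sketch that parses the two parents as prefix expressions and lists every subtree below the root, i.e., every point where a branch can be cut without splitting an operator from its operands. It assumes the first element of each parenthesized group is the operator (so `X0` is treated as a one-operand operator, exactly as written) and leaves the root out; whether the whole tree may also be swapped is a design choice. The helper names are hypothetical.

```python
def tokenize(expr):
    return expr.replace("(", " ( ").replace(")", " ) ").split()

def parse(tokens):
    """Parse a prefix s-expression into nested lists: [operator, operand, ...]."""
    token = tokens.pop(0)
    if token != "(":
        return token                      # a leaf: variable or constant
    node = []
    while tokens[0] != ")":
        node.append(parse(tokens))
    tokens.pop(0)                         # drop the closing ")"
    return node

def crossover_points(tree, path=()):
    """Yield (path, subtree) for every node below the root.

    Paths index operands only (position 1 onward), so a cut never separates an
    operator from its operands and every operator keeps its arity.
    """
    if isinstance(tree, list):
        for i, child in enumerate(tree[1:], start=1):
            yield path + (i,), child
            yield from crossover_points(child, path + (i,))

def to_str(tree):
    return tree if isinstance(tree, str) else "( " + " ".join(map(to_str, tree)) + " )"

parent1 = parse(tokenize("( * ( X0 ( + x4 x8 ) ) x5 ( SQRT 5 ) )"))
parent2 = parse(tokenize("( SQRT ( X0 ( + x4 x8 ) ) )"))

for name, tree in (("Parent 1", parent1), ("Parent 2", parent2)):
    print(name)
    for path, sub in crossover_points(tree):
        print("  cut at", path, "->", to_str(sub))
```

Crossover then swaps any subtree listed for Parent 1 with any subtree listed for Parent 2.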

(b) (4 points) Consider populations of size L of N-bit chromosomes. Show that the number of different populations is $\binom{L + 2^N - 1}{2^N - 1}$.

Show all the work clearly.
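A population can be viewed as a multiset of L chromosomes drawn from the $2^N$ possible N-bit strings (order does not matter, repeats allowed). Writing it as L stars divided into $2^N$ bins by $2^N - 1$ bars gives $\binom{L + 2^N - 1}{2^N - 1}$ arrangements. The Python sketch below checks this count by brute-force enumeration for small L and N, under that unordered-with-repeats assumption.

```python
from itertools import combinations_with_replacement, product
from math import comb

def count_by_enumeration(L, N):
    chromosomes = ["".join(bits) for bits in product("01", repeat=N)]  # all 2**N strings
    # each multiset of size L is generated exactly once, in sorted order
    return sum(1 for _ in combinations_with_replacement(chromosomes, L))

def count_by_formula(L, N):
    types = 2 ** N
    return comb(L + types - 1, types - 1)

for L in range(1, 5):
    for N in range(1, 4):
        assert count_by_enumeration(L, N) == count_by_formula(L, N)
print(count_by_formula(4, 3))  # 330
```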

Problem 5. Gaussians (12 points)

Consider a two-class classification problem. The two classes are $\omega_1$ and $\omega_2$, and $P(\omega_1)$ and $P(\omega_2)$ are their prior probabilities. The classifier is said to assign a feature vector $x$ to class $\omega_i$ if

$$g_i(x) > g_j(x) \quad \text{for all } j \neq i.$$

Here $g_i(x)$ is called a discriminant function. A popular choice for $g_i$ is

$$g_i(x) = \ln p(x \mid \omega_i) + \ln P(\omega_i).$$

(a) (4 points) Write the discriminant function if $p(x \mid \omega_i) \sim N(\mu_i, \Sigma_i)$. Remember that $x$ and $\mu_i$ are vectors and $\Sigma_i$ is a matrix.
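For reference, substituting the $d$-dimensional normal density into $g_i(x) = \ln p(x \mid \omega_i) + \ln P(\omega_i)$ gives the standard form

$$g_i(x) = -\tfrac12 (x-\mu_i)^T \Sigma_i^{-1} (x-\mu_i) - \tfrac{d}{2}\ln 2\pi - \tfrac12 \ln\lvert\Sigma_i\rvert + \ln P(\omega_i).$$

A dependency-free Python sketch that evaluates it, assuming each $\Sigma_i$ is symmetric positive definite. It uses a Cholesky factorization $\Sigma_i = LL^T$, so that $(x-\mu_i)^T\Sigma_i^{-1}(x-\mu_i) = \lVert L^{-1}(x-\mu_i)\rVert^2$ and $\ln\lvert\Sigma_i\rvert = 2\sum_k \ln L_{kk}$. The function names and the two-class example are hypothetical.

```python
import math

def cholesky(a):
    """Lower-triangular L with a = L L^T (a must be symmetric positive definite)."""
    n = len(a)
    L = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = a[i][j] - sum(L[i][k] * L[j][k] for k in range(j))
            L[i][j] = math.sqrt(s) if i == j else s / L[j][j]
    return L

def forward_substitution(L, b):
    """Solve L z = b for lower-triangular L."""
    z = []
    for i in range(len(b)):
        z.append((b[i] - sum(L[i][k] * z[k] for k in range(i))) / L[i][i])
    return z

def discriminant(x, mu, sigma, prior):
    """g_i(x) = ln N(x; mu_i, Sigma_i) + ln P(w_i)."""
    d = len(x)
    L = cholesky(sigma)
    z = forward_substitution(L, [xk - mk for xk, mk in zip(x, mu)])
    mahalanobis_sq = sum(zk * zk for zk in z)             # (x-mu)^T Sigma^-1 (x-mu)
    log_det = 2.0 * sum(math.log(L[k][k]) for k in range(d))
    return (-0.5 * mahalanobis_sq - 0.5 * d * math.log(2 * math.pi)
            - 0.5 * log_det + math.log(prior))

def classify(x, classes):
    """Index i of the class with the largest g_i(x); classes = [(mu, sigma, prior), ...]."""
    scores = [discriminant(x, mu, sigma, prior) for mu, sigma, prior in classes]
    return max(range(len(scores)), key=scores.__getitem__)

# hypothetical two-class problem
classes = [([0.0, 0.0], [[1.0, 0.0], [0.0, 1.0]], 0.5),
           ([3.0, 3.0], [[2.0, 0.5], [0.5, 1.0]], 0.5)]
print(classify([0.5, 0.2], classes))   # 0
print(classify([2.8, 3.1], classes))   # 1
```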

(b) (4 points) Calculate the entropy of the normalized Gaussian distribution.

Show your work.
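One way to carry out the calculation for $N(\mu, \sigma^2)$: $-\ln p(x) = \tfrac12\ln(2\pi\sigma^2) + \frac{(x-\mu)^2}{2\sigma^2}$, and taking the expectation with $E[(x-\mu)^2] = \sigma^2$ gives $H = \tfrac12\ln(2\pi\sigma^2) + \tfrac12 = \tfrac12\ln(2\pi e\sigma^2)$ nats, independent of the mean. The Python sketch below checks this closed form against a midpoint-rule integral; the ±12σ range and the step count are arbitrary choices.

```python
import math

def gaussian_pdf(x, mu, sigma):
    return math.exp(-0.5 * ((x - mu) / sigma) ** 2) / (sigma * math.sqrt(2 * math.pi))

def numerical_entropy(mu, sigma, half_width=12.0, steps=100_000):
    """Midpoint-rule estimate of -integral p(x) ln p(x) dx over mu +/- half_width * sigma."""
    a = mu - half_width * sigma
    h = 2 * half_width * sigma / steps
    total = 0.0
    for k in range(steps):
        p = gaussian_pdf(a + (k + 0.5) * h, mu, sigma)
        if p > 0:                      # guard against ln(0) far out in the tails
            total -= p * math.log(p) * h
    return total

def closed_form_entropy(sigma):
    return 0.5 * math.log(2 * math.pi * math.e * sigma ** 2)

for mu, sigma in [(0.0, 1.0), (3.0, 2.5)]:
    print(sigma, numerical_entropy(mu, sigma), closed_form_entropy(sigma))
```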

(c) (4 points) You are given a normal distribution. You do not know its mean, $\mu$. You want to estimate it. To do this, you take a sample $x_1, x_2, \ldots, x_R$, and the best you can do is to calculate the sample mean. Show that the best estimate of the unknown mean is the sample mean.
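A sketch of the standard argument, assuming "best" means the maximum-likelihood estimate, that the sample points are drawn independently, and treating the variance $\sigma^2$ as fixed while maximizing over $\mu$:

```latex
\ln L(\mu) = \sum_{r=1}^{R} \ln p(x_r \mid \mu)
           = -\frac{R}{2}\ln\left(2\pi\sigma^2\right) - \frac{1}{2\sigma^2}\sum_{r=1}^{R} (x_r - \mu)^2

\frac{d \ln L}{d\mu} = \frac{1}{\sigma^2}\sum_{r=1}^{R} (x_r - \mu) = 0
\quad\Longrightarrow\quad
\hat{\mu} = \frac{1}{R}\sum_{r=1}^{R} x_r

\frac{d^2 \ln L}{d\mu^2} = -\frac{R}{\sigma^2} < 0
\quad \text{(so this stationary point is the maximum)}
```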

Problem 6. PAC Learning/VC Dimension, etc. (12 points)

(a) (4 points) True or False. If the answer is False, give a counterexample. If it is True, give a one-sentence justification.

Within the setting of the PAC model, it is impossible to assure with probability 1 that the concept will be learned perfectly (i.e., with true error = 0), regardless of how many training examples are provided.

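Suggested solution: TRUE. In this setting, instances are drawn at random, so there is always a nonzero probability that a finite sample omits instances needed to identify the concept exactly. Therefore we can never be certain that we will see a sufficient number of training examples in any finite sample of instances.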
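A small Python illustration of this point under a toy assumption: instances are drawn uniformly from n equally likely instances, and certainty would require seeing every one of them. The exact probability (by inclusion-exclusion) that m random draws cover all n instances approaches 1 as m grows but never reaches it.

```python
from fractions import Fraction
from math import comb

def prob_all_seen(n, m):
    """Exact P(m i.i.d. uniform draws from n instances include every instance)."""
    # inclusion-exclusion over the set of instances that are never drawn
    return sum((-1) ** j * comb(n, j) * Fraction(n - j, n) ** m for j in range(n + 1))

for m in (3, 10, 50, 200):
    p = prob_all_seen(3, m)
    print(m, float(p), p < 1)   # p approaches 1 but stays strictly below it
```

For large m the float rounds to 1.0, while the exact `Fraction` comparison still reports that the probability is below 1.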

Consider the class of concepts H2p defined by conjunctions of two arbitrary perceptrons. More precisely, each hypothesis $h(x): X \to \{0,1\}$ in H2p is of the form $h(x) = p_1(x) \wedge p_2(x)$, where $p_1(x)$ and $p_2(x)$ are any two-input perceptrons. The figure below illustrates one such classifier in two dimensions.

Draw figure here

(b) (4 points) Draw a set of three points in the plane that cannot be shattered by H2p.

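Suggested solution: Note that each hypothesis forms a "V"-shaped region in the plane, where points within the V are labeled positive. Three collinear points cannot be shattered, because no "V" can capture the labeling that includes the two outermost points without including the middle point: each perceptron's positive region is a half-plane, the intersection of two half-planes is convex, and any convex set that contains the two outer points also contains the segment between them, including the middle point.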

(c) (4 points) What is the VC dimension of H2p? (Partial credit will be given if you can bound it, so show your reasoning.)

Suggested solution: The VC dimension is 5. You can shatter a set of 5 points spaced evenly around the circumference of a circle. You cannot shatter a set of 6 evenly spaced points on the circumference, because you cannot capture the labeling that alternates as + - + - + -: each of the three negative points must be excluded by one of the two perceptrons, and no single line can separate two of the negative points from all three positive ones. (This does not prove that NO set of six points can be shattered, but you will get full credit if you at least show this type of argument.)

Problem 7. Cross Validation (16 points)

Suppose we are learning a classifier with binary output values Y = 0 and Y = 1. There is one real-valued input X. Here is the data set.

| X | Y |
|---|---|
| 1 | 0 |
| 2 | 0 |
| 3 | 0 |
| 4 | 0 |
| 6 | 1 |
| 7 | 1 |
| 8 | 1 |
| 8.5 | 0 |
| 9 | 1 |
| 10 | 1 |

Assume that we will learn a decision tree on this data. Assume that when the decision tree splits on the real-valued attribute X, it puts the split threshold halfway between the two attribute values that surround the split. For example, if information gain is used as the splitting criterion, the decision tree would choose a split point halfway between x = 4 and x = 6.

Let DT2 be the method of learning a decision tree with only two leaf nodes (i.e., only one split). Let DT* be the method of learning the decision tree fully (with no pruning). You will now be asked a number of questions about error. In all these questions, you can express the error as the number of misclassifications out of 10.

(a) (4 points) What will be the training set error of DT2 on our example?

Suggested answer: 1/10. The split is at the halfway point x = 5, so the only misclassified point is x = 8.5.

(b) (4 points) What will be the leave-one-out cross-validation error of DT2 on our example?

Suggested answer: 1/10. In every fold the decision tree places its single split between the block of 0s on the left and the block of 1s on the right (at x = 5 in most folds), and the left-out point is consistent with the prediction in every fold except the one where it is x = 8.5.

(c) (4 points) What will be the training set error of DT* on our example?

Suggested answer: 0/10. The fully grown tree has no inconsistencies: every leaf node is pure, because no two training points share the same value of X.

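(d) (4 points) What will be the leave-one-out cross-validation error of DT* on our example?

Suggested answer: 3/10. The left-out points that will be wrongly predicted are x = 8.0, x = 8.5, and x = 9.0: when any one of them is removed, the thresholds built from the remaining points place it inside a leaf labeled with the opposite class.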
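The four answers in this problem can be reproduced with a short Python sketch. It assumes that DT2 picks, among all midpoints between neighboring X values, the one with the highest information gain, and that DT*, grown until every leaf is pure, ends up with a threshold at every midpoint where the label changes; leaves predict their majority label. The function names are hypothetical.

```python
import bisect
import math
from collections import Counter

X = [1, 2, 3, 4, 6, 7, 8, 8.5, 9, 10]
Y = [0, 0, 0, 0, 1, 1, 1, 0, 1, 1]

def entropy(labels):
    n = len(labels)
    return -sum(c / n * math.log2(c / n) for c in Counter(labels).values())

def majority(labels):
    return Counter(labels).most_common(1)[0][0]

def fit_dt2(xs, ys):
    """One split at the midpoint with the highest information gain; leaves predict the majority."""
    pts = sorted(zip(xs, ys))
    best = None
    for (x1, _), (x2, _) in zip(pts, pts[1:]):
        t = (x1 + x2) / 2
        left = [y for x, y in pts if x < t]
        right = [y for x, y in pts if x > t]
        # minimizing the weighted child entropy is the same as maximizing information gain
        cost = (len(left) * entropy(left) + len(right) * entropy(right)) / len(pts)
        if best is None or cost < best[0]:
            best = (cost, t, majority(left), majority(right))
    _, t, left_label, right_label = best
    return lambda x: left_label if x < t else right_label

def fit_full(xs, ys):
    """Unpruned 1-D tree, assumed to cut at every midpoint where the label changes."""
    pts = sorted(zip(xs, ys))
    changes = [(a, b) for a, b in zip(pts, pts[1:]) if a[1] != b[1]]
    cuts = [(a[0] + b[0]) / 2 for a, b in changes]
    leaf_labels = [pts[0][1]] + [b[1] for _, b in changes]
    return lambda x: leaf_labels[bisect.bisect_right(cuts, x)]

def training_error(fit):
    model = fit(X, Y)
    return sum(model(x) != y for x, y in zip(X, Y))

def loocv_error(fit):
    errors = 0
    for i in range(len(X)):
        model = fit(X[:i] + X[i + 1:], Y[:i] + Y[i + 1:])   # train without point i
        errors += model(X[i]) != Y[i]
    return errors

for name, fit in (("DT2", fit_dt2), ("DT*", fit_full)):
    print(name, training_error(fit), "/10 training,", loocv_error(fit), "/10 LOOCV")
```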

The PAC learning bounds state that "with probability $(1 - \delta)$ the learner will succeed in outputting a hypothesis with error at most $\epsilon$." This suggests that there is some randomized experiment with the learner that, if repeated $n$ times, would be expected to succeed $n(1 - \delta)$ times. What exactly is this experiment? Explain.
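One common reading, sketched in Python under toy assumptions: a single run of the experiment draws a fresh training set of m examples i.i.d. from the distribution D, runs the learner on it, and counts a success if the returned hypothesis has true error at most $\epsilon$ with respect to D. The probability is over the random draw of the training set. The target concept (x ≥ 0.5 on Uniform[0, 1]) and the learner (threshold at the smallest positive example) are hypothetical; for this pair the success probability is exactly $1 - (1 - \epsilon)^m$, so here $\delta = (1 - \epsilon)^m$.

```python
import random

def run_trial(m, eps, rng):
    """One run: draw m training examples from D = Uniform[0, 1], learn, test true error."""
    xs = [rng.random() for _ in range(m)]
    positives = [x for x in xs if x >= 0.5]          # hypothetical target: c(x) = 1 iff x >= 0.5
    t = min(positives) if positives else 1.0         # learner: h(x) = 1 iff x >= t
    true_error = t - 0.5   # probability mass of [0.5, t), where h and c disagree
    return true_error <= eps

def success_rate(m, eps, trials, seed=0):
    rng = random.Random(seed)
    return sum(run_trial(m, eps, rng) for _ in range(trials)) / trials

m, eps = 20, 0.1
print(success_rate(m, eps, 10_000))   # empirical fraction of successful runs
print(1 - (1 - eps) ** m)             # exact success probability for this toy setup, about 0.878
```

Repeating the run n times, about $n(1 - \delta)$ of the runs succeed, which is the frequency statement in the question.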