L12_Matrices

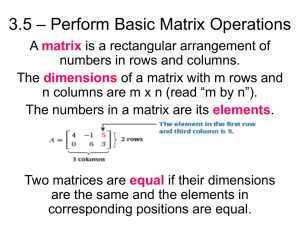

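Matrices: Let us consider the data for a one-way ANOVA, our wage-ethnicity data. Let's write the data out as:

| Ethnicity | Wage (five observations) |
|---|---|
| A | 5.90 5.92 5.91 5.89 5.88 |
| B | 5.51 5.50 5.50 5.49 5.50 |
| C | 5.01 5.00 4.99 4.98 5.02 |

And our model:

$$Y_{ij} = \mu_i + \epsilon_{ij}$$

So, if we think about it from a regression point of view, we could write Wage as Y and Ethnicity as X. For the mathematical model to be fitted, we need to write Ethnicity as NUMBERS. We can dummy code it, defining X1 and X2 such that:

$$X_1 = \begin{cases} 1 & \text{if race} = A \\ 0 & \text{otherwise} \end{cases} \qquad X_2 = \begin{cases} 1 & \text{if race} = B \\ 0 & \text{otherwise} \end{cases}$$

So now we can write our MODEL as:

$$Y_{ij} = \beta_0 + \beta_1 x_1 + \beta_2 x_2 + \epsilon_{ij}$$

So the data should REALLY be written as:

| Int | X1 | X2 | Y |
|---|---|---|---|
| 1 | 1 | 0 | 5.90 |
| 1 | 1 | 0 | 5.92 |
| 1 | 1 | 0 | 5.91 |
| 1 | 1 | 0 | 5.89 |
| 1 | 1 | 0 | 5.88 |
| 1 | 0 | 1 | 5.51 |
| 1 | 0 | 1 | 5.50 |
| 1 | 0 | 1 | 5.50 |
| 1 | 0 | 1 | 5.49 |
| 1 | 0 | 1 | 5.50 |
| 1 | 0 | 0 | 5.01 |
| 1 | 0 | 0 | 5.00 |
| 1 | 0 | 0 | 4.99 |
| 1 | 0 | 0 | 4.98 |
| 1 | 0 | 0 | 5.02 |

Essentially this is what SAS or R or MINITAB is doing in the background. However, this is the REGRESSION take on ANOVA (also called the SET TO ZERO model). Generally in ANOVA we use the SUM to ZERO idea to deal with the last category. So the data would look like:

| Int | X1 | X2 | Y |
|---|---|---|---|
| 1 | 1 | 0 | 5.90 |
| 1 | 1 | 0 | 5.92 |
| 1 | 1 | 0 | 5.91 |
| 1 | 1 | 0 | 5.89 |
| 1 | 1 | 0 | 5.88 |
| 1 | 0 | 1 | 5.51 |
| 1 | 0 | 1 | 5.50 |
| 1 | 0 | 1 | 5.50 |
| 1 | 0 | 1 | 5.49 |
| 1 | 0 | 1 | 5.50 |
| 1 | -1 | -1 | 5.01 |
| 1 | -1 | -1 | 5.00 |
| 1 | -1 | -1 | 4.99 |
| 1 | -1 | -1 | 4.98 |
| 1 | -1 | -1 | 5.02 |

This is because we assume $\sum_i \alpha_i = 0$, where $\alpha_i$ is the deviation of group $i$ from the overall mean $\mu$ (that is, $\mu_i = \mu + \alpha_i$).

General Linear Models:

$$Y = X\beta + \epsilon$$

$$\begin{bmatrix} Y_1 \\ Y_2 \\ \vdots \\ Y_n \end{bmatrix} = \begin{bmatrix} 1 & X_{11} & X_{21} & X_{31} & X_{41} \\ \vdots & \vdots & \vdots & \vdots & \vdots \\ 1 & X_{1n} & X_{2n} & X_{3n} & X_{4n} \end{bmatrix} \begin{bmatrix} \beta_0 \\ \beta_1 \\ \beta_2 \\ \beta_3 \\ \beta_4 \end{bmatrix} + \begin{bmatrix} \epsilon_1 \\ \epsilon_2 \\ \vdots \\ \epsilon_n \end{bmatrix}$$

- $Y$: response vector
- $X$: design matrix
- $\beta$: parameter vector
- $\epsilon$: error vector

This is called the Matrix Notation for the General Linear Model.

Matrices: What is a matrix? A rectangular array of numbers. Examples:

$$A = \begin{bmatrix} a & b \\ c & d \end{bmatrix}, \qquad B = \begin{bmatrix} 1 & 2 & 4 \\ 3 & 5 & 2 \end{bmatrix}$$

Dimension of a Matrix: p (= number of rows) by q (= number of columns). A is 2 by 2 and B is 2 by 3. What is the dimension of:

$$C = \begin{bmatrix} 4 & 1 & 2 & 4 \\ 3 & 2 & 5 & 9 \\ 4 & 7 & 8 & 1 \end{bmatrix}$$

The number 4 can be thought of as a matrix of dimension 1 by 1, but not vice versa.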
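The two codings of the wage data described above (set to zero and sum to zero) can be sketched in plain Python. This is only an illustrative sketch: the helper names `set_to_zero_row` and `sum_to_zero_row` are hypothetical, and a statistical package may order or label its columns differently.

```python
# Wage-ethnicity data from the notes: five observations per group.
groups = ["A"] * 5 + ["B"] * 5 + ["C"] * 5
wages = [5.90, 5.92, 5.91, 5.89, 5.88,
         5.51, 5.50, 5.50, 5.49, 5.50,
         5.01, 5.00, 4.99, 4.98, 5.02]


def set_to_zero_row(group):
    """Dummy coding: [Int, X1, X2]; the last category (C) is coded 0, 0."""
    return [1, int(group == "A"), int(group == "B")]


def sum_to_zero_row(group):
    """Sum-to-zero coding: [Int, X1, X2]; the last category (C) is coded -1, -1."""
    if group == "C":
        return [1, -1, -1]
    return [1, int(group == "A"), int(group == "B")]


# Each design matrix is a list of rows, one row per observation.
X_set = [set_to_zero_row(g) for g in groups]
X_sum = [sum_to_zero_row(g) for g in groups]

# Dimension: number of rows by number of columns.
print(len(X_set), "by", len(X_set[0]))

# Show one row per group under both codings.
for i in (0, 5, 10):
    print(groups[i], X_set[i], X_sum[i], wages[i])
```

With balanced groups, the X1 and X2 columns of the sum-to-zero matrix each add up to 0, which mirrors the constraint that the group effects sum to zero.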
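Notation: $A = [a_{ij}]$ or $A = ((a_{ij}))$

Square Matrix: A square matrix has the same number of rows and columns. Examples:

$$\begin{bmatrix} 4 & 1 \\ 3 & 2 \end{bmatrix} \ (2 \text{ by } 2), \qquad \begin{bmatrix} 4 & 1 & 2 \\ 3 & 2 & 5 \\ 4 & 7 & 8 \end{bmatrix} \ (3 \text{ by } 3)$$

Vector: A matrix with one of its dimensions equal to 1. A matrix with one column is a column vector; a matrix with one row is a row vector.

$$\begin{bmatrix} 4 \\ 3 \\ 4 \end{bmatrix}, \qquad \begin{bmatrix} 6 & 4 & 1 & 2 \end{bmatrix}$$

Transpose: Interchanging the rows and the columns gives us the transpose of a matrix.

$$A = \begin{bmatrix} 4 & 1 & 2 \\ 3 & 2 & 5 \\ 4 & 7 & 8 \end{bmatrix}, \qquad A' = A^{t} = \begin{bmatrix} 4 & 3 & 4 \\ 1 & 2 & 7 \\ 2 & 5 & 8 \end{bmatrix}$$

Hence if $A = [a_{ij}]$ then $A' = [a_{ji}]$.

Two matrices are equal if they have the same dimensions and all their corresponding elements are equal: $A = B$ implies $a_{ij} = b_{ij}$.

Adding Matrices: We can add matrices of the same dimensions by adding the corresponding elements of the two matrices. Example:

$$A = \begin{bmatrix} 2 & 3 \\ 6 & 5 \end{bmatrix}, \quad B = \begin{bmatrix} 1 & 8 \\ 4 & 9 \end{bmatrix}, \quad C = A + B = \begin{bmatrix} 3 & 11 \\ 10 & 14 \end{bmatrix}$$

Subtracting follows the same rules. Note that we can add and subtract only matrices of exactly the same dimensions.

Multiplying matrices:

1. Multiplying a matrix by a scalar: A scalar is an ordinary number, so multiplying a matrix by a scalar is elementwise multiplication. Each element of the matrix is multiplied by the scalar. E.g. $cA = [c\,a_{ij}]$.
2. Multiplying a matrix by another matrix: We can form the product $A_1 A_2$ if the number of columns in $A_1$ is the same as the number of rows in $A_2$. In matrix multiplication, each element in a row of the first matrix is multiplied by the corresponding element in a column of the second, and the products are summed.

Example:

$$\begin{bmatrix} a & b \\ c & d \end{bmatrix} \begin{bmatrix} 1 & 2 \\ 3 & 4 \end{bmatrix} = \begin{bmatrix} 1a + 3b & 2a + 4b \\ 1c + 3d & 2c + 4d \end{bmatrix}$$

$$\begin{bmatrix} 1 & 2 \\ 3 & 4 \end{bmatrix} \begin{bmatrix} a & b \\ c & d \end{bmatrix} = \begin{bmatrix} 1a + 2c & 1b + 2d \\ 3a + 4c & 3b + 4d \end{bmatrix}$$

Hence, unlike with scalars, the order of multiplication matters. One can multiply A by B (forming AB) as long as the number of columns in A is the same as the number of rows in B.

Special Matrices:

Symmetric Matrix: A matrix A is symmetric if $A' = A$. If

$$A = \begin{bmatrix} 4 & 3 & 4 \\ 3 & 2 & 7 \\ 4 & 7 & 8 \end{bmatrix}$$

then even if we transpose A we still get back the same matrix.

Diagonal Matrix: A diagonal matrix is a square matrix whose only non-zero entries lie along the main diagonal. All other entries are 0.

$$\begin{bmatrix} 4 & 0 & 0 \\ 0 & 2 & 0 \\ 0 & 0 & 8 \end{bmatrix}$$

Identity Matrix: An identity matrix is a diagonal matrix in which all the elements on the diagonal are 1 and all the other elements are 0.

$$\begin{bmatrix} 1 & 0 \\ 0 & 1 \end{bmatrix}, \qquad \begin{bmatrix} 1 & 0 & 0 \\ 0 & 1 & 0 \\ 0 & 0 & 1 \end{bmatrix}, \qquad \begin{bmatrix} 1 & 0 & \cdots & 0 \\ 0 & 1 & \cdots & 0 \\ \vdots & \vdots & \ddots & \vdots \\ 0 & 0 & \cdots & 1 \end{bmatrix}$$

Idempotent Matrices: A matrix is idempotent if the matrix multiplied by itself yields itself: $AA = A$. Idempotent matrices are necessarily square. They need not be symmetric, but when an idempotent matrix is also symmetric we have $A'A = A$.

Linear Dependence: The columns $C_1, \dots, C_c$ of a matrix are linearly dependent when c scalars $k_1, \dots, k_c$ (not ALL zero) can be found such that

$$k_1 C_1 + k_2 C_2 + \dots + k_c C_c = 0$$

$$A = \begin{bmatrix} 1 & 2 & 4 \\ 2 & 3 & 6 \\ 4 & 4 & 8 \end{bmatrix} \quad \text{with columns } C_1, C_2, C_3$$

In the above matrix A, $C_3 = 2C_2$, so taking $k_1 = 0$ and $k_2 = -2k_3$ (for example $k_2 = 2$, $k_3 = -1$) gives 0. Hence we have linear dependence, and we say the columns of the matrix are linearly dependent. If the equality holds ONLY when all the $k_i$ are 0, the columns are linearly independent.

The maximum number of linearly independent columns (equivalently, rows) of a matrix is its RANK. A matrix whose columns are all linearly independent is called FULL RANK.

Dividing Matrices: We cannot divide matrices as we do regular numbers. Instead, we multiply one matrix by the inverse of the other. So to "divide" A by B, we multiply A by the inverse of B.

Inverting matrices: The inverse of a matrix A, written $A^{-1}$, is such that $AA^{-1} = I$, the identity matrix. Also $A^{-1}A = I$. Example:

$$A = \begin{bmatrix} a & b \\ c & d \end{bmatrix} \quad \text{Then,} \quad A^{-1} = \frac{1}{ad - bc} \begin{bmatrix} d & -b \\ -c & a \end{bmatrix}$$

provided $ad - bc \neq 0$.

In our context we are interested in the Least Squares Estimates for $Y = X\beta + \epsilon$. These are obtained by minimizing

$$\epsilon'\epsilon = (Y - X\beta)'(Y - X\beta)$$

which gives

$$\hat{\beta} = (X'X)^{-1}X'Y, \qquad \mathrm{Var}(\hat{\beta}) = (X'X)^{-1}\sigma^2$$

To remember things and relate them to scalar terms: $Q = \epsilon'\epsilon$, $(X'X)$ corresponds to $S_{xx}$, and $X'Y$ corresponds to $S_{xy}$.

Writing the design matrix for 2 factors with interactions:

| A | B | unit | y |
|---|---|---|---|
| 1 | 1 | 1 | 10.9 |
| 1 | 1 | 2 | 10.9 |
| 1 | 2 | 1 | 10.5 |
| 1 | 2 | 2 | 9.8 |
| 1 | 3 | 1 | 9.7 |
| 1 | 3 | 2 | 10.0 |
| 2 | 1 | 1 | 11.3 |
| 2 | 1 | 2 | 11.7 |
| 2 | 2 | 1 | 9.4 |
| 2 | 2 | 2 | 10.2 |
| 2 | 3 | 1 | 8.8 |
| 2 | 3 | 2 | 9.2 |
| 3 | 1 | 1 | 12.8 |
| 3 | 1 | 2 | 12.2 |
| 3 | 2 | 1 | 12.7 |
| 3 | 2 | 2 | 12.6 |
| 3 | 3 | 1 | 12.7 |
| 3 | 3 | 2 | 12.3 |

Design matrix columns: a1, a2, b1, b2, a1b1, a1b2, a2b1, a2b2

Some Design Matrix Practice: We have a 2 by 2 design (A at 2 levels, B at 2 levels) with two reps each. The data are below:

| A | B | y |
|---|---|---|
| A1 | B1 | 2.1 |
| A1 | B1 | 3.4 |
| A1 | B2 | 2.6 |
| A1 | B2 | 2.7 |
| A2 | B1 | 2.9 |
| A2 | B1 | 3.9 |
| A2 | B2 | 3.6 |
| A2 | B2 | 3.7 |

We have a 2 by 2 with 3 blocks of size 4.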
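
| A | B | U | y |
|---|---|---|---|
| A1 | B1 | 1 | 2.1 |
| A1 | B1 | 2 | 3.4 |
| A1 | B1 | 3 | 3.4 |
| A1 | B2 | 1 | 2.6 |
| A1 | B2 | 2 | 2.7 |
| A1 | B2 | 3 | 3.4 |
| A2 | B1 | 1 | 2.9 |
| A2 | B1 | 2 | 3.9 |
| A2 | B1 | 3 | 3.4 |
| A2 | B2 | 1 | 3.6 |
| A2 | B2 | 2 | 3.7 |
| A2 | B2 | 3 | 3.4 |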
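
To tie the design matrix to the least squares formula, here is a minimal sketch for the 2 by 2 practice data with two reps each. It assumes a sum-to-zero coding (first level +1, second level -1) with columns intercept, A, B and A×B; that column choice is an assumption for illustration, not necessarily the coding the exercise intends. The helpers `transpose`, `matmul` and `solve` are hypothetical names written for this sketch. Rather than forming $(X'X)^{-1}$ explicitly, the code solves the normal equations $(X'X)\beta = X'Y$, which yields the same estimate when X is full rank.

```python
# 2 by 2 practice data with two reps per cell (from the notes).
A = ["A1"] * 4 + ["A2"] * 4
B = ["B1", "B1", "B2", "B2"] * 2
y = [2.1, 3.4, 2.6, 2.7, 2.9, 3.9, 3.6, 3.7]


def transpose(M):
    """Interchange rows and columns of a matrix stored as a list of rows."""
    return [list(col) for col in zip(*M)]


def matmul(P, Q):
    """Matrix product PQ; needs (columns of P) == (rows of Q)."""
    return [[sum(p * q for p, q in zip(row, col)) for col in zip(*Q)]
            for row in P]


def solve(M, rhs):
    """Solve M x = rhs by Gauss-Jordan elimination with partial pivoting."""
    n = len(M)
    aug = [[float(v) for v in M[i]] + [float(rhs[i])] for i in range(n)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("matrix is singular (not full rank)")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(n):
            if r != col:
                factor = aug[r][col] / aug[col][col]
                aug[r] = [v - factor * p for v, p in zip(aug[r], aug[col])]
    return [aug[i][n] / aug[i][i] for i in range(n)]


# Assumed sum-to-zero coding: first level +1, second level -1.
a_code = [1 if level == "A1" else -1 for level in A]
b_code = [1 if level == "B1" else -1 for level in B]

# Design matrix columns: intercept, A, B, A-by-B interaction.
X = [[1, ai, bi, ai * bi] for ai, bi in zip(a_code, b_code)]

Xt = transpose(X)
XtX = matmul(Xt, X)                                   # X'X, a 4 by 4 matrix
XtY = [sum(x * yi for x, yi in zip(col, y)) for col in Xt]  # X'Y
beta_hat = solve(XtX, XtY)                            # solves (X'X) beta = X'Y

print("X'X =", XtX)
print("beta_hat =", beta_hat)
```

Because the design is balanced and the coding is ±1, X'X comes out diagonal here, so each estimate is just the corresponding entry of X'Y divided by the number of observations. With a set-to-zero coding or an unbalanced design, X'X would generally not be diagonal, and the general solve step would do real work.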