INFOBrief

Dell™ PowerEdge™ 2650

High Performance Computing Clusters

Key Points

High Performance Computing Clusters (HPCC) offer a cost-effective, scalable solution for parallel computing system platforms designed for demanding, compute-intensive applications.

Dell Product Group

The second generation of Dell’s High Performance Computing Cluster (HPCC) provides compute-intensive capacity using the latest technology available on the market.

HPCC is a cost-effective way to deliver a parallel computing system platform targeted at compute- and data-intensive applications.

Through Dell HPCC, users can aggregate standards-based server and storage resources into powerful, supercomputer-class systems at a relatively low cost.

High Performance Computing Clusters (HPCCs) are a popular way to solve complex computational problems because of their low price points and excellent scalability.


Updated November 2003

Dell helps provide investment protection by offering solutions based on industry-standard building blocks that can be redeployed as traditional application servers when users integrate newer technology into their network infrastructures.

Dell delivers high-volume, standards-based solutions to scientific and compute-intensive environments that can benefit from economies of scale and can add systems as requirements change.

Dell’s technology and methodology are designed to provide high

reliability, price/performance leadership, easy scalability and

simplicity by bundling order codes for hardware, software and

support services for 8, 16, 32, and 64 node clusters.

Product Description


The concept of HPCC, or “Beowulf” clusters (the project name used by the original designers), originated at the Center of Excellence in Space Data and Information Sciences (CESDIS), located at the NASA Goddard Space Flight Center in Maryland. The project’s goal was to design a cost-effective parallel computing cluster, built from off-the-shelf components, that would satisfy the computational requirements of the earth and space sciences community.

As cluster solutions have gained acceptance for solving complex computing problems, High Performance Computing Clusters (HPCC) are starting to replace supercomputers in this role. The low cost of commodity HPCC systems has shifted the purchase decision from evaluating expensive proprietary solutions, where cost was not the primary issue, to evaluating vendors on their ability to deliver exceptional price-to-performance ratios and support capabilities.

Logical View of a High Performance Computing Cluster

External

Storage

Dell Product Group

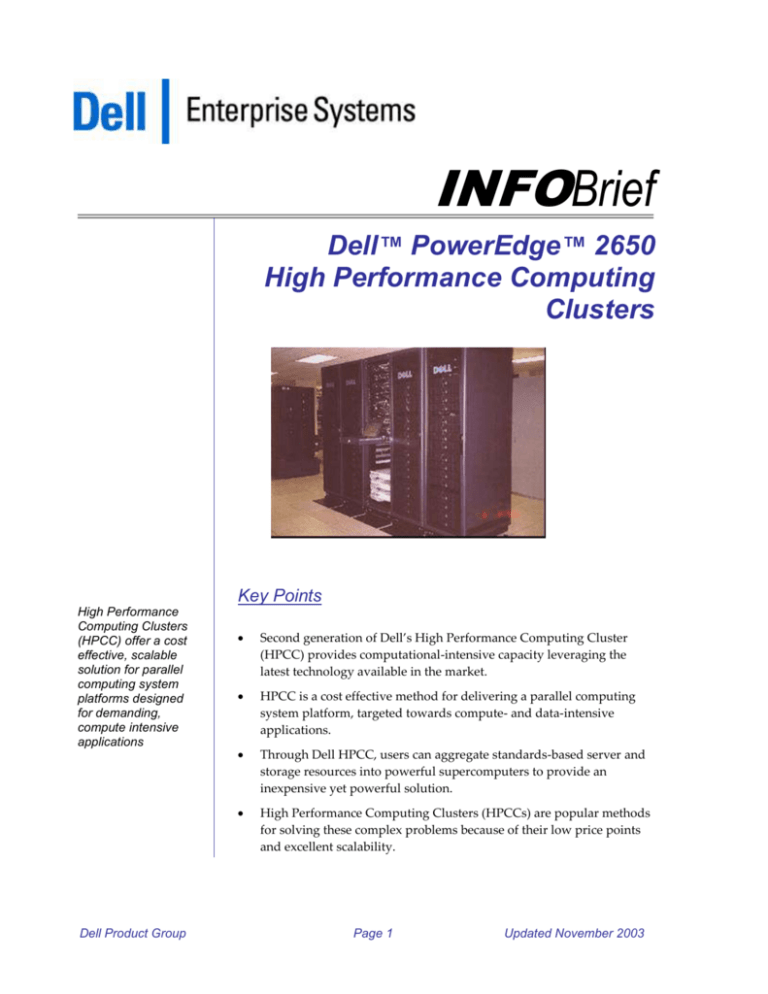

The strategy behind parallel computing is to “divide and conquer.” By

dividing a complex problem into smaller component tasks that can be

worked on simultaneously, the problem can often be solved more quickly.

This can help save time and resources, as well as monetary costs. Dell’s

HPCC uses a multi-computer architecture, as depicted in Figure 1. It

features a parallel computing system that consists of one master node and

multiple compute nodes connected via standard network interconnects.

All of the server nodes in a typical HPCC run an industry-standard operating system, which can offer substantial savings over proprietary operating systems.
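The “divide and conquer” strategy can be illustrated with a minimal Python sketch. It splits one large computation (a sum of squares, chosen only as a stand-in workload) into near-equal chunks, computes each chunk in a separate worker process and combines the partial results. Local processes play the role of compute nodes here. This is an assumption made for illustration: on a real cluster the chunks would be distributed across machines by the job scheduler and message-passing middleware, not by Python’s multiprocessing module.

```python
from multiprocessing import Pool


def partial_sum_of_squares(bounds):
    """Work done by one stand-in 'compute node': sum i*i over [start, stop)."""
    start, stop = bounds
    return sum(i * i for i in range(start, stop))


def split_range(n, parts):
    """Divide [0, n) into `parts` contiguous chunks whose sizes differ by at most 1."""
    step, extra = divmod(n, parts)
    chunks, start = [], 0
    for k in range(parts):
        stop = start + step + (1 if k < extra else 0)
        chunks.append((start, stop))
        start = stop
    return chunks


def parallel_sum_of_squares(n, workers=4):
    chunks = split_range(n, workers)                          # divide
    with Pool(processes=workers) as pool:
        partials = pool.map(partial_sum_of_squares, chunks)   # conquer in parallel
    return sum(partials)                                      # combine


if __name__ == "__main__":
    n = 200_000
    result = parallel_sum_of_squares(n)
    assert result == sum(i * i for i in range(n))  # same answer as the serial loop
    print(result)
```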



The master node of the cluster acts as the server for the Network File System (NFS), job scheduling and security, and as a gateway for end users. The master node assigns each compute node one or more tasks as the larger job is broken into sub-functions. As a gateway, the master node allows users to gain access to the compute nodes.

The sole task of the compute nodes is to execute assigned tasks in parallel. A compute node does not have a keyboard, mouse, video card or monitor; access to compute nodes is provided through remote connections via the master node.

From a user's perspective, an HPCC appears as a Massively Parallel Processor (MPP) system. Users typically access the master node either directly or through Telnet or remote login from personal workstations. Once logged onto the master node, users can prepare and compile their parallel applications and spawn jobs on the desired number of compute nodes in the cluster.
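As a rough illustration of spawning jobs on a desired number of compute nodes, the sketch below builds a list of process slots from a set of compute-node hostnames. It fills both CPUs of each dual-processor node before moving to the next. The hostnames are hypothetical, and the one-hostname-per-line output is an assumed format. The exact launcher command and host-file syntax depend on the MPI implementation and job scheduler in use.

```python
def machine_file(hosts, num_processes, cpus_per_node=2):
    """Return host-file text with one hostname per process slot.

    Each node's CPUs are filled before moving to the next node
    (cpus_per_node=2 reflects the dual-Xeon PowerEdge 2650).
    """
    capacity = len(hosts) * cpus_per_node
    if num_processes > capacity:
        raise ValueError(
            f"requested {num_processes} processes, but only {capacity} CPUs are available"
        )
    slots = [hosts[rank // cpus_per_node] for rank in range(num_processes)]
    return "\n".join(slots) + "\n"


if __name__ == "__main__":
    # Hypothetical 8-node cluster: node01 ... node08.
    compute_nodes = [f"node{i:02d}" for i in range(1, 9)]
    print(machine_file(compute_nodes, num_processes=6), end="")
```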

In addition to compute nodes and master nodes, key components of HPCC

include: systems management utilities, applications, file systems,

interconnects, and storage and software solution stacks.


Dell OpenManage Systems Management

Because HPCC systems can consist of many nodes (possibly thousands within one cluster), it is important to be able to monitor and manage these nodes from a single console. To help manage clusters of this size, Dell OpenManage™ systems management utilities are designed to provide system discovery, event filtering, systems monitoring, proactive alerts, and inventory and asset management, as well as remote manageability for the compute nodes and master nodes.
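Event filtering and proactive alerting can be sketched in a few lines. Readings gathered from many nodes are compared against limits, and only the out-of-range readings are forwarded to a single console. This is not the Dell OpenManage interface. The `Reading` type, the sensor names and the threshold values below are hypothetical and only show the idea.

```python
from dataclasses import dataclass

INF = float("inf")


@dataclass
class Reading:
    node: str      # hypothetical hostname, e.g. "node07"
    sensor: str    # hypothetical sensor name, e.g. "fan_rpm"
    value: float


# Hypothetical (low, high) limits. Real limits come from each system's
# embedded management hardware, not from this table.
THRESHOLDS = {
    "fan_rpm": (2000.0, INF),
    "temp_c": (-INF, 70.0),
    "vcore_v": (1.40, 1.60),
}


def filter_events(readings, thresholds=THRESHOLDS):
    """Keep only out-of-range readings and format them as console alerts."""
    alerts = []
    for r in readings:
        low, high = thresholds.get(r.sensor, (-INF, INF))  # unknown sensors never alert
        if not low <= r.value <= high:
            alerts.append(f"{r.node}: {r.sensor}={r.value} outside [{low}, {high}]")
    return alerts


if __name__ == "__main__":
    sample = [
        Reading("node01", "fan_rpm", 5400),
        Reading("node02", "fan_rpm", 0),       # failed fan
        Reading("node03", "temp_c", 82.5),     # over temperature
        Reading("node04", "vcore_v", 1.50),
    ]
    for line in filter_events(sample):
        print(line)
```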

Enterprise Remote Access (ERA) is a separate management fabric with features that include remote power operations; virtualization of floppy, CD-ROM and other peripherals; console redirection; and BIOS flash updates.

Applications

Applications may be written to run in parallel on multiple systems and

use the message-passing programming model. Jobs of a parallel

application are spawned on compute nodes, which work

collaboratively until the jobs are complete. During the execution,

compute nodes use standard message-passing middleware to

coordinate activities and information passing.
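The message-passing model can be sketched with Python’s standard multiprocessing pipes standing in for the cluster’s message-passing middleware. This substitution is an assumption for illustration; production codes would typically use an MPI library. A master process sends each task as an explicit message, the hypothetical `compute_node` workers reply with results, and a sentinel message tells each worker to stop.

```python
from multiprocessing import Pipe, Process


def compute_node(rank, conn):
    """Hypothetical compute node: receive task messages, send back results."""
    while True:
        msg = conn.recv()              # blocking receive
        if msg is None:                # sentinel message: no more work
            break
        task_id, values = msg
        conn.send((rank, task_id, sum(values)))  # reply to the master
    conn.close()


def master(tasks, num_nodes=2):
    """Hand tasks to nodes in rounds and collect the results by task id."""
    links, procs = [], []
    for rank in range(num_nodes):
        parent_end, child_end = Pipe()
        proc = Process(target=compute_node, args=(rank, child_end))
        proc.start()
        links.append(parent_end)
        procs.append(proc)

    results = [None] * len(tasks)
    numbered = list(enumerate(tasks))
    # Each node has at most one outstanding task per round, so neither side
    # can block forever on a full pipe.
    for start in range(0, len(numbered), num_nodes):
        batch = numbered[start:start + num_nodes]
        for (task_id, values), conn in zip(batch, links):
            conn.send((task_id, values))
        for _, conn in zip(batch, links):
            _rank, task_id, total = conn.recv()
            results[task_id] = total

    for conn in links:
        conn.send(None)                # tell every node to stop
    for proc in procs:
        proc.join()
    return results


if __name__ == "__main__":
    print(master([[1, 2, 3], [4, 5], [6], [7, 8, 9]], num_nodes=2))
```

Handing out at most one task per node per round keeps the example free of pipe-buffer deadlocks. The cost is idle time when tasks take different amounts of time to finish.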

Parallel Virtual File System

A Parallel Virtual File System (PVFS) is used as a high-performance, large parallel file system for temporary storage and as an infrastructure for parallel I/O research. PVFS stores data on the existing local file systems of multiple cluster nodes, enabling many clients to access the data simultaneously. Within an HPC cluster, PVFS enables high-performance I/O that is comparable to that of proprietary parallel file systems.
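Spreading a file across nodes is what enables simultaneous access. The sketch below shows how, by mapping byte offsets in a logical file onto I/O nodes. It assumes simple round-robin striping with a fixed stripe size. The stripe size, node count and starting node are configuration details of a real PVFS installation; the values and function names here are hypothetical.

```python
def locate(offset, stripe_size, num_io_nodes):
    """Map a byte offset in the logical file to (I/O node, offset in that node's local file)."""
    stripe_index = offset // stripe_size            # which stripe holds this byte
    node = stripe_index % num_io_nodes              # stripes are dealt out round-robin
    local_stripe = stripe_index // num_io_nodes     # stripes already stored on that node
    return node, local_stripe * stripe_size + offset % stripe_size


def split_request(offset, length, stripe_size, num_io_nodes):
    """Break a read/write of [offset, offset + length) into per-node pieces."""
    pieces = []
    end = offset + length
    while offset < end:
        # Bytes left in the current stripe, capped by the end of the request.
        nbytes = min(stripe_size - offset % stripe_size, end - offset)
        node, local_offset = locate(offset, stripe_size, num_io_nodes)
        pieces.append((node, local_offset, nbytes))
        offset += nbytes
    return pieces


if __name__ == "__main__":
    # Hypothetical layout: 64 KiB stripes over 4 I/O nodes.
    for piece in split_request(0, 256 * 1024, 64 * 1024, 4):
        print(piece)
```

A large request touches every I/O node, so its pieces can be serviced in parallel. A small request confined to one stripe is handled by a single node.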

Interconnect

To communicate with each other, the cluster nodes are connected

through a network. The interconnect technology chosen depends on

the amount of interaction between nodes when an application is

executed. Some applications are similar to batch environments, and the

communication between compute nodes is limited. For these

environments, Fast Ethernet may be adequate. However, in

environments that require more frequent communication, a Gigabit

Ethernet interconnect is preferable.

Some application environments can also benefit from a special interconnect designed to provide high speed and low latency between the compute nodes. For these applications, Dell bundles are available with Myricom’s Myrinet.
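The trade-off between interconnects can be estimated with a simple model. Each message costs a fixed latency plus its size divided by the link bandwidth. In the sketch below, the 100 Mb/s and 1000 Mb/s figures are the nominal Fast Ethernet and Gigabit Ethernet line rates. Every latency value and the low-latency entry are illustrative placeholders, not measured or vendor figures, so only the relative behavior is meaningful.

```python
# Nominal line rates in bits per second for Fast and Gigabit Ethernet.
# The latency values and the low-latency entry are illustrative placeholders,
# not measured or vendor-published figures.
LINKS = {
    "Fast Ethernet": {"latency_s": 100e-6, "bandwidth_bps": 100e6},
    "Gigabit Ethernet": {"latency_s": 100e-6, "bandwidth_bps": 1000e6},
    "Low-latency (placeholder)": {"latency_s": 10e-6, "bandwidth_bps": 2000e6},
}


def transfer_time(message_bytes, latency_s, bandwidth_bps):
    """Latency-plus-bandwidth model for one point-to-point message, in seconds."""
    return latency_s + message_bytes * 8 / bandwidth_bps


def workload_time(num_messages, message_bytes, link):
    """Total communication time if messages are sent one after another."""
    return num_messages * transfer_time(message_bytes, link["latency_s"], link["bandwidth_bps"])


if __name__ == "__main__":
    workloads = {
        "batch-like: 10 x 10 MB": (10, 10_000_000),
        "chatty: 100,000 x 64 B": (100_000, 64),
    }
    for wname, (count, size) in workloads.items():
        for lname, link in LINKS.items():
            print(f"{wname:26s} {lname:26s} {workload_time(count, size, link):8.3f} s")
```

Under these assumptions, bandwidth dominates the bulk transfers, so Gigabit Ethernet helps most there. Per-message latency dominates the stream of small messages, which is the case a low-latency interconnect such as Myrinet is meant to address.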


High Performance Computing Cluster Solution Stack

Dell partners with service providers to deliver the software components necessary for implementing an HPCC solution. The HPCC stack includes the job scheduler, cluster management tools, message-passing libraries and compilers.


High Performance Computing Market

Target markets for high performance computing clusters include higher education, large corporations, the federal government, and technology sectors that require high performance computing. Industry examples include oil and gas, aerospace, automotive, chemistry, national security, financial services and pharmaceuticals.

Typical compute-intensive applications include war and airline simulations, financial modeling, molecular modeling, fluid dynamics, circuit board design, ocean flow analysis, seismic data filtering and visualization.

Applications that use HPC clusters and their specific vertical markets can

be found in Table 1.

Table 1

Vertical Markets Appropriate for HPCC

| Vertical | Description of Requirements | Typical Applications |
|---|---|---|
| Manufacturing | Crashworthiness, stress analysis, shock and vibration, aerodynamics | Fluent, Radioss, Nastran, Ansys, PowerFLOW |
| Energy | Seismic processing, geophysical modeling, reservoir modeling | VIP, Eclipse, Veritas |
| Life Sciences | Drug design, bioinformatics, DNA mapping, disease research | BLAST, CHARMM, NAMD, PC GAMESS, Gaussian |
| Digital Media | Render farms | RenderMan, Discreet |
| Finance | Portfolio management (Monte Carlo simulation), risk analysis | Barra, RMG, SunGard |

Although market opportunities exist in environments made up of

thousands of nodes, standard HPCC configurations target the majority of

clusters within the 8-node to 128-node configuration range. Customers

investigating larger cluster configurations should contact the Dell

Professional Services organization for assistance.

Dell’s bundled HPCC solutions target customers with varying levels of expertise, from complete turnkey solutions (including hardware and software) to easy-to-order hardware-only bundles. For customers who require a complete solution, Dell also offers consulting assistance.


Features and Benefits

The Dell High Performance Computing Cluster leverages many advantages of Dell’s product line, including server, storage, peripheral and services components. Dell’s standard product offerings are designed to help minimize configuration complexity. These standard packages consist of 8-, 16-, 32-, 64- and 128-node configurations.

The key technology features of a Dell High Performance Computing

cluster configuration are shown in Table 2.

Table 2

The Key Technology Features of a Dell HPCC Configuration

| Feature | Function | Benefit |
|---|---|---|
| Full-featured hardware configurations | Pre-bundled order codes for 8-node, 16-node, 32-node, 64-node and 128-node configurations; 16–256 CPU configurations | Simplified ordering process and pre-qualified configurations |
| PowerEdge™ 2650 (Compute Node) | Dual Intel Xeon processors at 2.0GHz, 2.4GHz, 2.8GHz, 3.06GHz and 3.2GHz with 533MHz FSB for highest performance; 2U form factor; 3 slots on separate I/O buses; 256MB of DDR SDRAM, expandable to 12GB; configurable-size SCSI drives (expandable to 5 drives) for internal storage | High performance compute node for the most challenging applications; high density enables large compute clusters in a rack; helps to minimize I/O bottlenecks; flexibility for increasing storage capacity on the compute node |
| PowerEdge 2650 (Master Node) | Same processor, form factor, I/O slot, memory and SCSI drive options as the compute node | High performance and highly available server in a dense form factor |
| Interconnect options: Fast Ethernet (low cost); Gigabit Ethernet (high performance); Myrinet (high speed, low latency) | The interconnect technologies in an HPCC configuration allow servers to communicate with each other for node-to-node communications | The interconnect technology is designed for message passing between the nodes. Offering Fast Ethernet and Gigabit Ethernet enables customers to choose between a low-cost or a higher-performance solution. Myrinet provides a high-speed, low-latency interconnect for application environments that require frequent node-to-node communication |
| Storage devices | PowerVault™ 220S SCSI external storage device on the master node for primary storage | Provides a cost-effective way to add a large amount of external storage that can be allocated across multiple channels for maximized I/O performance |
| Headless operation | The ability to operate a system without keyboard, video or mouse (KVM) | Simplifies cable management and helps lower the cost of the solution by eliminating monitors, keyboards and mice |
| Redirection of serial port | ERA: enhanced features for out-of-band and remote management to allow centralized control of network devices through serial console ports | Helps increase manageability of the cluster from a single console device |
| Operating system software pre-install | Factory installation of the Red Hat Linux operating system | Facilitates setup of the cluster configuration |
| Wake on LAN | Provides the capability to remotely power on compute nodes over the Ethernet network | Remote management tool that can reduce system management workload, give the system administrator flexibility, and help save time-consuming effort and costs |
| HPCC software solution stack (optional deliverable through DPS) | Cluster manager; compilers; job scheduler; MKL, BLAS, ATLAS; MPI interface | Dell-tested tools for creating the system environment for a parallel computing infrastructure |
| Server management | Embedded systems management detects errors such as fan failures and temperature and voltage problems, which generate alerts and reports to the Dell OpenManage Console | Detects and remedies problems within the cluster |
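Wake on LAN works by sending a “magic packet” to a node’s network adapter. The packet consists of six bytes of 0xFF followed by the adapter’s 6-byte MAC address repeated 16 times, and it is commonly delivered as a UDP broadcast. The Python sketch below builds and sends such a packet. The MAC address is hypothetical, and port 9 is a common convention rather than a requirement. The target adapter and BIOS must have Wake on LAN enabled.

```python
import socket


def magic_packet(mac: str) -> bytes:
    """Wake-on-LAN magic packet: six 0xFF bytes, then the 6-byte MAC repeated 16 times."""
    digits = mac.replace(":", "").replace("-", "")
    if len(digits) != 12:
        raise ValueError(f"expected a 48-bit MAC address, got {mac!r}")
    return b"\xff" * 6 + bytes.fromhex(digits) * 16


def wake(mac: str, broadcast: str = "255.255.255.255", port: int = 9) -> None:
    """Broadcast the magic packet over UDP (port 9 is a common, not mandatory, choice)."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.setsockopt(socket.SOL_SOCKET, socket.SO_BROADCAST, 1)
        sock.sendto(magic_packet(mac), (broadcast, port))


if __name__ == "__main__":
    packet = magic_packet("00:11:22:33:44:55")  # hypothetical compute-node MAC
    print(len(packet), packet[:12].hex())
    # Calling wake("00:11:22:33:44:55") from the master node would broadcast it
    # on the local Ethernet segment.
```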

Key Customer Benefits

The performance of commodity computers and network hardware

continually improves as new technology is introduced and implemented.

At the same time, market conditions have led to decreases in the price of

these components. As a result, it is now practical to build parallel

computational systems based on low-cost, high-density servers, such as

the Dell PowerEdge 2650, rather than buy CPU time on expensive

supercomputers. Dell PowerEdge servers are tuned to take advantage of the server, operating system and application combination in use, and their performance and price/performance typically rank among the industry leaders on standard benchmarks (TPC-C, TPC-W and SPECweb99).

Low cost and high performance are only two of the advantages of a Dell High Performance Computing Cluster solution. Other key benefits of HPCC compared with large Symmetric Multiprocessors (SMPs) are shown in Table 3.

Table 3

Comparison of SMP and HPCC Environments

| Feature | Large SMPs | HPCC |
|---|---|---|
| Scalability | Fixed | Unbounded |
| Availability | High | High |
| Ease of Technology Refresh | Low | High |
| Application Porting | None | Required |
| Operating System Porting | Difficult | None |
| Service and Support | Expensive | Affordable |
| Standards vs. Proprietary | Proprietary | Standards |
| Vendor Lock-in | Required | None |
| System Manageability | Custom; better usability | Standard; moderate usability |
| Application Availability | High | Moderate |
| Reusability of Components | Low | High |
| Disaster Recovery Ability | Weak | Strong |
| Installation | Non-standard | Standard |

The features compared in Table 3 are defined as follows:

Scalability: The ability to grow in overall capacity and to meet higher

usage demand as needed. When additional computational resources

are needed, servers can be added to the cluster. Clusters can consist of

thousands of servers.


Availability: Access to compute resources. To help ensure high availability, it is necessary to remove any single point of failure in the hardware and software. This helps to ensure that any individual system component, the system as a whole, or the solution (i.e., multiple systems) stays continuously available. An HPCC solution offers high availability because the components can be isolated and, in many cases, the loss of a compute node in the cluster does not have a large impact on the overall cluster solution. The workload of that node can be reallocated among the remaining compute nodes.
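Reallocating a lost node's work can be sketched as follows. The sketch assumes tasks are independent and can simply be rerun on another node; a tightly coupled parallel job may instead need to be restarted. The node names and the round-robin policy are hypothetical choices for illustration.

```python
def reassign(assignments, failed_node):
    """Return a new task map with the failed node's tasks spread over the survivors."""
    survivors = sorted(node for node in assignments if node != failed_node)
    if not survivors:
        raise RuntimeError("no surviving compute nodes")
    new_assignments = {node: list(assignments[node]) for node in survivors}
    # Deal the orphaned tasks out round-robin so no single survivor takes them all.
    for i, task in enumerate(assignments.get(failed_node, [])):
        new_assignments[survivors[i % len(survivors)]].append(task)
    return new_assignments


if __name__ == "__main__":
    tasks = {"node01": [0, 3], "node02": [1, 4], "node03": [2, 5]}
    print(reassign(tasks, failed_node="node02"))
```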

Ease of Technology Refresh: Integrating a new processor, memory, disk or operating system technology can be accomplished with relative ease. In HPCC, as technology moves forward, modular pieces of the solution stack can be replaced as time, budget and needs require or permit. There is no need for a one-time 'switch-over' to the latest technology. In addition, new technology is often integrated more quickly into standards-based volume servers than into proprietary systems.

Service and Support: Total cost of ownership, including post-sales costs of maintaining the hardware and software (from standard upgrades to unit replacement to staff training and education), is generally much lower than for proprietary implementations, which typically require a high level of technical services because of their inherent complexity and sophistication.

Vendor Lock-in: Proprietary solutions require a commitment to a particular vendor, whereas the components of industry-standard implementations are interchangeable. Many proprietary solutions accept only components developed by that vendor, and depending on the revision and technology, application performance may be diminished. HPCC enables solutions to be built from the best-performing industry-standard components.

System Manageability: System management is the installation, configuration and monitoring of key elements of computer systems, such as hardware, operating system and applications. Most large SMPs have proprietary enabling technologies (custom hardware extensions and software components) that can complicate system management. On the other hand, it is easier to manage one large system than hundreds of nodes. However, with wide deployment of network infrastructure and enterprise management software, it is possible to manage the many servers of an HPCC system from a single point.


Reusability of Components: Commodity components can be reused when taken offline, preserving a customer’s investment. When a Dell HPCC PowerEdge solution is later refreshed with next-generation platforms, the older Dell PowerEdge compute nodes can be redeployed as file/print servers, Web servers or other infrastructure servers.

Installation: Specialized equipment generally requires expert installation teams trained to handle it, as well as dedicated facilities such as power and cooling. Because HPCC components are “off-the-shelf” commodities, installation is generic and widely supported.

Hardware Options

The High Performance Computing Cluster configurations can be enhanced

in the following ways:

Increased memory in the compute nodes

Increased internal HDD storage capacity in the compute nodes

Increased external storage on the master node

Additional NICs for the compute nodes and master node

Faster interconnect technologies, based on recommendations from Dell Professional Services

Related Web Sites

http://www.dell.com/clustering

http://www.oscar.org/

http://www.csm.ornl.gov/oscar/

http://www.beowulf.org/

http://www.dell.com/us/en/esg/topics/segtopic_servers_pedge_rackmain.htm

Service and Support

Dell HPCC systems come with the following:

Three-year limited warranty¹ (three years of standard Next Business Day (NBD) parts replacement and one year of NBD on-site² labor)

30-day “Getting Started” help line³

DirectLine network operating system support upgrades available with

limited three-year warranty

Telephone support 24 hours a day, 7 days a week, 365 days a year for

the duration of the limited three-year warranty.


Dell Professional Services offers additional services to assist in:

Solution Design

Consultation

Installation and Setup

Pre-staging of solution at off-site location

1 For a copy of our Guarantees or Limited Warranties, please write Dell USA, L.P., Attn: Warranties, One Dell Way, Round Rock, TX 78682. For more information, visit www.dell.com/us/en/gen/services/service_service_plans.htm.

2 Service may be provided by a third party. A technician will be dispatched if necessary following phone-based troubleshooting. Subject to parts availability, geographical restrictions and terms of service contract. Service timing depends on the time of day the call is placed to Dell. U.S. only.

3 The 30-day telephone support program is offered at no additional charge to help customers with installation, optimization and configuration questions during the critical 30-day period after shipment of PowerEdge systems. This program is available to customers who purchase Novell NetWare® or Microsoft Windows NT Server or Windows 2000 Server with their PowerEdge server from Dell. Support provided after the 30-day Getting Started Program will be for the Dell hardware only. Beyond 30 days from the invoice date, Dell’s DirectLine telephone support service is available for purchase for NOS support.

Dell, OpenManage, PowerVault and PowerEdge are trademarks of Dell Computer

Corporation. Microsoft and Windows NT are registered trademarks of Microsoft Corporation.

Intel is a registered trademark of Intel Corporation. Other trademarks and trade names may

be used in this document to refer to either the entities claiming the marks and names or their

products. Dell disclaims proprietary interest in the marks and names of others.

©Copyright 2002 Dell Computer Corporation. All rights reserved. Reproduction in any

manner whatsoever without the express written permission of Dell Computer Corporation is

strictly forbidden. For more information contact Dell. Dell cannot be responsible for errors in

typography or photography.
