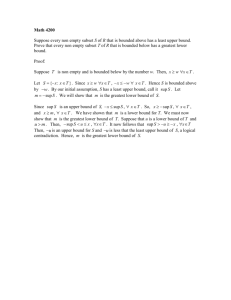

Problem 1

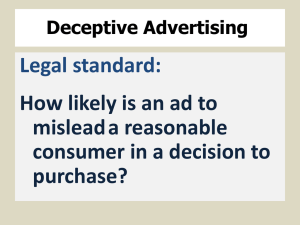

advertisement

Problem 1

Let S=(X, {a,b,c,d}) be a decision system, where {a,c} are stable attributes and

{b,d} flexible. Attribute d is the decision attribute. Find all action rules which can

be extracted from S following the Action Forest & Action Tree algorithms.

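| X | a | b | c | d |
|---|---|---|---|---|
| x1 | a1 | b2 | c1 | d1 |
| x2 | a2 | b2 | c1 | d2 |
| x3 | a1 | b1 | c2 | d2 |
| x4 | a2 | b2 | c2 | d2 |
| x5 | a1 | b1 | c3 | d1 |
| x6 | a3 | b2 | c2 | d2 |

System S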

Solution.

In the first step of the algorithm we extract classification rules from System S.

They are listed below (in a table representation):

| a | b | c | d |
|---|---|---|---|
| a1 | b2 | | -> d1 |
| a1 | | c1 | -> d1 |
| a2 | | | -> d2 |
| | | c2 | -> d2 |
| | | c3 | -> d1 |
| a3 | | | -> d2 |
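As a quick sanity check, the classification rules can be tested against System S. The sketch below is not part of the Action Forest or Action Tree algorithms. It only confirms that every object matching a rule's premise carries the stated decision value. The helper names `support` and `is_certain_rule` are illustrative assumptions, and the rule list assumes the reading a1*b2 -> d1, a1*c1 -> d1, a2 -> d2, c2 -> d2, c3 -> d1, a3 -> d2 of the table.

```python
# System S from Problem 1, transcribed row by row.
S = {
    "x1": {"a": "a1", "b": "b2", "c": "c1", "d": "d1"},
    "x2": {"a": "a2", "b": "b2", "c": "c1", "d": "d2"},
    "x3": {"a": "a1", "b": "b1", "c": "c2", "d": "d2"},
    "x4": {"a": "a2", "b": "b2", "c": "c2", "d": "d2"},
    "x5": {"a": "a1", "b": "b1", "c": "c3", "d": "d1"},
    "x6": {"a": "a3", "b": "b2", "c": "c2", "d": "d2"},
}


def support(premise):
    """Objects of S that match every (attribute, value) pair of the premise."""
    return {x for x, row in S.items()
            if all(row[attr] == val for attr, val in premise.items())}


def is_certain_rule(premise, decision):
    """True if some object matches and all matching objects have d == decision."""
    matched = support(premise)
    return bool(matched) and all(S[x]["d"] == decision for x in matched)


# Assumed reading of the rule table (premise, decision value).
rules = [
    ({"a": "a1", "b": "b2"}, "d1"),
    ({"a": "a1", "c": "c1"}, "d1"),
    ({"a": "a2"}, "d2"),
    ({"c": "c2"}, "d2"),
    ({"c": "c3"}, "d1"),
    ({"a": "a3"}, "d2"),
]

results = [is_certain_rule(premise, decision) for premise, decision in rules]
print(results)
```

A premise such as c1 alone fails the check, because x1 has d1 while x2 has d2, which is why c1 appears only together with a1.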

In the next step we construct two trees, one for value d1, and the second for the value d2.

Next we use these two trees to get the action rules.

Visit http://www.cs.uncc.edu/~ras/KDD-LectureI.ppt and go to page 8 for a hint.

Problem 2

Let S=(X, {a,b,c,d}) be a decision system, where {a,c} are stable attributes and

{b,d} flexible. Attribute d is the decision attribute. Find all action rules which can

be extracted from S following the ARAS & ARES algorithms.

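| X | a | b | c | d |
|---|---|---|---|---|
| x1 | a1 | b2 | c1 | d1 |
| x2 | a2 | b2 | c1 | d2 |
| x3 | a1 | b1 | c2 | d2 |
| x4 | a2 | b2 | c2 | d2 |
| x5 | a1 | b1 | c3 | d1 |
| x6 | a3 | b2 | c2 | d2 |

System S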

Solution:

Classification rules are: c2 -> d2, c3 -> d1, a2 -> d2, a3 -> d2, b1*c2 -> d2,

b2*c2 -> d2, b2*a1 -> d1, b2*a2 -> d2

Target classification rule has to contain flexible attributes which means only rules

b1*c2 -> d2, b2*c2 -> d2, b2*a1 -> d1, b2*a2 -> d2 can be considered.

Let’s take r = [b2*c2 -> d2] as the target classification rule. We are interested in reclassification (d1 -> d2). First we compute U[r,d1] = ∅ (objects having property c2*d1).

Since no object has property c2*d1, there are no action rules linked with r = [b2*c2 -> d2].

Let us take rule r = [b2*a1 -> d1] as a target rule. We are interested in reclassification

d2 -> d1. First we compute U[r,d2] = {x3} (objects having property d2*a1).

a1 – stable attribute in r = [b2*a1 -> d1]. We build terms following ARAS strategy:

1. [a1*b1]* = {x3, x5} ⊄ {x3}, so it is not marked.

2. [a1*c1]* = {x1} ⊄ {x3}, marked negative because {x1} ∩ {x3} = ∅.

3. [a1*c2]* = {x3} ⊆ {x3}, marked, but it gives no action rule because a1 and c2 are both stable.

4. [a1*c3]* = {x5} ⊄ {x3}, marked negative because {x5} ∩ {x3} = ∅.

5. [a1*b1]* = {x3, x5} is the only term left, so it cannot be extended.

6.
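The marking of terms for the target rule r = [b2*a1 -> d1] can be reproduced with plain set operations. This is a minimal sketch rather than a full ARAS implementation. It assumes a term is marked when its object set is non-empty and contained in U[r,d2], marked negative when it is disjoint from U[r,d2], and left unmarked otherwise. The function names are hypothetical.

```python
# System S from Problem 2, transcribed row by row.
S = {
    "x1": {"a": "a1", "b": "b2", "c": "c1", "d": "d1"},
    "x2": {"a": "a2", "b": "b2", "c": "c1", "d": "d2"},
    "x3": {"a": "a1", "b": "b1", "c": "c2", "d": "d2"},
    "x4": {"a": "a2", "b": "b2", "c": "c2", "d": "d2"},
    "x5": {"a": "a1", "b": "b1", "c": "c3", "d": "d1"},
    "x6": {"a": "a3", "b": "b2", "c": "c2", "d": "d2"},
}


def objects_with(**conditions):
    """Objects whose attribute values match all the given conditions."""
    return {x for x, row in S.items()
            if all(row[attr] == val for attr, val in conditions.items())}


def classify(term_objects, target):
    """Assumed marking rule: subset -> marked, disjoint -> marked negative."""
    if term_objects and term_objects <= target:
        return "marked"
    if not (term_objects & target):
        return "marked negative"
    return "not marked"


# U[r,d2]: objects with the stable value a1 of r and decision d2.
U = objects_with(a="a1", d="d2")

status = {}
for attr, val in [("b", "b1"), ("c", "c1"), ("c", "c2"), ("c", "c3")]:
    term_objects = objects_with(a="a1", **{attr: val})
    status[f"a1*{val}"] = classify(term_objects, U)

print(U)
print(status)
```

Only a1*b1 stays unmarked, which matches step 5 of the list above.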

For another example follow the link Class 4 (Action Rules) PPT on the class website

[http://www.cs.uncc.edu/~ras/KDD-Fall02.html], pages 43-44

Problem 3

Show how to cluster the data in the matrix below:

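| X | A | B |
|---|---|---|
| x1 | 1 | 2 |
| x2 | 2 | 10 |
| x3 | 6 | 2 |
| x4 | 10 | 12 |
| x5 | 10 | 10 |
| x6 | 1 | 3 |

Manhattan distances between the points:

| | x1 | x2 | x3 | x4 | x5 | x6 |
|---|---|---|---|---|---|---|
| x1 | | | | | | |
| x2 | 9 | | | | | |
| x3 | 5 | 12 | | | | |
| x4 | 19 | 10 | 14 | | | |
| x5 | 17 | 8 | 12 | 2 | | |
| x6 | 1 | 8 | 6 | 18 | 16 | |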
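The pairwise distances can be recomputed directly from the data matrix. The sketch below assumes the points are x1 = (1, 2), x2 = (2, 10), x3 = (6, 2), x4 = (10, 12), x5 = (10, 10), x6 = (1, 3), read from columns A and B. The names `points`, `manhattan` and `dist` are illustrative.

```python
from itertools import combinations

# Points read from the data matrix as (A, B) pairs.
points = {
    "x1": (1, 2),
    "x2": (2, 10),
    "x3": (6, 2),
    "x4": (10, 12),
    "x5": (10, 10),
    "x6": (1, 3),
}


def manhattan(p, q):
    """Sum of absolute coordinate differences."""
    return sum(abs(a - b) for a, b in zip(p, q))


# Distance for every unordered pair of points.
dist = {
    frozenset((u, v)): manhattan(points[u], points[v])
    for u, v in combinations(points, 2)
}

# The closest pair is merged first in agglomerative clustering.
closest = min(dist, key=dist.get)
print(sorted(closest), dist[closest])
```

The smallest entry is the distance 1 between x1 and x6, so these two points form the first cluster.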

p(R,Q) = αA·p(A,Q) + αB·p(B,Q) + β·p(A,B) + γ·|p(A,Q) − p(B,Q)| with

αA = [MQ + MA]/[MQ + MA + MB], αB = [MQ + MB]/[MQ + MA + MB],

β = [−MQ]/[MQ + MA + MB] (the computations below use γ = 0)

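| | {x1,x6} | x2 | x3 | x4 | x5 |
|---|---|---|---|---|---|
| {x1,x6} | | | | | |
| x2 | 11 | | | | |
| x3 | 7 | 12 | | | |
| x4 | 24 | 10 | 14 | | |
| x5 | 22 | 8 | 12 | 2 | |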

p({{x1},{x6}},x2) = 9 · 2/3 + 8 · 2/3 − 1 · 1/3 = 18/3 + 16/3 − 1/3 = 11

p({{x1},{x6}},x3) = 5 · 2/3 + 6 · 2/3 − 1 · 1/3 = 10/3 + 12/3 − 1/3 = 7

p({{x1},{x6}},x4) = 19 · 2/3 + 18 · 2/3 − 1 · 1/3 = 73/3 ≈ 24

p({{x1},{x6}},x5) = 17 · 2/3 + 16 · 2/3 − 1 · 1/3 = 65/3 ≈ 22
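The four updates above all apply the same formula, so they can be checked with one small function. This sketch assumes γ = 0, which is what the worked computations use, and keeps exact fractions so that the rounding to 24 and 22 is visible. The function name and parameter names are hypothetical.

```python
from fractions import Fraction


def lance_williams(p_aq, p_bq, p_ab, m_a, m_b, m_q):
    """Distance from R = A ∪ B to Q, with the gamma term assumed to be 0."""
    total = m_q + m_a + m_b
    alpha_a = Fraction(m_q + m_a, total)
    alpha_b = Fraction(m_q + m_b, total)
    beta = Fraction(-m_q, total)
    return alpha_a * p_aq + alpha_b * p_bq + beta * p_ab


# R = {x1, x6}: A = x1, B = x6, p(A,B) = 1, all clusters of size 1.
# Each entry is (p(x1,Q), p(x6,Q)) taken from the Manhattan distances.
to_single_points = {"x2": (9, 8), "x3": (5, 6), "x4": (19, 18), "x5": (17, 16)}

updated = {
    q: lance_williams(p_aq, p_bq, 1, m_a=1, m_b=1, m_q=1)
    for q, (p_aq, p_bq) in to_single_points.items()
}

for q, value in updated.items():
    print(q, value, float(value))
```

The exact values for x4 and x5 are 73/3 and 65/3; the matrix in the solution records them rounded to 24 and 22.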

| | {x1,x6} | x2 | x3 | {x4,x5} |
|---|---|---|---|---|
| {x1,x6} | | | | |
| x2 | 11 | | | |
| x3 | 7 | 12 | | |
| {x4,x5} | 34 | | | |

p({{x1},{x6}},{x4,x5}) = ¾ · p({{x1},{x6}},x5) + ¾ · p({{x1},{x6}},x4) − 2/4 · p(x4,x5) =

¾ · 22 + ¾ · 24 − 2/4 · 2 = 33.5 ≈ 34

p(x2,{x4,x5}) =

assume that 7 is the winner then,

| | {x3, {x1,x6}} | x2 | {x4,x5} |
|---|---|---|---|
| {x3, {x1,x6}} | | | |
| x2 | | | |
| {x4,x5} | | | |

Use Manhattan distance d(pi, pj) = |xi1 − xj1| + |xi2 − xj2| + … for single points pi, pj and the Lance-Williams formula for the distance between clusters:

p(R,Q) = αA·p(A,Q) + αB·p(B,Q) + β·p(A,B) + γ·|p(A,Q) − p(B,Q)| with

αA = [MQ + MA]/[MQ + MA + MB], αB = [MQ + MB]/[MQ + MA + MB],

β = [−MQ]/[MQ + MA + MB], where MA is the number of points in cluster A, MQ is the number of points in Q, MB is the number of points in cluster B, and R is formed by merging clusters A and B. The worked solution above takes γ = 0.

Problem 4

Let S=(X, {a,b,c,d}) be a decision system, where d is the decision attribute. Find

all classification rules in S describing d by following ERID algorithm. Give their

support and confidence.

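| X | a | b | c | d |
|---|---|---|---|---|
| x1 | a1 | b2 | c1 | d1 |
| x2 | a2 | | c1 | d2 |
| x3 | a1 | b1 | c2 | d2 |
| x4 | | b2 | | d2 |
| x5 | a1 | b1 | c3 | d1 |
| x6 | a3 | b2 | c2 | d2 |

System S (empty cells are missing values)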

Solution (let’s take minimum confidence = ½, minimum support = 1):

d1*= {(x1,1), (x5,1)}, d2*= {(x2,1), (x3,1), (x4,1), (x6,1)}

FIRST LOOP:

a1* = {(x1,1), (x3,1), (x4,1/3), (x5,1)}

sup(a1 -> d1) = 1*1+1*1 = 2, conf(a1 -> d1) = 2/(3+1/3) = 6/10 marked positive

sup(a1 -> d2) = 1*1+1*1/3 = 4/3, conf(a1 -> d2) = 4/10, not marked

a2*= {(x2,1), (x4,1/3)}

sup(a2 -> d1) = 0 marked negative

sup(a2 -> d2) = 1*1 + 1* 1/3= 4/3, conf(a2 -> d2) = 1 marked positive

a3*= {(x4,1/3), (x6,1)}

sup(a3 -> d1) = 0 marked negative

sup(a3 -> d2) = 1*1/3 + 1*1 = 4/3, conf(a3 -> d2) = 1 marked positive

b1* = {(x2,1/2), (x3,1), (x5,1)}

sup(b1 -> d1) = 1*1 = 1, conf(b1 -> d1) = 2/5, not marked

sup(b1 -> d2) = 1*1/2 + 1*1 = 3/2 , conf(b1 -> d2) = 6/10 marked positive

b2*={(x1,1), (x2,1/2), (x4,1), (x6,1)}

sup(b2 -> d1) = 1*1 = 1, conf(b2 -> d1) = 2/7, not marked

sup(b2 -> d2) = 1*1/2 + 1*1 + 1*1 = 5/2, conf(b2 -> d2) = 5/7 marked positive

c1* = {(x1,1), (x2,1), (x4,1/3)}

sup(c1 -> d1) = 1*1 = 1, conf(c1 -> d1) = 3/7, not marked

sup(c1 -> d2) = 1*1 + 1*1/3 = 4/3, conf(c1 -> d2) = 4/7 marked positive

c2* = {(x3,1), (x4,1/3), (x6,1)}

sup(c2 -> d1) =

sup(c2 -> d2) =

c3* = {(x4,1/3), (x5,1)}

sup(c3 -> d1) =

sup(c3 -> d2) =
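The first-loop values can be recomputed with exact fractions. The sketch below is a partial illustration, not the full ERID algorithm. It assumes a missing value is spread uniformly over the attribute's domain (weight 1/3 for a and c, 1/2 for b, as in the granules above). It also assumes a rule is marked negative when its support is below the minimum support, positive when its confidence is at least ½, and left unmarked otherwise. All function names are hypothetical.

```python
from fractions import Fraction as F

# System S from Problem 4; None stands for a missing value.
S = {
    "x1": {"a": "a1", "b": "b2", "c": "c1", "d": "d1"},
    "x2": {"a": "a2", "b": None, "c": "c1", "d": "d2"},
    "x3": {"a": "a1", "b": "b1", "c": "c2", "d": "d2"},
    "x4": {"a": None, "b": "b2", "c": None, "d": "d2"},
    "x5": {"a": "a1", "b": "b1", "c": "c3", "d": "d1"},
    "x6": {"a": "a3", "b": "b2", "c": "c2", "d": "d2"},
}
DOMAIN = {"a": ["a1", "a2", "a3"], "b": ["b1", "b2"], "c": ["c1", "c2", "c3"]}


def granule(attr, val):
    """Weighted object set val*: weight 1 if known, 1/|domain| if missing."""
    g = {}
    for x, row in S.items():
        if row[attr] == val:
            g[x] = F(1)
        elif row[attr] is None:
            g[x] = F(1, len(DOMAIN[attr]))
    return g


def sup_conf(g, decision):
    """Support and confidence of the rule (term of g) -> decision."""
    sup = sum((w for x, w in g.items() if S[x]["d"] == decision), F(0))
    return sup, sup / sum(g.values())


def mark(sup, conf, min_sup=1, min_conf=F(1, 2)):
    """Assumed marking rule, inferred from the worked first loop."""
    if sup < min_sup:
        return "negative"
    return "positive" if conf >= min_conf else "not marked"


for attr, values in DOMAIN.items():
    for val in values:
        for decision in ("d1", "d2"):
            sup, conf = sup_conf(granule(attr, val), decision)
            print(f"{val} -> {decision}: sup={sup}, conf={conf}, {mark(sup, conf)}")
```

Running the loop also prints the entries for c2 and c3, which can be compared with a hand computation.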

SECOND LOOP [concatenate not-marked implications from the first loop which have

the same decision value]

[b1 -> d1] concatenate with [c1 -> d1]

we get b1*c1 -> d1,

[b1*c1]* = {(x2,1/2)}

sup[b1*c1 -> d1] = 0 marked negative

[b2 -> d1] concatenate with [c1 -> d1]

we get b2*c1 -> d1

[b2*c1]* = {(x1,1), (x2,1/2), (x4,1/3)}

sup[b2*c1 -> d1] = 1*1 = 1, conf[b2*c1 -> d1] = 6/11, marked positive

……………………………….

……………………………….
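The concatenation step can be sketched in the same way. The code below assumes the weight of an object in a concatenated term is the product of its weights in the two granules, which reproduces [b1*c1]* and [b2*c1]* as given above. The granules are copied from the first loop, and the function names are illustrative.

```python
from fractions import Fraction as F

# Granules copied from the first loop of the solution.
b1 = {"x2": F(1, 2), "x3": F(1), "x5": F(1)}
b2 = {"x1": F(1), "x2": F(1, 2), "x4": F(1), "x6": F(1)}
c1 = {"x1": F(1), "x2": F(1), "x4": F(1, 3)}
d1 = {"x1": F(1), "x5": F(1)}


def concatenate(g1, g2):
    """Weighted object set of the conjunction: product of the weights."""
    return {x: g1[x] * g2[x] for x in g1 if x in g2}


def sup_conf(term, decision):
    """Support and confidence of term -> decision for weighted sets."""
    sup = sum((w * decision[x] for x, w in term.items() if x in decision), F(0))
    return sup, sup / sum(term.values())


b1c1 = concatenate(b1, c1)
b2c1 = concatenate(b2, c1)

print(b1c1, sup_conf(b1c1, d1))
print(b2c1, sup_conf(b2c1, d1))
```

With minimum confidence ½, the value 6/11 is just enough for b2*c1 -> d1 to be marked positive.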

Output: Rules marked positive with their confidence and support

For another example follow the link Class 8 (Chase Methods) PPT on the class website

[http://www.cs.uncc.edu/~ras/KDD-Fall02.html], pages 21-35

Problem 5. Consider the following table describing objects {x1,x2,…,x7} by attributes

{k1,k2,k3,k4,k5}.

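| | x1 | x2 | x3 | x4 | x5 | x6 | x7 |
|---|---|---|---|---|---|---|---|
| k1 | 11 | 2 | 36 | 1 | 15 | 13 | 0 |
| k2 | 1 | 21 | 3 | 11 | 45 | 3 | 27 |
| k3 | 13 | 4 | 39 | 3 | 15 | 26 | 3 |
| k4 | 10 | 54 | 11 | 2 | 16 | 31 | 1 |
| k5 | 11 | 1 | 0 | 14 | 2 | 0 | 29 |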

Construct a binary TV-tree with threshold 4 for an attribute to be active. When building

the tree, you can split the table in a way that one of the subtables contains only one

object. Show how to use the tree to find the closest document to the one represented by

[0, 15, 10, 15, 10].

Solution:

In the original table T none of the attributes is active.

We choose attribute k3 at random. Its domain is {3, 4, 13, 15, 26, 39}, with possible splitting points p1 (between 4 and 13), p2 (between 15 and 26), and p3 (between 26 and 39).

We try p1 as the splitting point:

obtained partition T1={x2,x4,x7}, T2={x1,x3,x5,x6}

Table T1

| | x2 | x4 | x7 |
|---|---|---|---|
| k1 | 2 | 1 | 0 |
| k2 | 21 | 11 | 27 |
| k3 | 4 | 3 | 3 |
| k4 | 54 | 2 | 1 |
| k5 | 1 | 14 | 29 |

k1, k3 –active. Information stored in the node corresponding to T1: (k1, 1), (k3, 3.5)

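Table T2

| | x1 | x3 | x5 | x6 |
|---|---|---|---|---|
| k1 | 11 | 36 | 15 | 13 |
| k2 | 1 | 3 | 45 | 3 |
| k3 | 13 | 39 | 15 | 26 |
| k4 | 10 | 11 | 16 | 31 |
| k5 | 11 | 0 | 2 | 0 |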

No active attributes. No information stored in the node corresponding to T2.

We try p2 as the cut point: obtained partition T1 = {x3,x6}, T2={x1,x2,x4,x5,x7}

Table T1

| | x3 | x6 |
|---|---|---|
| k1 | 36 | 13 |
| k2 | 3 | 3 |
| k3 | 39 | 26 |
| k4 | 11 | 31 |
| k5 | 0 | 0 |

k2, k5 – active. Information stored in the node corresponding to T1: (k2, 3), (k5, 0)

Table T2

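| | x1 | x2 | x4 | x5 | x7 |
|---|---|---|---|---|---|
| k1 | 11 | 2 | 1 | 15 | 0 |
| k2 | 1 | 21 | 11 | 45 | 27 |
| k3 | 13 | 4 | 3 | 15 | 3 |
| k4 | 10 | 54 | 2 | 16 | 1 |
| k5 | 11 | 1 | 14 | 2 | 29 |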

None of the attributes is active which means no information is stored in the node

corresponding to T2.

Both cut points give the same number of active dimensions in the resulting subtables (T1, T2), so either p1 or p2 can be chosen as the root of the TV-tree.

This algorithm is recursively repeated for both subtables.
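The active attributes of each subtable, and the information stored in its node, can be checked mechanically. The sketch below assumes an attribute is active when the spread of its values (maximum minus minimum) is strictly below the threshold 4, and that the node stores the midpoint of that range. This reading matches (k1, 1), (k3, 3.5) and (k2, 3), (k5, 0) above, but it is an assumption about the definition, and the function name is hypothetical.

```python
ATTRS = ["k1", "k2", "k3", "k4", "k5"]

# Object vectors read column by column from the table of Problem 5.
T = {
    "x1": [11, 1, 13, 10, 11],
    "x2": [2, 21, 4, 54, 1],
    "x3": [36, 3, 39, 11, 0],
    "x4": [1, 11, 3, 2, 14],
    "x5": [15, 45, 15, 16, 2],
    "x6": [13, 3, 26, 31, 0],
    "x7": [0, 27, 3, 1, 29],
}


def active_info(objects, threshold=4):
    """Map each active attribute to the midpoint of its value range.

    Assumption: an attribute is active when max - min < threshold.
    """
    info = {}
    for i, name in enumerate(ATTRS):
        values = [T[x][i] for x in objects]
        low, high = min(values), max(values)
        if high - low < threshold:
            info[name] = (low + high) / 2
    return info


# Candidate partitions on k3 from the solution.
splits = {
    "p1": (["x2", "x4", "x7"], ["x1", "x3", "x5", "x6"]),
    "p2": (["x3", "x6"], ["x1", "x2", "x4", "x5", "x7"]),
}

for name, (t1, t2) in splits.items():
    print(name, active_info(t1), active_info(t2))
```

Both splits yield two active attributes in T1 and none in T2, which is the tie described above.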