Block Mapping - A Compiler Driven I/O Management study

Block Mapping - A

Compiler Driven I/O

Management Study

Wendy Zhang

Department of Computer Information Systems

Southern University at New Orleans

New Orleans, LA, U.S.A.

Ernst L. Leiss

Computer Science Department

University of Houston

Houston, TX, U.S.A

Most scientific programs have large input and output data sets that require out-of-core programming or use virtual memory management (VMM). VMM is not an affective approach because it results easily in substantial performance reduction. In contrast, compiler driven I/O management will allow a program’s data sets to be retrieved in parts, called blocks. Out-of-core programming defines the partitions of the data sets and the control of I/O. This study shows that block mapping I/O management may improve the performance of out-of-core problems by one order of magnitude or more. The study also shows that the block size chosen to partition the data sets plays an important role in compiler driven I/O management.

KEY WORDS :

I/O management, block mapping, VMM, out-of-core, compiler

1.

Introduction

Most significant scientific programs’ data sets exceed the available memory; thus if one wants to avoid virtual memory management

(which often suffers a severe performance penalty), these programs must be executed outof-core, an approach that requires the explicit movement of data between main memory and secondary storage.

In the last twenty years, the processor speed has increased faster than memory speed and disk access speed. Thus, it is important to use the memory hierarchy efficiently and construct I/O minimal programs.

Virtual Memory Management (VMM) system combines special purpose memory management hardware and operating system software to provide the illusion of an unlimited amount of main memory [1]. VMM system uses a static policy for storage management. As pointed in [3], this uniform page automatic transfer method is convenient to use, but the programmers are no longer fully in control of the program. VMM systems perform poorly on many scientific applications [6].

COMANCHE (an acronym for COmpiler

MANaged caCHE) is a compiler run time I/O management system [6]. It is a compiler combined with a user level runtime system and replaces for applications with out-of-core data sets. More details about COMANCHE will be given in Section 2.

Blocking or tiling [4][8] is a well-known technique that improves the data locality of numerical algorithms. Blocking can be used to achieve locality for different levels of memory hierarchy. [7][2] describe a locality optimization algorithm that applies a combination of loop interchanges, skewing, reversal, and tiling to improve the data locality of loop nests. [3] describes an I/O minimal algorithm where for a given amount of space the number of retrievals of blocks from external memory is minimal. [3] also points that the notion of I/O minimal depends on the block size available in the memory.

Out-of-core matrix multiplication is used in this paper to study the importance of blocking.

An I/O minimal algorithm for matrix multiplication is sketched which forms the basis of this work. In Section 3, we discuss the minimal amount of space required to carry out the computation as well as the I/O scheme used with COMANCHE system.

In testing the proposed I/O minimal matrix multiplication algorithm, it has become clear that the size of the blocks for the sub-matrices plays a very important role. In Section 4, we will compare the performance for different block sizes. In Section 5, we draw conclusions from the results of our study.

2. COMANCHE

Comanche [6] is a runtime system. It is written in C and uses the standard I/O library calls

– fopen, fclose, fread, fwrite, and fseek. The current Comanche system is running under the

RedHat 5.0 Linux operating system on a PC with a PentiumPro (160Mhz or faster) processor and

64MB or more RAM.

The standard entity in the Comanche runtime is a two-dimensional array. Higher dimensions can be supported, or else the accesses can be translated to two-dimensional accesses. Data are assumed to be in row major layout on disk. The ideal array is a square matrix.

Comanche is based on a compiler-managed cache where the main memory accommodates the cache. The compiler has unique information about the data needs of the program, the size and shape of arrays, and the access patterns of the program.

Note that it is this information that typically is not available to the operating system, which is customarily charged with the management of I/O calls. The out-of-core program is restructured to move data automatically between main memory and disk.

There are two structures declared in the

Comanche system. One is a block structure used in buffers to keep a block of data.

typedef structure block_s

{ double *data; int flag; int refcnt; int rowindex; int columnindex; int nrow; int ncol;

int blockno;

} Block;

The other structure is a matrix structure. This structure holds the information of the matrix on disk and has buffers to hold several blocks in memory. typedef struct array_s

{ int nblocks; int nelems int bsize; int elesize;

FILE *fp; long offset; char *name; int *map; int victim; int flag; int nbuffers;

Block **buffers; int total_mem;

} Matrix ;

Besides these structures, there are several functions in Comanche. Two major functions are attach_block and release_block which are used to perform the block mapping.

When the data set is too large to fit into memory, VMM will map the array into a onedimensional vector and then that vector is cut up into blocks. Comanche will take a row of an array and cut it up into blocks, so that a block only corresponds to a single row or a sub-matrix, and cuts it up into blocks. It is under the control of the resulting out-of-core program. The attach_block and release_block functions are used to improve the data locality to achieve better performance than VMM.

The attach_block and release_block functions tell the runtime system that the block is to be mapped into memory; then an address to the cached data is to be returned. The runtime system will not reclaim memory that has been attached as long as it remains attached. It does not matter how much time has passed since the block was last referenced. The out-of-core program manages the duration of mapped data and ensures that the number of attach operations will not over-fill the available memory before the data’s subsequent release.

Read-only, write-only, and read-write flags are added to the functions. If the flag is writeonly, attach_block allocates the buffer space for that particular block. There is no need to read data from disk. If the flag is write the runtime system must write the block back to disk when it is released.

3. Block matrix multiplication

The object of our study of the Comanche run time system is matrix multiplication. Assume that there are three matrices A, B, and C of size (N, N) on disk. We will multiply A by B and store the result in matrix C. To store matrices A, B, and C

in memory requires 3N 2 space. If N is large, the total amount of main memory available is less than the needed space of 3N 2 . Since the data cannot be entirely loaded into memory, the problem becomes out-of-core.

The traditional way of coding this in C is something like this: for (i=0; i<N; i++)

for (j=0; j<N; j++)

{

C[i][j]=0;

for (k=0; k<N; k++)

C[I][j]+=A[I][k]+B[k][j];

}

Note that although the same row of A is reused in the next iteration of the middle loop, a large volume of data used in the intervening iterations may be replaced. During processing, a virtual memory system would do much swapping, which is very time consuming.

One solution to solve this out-of-core problem is to split each matrix into several sub-matrices

(blocks) of size (M, M). Specially, if the dimensions of the matrix are divisible by the dimensions of the block we can use an algorithm suggested by the following observations.

Assume M is one half of N; then each matrix can be split into four sub-matrices of size (N/2,

N/2). Schematically we have:

A

11

A

12 X

B

11

B

12

= C

11

C

12

A

21

A

22

B

21

B

22

C

21

C

22

The C ij

’s are defined as follows:

C

11

= A

11

* B

11

+ A

12

* B

21

C

12

= A

11

* B

12

+ A

12

* B

22

C

21

= A

21

* B

11

+ A

22

* B

21

C

22

= A

21

* B

12

+ A

22

* B

22

Assuming all C i,j

are initialized to zero, the following instructions can be executed in any order:

C

11

= C

11

+ A

11

* B

11

C

11

= C

11

+ A

12

* B

21

C

12

= C

12

+ A

11

* B

12

C

12

= C

12

+ A

12

* B

22

C

21

= C

21

+ A

21

* B

11

C

21

= C

21

+ A

22

* B

21

C

22

= C

22

+ A

21

* B

12

C

22

= C

22

+ A

22

* B

22

A brute force approach might retrieve each of the twenty-four sub-matrices (blocks) regardless of reusability.

In out-of-core programming, we can sequence the instructions in order to reuse sub-matrices as often as possible. We use the

Comanche runtime function block_attach when we need a block and block_release when we no longer need that block.

Assume that the amount of available main memory is equal to four sub-matrix, 4 * N 2 /4. This means that we are able to keep a whole row of blocks of the A matrix, one block of the B matrix, and one block of the matrix C in memory during processing. The following sketches an efficient approach (see [3]):

C

11

A

11

B

1

// Attach C

11,

A

11,

B

11

C

11

B

21

A

12

// Release B

11, attach B

21,

A

12

C

12

A

11

B

12

// Release B

21,

C

11, attach C

12,

B

12

C

12

B

22

A

12

// Release B

12,

attach B

22

C

21

A

21

B

11

// Release all, attach C

21,

A

21,

B

11

C

21

B

21

A

22

// Release B

11, attach B

21,

A

22

C

22

A

21

B

12

// Release B

21,

C

21, attach

C

22,

B

12

C

22

B

22

A

22

// Release B

12, attach B

22,

A

22

// Release all

In this scheme, we only retrieve eight blocks from disk and store four blocks to disk.

We use a similar, but more complicated scheme if N is not divisible by M.

4. Experiments

We have implemented the algorithm suggested in Section 3 in an out-of-core program written in the C language. The C compiler under

Linux generates working code using the

Comanche runtime system. On a single processor

PC with a PentiumII, 333MHz microprocessor, the code was run under Redhat Linux 5.0 in command mode. The system used for the actual performance has 64MB of memory of that about

50MB are available to the program.

We have run one set of experiments for 1024 x

1024 double precision matrix multiplication. The total data set space is 1024 x 1024 x 8 x 3=24MB.

Another set of experiments was run for 2048 x

2048 double precision matrix multiplication. The

total data set space is 2048 x 2048 x 8 x 3 =

96MB. Data files are initialized with random values in the interval (-1, +1).

The Linux implementation requires that we execute tests on an unloaded machine and use elapsed time. For each experiment, we have recorded the following:

1.

Block size: given as number of rows and columns.

2.

User time: time used by executing the instructions of the program in seconds

3.

System time: time used by system function calls in seconds

4.

Elapsed: time used by executing the whole program

5.

Page fault: number of page faults in the virtual memory system

6. # of Seek: the number of seek function

calls used in the process

7. # of Read: the number of read function

calls used to map data from disk to memory

8. # of Write: the number of write function

calls used to map data from memory to disk

9. Memory usage: the memory locations allocated to the data structure used to hold

the blocks in memory which is mainly one

row of blocks plus two blocks.

During initial testing we found that block mapping large files has a significant impact on the system resources. We did additional tests to assess the impact of different block sizes on running time.

4.1 Matrix multiplication

In ordinary matrix multiplication (C = A x B), the data sets of the matrices A, B, and C are mapped into memory by an mmap system call.

Virtual memory is tested by mapping files into memory.

The original code for matrix multiplication

C = A x B (VMM) is given as following: double matmul(int n, double *A)

{

int i, j, k;

double dval;

double *a, *b, *c;

double *B, *C;

double sum;

B = A + n*n;

C = B + n*n;

a = A;

b = B;

c = C;

sum = 0.0;

// A[i]

// B[i]

// C[i]

for (i = 0; i < n; i++)

{

for (j = 0; j < n; j++)

{

dval = 0.0;

for (k = 0; k < n; k++) dval += a[k] * b[k*n+j];

c[j] = dval;

sum += dval;

}

a += n;

c += n;

}

return sum;

}

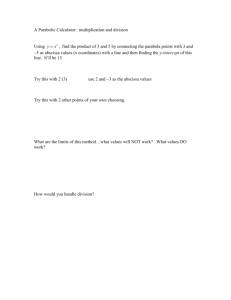

The performance values are showed in Figures

4.1 and 4.2. Compared with block mapping, this method causes more major page faults so the elapsed time is generally very large (over half an hour for the smaller problem and 4.5 hours for the larger).

Regular matrix multiplication (VMM) : C = A x B

User time:

1865.48

System time:

0.23

Elapsed:

31:05.7

Page fault (major):

6229

Page fault (minor):

11

Figure 4.1 Performance of matrix multiplication for matrix size 1024 x 1024

User time:

15,993.07

Regular matrix multiplication (VMM): C = A x B

System time: Elapsed: Page fault (major): Page fault (minor):

16.42 4:31:27 180,538 11

Figure 4.2 Performance of matrix multiplication for matrix size 2048 x 2048

4.2 The dimensions of the matrix are divisible by the dimensions of the block

To implement block multiplication, we divided the matrix into blocks. Each block has size M x M. Then we perform the block multiplication described in Section 3. We map one row of blocks of matrix A into the memory as well as one block of B and one block of C.

After each block multiplication, we store the block of matrix C and release the block of matrix

B. We release all blocks after we finish one row of blocks. We start over for another row of blocks until the whole matrix multiplication is finished. The block multiplication code is given as following: double matmul(int n,Matrix *mptr,int bs)

{

int i, j, k;

int bindex = n, cindex = n + n;

double dval;

double *a, *b, *c;

double sum = 0.0;

int bkrow = n/bs;

int bno, cno, ano, newbindex;

for (i = 0; i < n; i += bs)

{

for (j = 0; j < n; j += bs)

{

ano = i/bs * bkrow;

a=block_attach(mptr,ano,-1,

i,0,bs,bs);

assert(a);

cno=(cindex+i)/bs*bkrow+j/bs;

c=block_attach(mptr, cno, 0,

cindex+i,j,bs,bs);

assert(c);

bno= bindex/bs * bkrow + j/bs;

b=block_attach(mptr,bno,-1,

bindex,j,bs,bs);

assert(b);

sum+=sub_matmul(a,b,c,bs,bs,bs);

for (k = 1; k < bkrow; k++)

{

block_release(mptr, bno);

newbindex = bindex + k * bs;

bno = newbindex/bs*bkrow+j/bs;

b=block_attach(mptr,bno,-1,

newbindex,j,bs,bs);

assert(b);

++ano;

a = block_attach(mptr,ano,-1,

i,k*bs,bs,bs);

assert(a);

sum+=sub_matmul(a,b,c,bs,bs,bs);

}

block_release(mptr, bno);

block_release(mptr, cno);

}

for (k = 0; k < bkrow; k++) block_release(mptr, ano--);

} // end of row loop

return sum;

}

For comparison, we have measured the performance of the matrix multiplication for different block sizes. We did experiments on the block sizes 512 x 512, 256 x 256, 128 x 128, 64 x

64, 32 x 32, 16x16, and 8 x 8. We applied these different block sizes to the data sets of 24MB and

96MB. The performance values are listed in

Figures 4.3 and 4.4.

From the experiments we see that block multiplication uses only 25% to 50% of execution time of regular multiplication (VMM).

The one exception is for a laughably small block size (8 x 8).

4.3. The dimensions of the matrix are not divisible by the dimensions of the block

To implement the block multiplication, we have divided the matrix N x N into blocks of size

M x M. Since the dimensions of the matrix are not divisible by the dimensions of the block, the last block in a row of blocks is of size M x

M%N, the last row of blocks is of size N%M x

M, and the last block of a matrix is of size N%M x N%M. Before we can perform block multiplication, we save the block dimensions in a table. Then we apply the block multiplication sketched in Section 3. We map one row of blocks of A in memory, as well as one block of B and one block of C. After each macro block multiplication, we store the block of C and release block of B. We release all blocks after finish one row of blocks. We start over for another row of blocks until the whole matrix multiplication is finished.

Since we have to maintain different dimensions of blocks and perform different dimensions macro multiplication, the code is thus more complicated (code not given here, due to space limitation).

For comparison, we measured the performance of the matrix multiplication for

Case Number 1 2 3 4 5 6 7

Block Size

User time

System time

Elapsed

Page fault (major)

Page fault (minor)

512 x 512 256 x 256 128 x 128

1023.82

12.09

17:06.0

97

2065

587.61

4.52

09:52.0

97

787

438.03

11.75

07:30.0

97

344

64 x 64

446.15

32.26

07:59.0

97

158

32 x 32

340.15

105.97

07:46.0

97

85

16 x 16 8 x 8

428.33 963.67

400.82 1,589.48

13:50.0 42:33.0

97

59

97

80

# of Seek

# of Read

# of Write

40,960

30,720

10,240

122,880

102,400

20,480

409,600

368,640

40,960

1,474,560

1,392,640

81,920

5,570,560 21,626,880 85,196,800

5,406,720 21,299,200 84,541,440

163,840 327,680

Memory usage 8,388,863 3,146,199 1,311,911 593,607 292,103 186,759

Figure 4.3 Performance for matrices of size 1024 x 1024, dimensions divisible by block size

655,360

267,911

Case Number

Block Size

User time

System time

Elapsed

Page fault (major)

Page fault (minor)

# of Seek

# of Read

1 2 3

512 x 512 256 x 256 128 x 128

8170.23 5815.59 3479.9

33.99 55.65 110.1

4

64 x 64

3545.58

271.85

5

32 x 32

2709.36

872.67

6

16 x 16

3589.8

3286.81

7

8 x 8

10,257.57

13,242.61

2:30:42 1:40:08 1:09:51

101,782 84,780 90,329

3,091 1,304 608

1:32:20

82,097

289

1:10:02

82,152

158

2:02:11

82,135

129

6:41:08

90,282

242

245,760 819,200 2,949,120 11,141,120 43,253,760 170,393,600 676,331,520

204,800 737,280 2,785,280 10,813,440 42,598,400 169,082,880 673,710,080

# of Write

Memory usage

40,960 81,920 163,840 327,680

12,583,383 5,244,071 2,363,079 1,127,687

655,360 1,310,720

592,263 467,591

2,621,440

927,879

Figure 4.4 Performance for matrices of size 2048 x 2048, dimensions divisible by block size

Case number

Block size

User time

System time

Elapsed

Page fault (major)

Page fault (minor)

# of Seek

# of Read

# of Write

Memory usage

1 2 3

500 x 500 260 x 260 130 x 130

1389.07 1019.51 905.48

3.85 5.21 12.35

23:28.0 17:05.0 15:18.0

97 97 97

4

65 x 65

911.2

33.98

15:45.0

97

5

33 x 33

876.65

107.8

16:25.0

97

6

17 x 17

965.33

367.94

22:35.0

6,258

7

15 x 15

992.9

475.55

24:28.0

97

2,459 812 355 170 119 170 199

76,800 122,880 409,600 1,474,560 5,570,560 19,676,160 25,082,880

61,440 102,400 368,640 1,392,640 5,406,720 19,363,840 24,729,600

15,360 20,480 40,960

10,000,351 3,245,271 1,353,191

81,920

612,183

163,840

309,783

312,320

192,639

353,280

187,551

Figure 4.5 Performance for matrices of size 1024 x 1024, dimensions not divisible by block size

8

9 x 9

1323.09

1265.6

43:09.0

97

462

67,706,880

67,123,200

583,680

235,359

Case number

Block size

User time

System time

1 2 3

500 x 500 260 x 260 130 x 130

11077.7 8178.1 7215.81

77.03 67.32 127.15

4

65 x 65

7256.11

299.93

5

33 x 33

6996.72

884.59

6

17 x 17

7784.24

3056.78

7 8

9 x 9 15 x 15

8188.66 122248.54

3789.09 10455.48

Elapsed

Page fault (major)

Page fault (minor)

# of Seek

# of Read

# of Write

Memory usage

3:21:37

205,820

3,437

2:25:38

93,221

1,345

2:32:20

86,292

634

2:26:00

82,086

327

2:18:40

82,062

280

3:08:57

82,145

557

3:28:05

82,057

681

6:28:04

82,172

1,736

358,400 819,200 2,949,120 11,141,120 41,932,800 152,401,920 195,000,320 536,985,600

307,200 737,280 2,785,280 10,813,440 41,287,680 151,162,880 193,597,440 534,650,880

51,200 81,920 163,840 327,680 645,120 1,239,040 1,402,880 2,334,720

14,000,615 5,409,191 2,437,383 1,162,775 616,311 464,559 480,495 781,191

Figure 4.6 Performance for matrices of size 2048 x 2048, dimensions not divisible by block size

different block sizes. We did experiments for block sizes 500 x 500, 250 x 250, 130 x 130, 65 x

65, 33 x 33, 17x17, and 9 x9. We applied these different block sizes on the data sets of 24MB and 96MB. The performance values are listed in

Figures 4.5 and 4.6.

From the experiments, we observe that our block multiplication uses only 50% to 75% of the execution time of regular multiplication. There one exception is again a tiny block size (9 x 9).

We also see that our block multiplication uses about double the time compared to the case where the dimensions of the matrix are divisible by the dimensions of the block.

5. Conclusion

Data sets arising in scientific and engineering programs are often too large to fit into the available memory. In this case, data must be transferred from and to remote storage, such as disk. These I/O transfers can quickly dominate the execution time of the program. It becomes critically important to minimize the I/O between main memory and disk.

Building on previous work on Comanche

[Robin98], we proposed an approach to the problem of improving the calculation of out-ofcore problems. Our extension of Comanche involves the use of block mapping to minimize

I/O.

The results of our experiments on block matrix multiplication indicate that our approach outperforms regular matrix multiplication by a significant factor, except when the block size is extremely small.

We have tested our matrix multiplication using a wide variety of block sizes, both where the dimension of the matrix is divisible by the dimensions of the block and where it is not. Not surprisingly, the results for the first group are significantly better than those of the second. If follows that one should choose block dimensions that divide the dimensions of the matrix.

Our results indicate that the blocks of size

128 x 128 and 32 x 32, using elapsed time as primary measure, perform better than others.

Overall, we conclude that the block size plays a crucial role in block mapping I/O minimization.

References

[1] K. Hwang and F. A. Briggs, “Computer

Architecture and Parallel Processing”,

McGraw-Hill, Inc., New York, 1984.

[2] M. S. Lam, E. E. Rothberg, and M. E. Wolf,

“The Cache Performance and Optimizations

of Blocked Algorithms”, in 4 th international

Conference on Architectural Support for

Programming Languages and Operating

Systems, pages 63-74,

Santa Clara, Calif., USA, 1991.

[3] E. L. Leiss, “Parallel and Vector

Computing: a Practical Introduction”,

McGraw-Hill, Inc., New York, 1995.

[4] A.C. McKellar, and E. G. Coffman, Jr.

“Organizing Matrices and Matrix Operations

for Paged Memory Systems”, pages 153-169,

Communications of the ACM, March 1969.

[5] L. H. Miller and A. E. Quilici, “The Joy of

C: Programming in C”,

John Wiley & Sons, Inc., New York, 1997.

[6] E. M. Robinson, “Compiler Driven I/O

Management”, Ph.D. dissertation,

Department of Computer Science,

University of Houston, 1998.

[7] M.E. Wolf and M. S. Lam, “A Data Locality

Optimizing Algorithm”, in Proceedings of

the ACM SIGPLAN ’91 Conference on

Programming Language Design and

Implementation, pages 30-44,

Toronto, Canada, June 1991, ACM Press.

[8] M. Wolfe, “High Performance Compiler for

Parallel Computing”,

Addison-Wesley, New York, 1996.