
Lecture 3

Lecture annotation

Approximate minimization of the energy functional, minimizing sequences, orthogonal series expansion, Ritz method, Galerkin method, finite element method. Inhomogeneous boundary conditions.

Many methods have been developed for the approximate minimization of the functional

$$ F(u) = (u,u)_A - 2(f,u). \tag{1} $$

Most approximate variational methods for minimizing (1) consist in constructing a sequence of elements $u_n \in H_A$ which can be proved to converge in the space $H_A$ to the sought generalized solution $u_0$, i.e.,

$$ \lim_{n\to\infty} \|u_n - u_0\|_A = 0. \tag{2} $$

As we know, the functional $F(u)$ attains its minimum on $H_A$ if and only if $u = u_0$ in $H_A$, and this minimum equals $-\|u_0\|_A^2$. The solution $u_0$ is uniquely determined by relation (47) of Lecture 2.

A sequence $\{u_n\}$ of functions (elements) from $H_A$ is called a minimizing sequence if

$$ \lim_{n\to\infty} F(u_n) = -\|u_0\|_A^2. \tag{3} $$

The most important methods for constructing a minimizing sequence are the Ritz method and the closely related finite element method. The oldest one is the method of orthogonal series expansion.

Method of orthogonal series expansion

Assuming that the space $H_A$ is separable, we can find in it a complete system of orthonormal functions $\{\varphi_n\}$ which forms an orthonormal base of $H_A$. The existence of a base in the energetic space is very important in direct variational methods, because it means that an arbitrary function from $H_A$ can be approximated to arbitrary accuracy (in the metric of this space) by a suitable linear combination of the functions $\{\varphi_n\}$. Thus the completeness of the system plays an essential role in questions of convergence. The generalized solution can be expanded into an orthogonal series with respect to the system $\{\varphi_n\}$:

$$ u_0 = \sum_{n=1}^{\infty} (u_0, \varphi_n)_A\, \varphi_n. \tag{4} $$


Remark 1. Let $\{\varphi_n\}$ be a complete orthonormal system in the Hilbert space $H$ and let $u(x)$ be an arbitrary function from this space. The numbers

$$ \alpha_n = (u, \varphi_n) $$

and the series

$$ \sum_{n=1}^{\infty} \alpha_n \varphi_n(x) $$

are called the Fourier coefficients and the Fourier series of the function $u(x)$ with respect to the system $\{\varphi_n\}$, respectively. An example of a complete orthonormal system in $L_2(0,l)$ is the system of functions

$$ \sqrt{\frac{2}{l}}\,\sin\frac{n\pi x}{l}, \qquad n = 1, 2, \ldots $$

Recall (Lecture 2) that the solution $u_0$ is uniquely determined by the relation

$$ (u_0, v)_A = (f, v) \quad \text{for all } v \in H_A. \tag{5} $$

Substituting $v = \varphi_n$ in (5), we get

$$ (u_0, \varphi_n)_A = (f, \varphi_n). \tag{6} $$

From Eqs. (4) and (6) we obtain the generalized solution in the form of an orthogonal series (in the energetic space)

$$ u_0 = \sum_{n=1}^{\infty} (f, \varphi_n)\, \varphi_n. \tag{7} $$

The series (7) converges both in the energetic metric and in the metric of the basic space $L_2(\Omega)$.

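Remark 2. Convergence of an infinite series

$$ \sum_{n=1}^{\infty} v_n(x), \qquad v_n(x) \in L_2(\Omega), \tag{8} $$

is defined through the convergence of a sequence: we construct the sequence of so-called partial sums

$$ s_k(x) = \sum_{n=1}^{k} v_n(x) \tag{9} $$

and call the series (8) convergent in the space $L_2(\Omega)$ with the sum $s \in L_2(\Omega)$ if

$$ s_k \to s \quad \text{in } L_2(\Omega), \quad \text{i.e.} \quad \lim_{k\to\infty}\|s_k - s\| = 0. \tag{10} $$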

Denote by $u_n$ the partial sums of the series (7):

$$ u_n = \sum_{k=1}^{n} (f, \varphi_k)\, \varphi_k. \tag{11} $$

Then $u_n$ converges to $u_0$ in energy, i.e., in the sense of (2). The drawback of the orthogonal series expansion is the time needed to carry out the orthogonalization process.

Example 1. Using the method of orthogonal series expansion, solve the problem for Poisson's equation in the domain $\Omega = (0<x<a,\ 0<y<b)$:

$$ -\Delta u = f \ \text{in } \Omega, \qquad u = 0 \ \text{on } \partial\Omega. \tag{1a} $$

A number of problems lead to this equation; see Lecture 1, Eq. (18) or Eq. (40).

Choose $H = L_2(\Omega)$, and as the domain of definition choose $D_A = D_{-\Delta}$, the linear set of functions twice continuously differentiable in $\bar\Omega$ and satisfying the boundary condition $(1a)_2$. This linear set is dense in $L_2(\Omega)$. As we know from Example 3 of Lecture 2, the operator $A = -\Delta$ is positive definite on $D_{-\Delta}$; hence we can construct the energetic space $H_A = H_{-\Delta}$ in the usual manner. As a base in this space, the complete orthogonal system of functions

$$ \sin\frac{m\pi x}{a}\,\sin\frac{n\pi y}{b}, \qquad m, n = 1, 2, \ldots \tag{1b} $$

can be chosen. Denote these functions as follows:

$$ \begin{aligned} \varphi_1 &= \sin\frac{\pi x}{a}\sin\frac{\pi y}{b}, \\ \varphi_2 &= \sin\frac{2\pi x}{a}\sin\frac{\pi y}{b}, \qquad \varphi_3 = \sin\frac{\pi x}{a}\sin\frac{2\pi y}{b}, \\ \varphi_4 &= \sin\frac{3\pi x}{a}\sin\frac{\pi y}{b}, \qquad \varphi_5 = \sin\frac{2\pi x}{a}\sin\frac{2\pi y}{b}, \qquad \varphi_6 = \sin\frac{\pi x}{a}\sin\frac{3\pi y}{b}, \\ &\ \ \vdots \end{aligned} \tag{1c} $$

The numbering in (1c) is apparent: we group the functions (1b) so that $m + n = i$, $i = 2, 3, \ldots$, and within every group we arrange them by descending value of the first index $m$, which successively takes the values $i-1, i-2, \ldots, 1$. Thus we obtain the system of functions $\varphi_s(x,y)$, $s = 1, 2, \ldots$
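The ordering rule just described can be written as a short routine. The following Python sketch is only an illustration; the function name `basis_index_pairs` is hypothetical and not part of the lecture.

```python
def basis_index_pairs(count):
    """Return the first `count` index pairs (m, n) of the functions (1b),
    ordered as in (1c): groups with m + n = i for i = 2, 3, ...,
    and inside each group the first index m decreases from i - 1 to 1."""
    pairs = []
    i = 2
    while len(pairs) < count:
        for m in range(i - 1, 0, -1):
            if len(pairs) == count:
                break
            pairs.append((m, i - m))
        i += 1
    return pairs


# index pairs of phi_1, ..., phi_6 in (1c)
print(basis_index_pairs(6))
# [(1, 1), (2, 1), (1, 2), (3, 1), (2, 2), (1, 3)]
```

The pair $(m, n)$ at position $s$ (counting from 1) gives $\varphi_s = \sin\frac{m\pi x}{a}\sin\frac{n\pi y}{b}$.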

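As we know,

$$ \int_0^a \sin\frac{j\pi x}{a}\sin\frac{m\pi x}{a}\,dx = \begin{cases} 0, & m \ne j, \\ \dfrac{a}{2}, & m = j, \end{cases} \tag{1d} $$

and similarly

$$ \int_0^b \sin\frac{k\pi y}{b}\sin\frac{n\pi y}{b}\,dy = \begin{cases} 0, & n \ne k, \\ \dfrac{b}{2}, & n = k. \end{cases} \tag{1e} $$

Therefore also

$$ \begin{aligned} &\left(\sin\frac{j\pi x}{a}\sin\frac{k\pi y}{b},\ \sin\frac{m\pi x}{a}\sin\frac{n\pi y}{b}\right) = \int_0^a\!\!\int_0^b \sin\frac{j\pi x}{a}\sin\frac{k\pi y}{b}\,\sin\frac{m\pi x}{a}\sin\frac{n\pi y}{b}\,dx\,dy \\ &\quad = \int_0^a \sin\frac{j\pi x}{a}\sin\frac{m\pi x}{a}\,dx \int_0^b \sin\frac{k\pi y}{b}\sin\frac{n\pi y}{b}\,dy = \begin{cases} \dfrac{ab}{4}, & m = j \text{ and simultaneously } n = k, \\ 0 & \text{in other cases.} \end{cases} \end{aligned} \tag{1f} $$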

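Obviously, the functions $\varphi_s$ belong to the linear set $D_{-\Delta}$, hence we can write

$$ (\varphi_s, \varphi_t)_{-\Delta} = (-\Delta\varphi_s, \varphi_t) = -\left(\frac{\partial^2\varphi_s}{\partial x^2} + \frac{\partial^2\varphi_s}{\partial y^2},\ \varphi_t\right). $$

Because

$$ \frac{\partial^2}{\partial x^2}\left(\sin\frac{j\pi x}{a}\sin\frac{k\pi y}{b}\right) = -\frac{j^2\pi^2}{a^2}\sin\frac{j\pi x}{a}\sin\frac{k\pi y}{b}, \qquad \frac{\partial^2}{\partial y^2}\left(\sin\frac{j\pi x}{a}\sin\frac{k\pi y}{b}\right) = -\frac{k^2\pi^2}{b^2}\sin\frac{j\pi x}{a}\sin\frac{k\pi y}{b}, \tag{1g} $$

it holds

$$ \begin{aligned} &\left(\sin\frac{j\pi x}{a}\sin\frac{k\pi y}{b},\ \sin\frac{m\pi x}{a}\sin\frac{n\pi y}{b}\right)_{-\Delta} = \left(\frac{j^2\pi^2}{a^2} + \frac{k^2\pi^2}{b^2}\right)\int_0^a\!\!\int_0^b \sin\frac{j\pi x}{a}\sin\frac{k\pi y}{b}\,\sin\frac{m\pi x}{a}\sin\frac{n\pi y}{b}\,dx\,dy \\ &\quad = \begin{cases} \dfrac{ab}{4}\left(\dfrac{j^2\pi^2}{a^2} + \dfrac{k^2\pi^2}{b^2}\right), & m = j \text{ and simultaneously } n = k, \\ 0 & \text{in other cases.} \end{cases} \end{aligned} \tag{1h} $$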
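Relation (1h) can be cross-checked numerically. The sketch below assumes, as usual for functions vanishing on the boundary, that Green's formula turns $(-\Delta u, v)$ into $\iint (u_x v_x + u_y v_y)\,dx\,dy$; the helper names `energy_product` and `energy_formula` are hypothetical. The midpoint rule is used because, for these trigonometric products, it is exact up to rounding.

```python
import math


def energy_product(j, k, m, n, a, b, N=100):
    """Midpoint-rule approximation of
    (phi_jk, phi_mn)_{-Delta} = integral of grad(phi_jk) . grad(phi_mn)
    over (0, a) x (0, b), where phi_jk = sin(j pi x / a) sin(k pi y / b)."""
    hx, hy = a / N, b / N
    xs = [(p + 0.5) * hx for p in range(N)]
    ys = [(q + 0.5) * hy for q in range(N)]
    total = 0.0
    for x in xs:
        sj, cj = math.sin(j * math.pi * x / a), math.cos(j * math.pi * x / a)
        sm, cm = math.sin(m * math.pi * x / a), math.cos(m * math.pi * x / a)
        for y in ys:
            sk, ck = math.sin(k * math.pi * y / b), math.cos(k * math.pi * y / b)
            sn, cn = math.sin(n * math.pi * y / b), math.cos(n * math.pi * y / b)
            # partial derivatives of both basis functions at (x, y)
            ux, uy = (j * math.pi / a) * cj * sk, (k * math.pi / b) * sj * ck
            vx, vy = (m * math.pi / a) * cm * sn, (n * math.pi / b) * sm * cn
            total += ux * vx + uy * vy
    return total * hx * hy


def energy_formula(j, k, a, b):
    """Diagonal value of (1h)."""
    return a * b / 4 * (j**2 * math.pi**2 / a**2 + k**2 * math.pi**2 / b**2)


print(energy_product(2, 3, 2, 3, a=2.0, b=1.0), energy_formula(2, 3, 2.0, 1.0))
print(energy_product(1, 1, 2, 1, a=2.0, b=1.0))  # off-diagonal entry, close to 0
```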

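The functions (1c) are orthogonal both in $H$ and in $H_{-\Delta}$ (see (1f) and (1h)), because two different functions from this set have $j \ne m$ or $k \ne n$. Hence, according to (1h), the functions

$$ \begin{aligned} \psi_1 &= \frac{1}{\sqrt{\dfrac{ab}{4}\left(\dfrac{\pi^2}{a^2} + \dfrac{\pi^2}{b^2}\right)}}\,\sin\frac{\pi x}{a}\sin\frac{\pi y}{b}, \\ \psi_2 &= \frac{1}{\sqrt{\dfrac{ab}{4}\left(\dfrac{4\pi^2}{a^2} + \dfrac{\pi^2}{b^2}\right)}}\,\sin\frac{2\pi x}{a}\sin\frac{\pi y}{b}, \qquad \psi_3 = \frac{1}{\sqrt{\dfrac{ab}{4}\left(\dfrac{\pi^2}{a^2} + \dfrac{4\pi^2}{b^2}\right)}}\,\sin\frac{\pi x}{a}\sin\frac{2\pi y}{b}, \\ \psi_4 &= \frac{1}{\sqrt{\dfrac{ab}{4}\left(\dfrac{9\pi^2}{a^2} + \dfrac{\pi^2}{b^2}\right)}}\,\sin\frac{3\pi x}{a}\sin\frac{\pi y}{b}, \qquad \psi_5 = \frac{1}{\sqrt{\dfrac{ab}{4}\left(\dfrac{4\pi^2}{a^2} + \dfrac{4\pi^2}{b^2}\right)}}\,\sin\frac{2\pi x}{a}\sin\frac{2\pi y}{b}, \\ \psi_6 &= \frac{1}{\sqrt{\dfrac{ab}{4}\left(\dfrac{\pi^2}{a^2} + \dfrac{9\pi^2}{b^2}\right)}}\,\sin\frac{\pi x}{a}\sin\frac{3\pi y}{b}, \\ &\ \ \vdots \end{aligned} \tag{1i} $$

are orthonormal in the space $H_{-\Delta}$. Thus the system (1i) forms an orthonormal base in $H_{-\Delta}$. According to (7), we get the solution of problem (1a) in the form

$$ u_0 = \sum_{s=1}^{\infty} a_s \psi_s, \tag{1j} $$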

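where

$$ \begin{aligned} a_1 &= (f, \psi_1) = \frac{1}{\sqrt{\dfrac{ab}{4}\left(\dfrac{\pi^2}{a^2} + \dfrac{\pi^2}{b^2}\right)}}\int_0^a\!\!\int_0^b f(x,y)\sin\frac{\pi x}{a}\sin\frac{\pi y}{b}\,dx\,dy, \\ a_2 &= (f, \psi_2) = \frac{1}{\sqrt{\dfrac{ab}{4}\left(\dfrac{4\pi^2}{a^2} + \dfrac{\pi^2}{b^2}\right)}}\int_0^a\!\!\int_0^b f(x,y)\sin\frac{2\pi x}{a}\sin\frac{\pi y}{b}\,dx\,dy, \end{aligned} \tag{1k} $$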

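etc., so that, with regard to the form of the functions (1i), we can write

$$ u_0 = \sum_{m,n=1}^{\infty} c_{mn}\sin\frac{m\pi x}{a}\sin\frac{n\pi y}{b}, \tag{1l} $$

where

$$ c_{mn} = \frac{1}{\dfrac{ab}{4}\left(\dfrac{m^2\pi^2}{a^2} + \dfrac{n^2\pi^2}{b^2}\right)}\int_0^a\!\!\int_0^b f(x,y)\sin\frac{m\pi x}{a}\sin\frac{n\pi y}{b}\,dx\,dy. \tag{1m} $$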

As we have seen at the beginning of this Lecture (see Eq. (7)), for $f \in L_2(\Omega)$ the series (1l) converges both in the energetic space $H_{-\Delta}$ and in the basic space $L_2(\Omega)$. Thus problem (1a) is solved.
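To make (1l)-(1m) concrete, here is a minimal Python sketch that sums the series for the constant load $f = 1$ (our choice; the lecture keeps $f$ general). The function name and the truncation parameter `max_index` are hypothetical. For $f = 1$ the double integral in (1m) equals $4ab/(mn\pi^2)$ when $m$ and $n$ are both odd and vanishes otherwise.

```python
import math


def series_solution_unit_load(x, y, a=1.0, b=1.0, max_index=199):
    """Truncated series (1l)-(1m) for -Delta u = 1 in (0,a)x(0,b), u = 0 on
    the boundary. Only odd m, n contribute because the load integral
    vanishes for even indices."""
    u = 0.0
    for m in range(1, max_index + 1, 2):
        for n in range(1, max_index + 1, 2):
            integral = 4 * a * b / (m * n * math.pi**2)          # integral in (1m)
            energy = a * b / 4 * (m**2 * math.pi**2 / a**2 + n**2 * math.pi**2 / b**2)
            c_mn = integral / energy                              # coefficient (1m)
            u += c_mn * math.sin(m * math.pi * x / a) * math.sin(n * math.pi * y / b)
    return u


print(series_solution_unit_load(0.5, 0.5))  # approximately 0.0737 for a = b = 1
```

The coefficients decay like $1/\big(mn(m^2/a^2 + n^2/b^2)\big)$, so a few hundred indices in each direction are ample for a check of this kind.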

Ritz method

The Ritz method was mentioned at the end of Lecture 1. We now present it in more detail in the light of the theory developed so far. First, we state the necessary assumptions: let $A$ be, as before, a positive definite operator on a linear domain $D_A$ of admissible functions, dense in a separable Hilbert space $H$, and let $f \in H$. Let $H_A$ be the energetic space of the operator $A$ (hence also separable, because $H$ is separable). Consider a base in the space $H_A$ (thus, a countable, linearly independent, complete system):

$$ \varphi_1, \varphi_2, \ldots \tag{12} $$

In contrast to the method of orthogonal series (see Example 1), this base is not assumed to be orthogonal (let alone orthonormal).

As already stated, the generalized solution of $Au = f$ is defined as the function $u_0 \in H_A$ which minimizes the functional $F(u) = (u,u)_A - 2(f,u)$ on $H_A$, i.e., for which

$$ F(u_0) = \min_{u \in H_A} F(u). \tag{13} $$

Choose a natural number $n$ and seek the approximation $u_n$ of $u_0$ in the form

$$ u_n = \sum_{k=1}^{n} c_k \varphi_k, \tag{14} $$

where $\varphi_k$ are the functions of the base (12) and $c_k$ are as yet unknown real constants, determined so that

$$ F(u_n) = \min. \tag{15} $$

In more detail: among all approximations of the form

$$ v_n = \sum_{k=1}^{n} b_k \varphi_k, \tag{16} $$

where $b_k$ are arbitrary real constants (i.e., on the $n$-dimensional subspace spanned by $\varphi_1, \varphi_2, \ldots, \varphi_n$), the approximation (14) makes the functional $F(u) = (u,u)_A - 2(f,u)$ minimal.

The system (12) is assumed to be a base in $H_A$; hence the generalized solution $u_0$ can be approximated to arbitrary accuracy by a linear combination of the basis functions. Moreover, condition (15) is analogous to condition (13). Thus it can intuitively be expected that, for sufficiently large $n$, the approximation (14) will differ in $H_A$ only slightly from the sought solution $u_0$.

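The constants $c_k$ in (14) are relatively simple to determine (this is an essential advantage of the Ritz method). Namely, substituting (16) for $u$ in $F(u) = (u,u)_A - 2(f,u)$, we get

$$ \begin{aligned} F(v_n) &= (b_1\varphi_1 + \ldots + b_n\varphi_n,\ b_1\varphi_1 + \ldots + b_n\varphi_n)_A - 2(f,\ b_1\varphi_1 + \ldots + b_n\varphi_n) \\ &= (\varphi_1,\varphi_1)_A b_1^2 + (\varphi_1,\varphi_2)_A b_1 b_2 + \ldots + (\varphi_1,\varphi_n)_A b_1 b_n \\ &\quad + (\varphi_2,\varphi_1)_A b_2 b_1 + (\varphi_2,\varphi_2)_A b_2^2 + \ldots + (\varphi_2,\varphi_n)_A b_2 b_n \\ &\quad + \ldots \\ &\quad + (\varphi_n,\varphi_1)_A b_n b_1 + (\varphi_n,\varphi_2)_A b_n b_2 + \ldots + (\varphi_n,\varphi_n)_A b_n^2 \\ &\quad - 2(f,\varphi_1) b_1 - 2(f,\varphi_2) b_2 - \ldots - 2(f,\varphi_n) b_n, \end{aligned} \tag{17} $$

or, using the symmetry of the scalar product,

$$ (\varphi_1,\varphi_2)_A b_1 b_2 = (\varphi_2,\varphi_1)_A b_2 b_1, \quad \text{etc.}, $$

we obtain

$$ \begin{aligned} F(v_n) &= (\varphi_1,\varphi_1)_A b_1^2 + 2(\varphi_1,\varphi_2)_A b_1 b_2 + \ldots + 2(\varphi_1,\varphi_n)_A b_1 b_n \\ &\quad + (\varphi_2,\varphi_2)_A b_2^2 + \ldots + 2(\varphi_2,\varphi_n)_A b_2 b_n \\ &\quad + \ldots \\ &\quad + (\varphi_n,\varphi_n)_A b_n^2 \\ &\quad - 2(f,\varphi_1) b_1 - 2(f,\varphi_2) b_2 - \ldots - 2(f,\varphi_n) b_n. \end{aligned} \tag{18} $$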

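Because the scalar products $(\varphi_i,\varphi_k)_A$ are fixed numbers determined by the functions of the given base, the functional $F$ becomes, after substituting (16) for $u$, a quadratic function of the variables $b_1, \ldots, b_n$. For this function to attain a minimum at the point $(c_1, \ldots, c_n)$, it is necessary (and, since the quadratic form is positive definite, also sufficient) that

$$ \left.\frac{\partial F(v_n)}{\partial b_1}\right|_{b_1=c_1,\ldots,b_n=c_n} = 0, \quad \ldots, \quad \left.\frac{\partial F(v_n)}{\partial b_n}\right|_{b_1=c_1,\ldots,b_n=c_n} = 0. \tag{19} $$

By (18), $\dfrac{\partial F(v_n)}{\partial b_i} = 2\sum_{k=1}^{n}(\varphi_i,\varphi_k)_A\, b_k - 2(f,\varphi_i)$, so conditions (19) are linear in $c_1, \ldots, c_n$.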

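that is, they are the equations

$$ \begin{aligned} (\varphi_1,\varphi_1)_A c_1 + (\varphi_1,\varphi_2)_A c_2 + \ldots + (\varphi_1,\varphi_n)_A c_n &= (f,\varphi_1), \\ (\varphi_1,\varphi_2)_A c_1 + (\varphi_2,\varphi_2)_A c_2 + \ldots + (\varphi_2,\varphi_n)_A c_n &= (f,\varphi_2), \\ &\ \ \vdots \\ (\varphi_1,\varphi_n)_A c_1 + (\varphi_2,\varphi_n)_A c_2 + \ldots + (\varphi_n,\varphi_n)_A c_n &= (f,\varphi_n). \end{aligned} \tag{20} $$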

System (20) is a set of $n$ linear equations for the $n$ unknown constants $c_1, \ldots, c_n$. Because the functions $\varphi_1, \ldots, \varphi_n$ are assumed to be linearly independent, the determinant of system (20) (the so-called Gram determinant of the functions $\varphi_1, \ldots, \varphi_n$ with respect to the scalar product $(\cdot,\cdot)_A$) is nonzero. Hence system (20) has a unique solution. In connection with the finite element method (which is, in essence, the Ritz method with a base formed by functions of a very special type), the matrix with elements $(\varphi_i,\varphi_k)_A$ is called the stiffness matrix and the vector on the right-hand side of (20) the load vector.
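As an illustration of how system (20) is assembled and solved, the following sketch treats a model problem of our own choosing (not from the lecture): $-u'' = x$ on $(0,1)$, $u(0) = u(1) = 0$, with the energy product assumed to be $(u,v)_A = \int_0^1 u'v'\,dx$ and the non-orthogonal base $\varphi_k = x^k(1-x)$. Exact rational arithmetic avoids any rounding question; the helper names are hypothetical. Since the exact solution $(x - x^3)/6 = \tfrac16\varphi_1 + \tfrac16\varphi_2$ lies in the span of the first two basis functions, the Ritz method must reproduce it for $n \ge 2$.

```python
from fractions import Fraction


def solve_exact(matrix, rhs):
    """Gauss-Jordan elimination in exact rational arithmetic."""
    n = len(rhs)
    aug = [list(row) + [r] for row, r in zip(matrix, rhs)]
    for col in range(n):
        pivot = next(r for r in range(col, n) if aug[r][col] != 0)
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(n):
            if r != col and aug[r][col] != 0:
                factor = aug[r][col] / aug[col][col]
                aug[r] = [x - factor * y for x, y in zip(aug[r], aug[col])]
    return [aug[i][n] / aug[i][i] for i in range(n)]


def ritz_coefficients(n):
    """Ritz system (20) for -u'' = x on (0, 1), u(0) = u(1) = 0,
    with phi_k = x^k (1 - x) and (u, v)_A = integral_0^1 u' v' dx."""

    def stiffness(i, k):
        # (phi_i, phi_k)_A, using phi_i' = i x^(i-1) - (i+1) x^i
        return (Fraction(i * k, i + k - 1) - Fraction(i * (k + 1), i + k)
                - Fraction((i + 1) * k, i + k) + Fraction((i + 1) * (k + 1), i + k + 1))

    def load(k):
        # (f, phi_k) = integral_0^1 x (x^k - x^(k+1)) dx
        return Fraction(1, k + 2) - Fraction(1, k + 3)

    K = [[stiffness(i, k) for k in range(1, n + 1)] for i in range(1, n + 1)]
    F = [load(k) for k in range(1, n + 1)]
    return solve_exact(K, F)


print(ritz_coefficients(1))  # [Fraction(1, 4)]
print(ritz_coefficients(3))  # [Fraction(1, 6), Fraction(1, 6), Fraction(0, 1)]
```

With $n = 1$ the Ritz method returns only the best energy-norm approximation $\tfrac14\varphi_1$; from $n = 2$ on the exact solution is recovered and further coefficients vanish.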

Remark 3. In particular, if the base is orthonormal in $H_A$ (its functions will be denoted $\psi_1, \ldots, \psi_n$ in this case), then

$$ (\psi_i, \psi_k)_A = \begin{cases} 0 & \text{for } i \ne k, \\ 1 & \text{for } i = k, \end{cases} \tag{21} $$

and system (20) takes the form

$$ c_1 = (f,\psi_1), \quad c_2 = (f,\psi_2), \quad \ldots, \quad c_n = (f,\psi_n). \tag{22} $$

In this case the Ritz method gives the approximation $u_n$ as the sum

$$ u_n = \sum_{k=1}^{n} (f,\psi_k)\,\psi_k, \tag{23} $$

which is identical with the partial sum (11) familiar from the method of orthogonal series.

Galerkin’s method

Consider a separable Hilbert space $H$ and a set $M$ of its functions which is dense in $H$. By the fundamental lemma of the calculus of variations (see also Eq. (25)), if for a certain function $u$

$$ (u, v) = 0 \quad \text{for every } v \in M, \tag{24} $$

then $u = 0$ in $H$. Let

$$ \varphi_1, \varphi_2, \ldots \tag{25} $$

be a base in $H$. We assert that if $(u,\varphi_k) = 0$ for all $k = 1, 2, \ldots$, then again $u = 0$ in $H$. In concise form:

$$ (u,\varphi_k) = 0 \ \text{for } k = 1, 2, \ldots \ \Longrightarrow\ u = 0 \ \text{in } H. \tag{26} $$

Indeed, since (25) is by assumption a base in $H$, the set $N$ of all functions of the form

$$ \sum_{k=1}^{n} c_k \varphi_k, \tag{27} $$

where $n$ is an arbitrary natural number and $c_k$ are arbitrary real constants, is dense in $H$. Because $(u,\varphi_k) = 0$ for every $k$, it also holds for every function (27) from the set $N$ that

$$ \left(u, \sum_{k=1}^{n} c_k\varphi_k\right) = 0. \tag{28} $$

Applying (24) with $M = N$, we see that (26) follows from (28).

Consider in $H$ the equation

$$ Au = f. \tag{29} $$

If we find $u_0 \in D_A$ such that

$$ (Au_0 - f, \varphi_k) = 0 \quad \text{for every } k = 1, 2, \ldots, \tag{30} $$

then, according to (26),

$$ Au_0 - f = 0 \quad \text{in } H, \tag{31} $$

hence $u_0 \in D_A$ is the solution of (29) in $H$. The simple observation that (31) follows from (30) forms the basis of Galerkin's method. Assume, in addition, that the base (25) and the domain of definition $D_A$ of the operator $A$ are such that every linear combination (27) of the basis functions belongs to $D_A$, and seek an approximate solution $u_n$ of equation (29) in the form

$$ u_n = \sum_{k=1}^{n} c_k \varphi_k, \tag{32} $$

where $n$ is an arbitrary but fixed natural number and $c_k$ are as yet unknown constants, which we determine from the condition

$$ (Au_n - f, \varphi_k) = 0, \qquad k = 1, 2, \ldots, n, \tag{33} $$

which is analogous to condition (30). Condition (33) represents a set of $n$ equations for the $n$ unknown constants $c_1, \ldots, c_n$. If the operator $A$ is linear, condition (33) takes the form

$$ \begin{aligned} (A\varphi_1,\varphi_1)c_1 + (A\varphi_2,\varphi_1)c_2 + \ldots + (A\varphi_n,\varphi_1)c_n &= (f,\varphi_1), \\ (A\varphi_1,\varphi_2)c_1 + (A\varphi_2,\varphi_2)c_2 + \ldots + (A\varphi_n,\varphi_2)c_n &= (f,\varphi_2), \\ &\ \ \vdots \\ (A\varphi_1,\varphi_n)c_1 + (A\varphi_2,\varphi_n)c_2 + \ldots + (A\varphi_n,\varphi_n)c_n &= (f,\varphi_n). \end{aligned} \tag{34} $$

If, in addition, the operator $A$ is positive (and thus symmetric, so that $(A\varphi_i,\varphi_j) = (\varphi_i,A\varphi_j)$) and we use the previously introduced scalar product $(u,v)_A = (Au,v)$, system (34) can be written in a form identical with (20), which was obtained by the Ritz method.

Remark 4. For a positive definite operator, the Galerkin method brings nothing new compared with the Ritz method: both methods lead to the same system of linear equations and to the same sequence of approximate solutions. However, the Galerkin method has a much wider scope than the Ritz method. The Galerkin method, characterized by relation (33), imposes no essential restrictions on the operator $A$: $A$ is not required to be positive definite, it need not be symmetric, and it need not even be linear. Thus the Galerkin method can formally be used for very general operators. It must be expected, however, that questions concerning the solvability of (33) and the convergence of the Galerkin sequence are then, in general, very difficult to resolve.
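Remark 4 can be illustrated with a non-symmetric operator, for which no Ritz functional is available but (33) still makes sense. The sketch below, on a model problem of our own choosing, applies (34) to $Au = -u'' + u'$ with $f = 1$ on $(0,1)$, $u(0) = u(1) = 0$, and the base $\varphi_k = x^k(1-x)$; all helper names are hypothetical. The Galerkin matrix comes out non-symmetric, and the result can be compared with the exact solution $u = x - (e^x - 1)/(e - 1)$, which one checks directly.

```python
from fractions import Fraction
import math


def poly_mul(p, q):
    """Product of polynomials given as coefficient lists (index = power)."""
    out = [Fraction(0)] * (len(p) + len(q) - 1)
    for i, a in enumerate(p):
        for j, b in enumerate(q):
            out[i + j] += a * b
    return out


def poly_der(p):
    return [i * p[i] for i in range(1, len(p))] or [Fraction(0)]


def poly_int01(p):
    """Integral of the polynomial over (0, 1)."""
    return sum(Fraction(c) / (i + 1) for i, c in enumerate(p))


def basis(k):
    # phi_k = x^k - x^(k+1), so phi_k(0) = phi_k(1) = 0
    p = [Fraction(0)] * (k + 2)
    p[k], p[k + 1] = Fraction(1), Fraction(-1)
    return p


def apply_operator(p):
    # A u = -u'' + u' (not symmetric)
    d1, d2 = poly_der(p), poly_der(poly_der(p))
    d2 = d2 + [Fraction(0)] * (len(d1) - len(d2))
    return [b - a for a, b in zip(d2, d1)]


def solve_exact(matrix, rhs):
    """Gauss-Jordan elimination in exact rational arithmetic."""
    n = len(rhs)
    aug = [list(row) + [r] for row, r in zip(matrix, rhs)]
    for col in range(n):
        pivot = next(r for r in range(col, n) if aug[r][col] != 0)
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(n):
            if r != col and aug[r][col] != 0:
                factor = aug[r][col] / aug[col][col]
                aug[r] = [x - factor * y for x, y in zip(aug[r], aug[col])]
    return [aug[i][n] / aug[i][i] for i in range(n)]


def galerkin(n):
    """Galerkin system (34): row k reads sum_i (A phi_i, phi_k) c_i = (f, phi_k)."""
    phis = [basis(k) for k in range(1, n + 1)]
    a_phis = [apply_operator(p) for p in phis]
    G = [[poly_int01(poly_mul(a_phis[i], phis[k])) for i in range(n)] for k in range(n)]
    F = [poly_int01(phis[k]) for k in range(n)]          # f = 1
    return G, solve_exact(G, F)


def evaluate(coeffs, x):
    return sum(float(c) * (x**k - x**(k + 1)) for k, c in enumerate(coeffs, start=1))


G, c = galerkin(4)
print(G[0][1], G[1][0])  # 11/60 3/20, i.e. (A phi_2, phi_1) != (A phi_1, phi_2)
print(evaluate(c, 0.5), 0.5 - (math.exp(0.5) - 1) / (math.e - 1))
```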

Remark 5. Although the Ritz method and the Galerkin method lead, for linear positive definite operators, to the same results, their basic principles are quite different, and the systems of equations (20) and (34) to which they lead also differ formally. The difference in the whole approach is apparent, e.g., in the routine solution of classical elasticity problems. Consider a simple example: find the deflection $u(x)$ of an inhomogeneous beam of varying cross-section and length $l$, subjected to a transverse load $q(x)$, with both ends clamped. Engineers usually approach this problem in one of two basic ways. Either they start from the corresponding differential equation

$$ \left(E(x)I(x)\,u''\right)'' = q(x), \tag{35} $$

where $E(x)$ is the (varying) Young's modulus and $I(x)$ the moment of inertia of the cross-section at the position $x$, with the boundary conditions

$$ u(0) = u'(0) = 0, \qquad u(l) = u'(l) = 0, \tag{36} $$

or they minimize the energy functional

$$ \frac{1}{2}\int_0^l EI\,(u'')^2\,dx - \int_0^l q u\,dx, \tag{37} $$

which expresses the total potential energy of the loaded beam (see Example 2 of Appendix 1), on the set of admissible, sufficiently smooth functions satisfying conditions (36).

If we seek an approximate solution of this problem in the form

$$ u_n = \sum_{k=1}^{n} c_k\varphi_k, \tag{38} $$

where the functions $\varphi_k(x)$ satisfy the boundary conditions (36), and apply the Galerkin method to problem (35), (36), we arrive at the system of equations (34):

$$ \begin{aligned} c_1\int_0^l (EI\varphi_1'')''\varphi_1\,dx + c_2\int_0^l (EI\varphi_2'')''\varphi_1\,dx + \ldots + c_n\int_0^l (EI\varphi_n'')''\varphi_1\,dx &= \int_0^l q\varphi_1\,dx, \\ c_1\int_0^l (EI\varphi_1'')''\varphi_2\,dx + c_2\int_0^l (EI\varphi_2'')''\varphi_2\,dx + \ldots + c_n\int_0^l (EI\varphi_n'')''\varphi_2\,dx &= \int_0^l q\varphi_2\,dx, \\ &\ \ \vdots \\ c_1\int_0^l (EI\varphi_1'')''\varphi_n\,dx + c_2\int_0^l (EI\varphi_2'')''\varphi_n\,dx + \ldots + c_n\int_0^l (EI\varphi_n'')''\varphi_n\,dx &= \int_0^l q\varphi_n\,dx. \end{aligned} \tag{39} $$

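If we minimize the functional (37) by the Ritz method, seeking the approximation $u_n$ in the form (38), we get the system

$$ \begin{aligned} c_1\int_0^l EI(\varphi_1'')^2\,dx + c_2\int_0^l EI\varphi_1''\varphi_2''\,dx + \ldots + c_n\int_0^l EI\varphi_1''\varphi_n''\,dx &= \int_0^l q\varphi_1\,dx, \\ c_1\int_0^l EI\varphi_1''\varphi_2''\,dx + c_2\int_0^l EI(\varphi_2'')^2\,dx + \ldots + c_n\int_0^l EI\varphi_2''\varphi_n''\,dx &= \int_0^l q\varphi_2\,dx, \\ &\ \ \vdots \\ c_1\int_0^l EI\varphi_1''\varphi_n''\,dx + c_2\int_0^l EI\varphi_2''\varphi_n''\,dx + \ldots + c_n\int_0^l EI(\varphi_n'')^2\,dx &= \int_0^l q\varphi_n\,dx. \end{aligned} \tag{40} $$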

The first difference between the Galerkin method and the Ritz method is apparent: the systems (39) and (40) are formally different. (In reality they are identical; see the end of this Remark.) The basic difference, however, lies in the quite different approach to the same problem. Engineers using the Ritz method start directly from the functional of the potential energy, without any prior examination of the corresponding differential equation and the properties of the corresponding differential operator. Some caution is needed here. However, the related questions are usually very simple. In particular, in the given case it can easily be shown, under simple assumptions on the functions $E(x)$ and $I(x)$, that the operator concerned is positive definite on a corresponding linear set of admissible functions, so that it is justified to seek an approximate generalized solution of (35), (36) by either the Ritz method or the Galerkin method. The problem of finding this generalized solution is equivalent to minimizing the functional (compare with Eq. (1))

$$ F(u) = \int_0^l EI\,(u'')^2\,dx - 2\int_0^l q u\,dx \tag{41} $$

on an appropriate space $H_A$. Here the energy functional (37) equals one half of the functional (41). Hence both approaches presented are correct, provided the chosen base satisfies the assumptions listed above.

Because the functions $\varphi_i$ satisfy, by assumption, conditions (36), integration by parts

$$ \begin{aligned} \int_0^l (EI\varphi_i'')''\varphi_k\,dx &= \Big[(EI\varphi_i'')'\varphi_k\Big]_0^l - \int_0^l (EI\varphi_i'')'\varphi_k'\,dx = -\Big[EI\varphi_i''\varphi_k'\Big]_0^l + \int_0^l EI\varphi_i''\varphi_k''\,dx \\ &= \int_0^l EI\varphi_i''\varphi_k''\,dx \end{aligned} \tag{42} $$

leads to the simple conclusion that the coefficients of the systems (39) and (40) are identical.

The whole example is, of course, only illustrative, since problem (35), (36) can easily be solved by successive integration of equation (35).
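The identity of (39) and (40) stated after (42) is easy to confirm in exact arithmetic. The sketch below takes $l = 1$, a hypothetical stiffness $E(x)I(x) = 1 + x$ and the basis $\varphi_k = x^{k+1}(1-x)^2$, which satisfies the clamped conditions (36); these choices and all helper names are ours, for illustration only.

```python
from fractions import Fraction


def poly_mul(p, q):
    """Product of polynomials given as coefficient lists (index = power)."""
    out = [Fraction(0)] * (len(p) + len(q) - 1)
    for i, a in enumerate(p):
        for j, b in enumerate(q):
            out[i + j] += a * b
    return out


def poly_der(p):
    return [i * p[i] for i in range(1, len(p))] or [Fraction(0)]


def poly_int01(p):
    """Integral of the polynomial over (0, 1)."""
    return sum(Fraction(c) / (i + 1) for i, c in enumerate(p))


EI = [Fraction(1), Fraction(1)]  # hypothetical E(x) I(x) = 1 + x on (0, 1)


def basis(k):
    # phi_k = x^(k+1) (1 - x)^2: phi_k(0) = phi_k'(0) = phi_k(1) = phi_k'(1) = 0
    p = [Fraction(0)] * (k + 4)
    p[k + 1], p[k + 2], p[k + 3] = Fraction(1), Fraction(-2), Fraction(1)
    return p


def beam_matrices(n):
    phis = [basis(k) for k in range(1, n + 1)]
    second = [poly_der(poly_der(p)) for p in phis]
    # Galerkin (39): entries int_0^1 (EI phi_i'')'' phi_k dx
    fourth = [poly_der(poly_der(poly_mul(EI, s))) for s in second]
    galerkin = [[poly_int01(poly_mul(fourth[i], phis[k])) for i in range(n)]
                for k in range(n)]
    # Ritz (40): entries int_0^1 EI phi_i'' phi_k'' dx
    ritz = [[poly_int01(poly_mul(poly_mul(EI, second[i]), second[k])) for i in range(n)]
            for k in range(n)]
    return galerkin, ritz


G, R = beam_matrices(4)
print(G == R)  # True: the boundary terms in (42) vanish
```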

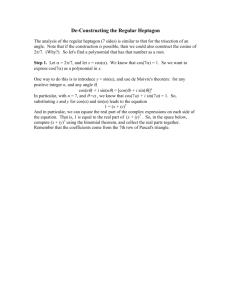

The finite element method (FEM)

As already mentioned, FEM is, in essence, the Ritz method with a base formed by functions of a very special type. To be concrete, let us solve approximately the problem

$$ Au = -u'' + \left(1 + \cos^2 x\right)u, \tag{43} $$

$$ u(0) = 0, \qquad u(\pi) = 0. \tag{44} $$

Note that the associated operator $A$ is positive definite on the set of its admissible functions $D_A = \{u;\ u \in C^2[0,\pi],\ u(0) = 0,\ u(\pi) = 0\}$; see the Problem of Lecture 2. Divide the interval $[0,\pi]$ into ten subintervals of length $h = \pi/10$ and choose as basis functions the functions

$$ v_1(x),\ v_2(x),\ \ldots,\ v_9(x) \tag{45} $$

sketched in Fig. 1. For example, the function $v_2(x)$ is defined on the interval $[0,\pi]$ by

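$$ v_2(x) = \begin{cases} 0 & \text{for } 0 \le x \le \dfrac{\pi}{10}, \\ \dfrac{10x}{\pi} - 1 & \text{for } \dfrac{\pi}{10} \le x \le \dfrac{2\pi}{10}, \\ 3 - \dfrac{10x}{\pi} & \text{for } \dfrac{2\pi}{10} \le x \le \dfrac{3\pi}{10}, \\ 0 & \text{for } \dfrac{3\pi}{10} \le x \le \pi. \end{cases} \tag{46} $$

Fig. 1. Piecewise linear ("triangle") basis functions $v_1(x), \ldots, v_9(x)$ on $[0,\pi]$.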

The functions just presented are examples of so-called spline functions. In higher dimensions such functions are defined similarly; for example, for $N = 2$ the "triangles" in Fig. 1 are replaced by "pyramids".

Clearly, if these functions are used as basis functions, system (20) (not (34)) must be applied. For problem (43), (44) we have

$$ (v_i, v_k)_A = \int_0^\pi \left[v_i'v_k' + \left(1+\cos^2 x\right)v_iv_k\right]dx \tag{47} $$

and

$$ (Av_i, v_k) = \int_0^\pi \left[-v_i'' + \left(1+\cos^2 x\right)v_i\right]v_k\,dx. \tag{48} $$

For the functions (45), the integral (47) makes sense, because these functions have piecewise continuous derivatives, while the integral (48) loses its meaning, because the second derivatives of these functions do not exist at the nodes. For equations of order higher than two, smoother functions than piecewise linear splines are used, namely piecewise polynomial functions of sufficiently high degree.

Note that if functions of type (46) are used to solve problem (43), (44), the approximate solution is obtained as a piecewise linear (thus not smooth) function. (Of course, this is not specific to problem (43), (44).) Thus, to obtain a good approximation of the solution, we have to choose a sufficiently fine division of the interval $[0,\pi]$, which means solving the corresponding Ritz system for a large number of unknowns. It follows that a computer is needed when FEM is applied, the more so the higher the number of dimensions (in the case of partial differential equations).

In spite of some drawbacks, this method is one of the most widely used in applications. One of its greatest advantages is that it can be largely automated, and programs for relatively general cases can be designed directly. Numerical stability is ensured. The Ritz matrix is in this case a band matrix (or a matrix of a related type), and solving a system with such a matrix requires substantially fewer operations than with a "full" matrix. A further advantage of this method is that it applies well to "arbitrary" domains, e.g. multiply connected ones (with "holes"), where choosing a suitable base for the classical Ritz method could be difficult. FEM will be discussed further in the next lectures.
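The assembly of the Ritz (stiffness) system with hat functions of type (46), and the band structure mentioned above, can be sketched in Python as follows. Since only the operator of (43) is reproduced here, we assume the hypothetical load $f(x) = (2 + \cos^2 x)\sin x$, for which $u = \sin x$ is the exact solution; element integrals of (47) and of $(f, v_i)$ use Simpson's rule, and the tridiagonal system is solved by the Thomas algorithm in $O(n)$ operations. All function names are ours.

```python
import math


def thomas(lower, diag, upper, rhs):
    """Solve a tridiagonal system in O(n) operations (Thomas algorithm).
    Row i reads lower[i]*x[i-1] + diag[i]*x[i] + upper[i]*x[i+1] = rhs[i]."""
    n = len(diag)
    cp, dp = [0.0] * n, [0.0] * n
    cp[0], dp[0] = upper[0] / diag[0], rhs[0] / diag[0]
    for i in range(1, n):
        denom = diag[i] - lower[i] * cp[i - 1]
        cp[i] = upper[i] / denom
        dp[i] = (rhs[i] - lower[i] * dp[i - 1]) / denom
    x = [0.0] * n
    x[-1] = dp[-1]
    for i in range(n - 2, -1, -1):
        x[i] = dp[i] - cp[i] * x[i + 1]
    return x


def fem_nodal_values(n_elem, coef, load, length=math.pi):
    """Ritz system (20) with hat functions for -u'' + coef(x) u = load(x),
    u(0) = u(length) = 0; returns the values at the interior nodes."""
    h = length / n_elem
    n = n_elem - 1
    diag, lower, upper, rhs = [0.0] * n, [0.0] * n, [0.0] * n, [0.0] * n
    for e in range(n_elem):
        x0 = e * h
        pts = (x0, x0 + h / 2, x0 + h)                  # Simpson nodes on the element
        wts = (h / 6, 4 * h / 6, h / 6)
        left, right = (1.0, 0.5, 0.0), (0.0, 0.5, 1.0)  # local hats at the nodes
        m_ll = sum(w * coef(x) * sl * sl for w, x, sl in zip(wts, pts, left))
        m_rr = sum(w * coef(x) * sr * sr for w, x, sr in zip(wts, pts, right))
        m_lr = sum(w * coef(x) * sl * sr for w, x, sl, sr in zip(wts, pts, left, right))
        il, ir = e - 1, e                               # unknowns at the element ends
        if il >= 0:
            diag[il] += 1 / h + m_ll
            rhs[il] += sum(w * load(x) * sl for w, x, sl in zip(wts, pts, left))
        if ir < n:
            diag[ir] += 1 / h + m_rr
            rhs[ir] += sum(w * load(x) * sr for w, x, sr in zip(wts, pts, right))
        if il >= 0 and ir < n:
            upper[il] += -1 / h + m_lr
            lower[ir] += -1 / h + m_lr
    return thomas(lower, diag, upper, rhs)


def coef(x):
    return 1.0 + math.cos(x) ** 2                      # coefficient in (43)


def load(x):
    return (2.0 + math.cos(x) ** 2) * math.sin(x)      # hypothetical load, exact u = sin x


def max_nodal_error(n_elem):
    h = math.pi / n_elem
    values = fem_nodal_values(n_elem, coef, load)
    return max(abs(u - math.sin((i + 1) * h)) for i, u in enumerate(values))


print(max_nodal_error(10), max_nodal_error(20))
```

Refining from 10 to 20 elements should reduce the nodal error roughly by a factor of four, in line with the second-order accuracy of piecewise linear elements.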

Inhomogeneous boundary conditions

The theory explained so far assumes that the domain of definition $D_A$ of the operator $A$ is a linear set. As we have seen, this assumption ensures that the approximation of the generalized solution of a given problem can be sought as linear combinations of functions from $D_A$ or, at least, from the space $H_A$, i.e., again using elements of a certain linear set.

For example, in the case of the Dirichlet problem for Poisson's equation

$$ -\Delta u = f \ \text{in } \Omega, \qquad u = 0 \ \text{on } \partial\Omega, \tag{49} $$

we chose as the linear set $D_A$ the functions twice continuously differentiable in $\bar\Omega$ and satisfying the boundary condition $(49)_2$. By contrast, the set $M$ of functions with the same smoothness properties as the functions of $D_A$ but satisfying

$$ u = 2 \ \text{on } \partial\Omega \tag{50} $$

is not a linear set: if $u$ and $v$ are two functions from $M$, then $u + v = 4$ on $\partial\Omega$, so $u + v$ does not satisfy the condition $u + v = 2$ on $\partial\Omega$ and thus does not belong to $M$.

Similarly, the set of all functions with the same smoothness properties as the functions of $D_A$ and satisfying the boundary condition

$$ \frac{\partial u}{\partial n} + u = 0 \ \text{on } \partial\Omega \tag{51} $$

forms a linear set, while the set of functions with the same smoothness properties but satisfying the boundary condition

$$ \frac{\partial u}{\partial n} + u = 3 \ \text{on } \partial\Omega \tag{52} $$

does not.

Thus the question arises how to incorporate differential equations with inhomogeneous boundary conditions into the theoretical framework described, how to formulate such problems when the domain of definition is not a linear set, and what is to be understood by the solution, or the generalized solution, of such a problem.

One possible approach to this question is quite elementary: to reduce a problem with inhomogeneous boundary conditions to a problem with homogeneous boundary conditions. As an example, consider the Dirichlet problem for Poisson's equation with a prescribed continuous function $g$ on the boundary:

$$ -\Delta u = f \ \text{in } \Omega, \qquad u = g \ \text{on } \partial\Omega. \tag{53} $$

Suppose we succeed in constructing a sufficiently smooth function $w$ in $\bar\Omega$ satisfying the boundary condition $(53)_2$ and such that

$$ \Delta w \in L_2(\Omega). \tag{54} $$

If we seek the solution of problem (53) in the form

$$ u = w + z, \tag{55} $$

we get for the function $z$ the conditions

$$ -\Delta z = f + \Delta w \ \text{in } \Omega, \qquad z = 0 \ \text{on } \partial\Omega, \tag{56} $$

which is the problem discussed in detail above. The same approach can be used for a general operator $A$. Nevertheless, it should be pointed out that in the general case it is not easy to find a function $w$ whose properties allow the problem with inhomogeneous boundary conditions to be reduced to one with homogeneous boundary conditions. However, in a great number of practical problems this question is simple to resolve, and the function $w$ can be written down straightforwardly. A simple example: solve the Dirichlet problem for Laplace's equation $\Delta u = 0$ in the domain $\Omega = (0<x<a,\ 0<y<b)$ with the boundary condition $g$ on $\partial\Omega$ given by

$$ g = \sin\frac{\pi x}{a} \ \text{for } 0 \le x \le a,\ y = 0; \qquad g = 0 \ \text{on the remaining part of } \partial\Omega. \tag{57} $$

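Evidently, the function $w$ can be chosen as

$$ w = \left(1 - \frac{y}{b}\right)\sin\frac{\pi x}{a}, \tag{58} $$

which satisfies conditions (57) and reduces our problem to the already familiar problem

$$ -\Delta z = -\frac{\pi^2}{a^2}\left(1 - \frac{y}{b}\right)\sin\frac{\pi x}{a} \ \text{in } \Omega, \qquad z = 0 \ \text{on } \partial\Omega. \tag{59} $$

If $z_0$ is its (generalized) solution, then the solution of the original problem is $u_0 = w + z_0$.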
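A minimal check of the reduction (55)-(59), with hypothetical function names: the sketch solves (59) with the series (1l)-(1m) and adds $w$ from (58). In (1m) only $m = 1$ survives, and with $\int_0^b (1 - y/b)\sin(n\pi y/b)\,dy = b/(n\pi)$ one gets $c_{1n} = -2/\big(n\pi(1 + n^2a^2/b^2)\big)$. For comparison we use $\sin(\pi x/a)\sinh(\pi(b-y)/a)/\sinh(\pi b/a)$, which one verifies directly to be harmonic and to satisfy (57).

```python
import math


def reduced_solution(x, y, a=1.0, b=1.0, terms=2000):
    """u0 = w + z0 for Laplace's equation with boundary data (57):
    w from (58), z0 from the sine series (1l)-(1m) applied to problem (59)."""
    w = (1.0 - y / b) * math.sin(math.pi * x / a)
    z = 0.0
    for n in range(1, terms + 1):
        # only m = 1 contributes; coefficient c_1n from (1m)
        c_1n = -2.0 / (n * math.pi * (1.0 + (n * a / b) ** 2))
        z += c_1n * math.sin(math.pi * x / a) * math.sin(n * math.pi * y / b)
    return w + z


def closed_form(x, y, a=1.0, b=1.0):
    """Separated solution sin(pi x/a) sinh(pi (b - y)/a) / sinh(pi b/a), used as a check."""
    return (math.sin(math.pi * x / a) * math.sinh(math.pi * (b - y) / a)
            / math.sinh(math.pi * b / a))


print(reduced_solution(0.5, 0.5), closed_form(0.5, 0.5))
```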

Remark 6. A more suitable formulation of problems with inhomogeneous boundary conditions has been built on the concepts of traces and Sobolev spaces and on the Lax-Milgram theorem. The interested reader is referred to the specialized literature, e.g. Rektorys, K., Variational Methods in Mathematics, Science and Engineering, Reidel, Dordrecht-Boston, 1979.

Problems

1. Solve the Dirichlet problem for Poisson's equation in the domain $\Omega = (-a<x<a,\ -b<y<b)$ with homogeneous boundary conditions on $\partial\Omega$, i.e.

$$ -\Delta u = 1 \ \text{in } \Omega, \qquad u = 0 \ \text{on } \partial\Omega, \tag{P1.1} $$

using the method of orthogonal series.

Hint.

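As a base in the energetic space $H_A = H_{-\Delta}$, the complete orthogonal system of functions

$$ \cos\frac{m\pi x}{2a}\cos\frac{n\pi y}{2b}, \qquad m, n = 1, 3, 5, \ldots \tag{P1.2} $$

can be chosen (because problem (P1.1) is symmetric in $x$ and in $y$, it suffices to use functions that are even in both variables). Denote these functions as follows:

$$ \begin{aligned} \varphi_1 &= \cos\frac{\pi x}{2a}\cos\frac{\pi y}{2b}, \\ \varphi_2 &= \cos\frac{3\pi x}{2a}\cos\frac{\pi y}{2b}, \qquad \varphi_3 = \cos\frac{\pi x}{2a}\cos\frac{3\pi y}{2b}, \\ \varphi_4 &= \cos\frac{5\pi x}{2a}\cos\frac{\pi y}{2b}, \qquad \varphi_5 = \cos\frac{3\pi x}{2a}\cos\frac{3\pi y}{2b}, \qquad \varphi_6 = \cos\frac{\pi x}{2a}\cos\frac{5\pi y}{2b}, \\ &\ \ \vdots \end{aligned} \tag{P1.3} $$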

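As we know,

$$ \int_{-a}^{a} \cos\frac{j\pi x}{2a}\cos\frac{m\pi x}{2a}\,dx = \begin{cases} 0, & m \ne j, \\ a, & m = j, \end{cases} \tag{P1.4} $$

and similarly

$$ \int_{-b}^{b} \cos\frac{k\pi y}{2b}\cos\frac{n\pi y}{2b}\,dy = \begin{cases} 0, & n \ne k, \\ b, & n = k. \end{cases} \tag{P1.5} $$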

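Therefore also

$$ \begin{aligned} &\left(\cos\frac{j\pi x}{2a}\cos\frac{k\pi y}{2b},\ \cos\frac{m\pi x}{2a}\cos\frac{n\pi y}{2b}\right) = \int_{-a}^{a}\!\int_{-b}^{b} \cos\frac{j\pi x}{2a}\cos\frac{k\pi y}{2b}\,\cos\frac{m\pi x}{2a}\cos\frac{n\pi y}{2b}\,dx\,dy \\ &\quad = \int_{-a}^{a} \cos\frac{j\pi x}{2a}\cos\frac{m\pi x}{2a}\,dx \int_{-b}^{b} \cos\frac{k\pi y}{2b}\cos\frac{n\pi y}{2b}\,dy = \begin{cases} ab, & m = j \text{ and simultaneously } n = k, \\ 0 & \text{in other cases.} \end{cases} \end{aligned} \tag{P1.6} $$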

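Instead of (1h) we have

$$ \begin{aligned} &\left(\cos\frac{j\pi x}{2a}\cos\frac{k\pi y}{2b},\ \cos\frac{m\pi x}{2a}\cos\frac{n\pi y}{2b}\right)_{-\Delta} = \left(\frac{j^2\pi^2}{4a^2} + \frac{k^2\pi^2}{4b^2}\right)\int_{-a}^{a}\!\int_{-b}^{b} \cos\frac{j\pi x}{2a}\cos\frac{k\pi y}{2b}\,\cos\frac{m\pi x}{2a}\cos\frac{n\pi y}{2b}\,dx\,dy \\ &\quad = \begin{cases} ab\left(\dfrac{j^2\pi^2}{4a^2} + \dfrac{k^2\pi^2}{4b^2}\right), & m = j \text{ and simultaneously } n = k, \\ 0 & \text{in other cases.} \end{cases} \end{aligned} $$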

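In the present case, the orthonormal system corresponding to (1i) has the form

$$ \begin{aligned} \psi_1 &= \frac{1}{\sqrt{ab\left(\dfrac{\pi^2}{4a^2} + \dfrac{\pi^2}{4b^2}\right)}}\cos\frac{\pi x}{2a}\cos\frac{\pi y}{2b}, \\ \psi_2 &= \frac{1}{\sqrt{ab\left(\dfrac{9\pi^2}{4a^2} + \dfrac{\pi^2}{4b^2}\right)}}\cos\frac{3\pi x}{2a}\cos\frac{\pi y}{2b}, \qquad \psi_3 = \frac{1}{\sqrt{ab\left(\dfrac{\pi^2}{4a^2} + \dfrac{9\pi^2}{4b^2}\right)}}\cos\frac{\pi x}{2a}\cos\frac{3\pi y}{2b}, \\ \psi_4 &= \frac{1}{\sqrt{ab\left(\dfrac{25\pi^2}{4a^2} + \dfrac{\pi^2}{4b^2}\right)}}\cos\frac{5\pi x}{2a}\cos\frac{\pi y}{2b}, \qquad \psi_5 = \frac{1}{\sqrt{ab\left(\dfrac{9\pi^2}{4a^2} + \dfrac{9\pi^2}{4b^2}\right)}}\cos\frac{3\pi x}{2a}\cos\frac{3\pi y}{2b}, \\ \psi_6 &= \frac{1}{\sqrt{ab\left(\dfrac{\pi^2}{4a^2} + \dfrac{25\pi^2}{4b^2}\right)}}\cos\frac{\pi x}{2a}\cos\frac{5\pi y}{2b}, \\ &\ \ \vdots \end{aligned} \tag{P1.7} $$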

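We get the solution of the problem in the form

$$ u_0 = \sum_{s=1}^{\infty} a_s\psi_s, \tag{P1.8} $$

where

$$ \begin{aligned} a_1 = (f,\psi_1) &= \frac{1}{\sqrt{ab\left(\dfrac{\pi^2}{4a^2} + \dfrac{\pi^2}{4b^2}\right)}}\int_{-a}^{a}\!\int_{-b}^{b}\cos\frac{\pi x}{2a}\cos\frac{\pi y}{2b}\,dx\,dy, \\ a_2 = (f,\psi_2) &= \frac{1}{\sqrt{ab\left(\dfrac{9\pi^2}{4a^2} + \dfrac{\pi^2}{4b^2}\right)}}\int_{-a}^{a}\!\int_{-b}^{b}\cos\frac{3\pi x}{2a}\cos\frac{\pi y}{2b}\,dx\,dy, \end{aligned} $$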

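etc., so that, with regard to the form of the functions (P1.7), we can write

$$ u_0 = \sum_{m,n=1,3,\ldots} c_{mn}\cos\frac{m\pi x}{2a}\cos\frac{n\pi y}{2b}, \tag{P1.9} $$

where

$$ c_{mn} = \frac{1}{ab\left(\dfrac{m^2\pi^2}{4a^2} + \dfrac{n^2\pi^2}{4b^2}\right)}\int_{-a}^{a}\!\int_{-b}^{b}\cos\frac{m\pi x}{2a}\cos\frac{n\pi y}{2b}\,dx\,dy = \frac{16\,(-1)^{\frac{m-1}{2}}(-1)^{\frac{n-1}{2}}}{mn\pi^2\left(\dfrac{m^2\pi^2}{4a^2} + \dfrac{n^2\pi^2}{4b^2}\right)}. \tag{P1.10} $$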

Numerical results obtained using MAPLE8 are presented for $a = b = 1$ in problem3-1.mws.

Sometimes we can seek the solution in a form similar to (P1.8),

$$ u_0(x,y) = \sum_{n=1}^{\infty} c_n u_n(x,y), \tag{P1.11} $$

where $u_n(x,y)$ has the form $X_n(x)Y_n(y)$ and satisfies the given differential equation and some of the imposed boundary conditions. To find the functions $X_n(x)$, $Y_n(y)$ for problem (P1.1), we first reduce Poisson's equation $(\text{P1.1})_1$ to Laplace's equation. To do so, we split the solution $u_0$ into two particular solutions $v_0$ and $w_0$:

$$ u_0(x,y) = v_0(x) + w_0(x,y), \tag{P1.12} $$

where $v_0(x)$ satisfies the ordinary differential equation

$$ -\frac{d^2 v_0}{dx^2} = 1 \tag{P1.13} $$

with the boundary conditions

$$ v_0(\pm a) = 0, \tag{P1.14} $$

and the function $w_0(x,y)$ satisfies Laplace's equation

$$ \Delta w_0(x,y) = 0 \tag{P1.15} $$

and the boundary conditions

$$ w_0(\pm a, y) = 0, \qquad w_0(x, \pm b) = -v_0(x). \tag{P1.16} $$

Integrating (P1.13), we obtain

$$ v_0(x) = \frac{a^2}{2}\left(1 - \frac{x^2}{a^2}\right). \tag{P1.17} $$

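The function $w_0(x,y)$ is sought in the form $w_0(x,y) = \sum_{n=1}^{\infty} c_n w_n(x,y)$ with $w_n = X_n(x)Y_n(y)$. Substituting $w_n(x,y)$ into (P1.15) leads to

$$ \frac{X_n''}{X_n} + \frac{Y_n''}{Y_n} = 0, \tag{P1.18} $$

hence

$$ \frac{X_n''}{X_n} = -k_n, \qquad \frac{Y_n''}{Y_n} = k_n, \qquad k_n > 0. \tag{P1.19} $$

The solution of the first of the differential equations (P1.19) is

$$ X_n(x) = A_n\sin\sqrt{k_n}\,x + B_n\cos\sqrt{k_n}\,x. \tag{P1.20} $$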

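The boundary conditions $(\text{P1.16})_1$ require $X_n(\pm a) = 0$, i.e. $A_n\sin\sqrt{k_n}\,a = 0$ and $B_n\cos\sqrt{k_n}\,a = 0$. Because the problem is symmetric in $x$, we seek even functions $X_n$ ($B_n \ne 0$); hence

$$ k_n = \frac{n^2\pi^2}{4a^2}, \qquad n = 1, 3, 5, \ldots, \qquad A_n = 0. \tag{P1.21} $$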

Using (P1.21), the second equation (P1.19) has the general solution

$$ Y_n(y) = C_n\sinh\frac{n\pi y}{2a} + D_n\cosh\frac{n\pi y}{2a}, \tag{P1.22} $$

where, owing to the symmetry of the boundary conditions $(\text{P1.16})_2$, $C_n = 0$. Thus we can finally seek the solution $w_0(x,y)$ in the form

$$ w_0(x,y) = \sum_{n=1,3,\ldots} c_n\cosh\frac{n\pi y}{2a}\cos\frac{n\pi x}{2a}, \tag{P1.23} $$

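where $c_n$ stands for $B_nD_n$. The constants $c_n$ have to be determined so that the boundary conditions $(\text{P1.16})_2$ are (approximately) satisfied. To this end, the function $v_0$ is expanded into the Fourier series

$$ v_0(x) = \frac{a^2}{2}\left(1 - \frac{x^2}{a^2}\right) = \frac{a^2}{2}\sum_{n=1,3,\ldots} v_n\cos\frac{n\pi x}{2a}, \tag{P1.24} $$

where

$$ v_n = \frac{1}{a}\int_{-a}^{a}\left(1 - \frac{x^2}{a^2}\right)\cos\frac{n\pi x}{2a}\,dx = \frac{32}{n^3\pi^3}\sin\frac{n\pi}{2} = \frac{32}{n^3\pi^3}(-1)^{\frac{n-1}{2}}, \qquad n = 1, 3, \ldots \tag{P1.25} $$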

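(see problem3-1.mws). Thus, from $(\text{P1.16})_2$, (P1.23), (P1.24) and (P1.25) it follows that

$$ w_0(x,b) = \sum_{n=1,3,\ldots} c_n\cosh\frac{n\pi b}{2a}\cos\frac{n\pi x}{2a} = -\frac{a^2}{2}\sum_{n=1,3,\ldots}\frac{32}{n^3\pi^3}(-1)^{\frac{n-1}{2}}\cos\frac{n\pi x}{2a}, \tag{P1.26} $$

whence

$$ c_n = -\frac{16a^2}{n^3\pi^3}\,\frac{(-1)^{\frac{n-1}{2}}}{\cosh\dfrac{n\pi b}{2a}}. \tag{P1.27} $$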

The complete solution is then obtained from (P1.12):

$$ u_0(x,y) = \frac{a^2}{2}\left(1 - \frac{x^2}{a^2}\right) - \frac{16a^2}{\pi^3}\sum_{n=1,3,\ldots}\frac{(-1)^{\frac{n-1}{2}}}{n^3}\,\frac{\cosh\dfrac{n\pi y}{2a}}{\cosh\dfrac{n\pi b}{2a}}\cos\frac{n\pi x}{2a}. \tag{P1.28} $$

Numerical results obtained using MAPLE8 are again presented for $a = b = 1$ in problem3-1.mws.

It is not surprising that the second method exhibits better convergence, because the basis functions in (P1.23) satisfy the differential equation in $\Omega$, in contrast to the basis functions (P1.2), which satisfy only the boundary conditions.
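The two solution methods of this problem can be compared directly. The following Python sketch (our own, not the MAPLE worksheet problem3-1.mws) evaluates the truncated double series (P1.9)-(P1.10) and the single series (P1.28) for $a = b = 1$; the function names and truncation limits are hypothetical.

```python
import math


def double_series(x, y, a=1.0, b=1.0, max_index=199):
    """Truncated orthogonal series (P1.9)-(P1.10) for -Delta u = 1 on (-a,a)x(-b,b)."""
    u = 0.0
    for m in range(1, max_index + 1, 2):
        for n in range(1, max_index + 1, 2):
            sign = (-1) ** ((m - 1) // 2 + (n - 1) // 2)
            energy = m**2 * math.pi**2 / (4 * a**2) + n**2 * math.pi**2 / (4 * b**2)
            c_mn = 16 * sign / (m * n * math.pi**2 * energy)
            u += c_mn * math.cos(m * math.pi * x / (2 * a)) * math.cos(n * math.pi * y / (2 * b))
    return u


def single_series(x, y, a=1.0, b=1.0, max_index=15):
    """Truncated solution (P1.28): v0 from (P1.17) plus the cosh-cos series."""
    u = a**2 / 2 * (1 - x**2 / a**2)
    for n in range(1, max_index + 1, 2):
        sign = (-1) ** ((n - 1) // 2)
        ratio = math.cosh(n * math.pi * y / (2 * a)) / math.cosh(n * math.pi * b / (2 * a))
        u -= 16 * a**2 / math.pi**3 * sign / n**3 * ratio * math.cos(n * math.pi * x / (2 * a))
    return u


print(double_series(0.0, 0.0), single_series(0.0, 0.0))
```

At interior points the single series needs only a few terms because of the factor $\cosh(n\pi y/2a)/\cosh(n\pi b/2a)$; near $y = \pm b$ it converges more slowly (like the Fourier series (P1.24)), and for very large $n$ the cosh values would overflow, which is why the truncation is kept small.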

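2. Using the method of orthogonal series, solve the problem of the deflection $w$ of a simply supported square plate

$$ \begin{aligned} &\Delta^2 w = \frac{\partial^4 w}{\partial x^4} + 2\frac{\partial^4 w}{\partial x^2\,\partial y^2} + \frac{\partial^4 w}{\partial y^4} = \frac{q}{D} \quad \text{in } 0<x<a,\ 0<y<b, \\ &w = 0,\ \ \frac{\partial^2 w}{\partial x^2} = 0 \ \text{for } x = 0,\ x = a; \qquad w = 0,\ \ \frac{\partial^2 w}{\partial y^2} = 0 \ \text{for } y = 0,\ y = b, \end{aligned} \tag{P2.1} $$

where the transverse load is $q(x,y) = q_0 = \text{const}$.

Hint: Use the basis functions from Example 1.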
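Following the hint, a hand solution can be checked numerically. With the sine base of Example 1 one has $\Delta^2\big(\sin\frac{m\pi x}{a}\sin\frac{n\pi y}{b}\big) = \pi^4\big(\frac{m^2}{a^2}+\frac{n^2}{b^2}\big)^2\sin\frac{m\pi x}{a}\sin\frac{n\pi y}{b}$, so the orthogonal series method leads to the classical double sine (Navier-type) series sketched below. The function name and the truncation are hypothetical; this is one way to verify a result, not the prescribed solution.

```python
import math


def plate_deflection(x, y, a, b, q0=1.0, D=1.0, max_index=99):
    """Double sine series for Delta^2 w = q0/D on (0,a)x(0,b), simply supported.
    Coefficient of sin(m pi x/a) sin(n pi y/b): the load coefficient
    16 q0/(pi^2 m n) (m, n odd) divided by D pi^4 (m^2/a^2 + n^2/b^2)^2."""
    w = 0.0
    for m in range(1, max_index + 1, 2):
        for n in range(1, max_index + 1, 2):
            q_mn = 16.0 * q0 / (math.pi**2 * m * n)
            w_mn = q_mn / (D * math.pi**4 * (m**2 / a**2 + n**2 / b**2) ** 2)
            w += w_mn * math.sin(m * math.pi * x / a) * math.sin(n * math.pi * y / b)
    return w


print(plate_deflection(0.5, 0.5, 1.0, 1.0))  # centre of a unit square plate, q0 = D = 1
```

For a square plate the centre value comes out close to $0.00406\,q_0a^4/D$, and scaling $a = b$ by a factor $\lambda$ multiplies the deflection by $\lambda^4$.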