Eigenvalues and Eigenvectors


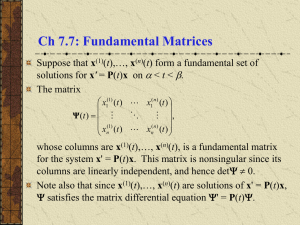

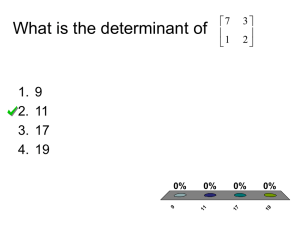

If $Av = \lambda v$ with $v$ nonzero, then $\lambda$ is called an eigenvalue of $A$ and $v$ is called an eigenvector of $A$ corresponding to the eigenvalue $\lambda$.

Agenda: understand the action of $A$ by seeing how it acts on its eigenvectors.

The system to be satisfied by $\lambda$ and $v$ is

$$(A - \lambda I)v = 0.$$

For a given $\lambda$, the only solution is $v = 0$ unless $\det(A - \lambda I) = 0$; in that case a nonzero $v$ satisfying $(A - \lambda I)v = 0$ is guaranteed to exist, and that $v$ is an eigenvector.

$\det(A - \lambda I)$ is a polynomial in $\lambda$ of degree $n$ with leading term $(-\lambda)^n$. This polynomial is called the characteristic polynomial of the matrix $A$, and $\det(A - \lambda I) = 0$ is called the characteristic equation of $A$. The characteristic polynomial has $n$ roots counted with multiplicity; these may or may not be distinct, and they may be complex even when $A$ is real.

For each eigenvalue $\lambda$, the corresponding eigenvectors are the nonzero solutions $v$ of $(A - \lambda I)v = 0$, that is, the nonzero vectors in the null space of $A - \lambda I$. For each eigenvalue we calculate a basis for the null space of $A - \lambda I$; these basis vectors represent the "corresponding eigenvectors", with the understanding that any nonzero linear combination of them is another eigenvector for that $\lambda$. We will see shortly that if $\lambda$ is a nonrepeated root of the characteristic polynomial, then the null space of $A - \lambda I$ has dimension one, so there is only one corresponding eigenvector (a basis of the null space has only one vector), which can be multiplied by any nonzero scalar.

Observation: if $\lambda_i$ is an eigenvalue of $A$ with corresponding eigenvector $v_i$, then for any $\lambda$ (not necessarily an eigenvalue) we have $(A - \lambda I)v_i = (\lambda_i - \lambda)v_i$.

Fun facts about eigenvalues/eigenvectors:

1) Eigenvectors corresponding to different eigenvalues are linearly independent.
2) If $\lambda$ is a nonrepeated root of the characteristic polynomial, then there is exactly one corresponding eigenvector (up to a scalar multiple); in other words, the null space of $A - \lambda I$ has dimension one.
3) If $\lambda$ is a repeated root of the characteristic polynomial with multiplicity $m(\lambda)$, then there is at least one corresponding eigenvector and at most $m(\lambda)$ independent corresponding eigenvectors; in other words, the dimension of the null space of $A - \lambda I$ is between $1$ and $m(\lambda)$.
4) As a consequence of item 1), if the characteristic polynomial has $n$ distinct roots (where $n$ is the degree of the polynomial), then there are $n$ corresponding independent eigenvectors, which in turn form a basis for $\mathbb{R}^n$ (or $\mathbb{C}^n$ if applicable).
5) When bad things happen to good matrices: deficient matrices. A matrix is said to be deficient if it fails to have $n$ independent eigenvectors. This can happen only if (but not necessarily if) some eigenvalue has multiplicity greater than 1. However, it is always true that if an eigenvalue $\lambda$ has multiplicity $m$, then the null space of $(A - \lambda I)^m$ has dimension $m$. The nonzero vectors in this null space are called generalized eigenvectors.
6) Complex eigenvalues: if $A$ is real, then complex eigenvalues and eigenvectors come in complex-conjugate pairs: if $\lambda$ is an eigenvalue with eigenvector $v$, then $\bar{\lambda}$ is an eigenvalue with eigenvector $\bar{v}$.
7) If $A$ is a triangular matrix, its eigenvalues are its diagonal entries.

Diagonalization

If $A$ has a full set of eigenvectors ($n$ linearly independent eigenvectors) and we put them as the columns of a matrix $V$, then $AV = V\Lambda$, where $\Lambda$ is the diagonal matrix with the eigenvalues (in the order of the corresponding columns of $V$) down the diagonal. Since $V$ is invertible, $V^{-1}AV = \Lambda$; this is called diagonalizing $A$. Equivalently, $A = V\Lambda V^{-1}$.

Calculating powers of $A$: the inner factors $V^{-1}V$ cancel, so

$$A^m = (V\Lambda V^{-1})^m = V\Lambda^m V^{-1},$$

where $\Lambda^m$ is simply the diagonal matrix of the $m$-th powers of the eigenvalues.

In general, if $P$ is any invertible matrix, then $P^{-1}AP$ is called a similarity transformation of $A$. Diagonalizing $A$ consists of finding a $P$ for which the similarity transformation gives a diagonal matrix. Such a $P$ must be a matrix whose columns are a full set of eigenvectors, so $A$ is diagonalizable if and only if it has a full set of eigenvectors.
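As a small check of the diagonalization formulas, here is a sketch in Python (the notes themselves compute with MATLAB; Python with exact `fractions.Fraction` arithmetic is swapped in here so results can be compared exactly). The $2\times 2$ matrix, its hand-computed eigenvectors, and the helper names `matmul`, `inverse_2x2`, `char_poly_2x2`, `power_via_eig` and `power_direct` are illustrative assumptions, not part of the original material. The sketch confirms $AV = V\Lambda$ and that $V\Lambda^m V^{-1}$ reproduces repeated multiplication.

```python
from fractions import Fraction


def matmul(X, Y):
    """Product of two matrices stored as lists of rows."""
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y)))
             for j in range(len(Y[0]))]
            for i in range(len(X))]


def inverse_2x2(M):
    """Exact inverse of an invertible 2x2 matrix via the adjugate formula."""
    (a, b), (c, d) = M
    det = Fraction(a * d - b * c)
    return [[d / det, -b / det], [-c / det, a / det]]


def char_poly_2x2(M):
    """Coefficients of det(M - λI) = λ^2 - tr(M) λ + det(M), highest power first."""
    (a, b), (c, d) = M
    return (1, -(a + d), a * d - b * c)


A = [[4, 1], [2, 3]]
# det(A - λI) = λ^2 - 7λ + 10 = (λ - 5)(λ - 2): two distinct eigenvalues.
V = [[1, 1],
     [1, -2]]          # column 1: eigenvector for λ = 5; column 2: for λ = 2
Lam = [[5, 0],
       [0, 2]]

assert matmul(A, V) == matmul(V, Lam)      # AV = VΛ


def power_via_eig(m):
    """A^m computed as V Λ^m V^{-1}."""
    Lam_m = [[5 ** m, 0], [0, 2 ** m]]
    return matmul(matmul(V, Lam_m), inverse_2x2(V))


def power_direct(M, m):
    """A^m computed by m repeated multiplications, for comparison."""
    result = [[1, 0], [0, 1]]
    for _ in range(m):
        result = matmul(result, M)
    return result


print(char_poly_2x2(A))                          # (1, -7, 10)
print(power_via_eig(3) == power_direct(A, 3))    # True
```

Exact rational arithmetic makes `power_via_eig(m) == power_direct(A, m)` a true equality test rather than a floating-point tolerance check; for larger matrices one would normally use a numerical library instead.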
Given any linearly independent set of $n$ vectors, there is a matrix $A$ that has these as eigenvectors, namely $A = V\Lambda V^{-1}$, where $V$ has these vectors as its columns and $\Lambda$ is any diagonal matrix we wish to specify; the diagonal entries of $\Lambda$ are the eigenvalues.

A similarity transformation can be considered as specifying the action of $A$ in a transformed coordinate system. Given $y = Ax$ as a transformation in $\mathbb{R}^n$, suppose we transform coordinates by $x = Pu$ and $y = Pw$ (so that the new coordinate directions are the columns of $P$). Then $Pw = APu$, so $u$ and $w$ are related by

$$w = (P^{-1}AP)u,$$

i.e. $A$ is transformed to the new matrix $P^{-1}AP$ in the new coordinate system.

If $A$ does not have a full set of eigenvectors, then it cannot be diagonalized (why: if $A = P\Lambda P^{-1}$ with $\Lambda$ diagonal, then $AP = P\Lambda$, and the columns of $P$ are seen to be a full set of eigenvectors). However, one can always find a similarity transformation such that $P^{-1}AP$ has a special upper triangular form, called the Jordan form. We will not delve any further into this. Recall, though, that a square matrix $A$ always has a full set of generalized eigenvectors, even when $A$ itself is deficient.

Additional remark on similarity transformations: if $P^{-1}AP = B$, then $A$ and $B$ have the same characteristic polynomial, the same eigenvalues, and the same eigenvector structure, in the sense that if $v$ is a (generalized) eigenvector of $A$, then $P^{-1}v$ is a (generalized) eigenvector of $B$. An algebraic way of seeing this is to note first that

$$P^{-1}(A - \lambda I)P = B - \lambda I, \qquad P^{-1}(A - \lambda I)^k P = (B - \lambda I)^k,$$

and

$$\det(B - \lambda I) = \det\big(P^{-1}(A - \lambda I)P\big) = \det(P^{-1})\det(A - \lambda I)\det(P) = \det(A - \lambda I),$$

since $1 = \det(P^{-1}P) = \det(P^{-1})\det(P)$. It follows that

$$(A - \lambda I)v = 0 \implies P^{-1}(A - \lambda I)P\,P^{-1}v = 0, \text{ i.e. } (B - \lambda I)(P^{-1}v) = 0,$$

$$(A - \lambda I)^k v = 0 \implies P^{-1}(A - \lambda I)^k P\,P^{-1}v = 0, \text{ i.e. } (B - \lambda I)^k (P^{-1}v) = 0.$$

Symmetric matrices: $A^T = A$ (with $A$ real). Properties:

1) All eigenvalues are real.
2) There is always a full set of eigenvectors.
3) Eigenvectors from different eigenvalues are (automatically) orthogonal to each other.
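Properties 1) and 3) can be seen concretely for a real symmetric $2\times 2$ matrix $\begin{pmatrix} a & b \\ b & d \end{pmatrix}$, whose eigenvalues are $\frac{a+d}{2} \pm \sqrt{\left(\frac{a-d}{2}\right)^2 + b^2}$: the quantity under the square root is a sum of squares, so both eigenvalues are real. The Python sketch below (the names `sym_eig_2x2` and `apply_sym` are illustrative, not from the notes) computes the eigenpairs from this closed form and checks numerically that the two unit eigenvectors are orthogonal.

```python
import math


def sym_eig_2x2(a, b, d):
    """Eigenvalues (larger first) and unit eigenvectors of the real
    symmetric matrix [[a, b], [b, d]], from the closed-form 2x2 formula."""
    mean = (a + d) / 2
    # sqrt(((a - d)/2)^2 + b^2) is a sum of squares under the root,
    # so it is never imaginary: both eigenvalues are real.
    radius = math.hypot((a - d) / 2, b)
    lams = (mean + radius, mean - radius)
    if b == 0:
        # Already diagonal: pair each standard basis vector with its eigenvalue.
        vecs = ((1.0, 0.0), (0.0, 1.0)) if a >= d else ((0.0, 1.0), (1.0, 0.0))
        return lams, vecs
    vecs = []
    for lam in lams:
        # First row of (A - λI)v = 0 is (a - λ)x + b*y = 0, solved by (b, λ - a).
        x, y = b, lam - a
        norm = math.hypot(x, y)
        vecs.append((x / norm, y / norm))
    return lams, tuple(vecs)


def apply_sym(a, b, d, v):
    """Multiply the symmetric matrix [[a, b], [b, d]] by the 2-vector v."""
    return (a * v[0] + b * v[1], b * v[0] + d * v[1])


(l1, l2), (v1, v2) = sym_eig_2x2(1.0, 2.0, -2.0)
print(l1, l2)
print(abs(v1[0] * v2[0] + v1[1] * v2[1]) < 1e-12)   # True: orthogonal
```

The `b == 0` branch handles a matrix that is already diagonal, where the vector $(b, \lambda - a)$ could be the zero vector.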
So, in particular, if all the eigenvalues are distinct, normalizing one eigenvector for each eigenvalue gives an orthonormal basis of $\mathbb{R}^n$ consisting of eigenvectors of $A$. If an eigenvalue has multiplicity greater than 1, you can always arrange for its corresponding eigenvectors to be orthogonal to each other (Gram-Schmidt process). So, finally, you can always scale the orthogonal eigenvectors of $A$ to have magnitude 1 and thus construct a full set of orthonormal eigenvectors of $A$. If $V$ is the matrix whose columns are these eigenvectors, then $V^TV = I$, so that $V^T = V^{-1}$.

Application to quadratic forms:

$$F(x, y, z) = xy + 2z^2 - 5x^2 - 7xz + 13yz$$

By writing a quadratic form as $\mathbf{x}^TA\mathbf{x}$ with $\mathbf{x} = (x, y, z)^T$ and $A$ symmetric, the transformation $\mathbf{x} = V\mathbf{u}$, where the columns of $V$ are an orthonormal set of eigenvectors of $A$, gives new coordinates $\mathbf{u}$ in which the quadratic form is

$$\mathbf{x}^TA\mathbf{x} = \mathbf{u}^TV^TAV\mathbf{u} = \mathbf{u}^TV^{-1}AV\mathbf{u} = \mathbf{u}^T\Lambda\mathbf{u},$$

where $\Lambda$ is the diagonal matrix of eigenvalues. This is called diagonalizing the quadratic form. In terms of the new coordinates, the quadratic form consists purely of a combination of squares of the coordinates, with no "cross terms". In this example the diagonal of $A$ holds the coefficients of $x^2, y^2, z^2$, and each off-diagonal pair holds half the coefficient of the corresponding cross term:

    >> A=[-5 .5 -3.5;.5 0 6.5;-3.5 6.5 2]
    >> A
    A =
       -5.0000    0.5000   -3.5000
        0.5000         0    6.5000
       -3.5000    6.5000    2.0000
    >> [V,D]=eig(A)
    V =
       -0.6880    0.7022   -0.1833
        0.4788    0.6290    0.6125
       -0.5453   -0.3336    0.7690
    D =
       -8.1222         0         0
             0   -2.8892         0
             0         0    8.0114
    >> V'*V   % the columns of V are orthonormal vectors
    ans =
        1.0000   -0.0000    0.0000
       -0.0000    1.0000    0.0000
        0.0000    0.0000    1.0000

So the new quadratic form is

$$xy + 2z^2 - 5x^2 - 7xz + 13yz \approx -8.1222\,u_1^2 - 2.8892\,u_2^2 + 8.0114\,u_3^2, \qquad \text{where } \mathbf{x} = V\mathbf{u}.$$

The new coordinate system is easily displayed within the original $x$-$y$-$z$ coordinates. The columns of $V$ are the new coordinate vectors (shown as red, green and blue unit vectors, respectively), corresponding to the vectors $\mathbf{i}, \mathbf{j}, \mathbf{k}$ in the standard coordinate system.

[Figure: the new coordinate axes $u_1$, $u_2$, $u_3$ drawn in the original $x$-$y$-$z$ coordinates]
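The MATLAB computation above can be cross-checked without MATLAB. The following Python sketch swaps in the classical cyclic Jacobi rotation method (not necessarily what `eig` uses internally; it is chosen only because it is short and works for any real symmetric matrix). Each plane rotation $J$ is chosen so that one off-diagonal entry of $J^TAJ$ becomes zero, and the accumulated product of rotations is an orthogonal $V$ with $V^TAV$ nearly diagonal. The helper names (`jacobi_eig`, `matmul`, `transpose`, `identity`, `F`) and the test point `u` are illustrative assumptions. The eigenvalues may come out in a different order, and eigenvector columns may differ in sign from the MATLAB output, since each eigenvector is determined only up to a scalar multiple.

```python
import math


def matmul(X, Y):
    """Product of two matrices stored as lists of rows."""
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y)))
             for j in range(len(Y[0]))]
            for i in range(len(X))]


def transpose(X):
    return [list(row) for row in zip(*X)]


def identity(n):
    return [[float(i == j) for j in range(n)] for i in range(n)]


def jacobi_eig(A, tol=1e-12, max_sweeps=50):
    """Cyclic Jacobi method for a real symmetric matrix A (list of rows).

    Returns (eigenvalues, V): the columns of V are orthonormal eigenvectors,
    with eigenvalues[k] belonging to column k.
    """
    n = len(A)
    A = [[float(x) for x in row] for row in A]   # work on a float copy
    V = identity(n)
    for _ in range(max_sweeps):
        off = math.sqrt(sum(A[i][j] ** 2
                            for i in range(n) for j in range(n) if i != j))
        if off < tol:
            break
        for p in range(n - 1):
            for q in range(p + 1, n):
                if A[p][q] == 0.0:
                    continue
                # Rotation angle that makes entry (p, q) of J^T A J zero.
                theta = (A[q][q] - A[p][p]) / (2.0 * A[p][q])
                t = 1.0 / (abs(theta) + math.sqrt(theta * theta + 1.0))
                if theta < 0:
                    t = -t
                c = 1.0 / math.sqrt(t * t + 1.0)
                s = t * c
                J = identity(n)
                J[p][p] = J[q][q] = c
                J[p][q], J[q][p] = s, -s
                A = matmul(transpose(J), matmul(A, J))   # similarity transform
                V = matmul(V, J)                         # accumulate rotations
    return [A[i][i] for i in range(n)], V


# Symmetric matrix of F(x, y, z) = xy + 2z^2 - 5x^2 - 7xz + 13yz
A = [[-5.0, 0.5, -3.5],
     [0.5, 0.0, 6.5],
     [-3.5, 6.5, 2.0]]
lams, V = jacobi_eig(A)


def F(x, y, z):
    return x * y + 2 * z**2 - 5 * x**2 - 7 * x * z + 13 * y * z


u = [0.3, -1.2, 0.7]                                   # arbitrary new coordinates
x = [sum(V[i][j] * u[j] for j in range(3)) for i in range(3)]    # x = V u
print(sorted(lams))                                    # compare with diag(D) above
print(F(*x), sum(lam * uj**2 for lam, uj in zip(lams, u)))      # equal up to rounding
```

Because every step is a similarity transformation by an orthogonal matrix, the eigenvalues never change during the iteration; only the off-diagonal entries shrink.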