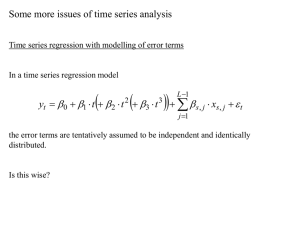



In-Sample Overfitting

There is a serious danger in looking at the simple $R^2$ (or SSR or MSE) to select among competing forecast models. The $R^2$ will never decrease (and the SSR and MSE will never increase) when you add another variable to the right-hand side of the regression, and in practice they almost always strictly improve. So, for example, if we mechanically apply these criteria to select the trend model from among models of the form

$$T_t = \beta_0 + \beta_1 t + \beta_2 t^2 + \dots + \beta_p t^p$$

these criteria will direct us to choose $p$ as large as possible. For the HEPI data, I fit the HEPI to a trend model for $p = 1, 2, 3, 4$. Here are the results.

| p | 1 | 2 | 3 | 4 |
|---|---|---|---|---|
| SSR | 5435 | 833 | 324 | 286 |

So? What's the problem? The problem is that improving the fit of the model over the sample period by adding additional variables can easily lead to poorer out-of-sample forecasts! The more variables you add to the right-hand side of the forecasting model, the higher the variance of the forecast error!

So, there is a tradeoff that needs to be addressed:

- On the one hand, adding variables to the regression improves the fit of the model.
- On the other hand, adding variables to the regression increases the variance of the forecast error.

The simple $R^2$, SSR, and MSE criteria ignore the negative side effects of adding variables to the regression. We need criteria that account for both the benefits and the costs of adding variables to the regression:

- Adjusted $R^2$: select the model that maximizes the adjusted $R^2$.
- Akaike Information Criterion (AIC): select the model that minimizes the AIC.
- Schwarz Information Criterion (SIC): select the model that minimizes the SIC.

Let's first look at each of these measures.

Adjusted $R^2$

$$\bar{R}^2 = 1 - \frac{s^2}{\sum_{t=1}^{T}(y_t - \bar{y})^2/(T-1)}$$

where $s^2 = \mathrm{SSR}/(T-k)$ is the square of the standard error of the regression and $k$ is the number of regression parameters. Note that the denominator term depends only on $y_1,\dots,y_T$ (not on the model that the $y$'s are fit to). So, the only thing that will change as we fit different models will be $s^2$.
So, in effect, maximizing the adjusted $R^2$ amounts to minimizing the squared standard error of the regression, $s^2 = \mathrm{SSR}/(T-k)$, whereas maximizing the simple $R^2$ amounts to minimizing the MSE of the regression, $\mathrm{SSR}/T$. As we increase the number of variables in the model, SSR will decrease, $T-k$ will decrease, and $s^2$ will increase or decrease depending on whether SSR decreases less than or more than proportionally to the (linear) decrease in $T-k$.

AIC and SIC

$$\log(\mathrm{AIC}) = \log(\mathrm{SSR}/T) + \frac{2k}{T}$$

$$\log(\mathrm{SIC}) = \log(\mathrm{SSR}/T) + \frac{k\,\log(T)}{T}$$

(Note: the AIC and SIC reported by EViews are "log(AIC)" and "log(SIC)", but they do not use exactly the same formulas as those given above, which are the ones used in the text, pp. 101-102. In fact, there are a number of variations of the AIC and SIC that are used by different books and programs. They all imply the same results with regard to ordering models according to these criteria.)

Note that the AIC and SIC values will be decreasing as additional variables are added to the regression through the first term but will be increasing through the second term, i.e., the penalty term. The preferred model is the one that minimizes the AIC (or SIC).

Unfortunately, these three criteria (adjusted $R^2$, AIC, SIC) will not always select the same model! That is because of the differences in their penalty functions, as illustrated by Figure 4.13 in your text. The SIC imposes the strongest penalty for additional variables, followed by the AIC and then the adjusted $R^2$. So, when they select different models, the SIC will choose a more "parsimonious" model than the AIC, which, in turn, will choose a more parsimonious model than the adjusted $R^2$.

The AIC and SIC are more commonly used than the adjusted $R^2$. Each of the two has certain (but different) theoretical properties that make it appealing. Which of the two to use in practice when they give different answers is somewhat arbitrary.
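The three criteria above can be computed directly from the SSR of each fitted trend. The following is a minimal sketch, assuming NumPy and a synthetic series (not the HEPI data); the function name `trend_criteria` and the simulated data-generating process are hypothetical choices made only for illustration. It uses the log(AIC) and log(SIC) formulas given above, not the EViews variants.

```python
import numpy as np

def trend_criteria(y, p):
    """Fit a p-th order polynomial trend by OLS and return in-sample criteria."""
    T = len(y)
    t = np.arange(1, T + 1, dtype=float)
    # Rescale time to (0, 1] for numerical stability; the fitted values and
    # SSR are the same as for a polynomial in t itself.
    X = np.vander(t / T, p + 1, increasing=True)
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    resid = y - X @ beta
    ssr = float(resid @ resid)
    k = p + 1                      # number of regression parameters
    s2 = ssr / (T - k)             # squared standard error of the regression
    adj_r2 = 1.0 - s2 / (np.sum((y - y.mean()) ** 2) / (T - 1))
    log_aic = np.log(ssr / T) + 2 * k / T
    log_sic = np.log(ssr / T) + k * np.log(T) / T
    return {"p": p, "SSR": ssr, "adj_R2": adj_r2,
            "log_AIC": log_aic, "log_SIC": log_sic}

# Synthetic example (an assumption): a quadratic trend plus noise.
rng = np.random.default_rng(0)
T = 60
t = np.arange(1, T + 1)
y = 50 + 2.0 * t + 0.05 * t**2 + rng.normal(scale=5.0, size=T)

results = [trend_criteria(y, p) for p in range(1, 7)]
for r in results:
    print(f"p={r['p']}  SSR={r['SSR']:.1f}  adjR2={r['adj_R2']:.4f}  "
          f"logAIC={r['log_AIC']:.3f}  logSIC={r['log_SIC']:.3f}")
```

The SSR column never rises as $p$ grows, while the penalty terms push log(AIC) and log(SIC) back up once extra terms stop paying for themselves. Because $\log(T) > 2$ for $T \ge 8$, the SIC penalty is the larger of the two here.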
If we maintain the KISS principle and select the simpler model when there is not a compelling reason to do otherwise, then we should use the SIC.

Computing these criteria for the polynomial trends for the HEPI:

| p | R-Bar-Square | AIC | SIC |
|---|---|---|---|
| 1 | 0.969 | 7.75 | 7.83 |
| 2 | 0.995 | 5.92 | 6.04 |
| 3 | 0.998 | 5.02 | 5.18 |
| 4 | 0.998 | 4.94 | 5.14 |
| 5 | 0.999 | 3.73 | 3.97 |
| 6 | 0.999 | 3.76 | 4.04 |

| Year | Forecast (p=2) | Forecast (p=5) |
|---|---|---|
| 2004 | 231.5 (Actual) | 231.5 (Actual) |
| 2005 | 238.8 (3.4%) | 244.1 (5.0%) |
| 2006 | 247.0 (3.4%) | 257.8 (5.5%) |
| 2007 | 255.2 (3.3%) | 273.7 (6.0%) |
| 2008 | 263.7 (3.3%) | 292.2 (6.5%) |
| 2009 | 272.2 (3.2%) | 313.7 (7.1%) |
| 2010 | 280.9 (3.2%) | 338.5 (7.6%) |

The AIC and SIC select a 5th-order polynomial in $t$ to represent the trend component of the HEPI. There are, however, a couple of reasons why I might still end up selecting the quadratic trend:

1. The AIC and SIC are in-sample fit criteria. And although they account for the costs of "overfitting" through the inclusion of a penalty term, I am still concerned that extrapolating such a high-order polynomial into the future will be misleading.
2. What might be going on with this series is that the actual trend is a linear or quadratic function of time but the parameters of that function have changed during the sample period. E.g., perhaps

$$y_t = \beta_{0,1} + \beta_{1,1}\,t \quad \text{for } t = 1,\dots,T_0$$

$$y_t = \beta_{0,2} + \beta_{1,2}\,t \quad \text{for } t = T_0+1,\dots,T,\,T+1,\dots$$

There are a number of things that I can do to pursue these possibilities.

Out-of-Sample Fitting

What I am really interested in is the question: Having fit the model over the sample period, how well does it forecast outside of that sample? The in-sample fit criteria that we discussed do not directly answer this question.

Consider the following exercise. Suppose we have a data sample $y_1,\dots,y_T$.

1. Break it up into two parts, $y_1,\dots,y_{T-n}$ (the first $T-n$ observations) and $y_{T-n+1},\dots,y_T$ (the last $n$ observations), where $n \ll T$.
2.
Fit the shortened sample, $y_1,\dots,y_{T-n}$, to various trend models that may seem like plausible choices based on time series plots, in-sample fit criteria, …: linear, quadratic, the one selected by AIC/SIC, log linear, …
3. For each estimated trend model, forecast $y_{T-n+1},\dots,y_T$ and compute the forecast errors $e_1,\dots,e_n$.
4. Compare the errors across the various models using:
   - time series plots (of the forecasts and actual values of $y_{T-n+1},\dots,y_T$; of the forecast errors)
   - tables of the forecasts, actuals, and errors
   - mean squared prediction errors (MSPE),

$$\mathrm{MSPE} = \frac{1}{n}\sum_{i=1}^{n} e_i^2$$

The advantage of this approach is that we are actually comparing the trend models in terms of their out-of-sample forecasting performance. A disadvantage is that the comparison is based on models fit over $T-n$ observations rather than the $T$ observations we have available. (Note that if you do use this approach and, for example, settle on the quadratic model, then when you proceed to construct your forecasts for $T+1, \dots$ you should use the quadratic model fit to the full $T$ observations in your sample.)

Will the fact that, for example, the quadratic trend model outperformed other models in forecasting out of sample based on the "short" sample mean that it will perform best in forecasting beyond the full sample? Not necessarily.

Structural Breaks in the Trend

Suppose that the trend in $y_t$ can be modeled as

$$T_t = \beta_{0,t} + \beta_{1,t}\,t$$

where

$$\beta_{0,t} = \begin{cases} \beta_{0,1} & \text{if } t \le T_0 \\ \beta_{0,2} & \text{if } t > T_0 \end{cases} \qquad \text{and} \qquad \beta_{1,t} = \begin{cases} \beta_{1,1} & \text{if } t \le T_0 \\ \beta_{1,2} & \text{if } t > T_0 \end{cases}$$

In this case,

$$T_{T+h} = \beta_{0,2} + \beta_{1,2}\,(T+h)$$

Problem: How to estimate $\beta_{0,2}$ and $\beta_{1,2}$?

A bad approach: Regress $y_t$ on $1, t$ for $t = 1,\dots,T$, which ignores the break.

Better approaches:

Regress $y_t$ on $1, t$ for $t = T_0+1,\dots,T$. Problems with this approach:

- Not an ideal approach if you want to force either the intercept or the slope coefficient to be fixed over the full sample, $t = 1,\dots,T$, allowing only one of the coefficients to change at $T_0$.
- Does not allow you to test whether the intercept and/or slope changed at $T_0$.
- Does not provide us with estimated deviations from trend for $t = 1,\dots,T_0$, which we will want to use to estimate the seasonal and cyclical components of the series to help us forecast those components of the series.

Introduce dummy variables into the regression to jointly estimate $\beta_{0,1}, \beta_{0,2}, \beta_{1,1}, \beta_{1,2}$. Let

$$D_t = \begin{cases} 0 & \text{if } t = 1,\dots,T_0 \\ 1 & \text{if } t > T_0 \end{cases}$$

Run the regression

$$y_t = \alpha_0 + \alpha_1 D_t + \alpha_2 t + \alpha_3 (D_t\,t) + \varepsilon_t$$

over the full sample, $t = 1,\dots,T$. Then

$$\hat\beta_{0,1} = \hat\alpha_0, \quad \hat\beta_{0,2} = \hat\alpha_0 + \hat\alpha_1, \quad \hat\beta_{1,1} = \hat\alpha_2, \quad \hat\beta_{1,2} = \hat\alpha_2 + \hat\alpha_3$$

Suppose we want to allow $\beta_0$ to change at $T_0$ but we want to force $\beta_1$ to remain fixed (i.e., a shift in the intercept of the trend line): run the regression of $y_t$ on $1$, $D_t$, and $t$ to estimate $\alpha_0$, $\alpha_1$, and $\alpha_2$ ($= \beta_1$).

Notes:

- This approach extends to higher-order polynomials in a straightforward way, allowing one or more parameters to change at one or more points in time.
- This approach can be extended to allow for breaks at unknown time(s).

Exponential (or, Log Linear) Trends

Recall that an alternative to the polynomial trend is the exponential trend model

$$T_t = e^{\beta_0 + \beta_1 t + \beta_2 t^2 + \dots + \beta_p t^p}$$

whose logarithm is a polynomial in $t$, since $\log(e^x) = x$.

Assuming that $y_t = T_t + \varepsilon_t$, we can estimate the $\beta$'s by applying nonlinear least squares (NLS): choose $\beta_0, \beta_1, \dots, \beta_p$ to minimize

$$\sum_{t=1}^{T}\left(y_t - e^{\beta_0 + \beta_1 t + \beta_2 t^2 + \dots + \beta_p t^p}\right)^2$$

This minimization problem must be solved "numerically" (vs. analytically), but most modern regression software (including EViews) is well equipped to solve this problem.

To select $p$ in this case, use the NLS residuals, $y_t - T_t(\hat\beta)$, to compute the AIC and/or the SIC, then select the model that minimizes the AIC and/or the SIC. We can also compare the fit of these exponential trend models to the polynomial trend models by comparing AICs and SICs.

If we do select an estimated exponential trend model, the forecast of $y_{T+h}$ made at time $T$ is

$$\hat{y}_{T+h,T} = T_{T+h}(\hat\beta) + \hat\varepsilon_{T+h,T}$$

A related approach that is commonly used in practice: assume that

$$\log(y_t) = T_t + \varepsilon_t, \qquad T_t = \beta_0 + \beta_1 t + \dots + \beta_p t^p$$

(The $\varepsilon$'s are deviations of $\log(y)$ from its trend, vs. deviations of $y$ from its trend.)
In this case,

- we can fit $\log(y_t)$ to $1, t, \dots, t^p$ by OLS to estimate the $\beta$'s;
- we can select $p$ by minimizing the AIC and/or SIC across these regressions;
- the forecast of the log is

$$\widehat{\log(y)}_{T+h,T} = T_{T+h}(\hat\beta) + \hat\varepsilon_{T+h,T} = T_{T+h}(\hat\beta) \quad \text{if the } \varepsilon\text{'s are i.i.d.}$$

- and the forecast of the level is

$$\hat{y}_{T+h,T} = e^{\widehat{\log(y)}_{T+h,T}}$$

This approach has the advantage of relying on OLS vs. NLS, but although it produces an unbiased forecast of $\log(y_{T+h})$, it produces a biased forecast of $y_{T+h}$ [$E(f(x)) \ne f(E(x))$ if $f$ is nonlinear].

- There has been some work done on ways to adjust the forecast to reduce this bias.
- NLS is not particularly difficult or unreliable, especially in this setting.

We should also note that the AICs and SICs from the $\log(y)$ regressions cannot be meaningfully compared to the AICs and SICs from the $y$ regressions, so it is difficult to choose between the log linear models and the polynomial trend models based on in-sample fits.

Although the exponential trend model is a nonlinear model (in the $\beta$'s), its natural log is a linear model, and so we also call it a log linear trend model:

$$\log(T_t) = \beta_0 + \beta_1 t + \beta_2 t^2 + \dots + \beta_p t^p$$
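The OLS-on-logs approach described above can be sketched as follows. This is only an illustration, assuming NumPy, a linear trend in $\log(y_t)$ ($p = 1$), and a synthetic series with i.i.d. normal errors; the parameter values, sample size, and horizon are all made up. The last few lines illustrate why exponentiating an unbiased forecast of $\log(y)$ tends to understate $y$ on average.

```python
import numpy as np

rng = np.random.default_rng(1)
T, h = 80, 5                                   # sample size and horizon (assumed)
t = np.arange(1, T + 1, dtype=float)

# Synthetic data (an assumption): log(y_t) = 1.0 + 0.03 t + eps_t
y = np.exp(1.0 + 0.03 * t + rng.normal(scale=0.1, size=T))

# Fit log(y_t) on (1, t) by OLS.
X = np.column_stack([np.ones(T), t])
beta, *_ = np.linalg.lstsq(X, np.log(y), rcond=None)

# Forecast log(y_{T+h}); with i.i.d. errors the error forecast is zero.
log_forecast = beta[0] + beta[1] * (T + h)
y_forecast = np.exp(log_forecast)              # forecast of the level
print(f"slope={beta[1]:.4f}  forecast of y at T+h: {y_forecast:.2f}")

# Why the level forecast is biased: E[exp(eps)] > exp(E[eps]) for a convex f.
eps = rng.normal(scale=0.1, size=100_000)
mean_of_exp = np.mean(np.exp(eps))
exp_of_mean = np.exp(np.mean(eps))
print(f"mean of exp(eps)={mean_of_exp:.5f}  exp of mean(eps)={exp_of_mean:.5f}")
```

With errors this small the gap between the two averages is small, but it grows with the variance of the $\varepsilon$'s, which is why bias adjustments to the exponentiated forecast have been studied.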