handout6

advertisement

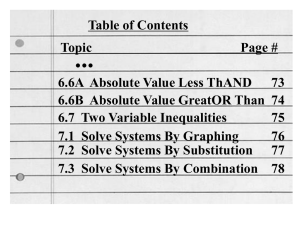

Section 6: Linear Programming This section is meant to be read in conjunction with chapter 6 of Helzer's book Applied Linear Algebra with APL, which has been distributed in Xeroxed form with the permission of the author. We will develop a MATLAB approach to linear programming problems, which will replace the APL code in the text. The Graphical Method Let us begin with a naïve approach. Consider the problem 21 in Section 6.1 of the handout from Helzer's text. Let the variables x and y denote respectively the numbers of the first and second types of chair that should be produced per day. There are three constraints, stemming from the availability of labor hours, fabric, and padding, and we want to maximize the profit. The corresponding functions are syms x y labor= 10*x+70*y fabric=2*x+3*y padding=20*x+10*y profit=2*x+5*y labor = 10*x+70*y fabric = 2*x+3*y padding = 20*x+10*y profit = 2*x+5*y The explicit constraints, then, are 10x+70y 490, 2x+3y 32, and 20x+10y 240. We can plot these constraints by finding the intercepts and plotting the straight lines accordingly. xil=double(solve(subs(labor,y,0)-490)) yil=double(solve(subs(labor,x,0)-490)) xif=double(solve(subs(fabric,y,0)-32)) yif=double(solve(subs(fabric,x,0)-32)) xip=double(solve(subs(padding,y,0)-240)) yip=double(solve(subs(padding,x,0)-240)) xil = 49 yil = 7 xif = 16 yif = 10.6667 xip = 12 yip = 24 plot([xil;0],[0;yil],'r') hold on plot([xif;0],[0;yif],'k') plot([xip;0],[0;yip],'b') axis([0,12,0,7]) hold off 7 6 5 4 3 2 1 0 0 2 4 6 8 10 12 The axis command makes the feasible region as large as possible in the plot. From the plot, we can see that the intersection of the red and blue lines is not in the feasible region, and therefore the maximum profit is attained at one of the two other intersections or at one of the intercepts at the corners of the plot [x1,y1]=solve(labor-490,fabric-32) [x2,y2]=solve(fabric-32,padding-240) x1 7 y1 6 x2 10 y2 4 = = = = subs(profit,[x,y],[x1,y1]) ans = 44 subs(profit,[x,y],[x2,y2]) ans = 40 subs(profit,[x,y],[xip,0]) ans = 24 subs(profit,[x,y],[0,yil]) ans = 35 From this, we see that the solution (x1,y1) maximizes the profit and that the appropriate plan is to manufacture seven chairs of type one and six of type two each day. Actually, we can rely on the graphics even more heavily by having MATLAB plot the straight lines that are contours of the profit function. plot([xil;0],[0;yil],'r') hold on plot([xif;0],[0;yif],'k') plot([xip;0],[0;yip],'b') genplot(profit,0:12,0:7,'contour',0:50,'g') axis([0,12,0,7]) hold off 7 6 5 4 3 2 1 0 0 2 4 6 8 10 12 It is now clear from the picture that the maximum of the profit will occur at the intersection of the red and black lines, corresponding to the labor and fabric constraints. This reproduces the solution we have already found, with even less computation. The method we have just exemplified only works if there are two variables, and few enough constraints so that the feasible region can be analyzed visually. The main purpose of a more sophisticated approach is to free us from these limitations. The Simplex Algorithm Let us now rework the chair problem, introducing auxilary variables and eschewing pictorial reasoning. We shall change the names of our variables from x and y to x1 and y1, and introduce "slack" variables, x3, x4, and x5. The purpose of this is to rewrite the constraining inequalities as equations with the slack variables (as well as the original variables) constrained to be non-negative. This gives us the following equations: labor: fabric: padding: 10x1+70x2+x3 = 490 2x1 + 3x2 +x4 = 32 20x1 +10x2 +x5 = 240 The profit function s has the form 2x1 + 5x2. The idea that underlies the simplex method is as follows: We have the three equations defined by labor, fabric and padding. Moreover, all five variables are constrained to be non-negative. If we set any two of the variables to zero, this turns two of the constraining inequalties to equations, called the "active constraints", and determines the other three variables, defining what is called a "vertex" of the system. A feasible vertex is one for which the three variables that are not zero are positive, and so satisfy the "passive" constraints. We can row reduce the system to express all the variables in terms of the ones that correspond to the active constraints. We may also rewrite the profit function in terms of these "active variables" . Then the constant term gives the profit function at the vertex in question, since the active variables take the value 0. We start at the vertex in which x1 and x2 have been set to zero, so that the original slack variables are the passive variables. This immediately gives x3=490, x4=32, and x5 = 240, so this vertex is feasible, and gives a profit of 0. Two vertices are adjacent if they share all but one of their active variables. In this case, there are only two active variables at a time. We can get an adjacent vertex by making either x1 or x2 passive, and another of the variables active. Since the profit increases faster with x2 than with x1, we will make x2 passive. The next question is which other variable to make active. In order not to make any variable negative, we must have x3=490-70x2, x4=32-3x2, and x5=240-10x2 all non-negative. Since these are all decreasing functions of x2, we must choose the smallest value of x2 that makes any of them 0, which means that we must increase x2 from 0 to the smallest of 490/70, 32/3, and 240/10. The smallest of these is 490/70=7, which will set x3 to 0, so our new active variables are x1 and x3. Now let us see how all of this can be done in terms of matrix manipulation. We begin with a matrix A whose rows correspond to the labor, fabric and padding equations. We will add an additional row at the bottom to correspond to the profit equation: y - 2x1-5x2=0. Here, y denotes the profit, and we can prefix a column corresponding to y whose only non-zero entry is a 1 in the last row. This gives us the matrix A=[0 0 0] 10 70 1 0 0 490;0 2 3 0 1 0 32; 0 20 10 0 0 1 240; 1 -2 -5 0 0 A = 0 0 0 1 10 2 20 -2 70 3 10 -5 1 0 0 0 0 1 0 0 0 0 1 0 490 32 240 0 We have chosen to make x2 inactive and x3 active. This means that we want to set x3 to zero, use the first equation to assign a value to x2, and eliminate x2 from all the other equations. This means that we pivot the matrix A on the entry in the first row and third column. We now obtain newA=pivot(A,1,3) newA = 0 0 0 1.0000 0.1429 1.5714 18.5714 -1.2857 1.0000 0 0 0 0.0143 -0.0429 -0.1429 0.0714 0 1.0000 0 0 0 0 1.0000 0 7.0000 11.0000 170.0000 35.0000 MATLAB does not appear to have a pivot function; the one used here can be downloaded with the other course m-files from the mfile subdirectory of the directory that contains these notes. We now have a matrix which corresponds to the vertex x1=x3=0. The profit function y takes the value 35 at this vertex, but it can still be increased by increasing x1. Thus we want to make the variable x1 passive, and the variable we choose to make active must be the first one that is driven to 0 as x1 increases from 0 and x3 remains 0. To see which variable that is, we look at the quotients of the first three entries in the last column by the first three entries in the x1- or second column. newA(1:3,7)./newA(1:3,2) ans = 49.0000 7.0000 9.1538 The smallest of these is the second, so we set x4 to be our new active variable, and pivot newA on the (2,2) position. newA=pivot(newA,2,2) newA = 0 0 0 1.0000 0 1.0000 0 0 1.0000 0 0 0 0.0182 -0.0273 0.3636 0.0364 -0.0909 0.6364 -11.8182 0.8182 0 0 1.0000 0 6.0000 7.0000 40.0000 44.0000 This gives us a profit of 44, which cannot be increased by increasing either active variable, so we are finished. Notice also that the first column will never change, and therefore can be omitted. Notice also that we can read off the values x1=7, x2=6, corresponding to producing seven chairs of type one and six of type two, reproducing the solution we found by the graphical method. This is the essence of the simplex algorithm. With more sophisticated programming, it can be completely automated, and for really large linear programming problems, such automation is necessary. We will restrict ourselves to the "semiautomatic" version we have just illustrated, which can work very nicely on problems that would be hopeless by the graphical method. An Interior Method The simplex method, illustrated above, considers only points on the boundary of the feasible region. This seems reasonable, since one knows that the extreme values of the objective function (the profit in the chair problem) will always correspond to vertices, which are on the boundary. By constrast, interior methods are methods in which points interior to the feasible region play a role. In recent years such methods have been gaining preference over the simplex method for a number of reasons. We will begin by illustrating an interior method, and then discuss some of the issues. We return once more to the chair problem. This time, we are going to build the constraints into the profit function by adding a term proportional to the sum of the logarithms of the slack variables, as expressed in terms of the original variables. The idea is that as we approach the boundary of the feasible region, at least one of the slack variables will approach zero, so that its logarithm will drive the profit negative. Thus there will be a maximum of the modified profit function in the interior of the feasible region. By making the constant of proportionality in the logarithmic term progressively smaller, we should force this maximum closer and closer to the true maximum of the profit function. Let us now see how this works. syms lambda prof=profit + lambda*(log(490-labor)+log(32-fabric)+log(240-padding)) prof = 2*x+5*y+lambda*(log(490-10*x-70*y)+log(32-2*x-3*y)+log(240-20*x-10*y)) Let us set lambda to .1 and look for critical points of prof. proff=subs(prof,lambda,.1) proff = 2*x+5*y+1/10*log(490-10*x-70*y)+1/10*log(32-2*x-3*y)+1/10*log(240-20*x10*y) gradproff=jacobian(proff,[x,y]) gradproff = [ 2-1/(490-10*x-70*y)-1/5/(32-2*x-3*y)-2/(240-20*x-10*y), 5-7/(49010*x-70*y)-3/10/(32-2*x-3*y)-1/(240-20*x-10*y)] [xc,yc]=solve(gradproff(1),gradproff(2));double([xc,yc]) ans = 6.9925 + 0.0000i 10.0895 + 0.0000i 9.0912 + 0.0000i 5.9627 + 0.0000i 3.9234 - 0.0000i 5.6784 - 0.0000i We see three critical points. We can determine which of them, if any, is in the critical region by evaluating proff. proffin=inline(vectorize(proff)) proffin = Inline function: proffin(x,y) = 2.*x+5.*y+1./10.*log(490-10.*x-70.*y)+1./10.*log(322.*x-3.*y)+1./10.*log(240-20.*x-10.*y) double(proffin(xc,yc)) ans = 44.0611 39.9744 - 0.3142i 46.7713 - 0.3142i This shows us that only the first critical point is in the feasible set. This is because outside of the feasible set, the argument to at least one of the log terms becomes negative, introducing an imaginary part to proff. Notice that the value of proff is slightly higher than the maximum of 44 we found for profit. Let us evaluate profit as well. What happens is that the logarithm of the unsaturated constraint is positive, making proff slightly larger than profit. profitin=inline(vectorize(profit)) profitin = Inline function: profitin(x,y) = 2.*x+5.*y double(profitin(xc,yc)) ans = 43.7987 - 0.0000i 39.7961 46.5744 We know, since we have already solved the problem, that we are very close to the maximum. Be aware that in a "real" problem there is no reason to expect the answers to be whole numbers, so that we may not use such a clue. However, there is another clue that we can use. constraints=[490-labor, 32-fabric,240-padding] constraints = [ 490-10*x-70*y, 32-2*x-3*y, 240-20*x-10*y] double(subs(constraints,[x,y],[xc(1),yc(1)])) ans = 2.6838 0.1267 + 0.0000i 40.5219 + 0.0000i This suggests strongly that we are headed for the vertex at which the first two constraints are saturated. We can get some confirmation of this by setting lambda even smaller. However, let us consider a slightly larger problem of the same nature. syms x y object=162*x + 150*y c1=126-6*x-8*y c2=155-9*x-8*y c3=135-10*x-7*y c4=132-9*x-7*y c5=84-7*x-2*y object = 162*x+150*y c1 = 126-6*x-8*y c2 = 155-9*x-8*y c3 = 135-10*x-7*y c4 = 132-9*x-7*y c5 = 84-7*x-2*y syms lam ob=object+lam*(log(c1)+log(c2)+log(c3)+log(c4)+log(c5)) ob = 162*x+150*y+lam*(log(126-6*x-8*y)+log(155-9*x-8*y)+log(135-10*x7*y)+log(132-9*x-7*y)+log(84-7*x-2*y)) obb=subs(ob,lam,.1) obb = 162*x+150*y+1/10*log(126-6*x-8*y)+1/10*log(155-9*x-8*y)+1/10*log(13510*x-7*y)+1/10*log(132-9*x-7*y)+1/10*log(84-7*x-2*y) gradobb=jacobian(obb,[x,y]); [xc,yc]=solve(gradobb(1),gradobb(2));double([xc,yc]) ans = -3.2043 -0.2774 3.0051 5.2104 5.8012 9.5399 9.5437 9.6811 10.4551 10.9677 22.9793 19.6865 14.9931 11.8409 11.3973 8.6420 8.5915 8.4830 5.4142 3.6170 Here, we see a difference from the simpler problem we considered before. The first two critical points can immediately be seen not to be in the feasible region because their x-coordinates are negative. Let us proceed further. obbin=inline(vectorize(obb)) obbin = Inline function: obbin(x,y) = 162.*x+150.*y+1./10.*log(126-6.*x8.*y)+1./10.*log(155-9.*x-8.*y)+1./10.*log(135-10.*x7.*y)+1./10.*log(132-9.*x-7.*y)+1./10.*log(84-7.*x-2.*y) double(obbin(xc,yc)) ans = 1.0e+003 * 2.9278 + 0.0006i 2.9080 + 0.0009i 2.7354 + 0.0006i 2.6200 2.6491 + 0.0003i 2.8414 + 0.0013i 2.8343 2.8405 2.5057 2.3192 + + + + 0.0006i 0.0009i 0.0006i 0.0003i Again, there seems to be only one critical point in the feasible region, at which the objective function takes the value 2620 (to five significant figures). A cautionary note here: It is essential to express the logaritmic term in the modified objective function as a sum of logarithms rather than (for instance to save typing) as the logarithm of a product. The reason for this is that we do not want imaginary parts of logarithms to cancel in the modified profit function. As we have it, the modified function will be real only in the feasible region; otherwise it will be real whenever an even number of the constraints are violated. Let us look at the constraint functions at the critical point we have found. double(subs([c1,c2,c3,c4,c5],[x,y],[xc(4),yc(4)])) ans = 0.0104 13.3792 0.0096 2.2200 23.8453 It certainly appears that we are headed for the point at which the first and third constraints are saturated. [xv,yv]=solve(c1,c3);double([xv,yv]) ans = 5.2105 11.8421 subs(object,[x,y],[xv,yv]) ans = 49788/19 Let us check our results by using the simplex method. A=[6 8 1 0 0 0 0 126;9 8 0 1 0 0 0 155;10 7 0 0 1 0 0 135;9 7 0 0 0 1 0 132;7 2 0 0 0 0 1 84;-162 -150 0 0 0 0 0 0] A = 6 9 10 9 7 -162 8 8 7 7 2 -150 1 0 0 0 0 0 0 1 0 0 0 0 0 0 1 0 0 0 0 0 0 1 0 0 0 0 0 0 1 0 126 155 135 132 84 0 In order to protect against errors, we make a working copy of A, that we can modify. AW=A; AW(1:5,8)./AW(1:5,1) ans = 21.0000 17.2222 13.5000 14.6667 12.0000 AW=pivot(AW,5,1) AW = 1.0e+003 * Columns 1 through 7 0 0.0063 0 0.0054 0 0.0041 0 0.0044 0.0010 0.0003 0 -0.1037 Column 8 0.0540 0.0470 0.0150 0.0240 0.0120 1.9440 0.0010 0 0 0 0 0 0 0.0010 0 0 0 0 0 0 0.0010 0 0 0 0 0 0 0.0010 0 0 -0.0009 -0.0013 -0.0014 -0.0013 0.0001 0.0231 0.0010 0 0 0 0 0 0 0.0010 0 0 0 0 -0.0015 -0.0013 0.0002 -0.0011 -0.0001 0.0250 0 0 0 0.0010 0 0 0.0013 0.0006 -0.0003 0.0002 0.0002 -0.0126 AW(1:5,8)./AW(1:5,2) ans = 8.5909 8.6579 3.6207 5.4194 42.0000 AW=pivot(AW,3,2) AW = 1.0e+003 * Columns 1 through 7 0 0 0 0 0 0.0010 0 0 0.0010 0 0 0 Column 8 0.0312 0.0273 0.0036 0.0080 0.0110 2.3195 AW(1:5,8)./AW(1:5,7) ans = 23.8421 46.6471 -10.5000 33.0000 45.4286 The negative value on the third row means that increasing the active variable will drive the corresponding passive variable larger rather than smaller, and can be ignored. AW=pivot(AW,1,7) AW = 1.0e+003 * Columns 1 through 7 0 0 0 0 0 0.0010 0 0 0.0010 0 0 0 Column 8 0.0238 0.0134 0.0118 0.0022 0.0052 2.6204 0.0008 -0.0004 0.0003 -0.0002 -0.0002 0.0096 0 0.0010 0 0 0 0 -0.0012 -0.0006 -0.0002 -0.0008 0.0002 0.0104 0 0 0 0.0010 0 0 0.0010 0 0 0.0000 0 -0.0000 This gives us a maximum for the objective function of 2620.4 at the point whose coordinates are roughly (5.2,11.8), which appears to corresponds fairly well to the values we found by the interior method. To get a better comparison, we can compare the coordinates of the maximum point directly. AW(5,8)-xv AW(3,8)-yv ans = 0 ans = 0 Some comments are in order here. The logarithmic barrier method we have just illustrated is not quite as fast as these examples have made it look. The limitation is in calculating critical points of the modified objective function. With k variables and n constraints this involves solving k n th degree polynomials in k variables, and the second example (involving two quintic polynomials in two variables) is fairly close to the limit of what MATLAB's solve facility can handle. The "industrial strength" versions of this method have to use numerical methods to find the critical point. This involves the Hessian matrix of the modified objective function, which tends to be ill-conditioned in the region of interest. Let us illustrate this point. hessobb=jacobian(gradobb,[x,y]); hesscrit=subs(hessobb,[x,y],[xc(4),yc(4)]); eig(hesscrit) ans = [ -2485757.6854625261864133536798877] [ -58461.8694850365439115762137461] ans(1)/ans(2) ans = 42.519298601950487470371071259939 Nonetheless these problems can be overcome and interior methods are not only the methods of choice for solving large linear programming problems but, since they are not framed in terms of matrix manipulations, and so do not depend on linearity as the simplex method does, they can be extended to nonlinear problems of the same general nature. We will explore this issue further in the next section of these notes. Problems 1) Solve problem 22 from Section 6.1 of Helzer's text, a) By the graphical method. b) By the Simplex method. c) By the logarithmic barrier method. 2) Solve problem 5 from Section 6.3 of Helzer's text by all three methods. Do not, for the present, be concerned with the dual problem. 3) Solve problem 13 from Section 6.3 of Helzer's text by the simplex method. (Finding the critical points in the logarithmic barrier method will crash MATLAB.) Ignore the dual problem for the present.